Software Development with AI: Tools, Patterns, and Real-World Workflows

Software development is the process of designing, coding, testing, and deploying applications. Also known as app development, it's no longer just about writing code; it's about working alongside AI tools that help you ship faster, avoid mistakes, and handle complexity without drowning in it. The old way (writing everything by hand, debugging for hours, and guessing how your app will behave under load) is fading. Now, developers use AI to generate code, manage APIs, and even enforce security rules before a single line runs in production.

Vibe coding, a workflow where AI assists in real time during development, often using tools like Cursor.sh or Wasp, is changing how teams build features. Instead of spending weeks on architecture diagrams, you focus on one small, end-to-end feature at a time. This is called a vertical slice: a complete, working piece of functionality from UI to database. This isn’t just faster; it’s smarter. You test real user flows early, catch bugs before they spread, and avoid over-engineering. And when you’re building SaaS apps, you can’t ignore multi-tenancy, the ability to serve multiple customers from the same codebase while keeping their data completely separate. Get this wrong, and you risk data leaks, billing chaos, or compliance fines.
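To make the multi-tenancy point concrete, here is a minimal sketch of one common approach: every query goes through a wrapper that is bound to a single tenant. The table name `notes`, the class `TenantScopedNotes`, and the tenant IDs are all hypothetical, and a real SaaS app would likely add database-level protections on top of this.

```python
import sqlite3


class TenantScopedNotes:
    """Hypothetical data-access wrapper bound to one tenant.

    Callers never pass a tenant ID per query, so they cannot
    accidentally read or write another customer's rows.
    """

    def __init__(self, conn: sqlite3.Connection, tenant_id: str) -> None:
        self._conn = conn
        self._tenant_id = tenant_id

    def add(self, body: str) -> None:
        # The tenant ID is always stamped on the row by the wrapper.
        self._conn.execute(
            "INSERT INTO notes (tenant_id, body) VALUES (?, ?)",
            (self._tenant_id, body),
        )

    def list_bodies(self) -> list:
        # Every read is filtered by the bound tenant ID.
        rows = self._conn.execute(
            "SELECT body FROM notes WHERE tenant_id = ? ORDER BY id",
            (self._tenant_id,),
        ).fetchall()
        return [row[0] for row in rows]


# One shared database and codebase, two isolated customers.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE notes ("
    "id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, body TEXT NOT NULL)"
)

acme = TenantScopedNotes(conn, "acme")
globex = TenantScopedNotes(conn, "globex")
acme.add("acme roadmap")
globex.add("globex invoice")

acme_notes = acme.list_bodies()
globex_notes = globex.list_bodies()
```

Both tenants share one table here, yet each wrapper only ever sees its own rows. Scoping at the data-access layer is just one option; separate schemas or separate databases per tenant trade more operational overhead for stronger isolation.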

Then there’s the problem of vendor lock-in. If you build your app to work only with OpenAI, what happens when prices change or the API goes down? That’s where LLM interoperability comes in: using tools like LiteLLM or LangChain to switch between AI providers without rewriting your code. It’s not a luxury; it’s a survival tactic. Teams that abstract their AI layer can swap models in minutes, test cheaper alternatives, and keep costs under control. And when you’re using AI to call external tools, like databases, payment systems, or calendars, you need function calling, a way to let LLMs trigger real actions instead of guessing or hallucinating answers. Without it, the model can only guess at what those systems contain, and your app may return confident, wrong answers.
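As a rough illustration of what abstracting the AI layer can look like, the sketch below hides providers behind one small interface and falls back to the next provider when one fails. It deliberately does not use the LiteLLM or LangChain APIs; `ChatProvider`, `StubProvider`, and `ProviderRouter` are hypothetical names, and the stubs stand in for real vendor SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol, Tuple


class ChatProvider(Protocol):
    """Anything that can turn a prompt into a completion string."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubProvider:
    """Hypothetical stand-in for a wrapper around a real vendor SDK."""

    name: str
    handler: Callable[[str], str]

    def complete(self, prompt: str) -> str:
        return self.handler(prompt)


class ProviderRouter:
    """Tries providers in order and falls back when one raises."""

    def __init__(self, providers: List[ChatProvider]) -> None:
        if not providers:
            raise ValueError("at least one provider is required")
        self._providers = providers

    def complete(self, prompt: str) -> Tuple[str, str]:
        errors = []
        for provider in self._providers:
            try:
                # Return which provider answered, plus its answer.
                return provider.name, provider.complete(prompt)
            except Exception as exc:  # a real router would catch narrower errors
                errors.append(f"{provider.name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))


def _simulated_outage(prompt: str) -> str:
    raise ConnectionError("simulated outage")


router = ProviderRouter(
    [
        StubProvider("primary", _simulated_outage),
        StubProvider("backup", lambda prompt: f"echo: {prompt}"),
    ]
)
used_provider, answer = router.complete("hello")
```

Application code only ever calls `router.complete`, so changing vendors means changing the list passed to `ProviderRouter` rather than touching every call site.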

None of this matters if your team can’t onboard new people. Vibe-coded codebases often have unwritten rules—patterns only the original builders know. That’s why successful teams create onboarding playbooks, living guides that walk new devs through the real workflow, not just the docs. It’s not about perfect documentation. It’s about capturing how things actually work. And when you measure success, you don’t count lines of code or bug tickets. You look at quality, speed, and whether the feature actually moved the business needle.

What you’ll find below isn’t theory. These are real, battle-tested approaches from developers who’ve been there—building AI-powered apps that work under pressure, stay secure, and actually get used. Whether you’re just starting with AI tools or trying to scale a team that’s already using them, the posts here give you the exact steps, pitfalls to avoid, and patterns that make the difference between chaos and control.

Quantization-Aware Training for LLMs: How to Keep Accuracy While Shrinking Model Size

Quantization-aware training lets you shrink large language models to 4-bit precision with minimal accuracy loss. Learn how it works, why it beats traditional methods, and how to use it in 2026.

Memory Planning to Avoid OOM in Large Language Model Inference

Learn how memory planning techniques like CAMELoT and Dynamic Memory Sparsification reduce OOM errors in LLM inference without sacrificing accuracy, enabling larger models to run on standard hardware.

Open Source in the Vibe Coding Era: How Community Models Are Shaping AI-Powered Development

Open-source AI models are reshaping software development through community-driven fine-tuning, offering customization and control that closed-source models can't match, especially in privacy-sensitive and legacy code environments.

Security Risks in LLM Agents: Injection, Escalation, and Isolation

LLM agents can act autonomously, making them powerful but vulnerable to prompt injection, privilege escalation, and isolation failures. Learn how these attacks work and how to protect your systems before it's too late.

Constrained Decoding for LLMs: How JSON, Regex, and Schema Control Improve Output Reliability

Learn how constrained decoding ensures LLMs generate output that is valid JSON or that matches a given regex or schema, without manual fixes. See when it helps, when it hurts, and how to use it right.

RAG Failure Modes: Diagnosing Retrieval Gaps That Mislead Large Language Models

RAG systems often appear to work but quietly fail due to retrieval gaps that mislead large language models. Learn the 10 hidden failure modes, from embedding drift to citation hallucination, and how to detect them before they cause real damage.

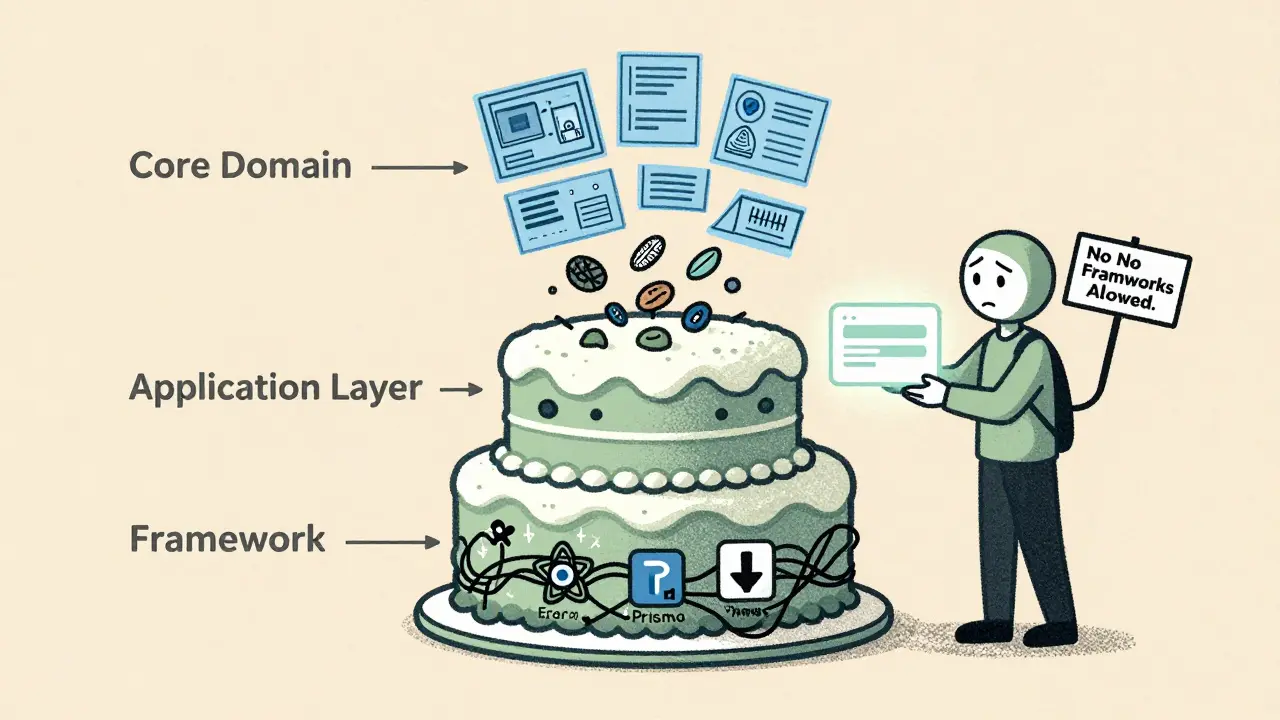

Clean Architecture in Vibe-Coded Projects: How to Keep Frameworks at the Edges

Clean Architecture keeps business logic separate from frameworks like React or Prisma. In vibe-coded projects, AI tools often mix the two, leading to unmaintainable code. Learn how to enforce boundaries early and avoid framework lock-in.

Productivity Uplift with Vibe Coding: What 74% of Developers Report

74% of developers report productivity gains with vibe coding, but real-world results vary wildly. Learn how AI coding tools actually impact speed, quality, and skill growth, and who benefits most.

Code Ownership Models for Vibe-Coded Repos: Avoiding Orphaned Modules

Vibe coding with AI tools like GitHub Copilot is speeding up development, but it is leaving behind orphaned modules that no one understands or owns. Learn the three proven ownership models that prevent production disasters and how to enforce them today.

Threat Modeling for Vibe-Coded Applications: A Practical Workshop Guide

Vibe coding speeds up development but introduces serious security risks. This guide shows how to run a lightweight threat modeling workshop to catch AI-generated flaws before they reach production.

Interoperability Patterns to Abstract Large Language Model Providers

Learn how to abstract large language model providers using proven interoperability patterns like LiteLLM and LangChain to avoid vendor lock-in, reduce costs, and maintain reliability across model changes.

Onboarding Developers to Vibe-Coded Codebases: Playbooks and Tours

Onboarding developers to vibe-coded codebases requires more than documentation: it needs guided tours and living playbooks that capture unwritten patterns. Learn how to turn cultural code habits into maintainable systems.
