Large language models (LLMs) are powerful, but they lie. Not because they’re malicious, but because they guess. When you ask them a factual question (What was the population of Toronto in 2023?), they don’t check. They predict. And sometimes, that prediction is wrong. That’s called hallucination. It’s the biggest problem holding back LLMs in real-world use: medical advice, legal research, customer support, even homework help. The fix isn’t just better training. It’s combining retrieval with smarter generation. That’s where Retrieval-Augmented Generation (RAG) and advanced decoding strategies come in.

What RAG Actually Does

RAG isn’t magic. It’s a three-step system. First, your question gets turned into a vector, a mathematical snapshot of what it means. Then, a retriever scans a live database (maybe Wikipedia, your company’s internal docs, or a curated knowledge base) and pulls back the top 3-5 most relevant passages. Finally, the LLM sees your original question plus those passages, and generates its answer based on both. It’s like giving the model a textbook during a test instead of letting it rely on memory alone.

This alone cuts hallucinations by 30-50% on fact-heavy tasks. But it’s still not perfect. Why? Because once the retriever grabs those passages, it locks them in. The model then generates word by word, ignoring whether the new text it’s producing still matches the evidence. That’s where decoding strategies step in.
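
The three steps described above (embed, retrieve, generate) can be sketched in a few lines. This is a toy illustration, not a production pipeline: `embed` is a bag-of-words stand-in for a real embedding model, `DOCS` is a made-up three-passage knowledge base, and the final LLM call is left out, so the sketch stops at building the prompt.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, passages, k=3):
    """Step 2: rank passages by similarity to the question and keep the top k."""
    q = embed(question)  # Step 1: turn the question into a vector
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:k]

def build_prompt(question, passages):
    """Step 3 (input side): the LLM sees the question plus the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Hypothetical knowledge base, for illustration only.
DOCS = [
    "Toronto is the most populous city in Canada.",
    "The Eiffel Tower is located in Paris.",
    "Canada has ten provinces and three territories.",
]

question = "Which city in Canada is the most populous?"
top = retrieve(question, DOCS, k=2)
prompt = build_prompt(question, top)
print(prompt)
```

The passage about Toronto ranks first because it shares the most words with the question. With a real embedding model, the same ranking step would also catch passages that use different wording.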

Decoding: How LLMs Choose Each Word

Every time an LLM writes a word, it picks from thousands of possibilities. In the simplest setup, it picks the one with the highest probability. That’s greedy decoding. It’s fast, but risky. Beam search tries a few top options at once, which helps, but it still doesn’t care about facts. What if, instead of just picking the most likely word, the model checked: Does this word match what the retrieved documents say?

That’s the core idea behind decoding strategies. Instead of letting the model run wild, we guide it. We don’t just feed it context; we make it listen to that context as it writes.
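
To make the contrast concrete, here is a minimal sketch of greedy decoding next to a decoding step that consults the retrieved text. The logits, the passage, and the flat `bonus` are all invented for illustration. The evidence bonus is a deliberately crude stand-in for the more careful methods described in the following sections, not an implementation of any of them.

```python
def greedy_pick(logits):
    """Greedy decoding: take the single highest-scoring token."""
    return max(logits, key=logits.get)

def evidence_guided_pick(logits, evidence_tokens, bonus=2.0):
    """Toy evidence check: boost tokens that appear in the retrieved passage.

    The flat bonus is an assumption made for this sketch.
    """
    adjusted = {
        tok: score + (bonus if tok in evidence_tokens else 0.0)
        for tok, score in logits.items()
    }
    return max(adjusted, key=adjusted.get)

# Hypothetical next-token scores after "The capital of Australia is".
logits = {"Sydney": 2.1, "Canberra": 1.8, "Melbourne": 0.4}

# Tokens from a hypothetical retrieved passage.
evidence = set("Canberra is the capital of Australia".split())

print(greedy_pick(logits))                     # picks the model's top guess
print(evidence_guided_pick(logits, evidence))  # picks the token backed by evidence
```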

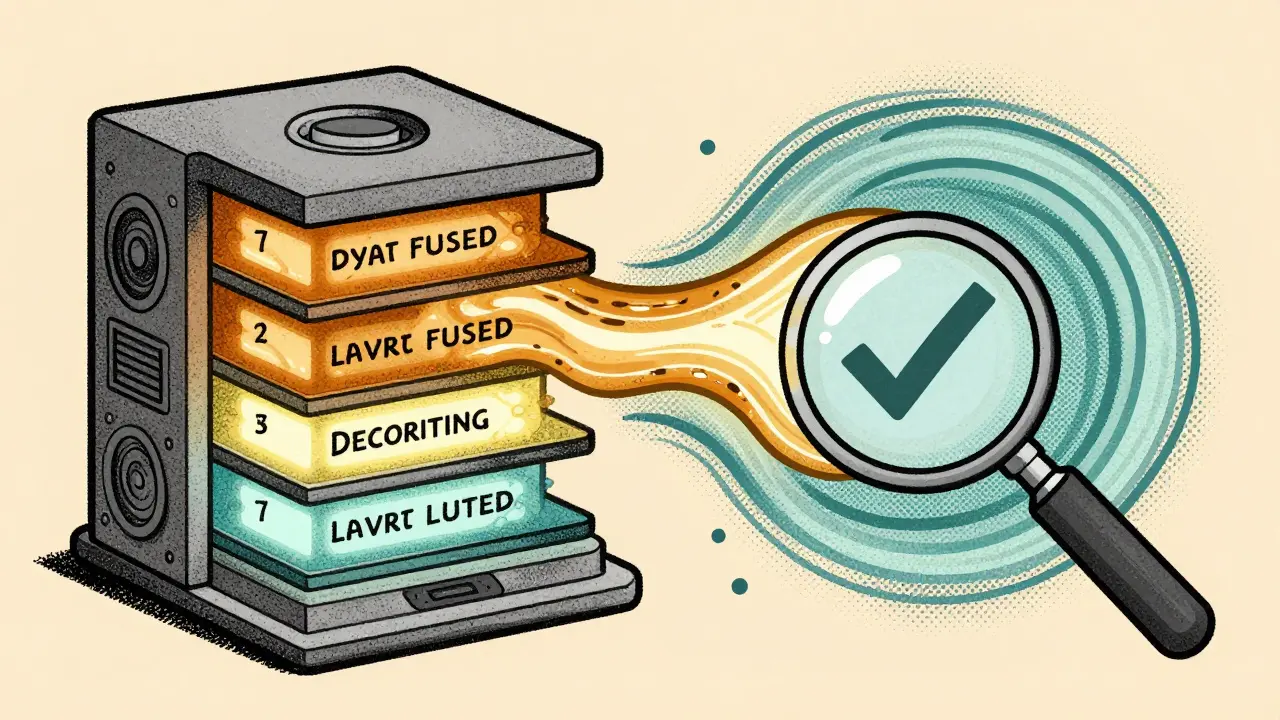

Layer Fused Decoding: Letting the Model Self-Correct

One of the most effective methods is Layer Fused Decoding (LFD). Transformer models have layers: stacks of processing units. Not all layers are equal. Some are better at holding onto facts. LFD finds the layer where the model’s internal representation shifts the most when it sees retrieved text. That’s the layer where factual grounding happens.

LFD takes the logits (raw scores for each possible next word) from that layer, blends them with the final output logits, and then re-normalizes the result. Think of it like a vote: the model’s own guess gets weighed against what the retrieved documents suggest. The system only accepts the blended result if confidence is high. This isn’t just adding a fact. It’s forcing the model to reconcile its intuition with evidence.
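
A rough sketch of the blend-and-renormalize step, under stated assumptions: the layer is chosen by the largest Euclidean shift between hidden states with and without retrieved text, the blend is a fixed linear mix controlled by `alpha`, and the confidence gate is a simple threshold on the top probability. These are simplifications chosen for illustration. The published method may define each of them differently, and all numbers below are made up.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def pick_layer(states_without, states_with):
    """Choose the layer whose hidden state moves most when retrieved text is added."""
    shifts = [math.dist(a, b) for a, b in zip(states_without, states_with)]
    return max(range(len(shifts)), key=shifts.__getitem__)

def fuse_layers(final_logits, layer_logits, alpha=0.5, min_confidence=0.5):
    """Blend intermediate-layer logits into the final logits, then re-normalize.

    Falls back to the unblended distribution when the blended one is not confident.
    """
    blended = [(1 - alpha) * f + alpha * l for f, l in zip(final_logits, layer_logits)]
    probs = softmax(blended)
    if max(probs) >= min_confidence:
        return probs
    return softmax(final_logits)

vocab = ["Sydney", "Canberra", "Melbourne"]

# Hypothetical per-layer hidden states (3 layers, 2 dimensions each).
without_ctx = [[0.1, 0.2], [0.3, 0.1], [0.5, 0.5]]
with_ctx = [[0.1, 0.25], [0.9, 0.7], [0.55, 0.5]]
layer = pick_layer(without_ctx, with_ctx)

# Hypothetical logits from the final layer and from the chosen layer.
final_logits = [2.0, 1.5, 0.2]
layer_logits = [0.5, 3.0, 0.1]

probs = fuse_layers(final_logits, layer_logits)
print(layer, vocab[probs.index(max(probs))])
```

Raising `min_confidence` makes the gate stricter: if the blended distribution never clears the threshold, the function returns the model’s original distribution unchanged.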

Studies show LFD reduces factual errors by up to 22% on multi-hop QA tasks, where the answer requires connecting two or more facts. It works best on models like Llama 3 and Mistral, where internal attention patterns are well understood.

Entropy-Based Decoding: Trusting Confidence Over Guesswork

Another smart trick is entropy-based decoding. Here, the model runs multiple forward passes, not just one. Each pass conditions on a different retrieved passage. Then, it looks at how certain each pass is. Low entropy means high confidence. High entropy means the model is unsure.

The system then weights each passage’s output by its negative entropy. If one passage leads to a confident answer, it gets more influence. If another leads to a fuzzy guess, it’s downplayed. The final answer is a weighted average of all these runs. This doesn’t just improve accuracy; it makes the model’s uncertainty visible. If all paths are uncertain, you know the question might be outside the knowledge base.
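
The weighting described above can be sketched as follows. One assumption to flag: "weights each passage’s output by its negative entropy" is read here as a softmax over negative entropies, which is one reasonable interpretation rather than a confirmed detail of the method. The two distributions are invented.

```python
import math

def entropy(probs):
    """Shannon entropy in nats. Lower means the distribution is more confident."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def entropy_weighted_mix(distributions):
    """Average per-passage next-token distributions, favoring low-entropy ones."""
    entropies = [entropy(d) for d in distributions]
    raw = [math.exp(-h) for h in entropies]  # softmax over negative entropy
    total = sum(raw)
    weights = [r / total for r in raw]
    vocab_size = len(distributions[0])
    mixed = [
        sum(w * d[i] for w, d in zip(weights, distributions))
        for i in range(vocab_size)
    ]
    return mixed, weights, entropies

# Hypothetical next-token distributions, one forward pass per retrieved passage.
passage_a = [0.90, 0.05, 0.05]  # confident
passage_b = [0.40, 0.35, 0.25]  # unsure

mixed, weights, entropies = entropy_weighted_mix([passage_a, passage_b])
print(weights)    # the confident passage gets the larger weight
print(entropies)  # if every entropy is high, the question may be out of scope
```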

This approach boosted accuracy on the TruthfulQA benchmark by 4.1%, outperforming standard RAG and even fine-tuned models. It’s especially useful for open-ended questions where there’s no single right answer, but there are definitely wrong ones.

Guided Decoding: Forcing Structure, Preventing Lies

Sometimes, the problem isn’t just what the model says. It’s how it says it. If you need a JSON response with fields like "date", "source", and "confidence", an LLM will often make up fields or format it wrong. Guided decoding solves this by locking the output into a strict format.

Tools like Outlines, XGrammar, and LM Format Enforcer let you define rules: Only output JSON. The "date" field must be ISO 8601. No extra keys. At each decoding step, the system filters out tokens that break the rule. No more malformed responses. No more hallucinated fields. This isn’t just about structure. It’s about trust. If you can’t parse the output, you can’t use it.
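
The token-filtering step can be illustrated without any of those libraries. The sketch below does not use the API of Outlines, XGrammar, or LM Format Enforcer. It is a hand-rolled toy in which `RULES` holds one hypothetical constraint per output position and `STEP_LOGITS` holds invented model scores. Real tools compile a schema or grammar into such constraints automatically.

```python
import json
import math
import re

ISO_DATE = re.compile(r'^"\d{4}-\d{2}-\d{2}"$')

# One rule per output position: together they force {"date": "<YYYY-MM-DD>"}.
RULES = [
    lambda tok: tok == "{",
    lambda tok: tok == '"date"',
    lambda tok: tok == ":",
    lambda tok: ISO_DATE.match(tok) is not None,
    lambda tok: tok == "}",
]

def constrained_pick(logits, rule):
    """Mask every token that breaks the rule, then pick the best survivor."""
    masked = {tok: (score if rule(tok) else -math.inf) for tok, score in logits.items()}
    best = max(masked, key=masked.get)
    if masked[best] == -math.inf:
        raise ValueError("no candidate token satisfies the constraint")
    return best

# Hypothetical model scores. At most steps the unconstrained favorite is invalid.
STEP_LOGITS = [
    {"{": 1.0, "Sure": 2.5},
    {'"date"': 1.2, '"when"': 1.9},
    {":": 3.0, "=": 0.1},
    {'"yesterday"': 2.2, '"2024-03-01"': 1.4},
    {"}": 0.5, ",": 1.1},
]

output = "".join(constrained_pick(l, r) for l, r in zip(STEP_LOGITS, RULES))
parsed = json.loads(output)  # parses cleanly because every step obeyed the rules
print(parsed)
```

Note what the mask does and does not guarantee: the output always parses and the date always has the right shape, but whether the date is the correct one still depends on the model and the retrieved context.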

When combined with RAG, guided decoding ensures that retrieved facts are presented in a usable, consistent way. In one test, a customer support bot using this combo reduced invalid responses by 89% compared to plain RAG.

Retrieval-Augmented Contextual Decoding: Learning from Truth

Here’s the most elegant trick: RCD (Retrieval-Augmented Contextual Decoding). Instead of retrieving documents, it retrieves truths. You give it 10 examples of correct answers paired with their context. The system learns a mapping: When the context says X, the next token should be Y.

At inference time, when you ask a new question, it retrieves the top-N most similar past truths. Then, it takes the logits (next-word scores) from those truths and averages them into the model’s own logits. It’s like asking: What did we do last time when we saw this?
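
A minimal sketch of the retrieve-and-average step, with loud caveats: the `memory` entries, the two-dimensional context vectors, the cosine similarity, and the fixed mixing `weight` are all assumptions made for illustration. The actual method may represent contexts and combine logits differently.

```python
import math

def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rcd_adjust(model_logits, query_vec, memory, top_n=2, weight=0.5):
    """Average the logits of the most similar stored truths into the model's logits."""
    ranked = sorted(memory, key=lambda item: cosine(query_vec, item[0]), reverse=True)
    nearest = ranked[:top_n]
    avg = [
        sum(item[1][i] for item in nearest) / len(nearest)
        for i in range(len(model_logits))
    ]
    return [(1 - weight) * m + weight * a for m, a in zip(model_logits, avg)]

# Hypothetical memory: (context vector, next-token logits seen for a correct answer).
memory = [
    ([1.0, 0.0], [0.0, 4.0, 0.0]),
    ([0.9, 0.1], [0.0, 3.0, 0.0]),
    ([0.0, 1.0], [5.0, 0.0, 0.0]),
]

model_logits = [2.0, 1.0, 0.5]  # the model alone prefers token 0
query_vec = [1.0, 0.05]         # close to the first two stored contexts

adjusted = rcd_adjust(model_logits, query_vec, memory)
print(adjusted.index(max(adjusted)))  # index of the token preferred after adjustment
```

Because the adjustment is a single blend of logits at each step, it fits into one generation pass, which matches the claim that no retraining is needed.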

This method needs no retraining. It works on any off-the-shelf LLM. On the WikiQA benchmark, it improved exact match scores by 2.4%. On biographies, it made the model less likely to invent dates or titles. The best part? It only takes one generation pass. No loops. No extra compute.

When to Use What

Not every strategy fits every task. Here’s how to choose:

- For single-hop questions (one fact needed): Use basic RAG + guided decoding. Simple, fast, reliable.

- For multi-hop reasoning (connecting facts): Layer Fused Decoding or iterative retrieval. These handle complexity better.

- For open-ended answers (opinions, summaries): Entropy-based decoding. It surfaces uncertainty.

- For structured outputs (JSON, forms, code): Guided decoding. Non-negotiable.

- For quick deployment (no training allowed): RCD. Works with any model, needs only 10 examples.

Most real systems use a mix. A customer service bot might use RCD for grounding, guided decoding for form fields, and entropy-based checks to flag low-confidence answers for human review.

What’s Still Missing

None of this is perfect. Retrieval still fails if the right document isn’t in the database. Decoding strategies can slow generation. Some methods require access to internal model layers, which isn’t possible with closed APIs. And no system can fix a completely wrong or missing source.

But we’re past the point of just hoping the model gets it right. We’re building systems that check, correct, and constrain. The future of accurate LLMs isn’t bigger models. It’s smarter interactions between retrieval and generation.

Does RAG eliminate all hallucinations?

No. RAG reduces hallucinations significantly (often by 30-50%), but it doesn’t remove them entirely. If the retrieved documents are outdated, incomplete, or contradictory, the model can still generate incorrect answers. Combining RAG with decoding strategies like Layer Fused Decoding or entropy-based weighting further cuts hallucinations by addressing generation-time errors that retrieval alone can’t fix.

Can I use RAG with any LLM?

Yes. RAG is a framework, not a model. You can plug it into Llama 3, Mistral, GPT-4, or even smaller open models. Basic RAG only needs a model that accepts the retrieved passages as part of its prompt. For advanced decoding strategies like Layer Fused Decoding or RCD, you need access to internal logits, which limits you to open models or self-hosted APIs. Cloud APIs like OpenAI’s don’t expose those layers, so you’re stuck with basic RAG and guided decoding.

Is RAG slower than regular LLMs?

It depends. Basic RAG adds one retrieval step, which usually takes 50-200ms. That’s negligible compared to generation time. But iterative methods like LoRAG or multi-step retrieval can double or triple latency. Entropy-based decoding runs multiple forward passes, which increases compute cost. For real-time apps, use single-step RAG with guided decoding. For batch processing or research, heavier methods are worth the cost.

What’s the difference between RAG and fine-tuning?

Fine-tuning changes the model’s weights to memorize new facts. RAG doesn’t change the model at all. It just gives it new information at inference time. That means RAG updates instantly: swap your knowledge base, and the model uses the new data. Fine-tuning needs retraining, which can take hours and cost thousands. RAG is better for dynamic data (news, product specs, internal docs). Fine-tuning works better for style, tone, or consistent formatting.

Do I need a vector database for RAG?

Not always. You can use keyword search (like Elasticsearch) for simple cases. But for accurate semantic retrieval, which means finding documents that mean the same thing even if they use different words, you need vector search, and a vector database is the usual way to get it. Tools like Pinecone, Weaviate, or Qdrant are standard. They store text as vectors produced by an embedding model and find the closest matches in milliseconds. Without semantic search, RAG’s retrieval step can miss relevant passages that are worded differently from the question.