Open-source large language models (LLMs) aren’t free just because they’re open. You can download them, run them on your own servers, and avoid paying per-token API fees, but if you skip the legal steps, you could be looking at a lawsuit, a cease-and-desist letter, or worse, a $2 million settlement. This isn’t theoretical. In 2024, a startup in Austin got hit with a $375,000 fine after using a model labeled "research-only" in their customer-facing app. They thought "open source" meant "free to use however you want." It didn’t.

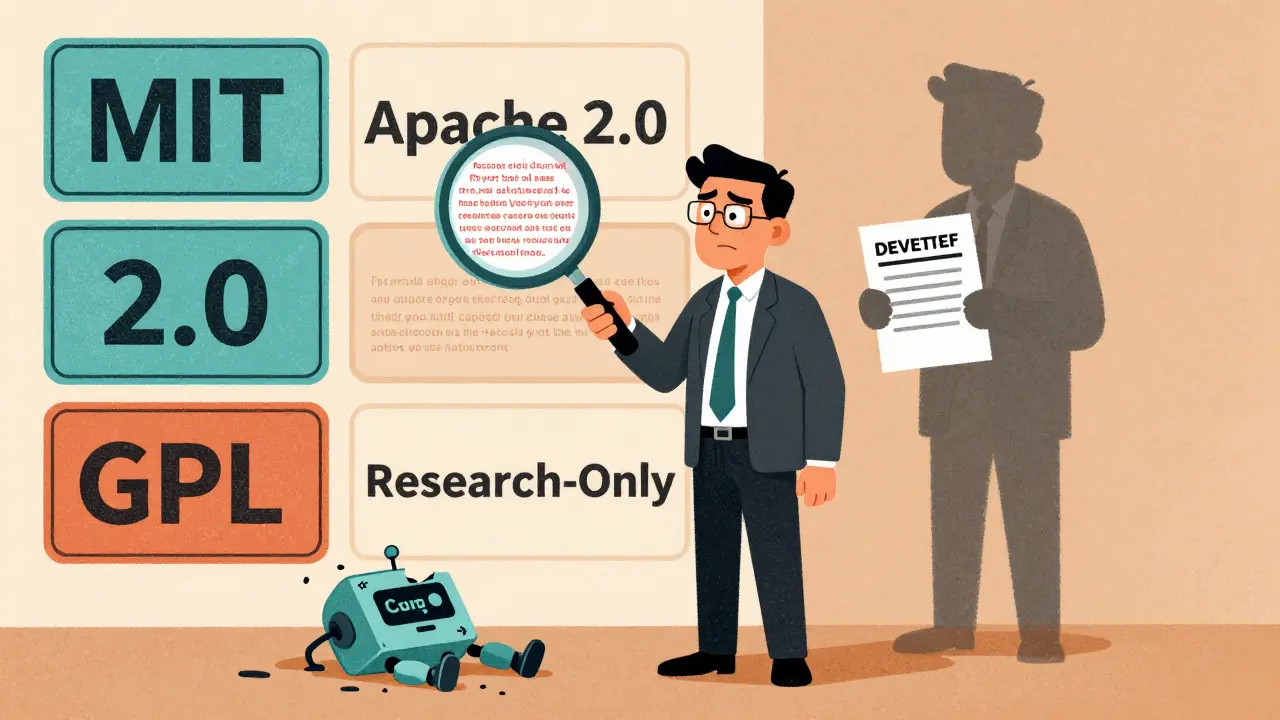

Not All Open-Source Licenses Are Created Equal

When you see an LLM on Hugging Face with a license labeled "MIT" or "Apache 2.0," it’s tempting to assume it’s safe to use in production. But that’s not always true. There are three main types of open-source licenses for LLMs, and they have wildly different impacts on your business.

Permissive licenses like MIT and Apache 2.0 are the safest for commercial use. MIT just asks you to include the original copyright notice and license text. Apache 2.0 adds an explicit patent grant: contributors license their relevant patents to you, and that grant terminates if you file a patent suit over the work. These two make up 62% of all LLM licenses on Hugging Face as of 2025. Companies using them report 92% success in commercial deployments, with compliance taking less than 5 hours per project.

Copyleft licenses, like GPL 3.0, are where things get dangerous. If you modify a GPL-licensed model and distribute it, you must release the resulting derivative work, source included, under the GPL. Offering it only as a hosted service generally does not count as distribution under GPL 3.0, but it does trigger the same obligation under AGPL 3.0. That’s a dealbreaker for most businesses. Only 8% of enterprises successfully deploy GPL-licensed LLMs commercially. Custom licenses carry their own traps: one company in Berlin fine-tuned Llama 2 (which uses a custom license) and mistakenly released the result under MIT. Meta sent a cease-and-desist within two weeks.

Weak copyleft licenses like LGPL and MPL are a middle ground. They only require you to open-source the parts you actually changed. That’s useful if you’re building a plugin or tool that wraps the model. But even then, 34% of companies still struggle with compliance. And if you’re embedding the model in a mobile app? You’re almost guaranteed to mess it up.
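To make the three families concrete, here is a minimal sketch that sorts license identifiers into them. The function name `classify_license` and the identifier spellings (lowercase SPDX-style tags such as `apache-2.0`) are assumptions for illustration, and the mapping covers only the licenses named above. Anything it doesn't recognize falls into a "custom or unknown" bucket that a human should review.

```python
from enum import Enum


class LicenseFamily(Enum):
    PERMISSIVE = "permissive"
    WEAK_COPYLEFT = "weak copyleft"
    STRONG_COPYLEFT = "strong copyleft"
    UNKNOWN = "custom or unknown"


def classify_license(license_id: str) -> LicenseFamily:
    """Map an SPDX-style identifier to one of the families discussed above.

    Only the licenses named in the article are covered; everything else
    is treated as custom/unknown and needs manual review.
    """
    key = license_id.strip().lower()
    if key in {"mit", "apache-2.0"}:
        return LicenseFamily.PERMISSIVE
    # Check LGPL/MPL before GPL so "lgpl-3.0" is not mistaken for "gpl-3.0".
    if key.startswith(("lgpl-", "mpl-")):
        return LicenseFamily.WEAK_COPYLEFT
    if key.startswith(("gpl-", "agpl-")):
        return LicenseFamily.STRONG_COPYLEFT
    return LicenseFamily.UNKNOWN


for tag in ("MIT", "apache-2.0", "lgpl-3.0", "gpl-3.0", "llama2"):
    print(f"{tag}: {classify_license(tag).value}")
```

The "unknown" bucket is the important one: a tag like `llama2` is not an error, it just means the terms have to be read by a person.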

What You Can’t Ignore: Training Data Licenses

Most people focus on the model’s license. That’s the easy part. The real trap is the training data.

Just because the model weights are under Apache 2.0 doesn’t mean the data it was trained on is. A 2025 audit by Knobbe Martens found that 68% of open-source LLMs have mismatched licenses between the model code and the training dataset. One popular model was trained on scraped books from Project Gutenberg (public domain) and copyrighted news articles from Reuters. The model license says "MIT," but the training data license says "all rights reserved."
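A mismatch like this is easy to surface once each component's license is written down separately. The sketch below is one way to do that; the function name `mismatched_components` and the example component names are hypothetical, and a plain string comparison is a deliberate simplification that only tells you where to look, not whether two different licenses are compatible.

```python
from typing import Dict, List, Optional


def mismatched_components(
    headline_license: str, components: Dict[str, Optional[str]]
) -> List[str]:
    """Return components whose license is missing or differs from the headline license."""
    expected = headline_license.strip().lower()
    flagged = []
    for name, license_text in components.items():
        if license_text is None or license_text.strip().lower() != expected:
            flagged.append(name)
    return flagged


# Hypothetical model: the card says MIT, but not every piece agrees.
model = {
    "weights": "MIT",
    "inference code": "MIT",
    "training data": "all rights reserved",
    "tokenizer": None,  # no license information found
}

print(mismatched_components("MIT", model))
# prints ['training data', 'tokenizer']
```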

This creates legal gray zones. The Authors Guild is suing OpenAI for training on copyrighted books. The U.S. Copyright Office says AI outputs can only be copyrighted if there’s "substantial human modification." But if your model was trained on protected material, and your output looks too similar to the original? You’re at risk. Red Hat found 17 cases in 2024 where GitHub Copilot inserted GPL-licensed code into MIT-licensed projects during training. You didn’t touch the code. The AI did. And now you’re liable.

Commercial Use Limits Are Everywhere

Meta’s original LLaMA release was limited to non-commercial research. The Llama 2 and Llama 3 community licenses allow commercial use, but only if your products had fewer than 700 million monthly active users when the model version was released; above that, you need a separate license from Meta. That threshold is more than the entire population of the European Union, so most companies clear it easily, but it shows how a custom license can carry conditions you won’t find in MIT or Apache 2.0.

Other companies use "research-only" or "non-commercial" clauses. These are often buried in fine print. A 2025 survey of 1,200 developers found that 63% couldn’t tell if a license allowed commercial use. One developer on Reddit said: "I used a model labeled 'free for research' because it was on Hugging Face. We scaled to 120,000 users. Then we got a lawyer’s letter. We had to shut down the product. Lost $800k in revenue."

Proprietary APIs like GPT-4 Turbo cost $0.01-$0.03 per 1,000 tokens. Open-source models carry no per-token fee: you pay for the servers instead. But if you mislicense them, your savings vanish. Legal fees for a single violation can hit $500,000-$5 million.
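The trade-off is simple arithmetic, and it helps to run it with your own numbers. The sketch below uses the per-token prices and the low-end legal figure quoted above; the monthly token volume and the hosting cost are made-up assumptions, not benchmarks.

```python
from typing import Optional


def monthly_api_cost(tokens_per_month: int, usd_per_1k_tokens: float) -> float:
    """Per-token API spend for one month."""
    return tokens_per_month / 1000 * usd_per_1k_tokens


def months_to_absorb(
    one_time_cost: float, monthly_api: float, monthly_hosting: float
) -> Optional[float]:
    """Months of self-hosting savings needed to cover a one-time cost.

    Returns None when self-hosting is not cheaper than the API at all.
    """
    saving = monthly_api - monthly_hosting
    if saving <= 0:
        return None
    return one_time_cost / saving


TOKENS_PER_MONTH = 2_000_000_000  # hypothetical workload
HOSTING_PER_MONTH = 15_000.0      # hypothetical server bill, USD
LEGAL_BILL = 500_000.0            # low end of the range quoted above, USD

for price in (0.01, 0.03):
    api = monthly_api_cost(TOKENS_PER_MONTH, price)
    months = months_to_absorb(LEGAL_BILL, api, HOSTING_PER_MONTH)
    print(f"${price:.2f} per 1k tokens: API ${api:,.0f}/month, "
          f"legal bill absorbed in {months:.1f} months")
```

Under these assumptions, a single low-end legal bill eats roughly 100 months of savings at the cheaper API price and about 11 months at the higher one.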

Compliance Isn’t Optional: It’s a Process

You can’t wing this. Here’s what actually works:

- Identify every license component: Model weights? Code? Training data? Each might have a different license.

- Check the license type: Is it MIT? Apache 2.0? GPL? Custom? Use the Open Source Initiative’s license list. Don’t guess.

- Document everything: Keep a spreadsheet. List the model name, license, source URL, and what you’re required to do (e.g., "Include LICENSE.txt in app bundle").

- Automate tracking: Tools like FOSSA and Mend.io scan your dependencies and flag license conflicts in your CI/CD pipeline. 61% of Fortune 500 companies now use them.

- Audit quarterly: One company found a GPL violation in their model stack after 14 months, because a new version of a dependency had changed licenses.

For permissive licenses, this takes 15-25 hours. For GPL or custom licenses? 80-120 hours. And if your legal team hasn’t been trained on AI licensing? They won’t know what to look for. Red Hat’s 2025 workshop showed that legal teams need 40+ hours of specialized training to handle LLM licenses correctly.
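The "document everything" and "audit quarterly" steps can live in the same file. Here is a minimal sketch of that spreadsheet as a CSV, plus a check for entries whose last audit is more than a quarter old. The column names, the 90-day window, and the example URL are assumptions; adapt them to whatever your legal team actually tracks.

```python
import csv
import io
from datetime import date, timedelta

FIELDS = ["model", "component", "license", "source_url", "obligation", "last_audit"]


def write_inventory(rows, out) -> None:
    """Write the license inventory as CSV to any text file-like object."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def overdue_audits(rows, today: date, max_age_days: int = 90):
    """Return (model, component) pairs last audited more than max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        (row["model"], row["component"])
        for row in rows
        if date.fromisoformat(row["last_audit"]) < cutoff
    ]


rows = [
    {
        "model": "Mistral 7B",
        "component": "weights",
        "license": "apache-2.0",
        "source_url": "https://example.com/mistral-7b",  # placeholder URL
        "obligation": "Include LICENSE.txt in app bundle",
        "last_audit": "2025-01-15",
    },
    {
        "model": "Mistral 7B",
        "component": "fine-tuning data",
        "license": "unknown",
        "source_url": "https://example.com/dataset",  # placeholder URL
        "obligation": "Confirm commercial terms before release",
        "last_audit": "2025-06-01",
    },
]

buffer = io.StringIO()
write_inventory(rows, buffer)
print(buffer.getvalue())
print(overdue_audits(rows, today=date(2025, 7, 1)))
```

Keeping the audit date next to the obligation means a changed dependency license shows up at the next quarterly pass instead of 14 months later.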

What Happens When You Get It Wrong

The penalties aren’t just financial. They’re reputational.

GitHub’s 2025 State of the Octoverse report found that 41% of LLM license violations came from improper attribution in mobile apps. Developers embed the model, forget to include the license file, and ship it. No one notices until a lawyer from the original team finds it.
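A forgotten license file is the kind of mistake a build step can catch. The sketch below walks a bundle directory and lists any license or notice files it finds; an empty result is the warning sign. The file names it looks for and the directory layout in the demo are assumptions, and finding a file says nothing about whether it is the right license for the model you shipped.

```python
import tempfile
from pathlib import Path
from typing import List

# File names treated as license/notice files (an assumed, non-exhaustive list).
LICENSE_NAMES = {"LICENSE", "LICENSE.TXT", "LICENSE.MD", "NOTICE", "NOTICE.TXT"}


def find_license_files(bundle_dir: Path) -> List[Path]:
    """Return every license or notice file found anywhere under bundle_dir."""
    return sorted(
        path
        for path in bundle_dir.rglob("*")
        if path.is_file() and path.name.upper() in LICENSE_NAMES
    )


# Demo on a throwaway directory standing in for an app bundle.
with tempfile.TemporaryDirectory() as tmp:
    bundle = Path(tmp)
    (bundle / "assets" / "model").mkdir(parents=True)
    (bundle / "assets" / "model" / "weights.bin").write_bytes(b"\x00")
    print("before:", [p.name for p in find_license_files(bundle)])

    (bundle / "assets" / "model" / "LICENSE.txt").write_text("Apache License 2.0")
    print("after:", [p.name for p in find_license_files(bundle)])
```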

One company in Chicago built a customer service bot using Mistral 7B (Apache 2.0). They followed the rules, until they added a new fine-tuned layer. They didn’t realize the fine-tuning used a dataset with a non-commercial license. Their app was pulled from the App Store. Their enterprise clients canceled contracts. They lost $1.4 million in revenue in six weeks.

On the flip side, companies that get it right save millions. A SaaS startup in Denver switched from GPT-4 API to Mistral 7B under Apache 2.0. They saved $1.2 million in API fees last year. Their compliance overhead? Less than $15,000 in legal review time.

What’s Changing in 2026

The EU AI Act, effective August 2026, will require companies to document training data sources and licenses for high-risk AI systems. The U.S. Copyright Office is pushing for clearer rules on AI outputs. The Linux Foundation is working on a new "OpenLLM License" designed specifically for large language models, expected in 2026.

Right now, 78% of enterprise AI deployments use open-source LLMs. But 83% of those use permissive licenses. Why? Because the alternatives are too risky. The market is moving toward standardization. Hugging Face now includes standardized license metadata in 89% of model repos. That’s progress.
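On Hugging Face, that metadata sits in the YAML front matter at the top of a model card's README.md, under a `license` key. The sketch below pulls that one value out using only the standard library. It is a simplified reader that assumes a single-line, top-level `license:` entry; a real pipeline would use a proper YAML parser, and the tag is only a pointer to the actual license text, not a substitute for reading it.

```python
from typing import Optional


def read_license_tag(model_card_text: str) -> Optional[str]:
    """Return the top-level `license` value from a model card's front matter.

    Returns None when there is no front matter or no license key.
    """
    lines = model_card_text.splitlines()
    if not lines or lines[0].strip() != "---":
        return None  # no front matter block at the top of the file
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of front matter
        key, sep, value = line.partition(":")
        # Exact match on an unindented key, so nested keys and
        # keys like `license_name` are ignored.
        if sep and key == "license":
            return value.strip().strip("'\"") or None
    return None


# A hypothetical model card, for illustration only.
card = """---
language: en
license: apache-2.0
tags:
  - text-generation
---
# Example model
"""

print(read_license_tag(card))
# prints apache-2.0
```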

But fragmentation is still a problem. In 2023, 12% of LLMs had custom licenses. Now it’s 34%. That means every new model you adopt could come with a new set of legal traps.

What to Do Right Now

If you’re using an open-source LLM in production:

- Stop. Audit your stack. Where did the model come from? What’s its license? What was it trained on?

- If you’re unsure, switch to Apache 2.0 or MIT models. They’re the only ones with clear, safe commercial terms.

- Don’t assume "open source" means "free to use." Always check the license file.

- Use automated tools. Don’t rely on your dev team to remember license rules.

- Train your legal team. If they’ve never heard of copyleft in the context of AI, they’re not equipped.
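If you don't have a commercial scanner yet, even a tiny allowlist check in CI beats relying on memory. The sketch below is a stand-in for that idea, not a replacement for tools like FOSSA or Mend.io: the allowlist, the inventory format, and the function names are all assumptions. It returns a non-zero status when anything in the stack falls outside the allowlist, which is what a CI job needs in order to fail the build.

```python
import sys
from typing import Dict, List

# Licenses the team has already cleared for commercial use (assumed policy).
ALLOWED = {"mit", "apache-2.0"}


def license_violations(inventory: Dict[str, str]) -> List[str]:
    """Return the names of components whose license is not on the allowlist."""
    return sorted(
        name
        for name, license_id in inventory.items()
        if license_id.strip().lower() not in ALLOWED
    )


def run_gate(inventory: Dict[str, str]) -> int:
    """Report violations on stderr and return a process exit status."""
    violations = license_violations(inventory)
    for name in violations:
        print(f"license not on allowlist: {name} ({inventory[name]})", file=sys.stderr)
    return 1 if violations else 0


# Hypothetical stack; in CI you would load this from your inventory file
# and pass the result to sys.exit().
stack = {
    "base model": "apache-2.0",
    "fine-tuning dataset": "cc-by-nc-4.0",
    "tokenizer": "MIT",
}

status = run_gate(stack)
print("exit status:", status)
```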

Open-source LLMs are powerful. They cut costs. They give you control. But they’re not a legal free-for-all. The difference between saving money and losing everything comes down to one thing: doing the paperwork.

Can I use an open-source LLM for commercial purposes?

Yes, but only if the license allows it. Permissive licenses like MIT and Apache 2.0 do. Copyleft licenses like GPL technically allow commercial use too, but distributing a modified version obliges you to release your derivative work under the same license, which most businesses won’t accept. Some models, like Meta’s Llama 3, allow commercial use only if your app has fewer than 700 million monthly active users. Always read the license file before deployment.

What’s the difference between MIT and Apache 2.0 licenses?

MIT only requires you to include the original copyright notice and license text. Apache 2.0 does that too, but it also includes an explicit patent grant, which protects you from patent claims by contributors over their contributions. For most businesses, Apache 2.0 is the safer choice because it reduces patent risk.

Is it safe to use models trained on copyrighted data?

It’s legally risky. Some experts argue that training on copyrighted material qualifies as fair use, but the case law is still unsettled. The Authors Guild is suing OpenAI over this. If your model’s output closely resembles copyrighted content, you could be liable. Always check the training data license, not just the model license.

What happens if I accidentally violate an open-source license?

You’ll likely get a cease-and-desist letter. If you don’t comply, the original maintainers can sue. Settlements range from $100,000 to over $5 million. In one case, a startup paid $375,000 after using a "research-only" model in production. The damage isn’t just financial: it can kill customer trust and partnerships.

Do I need to attribute the model in my app’s UI?

It depends. MIT and Apache 2.0 don’t require attribution in the UI, but you must include license texts in your app’s documentation or settings. Many companies choose to display a "Powered by Mistral 7B" link as a best practice. But legally, you only need to distribute the license file with your software.

Are there tools to help with LLM license compliance?

Yes. Tools like FOSSA, Mend.io, and ScanCode automate license detection in dependencies. They scan your code, model files, and even training data references. 61% of Fortune 500 companies use them. They won’t catch everything, but they reduce errors by 70% compared to manual checks.

Can I fine-tune a GPL-licensed LLM and sell it as a service?

Not safely. If you fine-tune a GPL-licensed model and distribute it, you’re distributing a derivative work, and the GPL requires you to release that work’s source under the same license. That can reach your fine-tuning scripts, inference pipeline, and any proprietary logic combined with the model. Running the model purely as a hosted service is generally not distribution under GPL 3.0, but AGPL 3.0 closes that gap. Most companies can’t take that risk. Stick to permissive licenses if you plan to monetize.

What’s the biggest mistake companies make with open-source LLMs?

Assuming "open source" means "free to use commercially." Many licenses restrict commercial use, require attribution, or demand source code disclosure. The most common error? Not checking the license at all. Another is ignoring training data licenses. Both can lead to costly legal trouble.

Ian Cassidy

19 December, 2025 - 02:41 AM

Man, I saw this coming. Open source doesn’t mean free beer, it means free speech. You can use it, but you gotta play by the rules. Saw a guy on Twitter get sued for using a research-only model in a customer chatbot. Lost his startup. Just because it’s on Hugging Face doesn’t mean it’s yours to monetize.

Don’t be that guy.

Peter Reynolds

20 December, 2025 - 14:48 PM

Been there. We used Llama 2 for internal tools, thought we were fine. Turned out the fine-tuning data had some non-commercial bits buried in it. Legal came down hard. Took us three months to scrub everything and switch to Mistral under Apache 2.0. Worth it. Now we have a license checklist in our CI. No more surprises.

Just read the damn license file. It’s not that hard.

Kenny Stockman

21 December, 2025 - 21:50 PM

Biggest myth: open source = no cost. Nah. The real cost is compliance. I’ve seen teams save $500k on API fees, then blow $300k on legal bills because someone skipped the license check. Use FOSSA. Set up a monthly audit. Treat it like security patches. You wouldn’t ignore a vulnerability, don’t ignore a license one.

Paritosh Bhagat

23 December, 2025 - 02:17 AM

People think they’re being clever by ignoring licenses because "everyone else does it." But you know what? The people who get sued aren’t the ones who broke the rules-they’re the ones who thought they could get away with it. I’ve reviewed 12 open-source AI projects in the last year. Eight had training data violations. Eight. And no one even knew. Shameful. You don’t get to steal someone’s work because you’re "just building an app."

Stop being lazy. Read the license. Document it. Or don’t use it at all.

Zach Beggs

24 December, 2025 - 13:10 PM

Just switched our whole stack to Apache 2.0 models. No more headaches. We used to have 3 different licenses floating around-MIT, custom, GPL. Now everything’s clean. Legal team actually smiled for once. Automation tools saved us 40 hours a month. If you’re scaling, just stick to Apache 2.0. It’s the only license that doesn’t make you want to drink before Monday meetings.

Antonio Hunter

24 December, 2025 - 18:18 PM

It’s not just about the model license-it’s about the training data, the embeddings, the tokenizers, the prompt templates, even the fine-tuning datasets. Each layer has its own provenance. One team I consulted with used a model licensed under MIT, but their training data included scraped Reddit threads from users who never agreed to commercial reuse. That’s a copyright minefield. The law doesn’t care if you didn’t know. You’re still liable. The only safe path is to assume every piece of data has a license, and trace every one. It’s tedious, but it’s the only way to sleep at night.

Sam Rittenhouse

25 December, 2025 - 15:16 PM

I remember when we got the cease-and-desist. We were a team of four. No legal department. We thought "open source" meant "use it however you want." We built a customer-facing assistant using a model labeled "free for research." Got a letter from a law firm that looked like it came from a movie. We shut down. Lost everything. The worst part? We didn’t even make money off it. We just wanted to help small businesses. Turns out, good intentions don’t protect you from copyright law.

Don’t be us. Read the license. Before you deploy. Before you even download.

Ben De Keersmaecker

27 December, 2025 - 09:45 AM

One thing nobody talks about: attribution in mobile apps. You embed a model, ship it, forget to include the LICENSE.txt. Easy mistake. But it’s still a violation. We had a client whose app got pulled from the App Store because the attribution file was missing. Took two weeks to fix. Apple doesn’t care if you didn’t mean to break the rules. They just care if you broke them. Always bundle the license. Always. Even if it’s just a tiny text file. It’s not optional. It’s not a suggestion. It’s the law.