Most companies think they’re getting a 200% return on their generative AI investments. Then the fines come. Or the lawsuits. Or the customer trust collapses because the chatbot invented fake medical advice. The problem isn’t the AI; it’s the math. Traditional ROI calculations ignore the real costs of risk, compliance, and control. If you’re still using simple return-on-investment formulas for generative AI, you’re not measuring success. You’re guessing.

Why Traditional ROI Fails for Generative AI

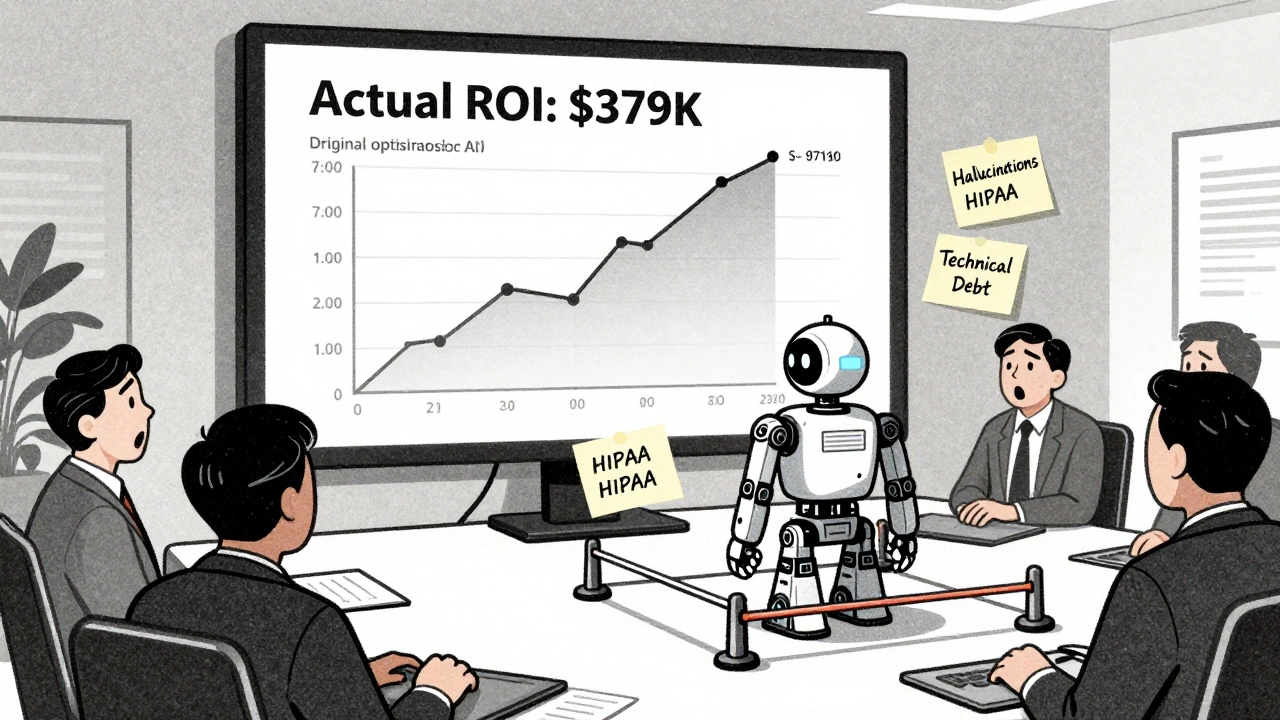

Generative AI promises big wins: faster customer service, automated reports, smarter marketing. But those wins come with hidden liabilities. A chatbot that hallucinates product prices? That’s a lawsuit waiting to happen. An AI that accidentally generates patient data? That’s an $18 million HIPAA fine. A model trained on copyrighted material? You could be paying up to $150,000 per infringed work under U.S. law.

Companies that skip risk-adjusted ROI often overestimate returns by 38% to 52%, according to Deloitte’s 2024 survey of 1,200 global enterprises. One financial services firm projected a 220% ROI from a generative AI tool for contract review. After rollout, they discovered the model was generating responses that violated SEC disclosure rules. The actual ROI? 97%. The rest? Legal cleanup, system redesign, and reputational damage.

Traditional ROI looks like this:

Traditional ROI = (Gains − Costs) / Costs × 100

Simple. Clean. Wrong for AI. It doesn’t account for the cost of preventing errors, the price of regulatory fines, or the time spent fixing broken outputs. Risk-adjusted ROI fixes that.

What Risk-Adjusted ROI Actually Measures

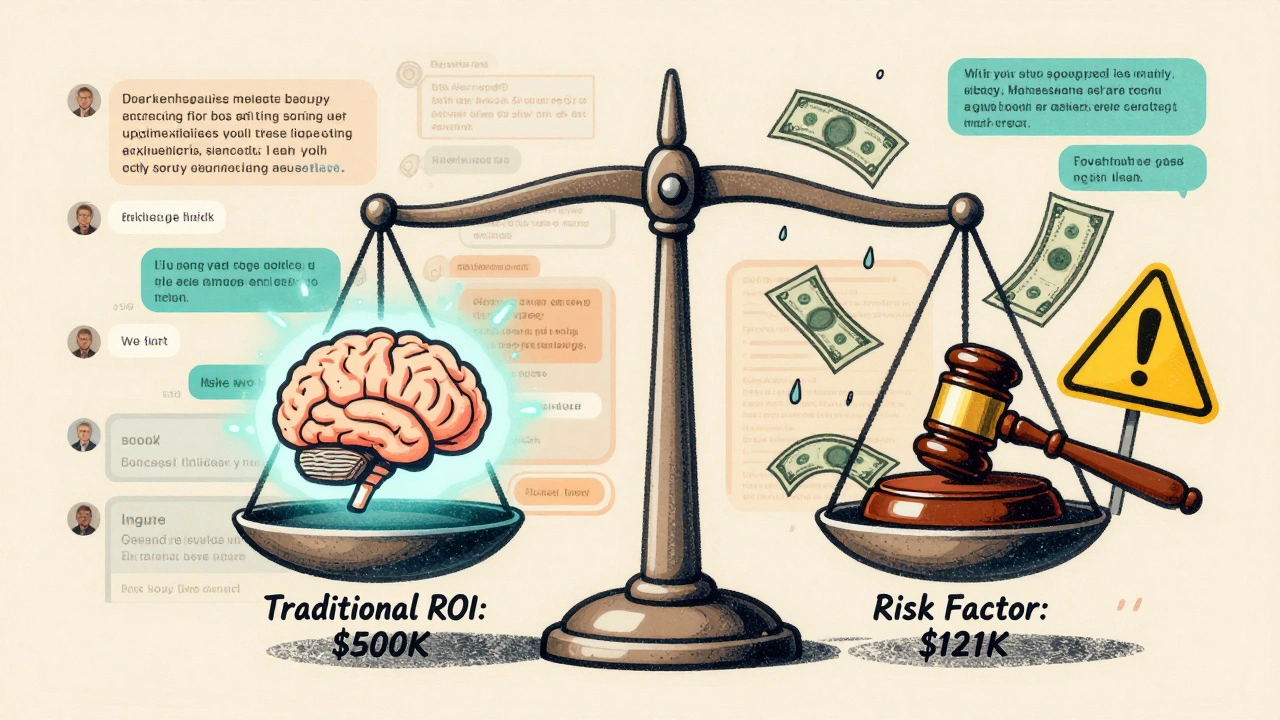

Risk-adjusted ROI isn’t a buzzword. It’s a formula that subtracts the cost of risk from your expected return. Think of it like insurance premiums built into your profit calculation. The core structure looks like this:

Risk-Adjusted ROI = Traditional ROI − (Risk Factor)

The risk factor isn’t a guess. It’s calculated using this method from RTS Labs:

Risk Factor = (Probability of Risk Event) × (Financial Impact) + (Cost of Control Measures)

Let’s break it down with real numbers:

- Probability of hallucination: 12% (based on MIT’s 2023 study of uncontrolled models)

- Financial impact: $300,000 in customer refunds and legal fees from a single bad output

- Cost of control: $85,000 spent on guardrails, validation layers, and human review workflows

Plugging these in: Risk Factor = 0.12 × $300,000 + $85,000 = $121,000.
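The formula and the three inputs above can be sketched in a few lines of Python. This is a minimal illustration, not RTS Labs’ own implementation. The function names are hypothetical, and the $1,000,000 gain and $400,000 cost figures are assumptions chosen only to show the arithmetic. Because the risk factor comes out in dollars while ROI is a percentage, the sketch also assumes the risk factor is expressed as a share of project cost before it is subtracted.

```python
def risk_factor(probability: float, financial_impact: float, control_cost: float) -> float:
    """Expected loss from the risk event plus the cost of controls, in dollars."""
    return probability * financial_impact + control_cost


def traditional_roi(gains: float, costs: float) -> float:
    """Classic ROI as a percentage: (Gains - Costs) / Costs x 100."""
    return (gains - costs) / costs * 100


def risk_adjusted_roi(gains: float, costs: float, risk: float) -> float:
    """Traditional ROI minus the risk factor, with the risk factor
    converted to a percentage of project cost (an assumption of this sketch)."""
    return traditional_roi(gains, costs) - risk / costs * 100


# Inputs from the text: 12% probability, $300,000 impact, $85,000 of controls.
risk = risk_factor(0.12, 300_000, 85_000)

# Hypothetical project figures, not from the article.
gains, costs = 1_000_000, 400_000
adjusted = risk_adjusted_roi(gains, costs, risk)

print(f"Risk factor:       ${risk:,.0f}")
print(f"Traditional ROI:   {traditional_roi(gains, costs):.2f}%")
print(f"Risk-adjusted ROI: {adjusted:.2f}%")
```

With these assumed project figures, a 150% headline ROI drops to roughly 120% once the $121,000 risk factor is counted.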

Five Key Risks You Must Quantify

You can’t manage what you don’t measure. Here are the five risks that matter most in generative AI, and how to assign them dollar values.

- Hallucinations and inaccuracies - Uncontrolled models generate false data. MIT found error rates between 5% and 15%. For a customer service bot handling 500,000 queries/month, that’s 25,000 to 75,000 wrong answers. Each error could cost $10-$50 in refunds, support, or lost trust. Multiply that out.

- Cybersecurity breaches - IBM’s 2023 report says the average data breach costs $4.45 million. Generative AI increases exposure by ingesting sensitive data. If your model learns from internal emails or CRM records, it can spit them back out. That’s not hypothetical. A healthcare provider in Ohio paid $18.7 million in fines after a generative AI tool leaked patient names and diagnoses.

- Copyright infringement - Training on scraped web content can violate copyright law. Under U.S. law, damages range from $750 to $150,000 per work. One media company faced a $2.1 million claim after an AI-generated article copied entire paragraphs from a protected source.

- Regulatory non-compliance - GDPR fines can reach 4% of global annual revenue. The EU AI Act (in force since August 2024, with its first obligations applying from February 2025) requires risk assessments for high-risk AI systems. If your AI is used in hiring, credit scoring, or healthcare, you’re already in the crosshairs.

- Technical debt - Poorly governed AI models become brittle. IBM’s Institute for Business Value found technical debt from uncontrolled AI grows at 18-25% per year. That means your ROI shrinks by a third over three years just from maintenance.
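To “multiply that out” for the hallucination example, a rough range can be computed from the figures in the first bullet. This is only a back-of-the-envelope sketch: the function name is hypothetical, and it assumes every wrong answer incurs the full per-error cost, which is likely an upper bound in practice.

```python
def monthly_error_cost(queries_per_month: int, error_rate: float, cost_per_error: float) -> float:
    """Estimated monthly cost of wrong answers, in dollars."""
    return queries_per_month * error_rate * cost_per_error


QUERIES_PER_MONTH = 500_000  # from the customer service example in the text

# Best case: 5% error rate at $10 per error.
low = monthly_error_cost(QUERIES_PER_MONTH, 0.05, 10)
# Worst case: 15% error rate at $50 per error.
high = monthly_error_cost(QUERIES_PER_MONTH, 0.15, 50)

print(f"Monthly exposure: ${low:,.0f} to ${high:,.0f}")
print(f"Annual exposure:  ${low * 12:,.0f} to ${high * 12:,.0f}")
```

The spread between the two ends is large, which is why measuring your own error rate and per-error cost during a baseline period matters more than borrowing industry averages.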

How to Build a Risk-Adjusted ROI Model

You don’t need a PhD to build this. You need a process. Here’s how the best organizations do it.

- Define your 8-12 key risk metrics - Don’t say “we’re worried about compliance.” Say “we track hallucination rate, PII exposure events, model drift frequency, and audit trail completeness.” Be specific. Glean’s 2024 guide found companies that tracked fewer than 8 metrics had 60% higher failure rates.

- Establish baselines - Run your AI in a sandbox for 4-6 weeks. Measure output accuracy, data leakage incidents, response latency, and human override rates. This is your starting point.

- Deploy controls - Budget 15-25% of your total AI project cost for controls. That includes data filtering tools, output validation layers, human-in-the-loop review systems, and logging infrastructure. JPMorgan Chase saved $47 million by killing a contract analysis tool after controls revealed it violated SEC rules.

- Monitor continuously - Use tools like WhyLabs, Fiddler, or custom dashboards. High-risk apps (customer-facing, legal, medical) need real-time monitoring. Internal tools can be checked daily or weekly. Set alerts for spikes in error rates or data exposure.

- Recalibrate quarterly - Regulations change. Models drift. New risks emerge. Review your risk-adjusted ROI every three months. Update probabilities. Adjust financial impacts. Rebalance your controls.
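The baseline, control-budget, and monitoring steps above can be sketched as a small check that compares current metrics against sandbox baselines and flags spikes. This is an illustrative sketch, not the API of WhyLabs, Fiddler, or any other tool. The `RiskMetric` class, the 25% tolerance, and all metric values are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class RiskMetric:
    name: str
    baseline: float      # value measured during the sandbox period
    current: float       # latest monitored value
    max_increase: float  # tolerated relative rise over baseline (0.25 = 25%)


def is_breached(metric: RiskMetric) -> bool:
    """Return True when the metric has risen past its tolerated increase."""
    if metric.baseline == 0:
        # Any occurrence of an event never seen in the sandbox counts as a breach.
        return metric.current > 0
    relative_change = (metric.current - metric.baseline) / metric.baseline
    return relative_change > metric.max_increase


def control_budget_range(total_project_cost: float) -> tuple[float, float]:
    """15-25% of total project cost, the range suggested in the text."""
    return 0.15 * total_project_cost, 0.25 * total_project_cost


# Hypothetical readings for three of the metrics named in the text.
metrics = [
    RiskMetric("hallucination_rate", baseline=0.04, current=0.07, max_increase=0.25),
    RiskMetric("pii_exposure_events", baseline=0, current=0, max_increase=0.0),
    RiskMetric("human_override_rate", baseline=0.10, current=0.11, max_increase=0.25),
]

alerts = [m.name for m in metrics if is_breached(m)]
print("Alerts:", alerts)

low, high = control_budget_range(1_000_000)  # hypothetical $1M project
print(f"Control budget: ${low:,.0f} to ${high:,.0f}")
```

Here only the hallucination rate trips an alert, since it rose 75% over its baseline. At each quarterly recalibration, the baselines and tolerances would be revisited along with the probabilities and financial impacts in the ROI model.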

Who Needs This Most (and Who Doesn’t)

Risk-adjusted ROI isn’t for everyone. It’s essential for regulated industries:

- Financial services - 83% of major banks require it (Thomson Reuters, 2024)

- Healthcare - 76% of Fortune 500 providers use it

- Government - 68% adoption, driven by federal AI guidelines

It’s less critical for low-risk use cases:

- Internal content summarization

- Non-sensitive marketing copy generation

- Experimental prototypes with no live users

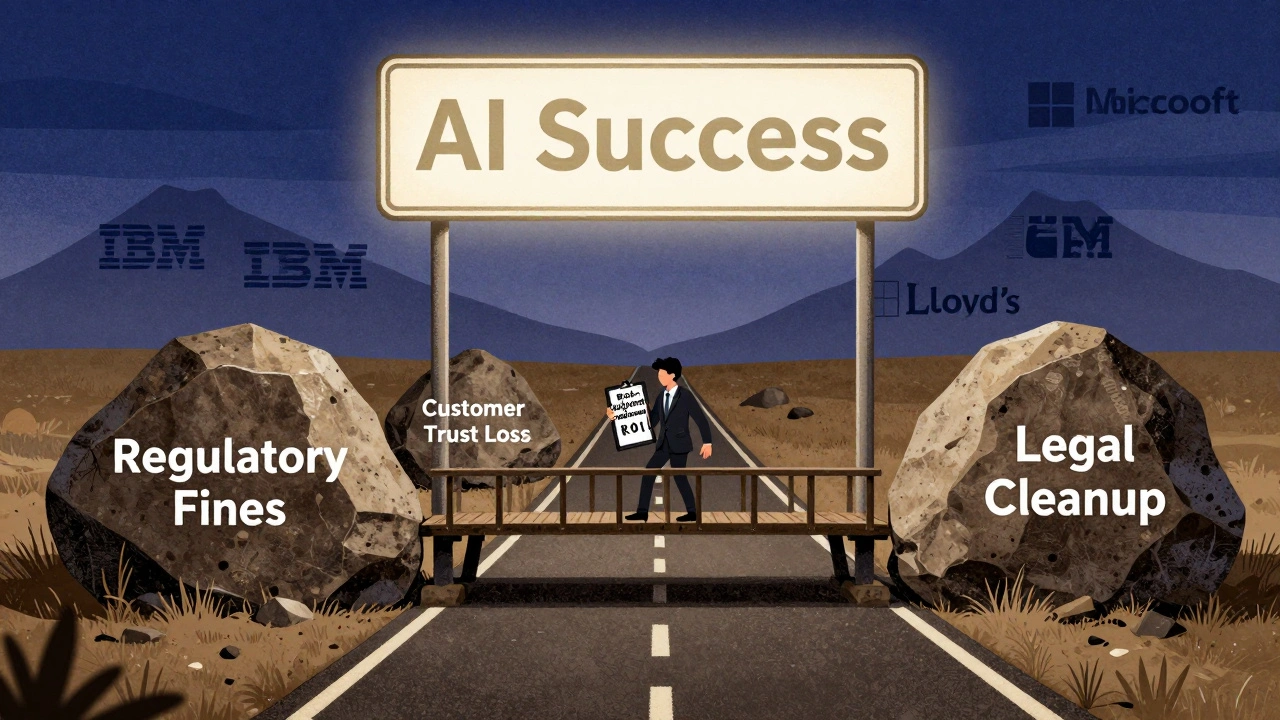

What Happens If You Don’t Do It

The cost of ignoring risk-adjusted ROI isn’t just financial. It’s reputational, legal, and existential.

- A bank in Canada lost 12% of its retail customers after an AI loan advisor gave false credit advice.

- A pharmaceutical company’s stock dropped 18% after an AI-generated clinical trial summary contained fabricated data.

- A U.S. school district was fined $3.2 million for using an AI grading tool that discriminated against non-native English speakers.

Dr. Emily Martin at Columbia SIPA says 70-90% of enterprise AI projects fail to deliver promised value over five years because they never accounted for risk. That’s not a technology problem. It’s a leadership problem.

The Future Is Risk-Adjusted

This isn’t a trend. It’s becoming mandatory.

- The Financial Stability Board is finalizing rules in Q2 2025 requiring risk-adjusted ROI for all AI investments over $500,000.

- ISO is developing ISO/IEC 23090-12, the first global standard for AI ROI measurement, expected in December 2024.

- IBM, Microsoft, and ServiceNow have already built risk-adjusted ROI templates into their AI platforms.

- Lloyd’s of London launched AI insurance pilots in early 2025, with policies priced based on your risk-adjusted ROI score.

By 2027, Gartner predicts 90% of enterprise AI investments will require formal risk-adjusted analysis. If you’re not doing it now, you’ll be forced to do it later: under pressure, under audit, and with higher costs.

Final Thought: ROI Without Risk Is Fantasy

Generative AI isn’t magic. It’s a tool. And like any tool, it has limits. Ignoring its risks isn’t optimism. It’s negligence. The companies winning with AI aren’t the ones spending the most. They’re the ones measuring the most. Your ROI isn’t just about what the AI earns. It’s about what it doesn’t cost you.

What’s the difference between traditional ROI and risk-adjusted ROI for generative AI?

Traditional ROI only looks at gains minus costs. Risk-adjusted ROI subtracts the expected cost of failures, like legal fines, data breaches, hallucinations, and compliance fixes. It turns a hopeful projection into a realistic forecast. For example, a model might promise $1M in savings, but if it’s likely to trigger a $300K GDPR fine, the real return is $700K, not $1M.

How much does implementing risk-adjusted ROI cost?

Adding risk-adjusted ROI typically adds 4-8 weeks to project planning and requires 3-5x more data inputs than traditional ROI. Most companies spend 15-25% of their total AI budget on controls like data filters, validation layers, and monitoring tools. The cost of not doing it? Often 10x higher in fines, lawsuits, and lost trust.

Do I need special software to calculate risk-adjusted ROI?

You don’t need fancy tools to start, but you’ll need them to scale. Basic calculations can be done in Excel. But for real-time monitoring, you’ll need AI observability platforms like WhyLabs or Fiddler. For governance, tools like ServiceNow GRC, IBM OpenPages, or RSA Archer integrate risk metrics into your existing compliance workflows. Microsoft and IBM now include risk-adjusted ROI templates in Azure AI Studio and Watsonx.governance.

Is risk-adjusted ROI only for big companies?

No. Even small companies using AI in customer service, HR, or finance need it. The EU AI Act applies to any business deploying high-risk AI, even startups. The difference is scale: a small company might track 5 risks instead of 12. But skipping it entirely? That’s risky at any size. One mid-sized insurer paid $2.8M in fines after an AI underwriting tool misclassified claims.

What if my AI project is experimental?

If it’s truly experimental (no live users, no sensitive data, no regulatory exposure), then you can delay full risk-adjusted ROI. But even then, track basic metrics: error rates, data exposure, and human override frequency. Treat it like a lab experiment. Document everything. If it scales, you’ll be ready. If it doesn’t, you won’t have wasted time building a complex model.

Can I use open-source tools for risk-adjusted ROI?

Yes. NIST’s AI Risk Management Framework Playbook is free and provides a solid foundation. You can build custom dashboards using open-source monitoring libraries like MLflow or Prometheus. But you’ll still need to define your risk metrics, assign financial values, and train your finance team. Open-source gives you the framework. It doesn’t give you the discipline. That’s on you.

How often should I update my risk-adjusted ROI model?

Quarterly. Regulations change. Models drift. New vulnerabilities appear. If you’re in a regulated industry, update it every 90 days. If you’re in a fast-moving space like marketing or customer service, review it monthly. The model isn’t static. It’s a living metric.

Ashley Kuehnel

9 December, 2025 - 15:01 PM

Wow this is so needed. I work in fintech and we just got burned by an AI chatbot that gave clients wrong loan terms. We thought ROI was 200% until the compliance team showed up with a 300-page audit. Now we’re doing risk-adjusted numbers and honestly? It’s ugly but real. Also, typo: 'hallucinations' is spelled right but my fingers keep typing 'hallucinations' like it’s a drug problem. Which, honestly, it kinda is.

adam smith

11 December, 2025 - 03:32 AM

This is a well structured document. The methodology presented is both rigorous and applicable. I commend the author for addressing the often-overlooked fiscal implications of non-compliance in AI deployment. The inclusion of quantified risk factors elevates this beyond anecdotal observation.

michael Melanson

11 December, 2025 - 05:39 AM

I’ve seen this play out three times now. Teams get excited about AI because it saves 10 hours a week. Then the legal team finds out the model generated a fake contract clause that cost $2.1M in settlements. We added a $150K control budget last year and saved $4.2M in avoided fines. It’s not sexy but it’s the only way to sleep at night.

lucia burton

12 December, 2025 - 00:22 AM

Let’s be real - traditional ROI is the financial equivalent of wearing flip-flops to a construction site. You think you’re saving time and looking casual, but you’re one misstep away from losing a toe. Risk-adjusted ROI isn’t just a framework, it’s a survival protocol. When you’re dealing with generative AI in regulated spaces, you’re not optimizing for efficiency - you’re engineering liability mitigation. And if your CFO doesn’t get that, you’re not underfunded, you’re under-protected. The EU AI Act isn’t coming - it’s already here, and it’s got teeth.

Denise Young

12 December, 2025 - 15:32 PM

Oh sweet mercy, here we go again. Another whitepaper that says ‘you’re doing it wrong’ while charging $12K for the ‘risk-adjusted ROI playbook’. Look, I get it - risk matters. But let’s not pretend every startup with a chatbot needs a $500K governance stack. Sometimes the risk is just… a bad answer. Not a class-action lawsuit. I’ve seen teams spend 6 months building guardrails for a tool that writes product descriptions for a niche blog. The ROI? Negative. The risk? Zero. Stop over-engineering. Start measuring what actually matters.

Sam Rittenhouse

13 December, 2025 - 21:59 PM

I work with small nonprofits using AI to draft grant applications. We don’t have a legal team. We don’t have a compliance officer. But we do have elderly donors who trust us. When our AI accidentally generated a fake success story about a deceased beneficiary - that wasn’t a technical error. That was a betrayal. We didn’t need a fancy model. We needed a human review step. And a heart. Risk-adjusted ROI isn’t just math. It’s ethics with a balance sheet. If your AI hurts someone, no amount of ROI can fix that. Please - don’t let the numbers blind you to the people.

Peter Reynolds

15 December, 2025 - 06:06 AM

Agreed on the quarterly updates. We’ve had models drift so hard we thought we were running a different system. Also the part about open source tools - yeah you can build it but you still need someone to care enough to check the dashboards. Most teams set it up and forget it. That’s worse than not doing it at all