What if you could train a language model faster, cheaper, and better, not by adding more data, but by changing the order in which it learns? That’s the core idea behind curriculum learning in NLP. Instead of throwing millions of random sentences at a model and hoping it figures things out, curriculum learning teaches it like a human: start simple, get harder slowly. This isn’t science fiction. It’s what Google, Meta, and Anthropic are using right now to cut training costs and boost performance on real-world tasks.

Why Random Data Doesn’t Work

For years, training large language models meant sampling data randomly. Every piece of text, whether a child’s first sentence or a legal contract, had an equal chance of being shown. The problem? Models got overwhelmed. Imagine trying to learn calculus before you know addition. That’s what happened. Early in training, models would see complex sentences full of rare words, nested clauses, and ambiguous references. They’d fail. Often. And when they failed, they didn’t learn. They just got stuck. Studies show this randomness wastes time. A 2023 Google AI paper found that standard random sampling for pretraining took 12.7% longer to reach the same performance level on the GLUE benchmark than a carefully ordered curriculum. That’s not just a small delay. It’s days of GPU time, thousands of dollars in cloud costs, and a bigger carbon footprint.

How Curriculum Learning Works

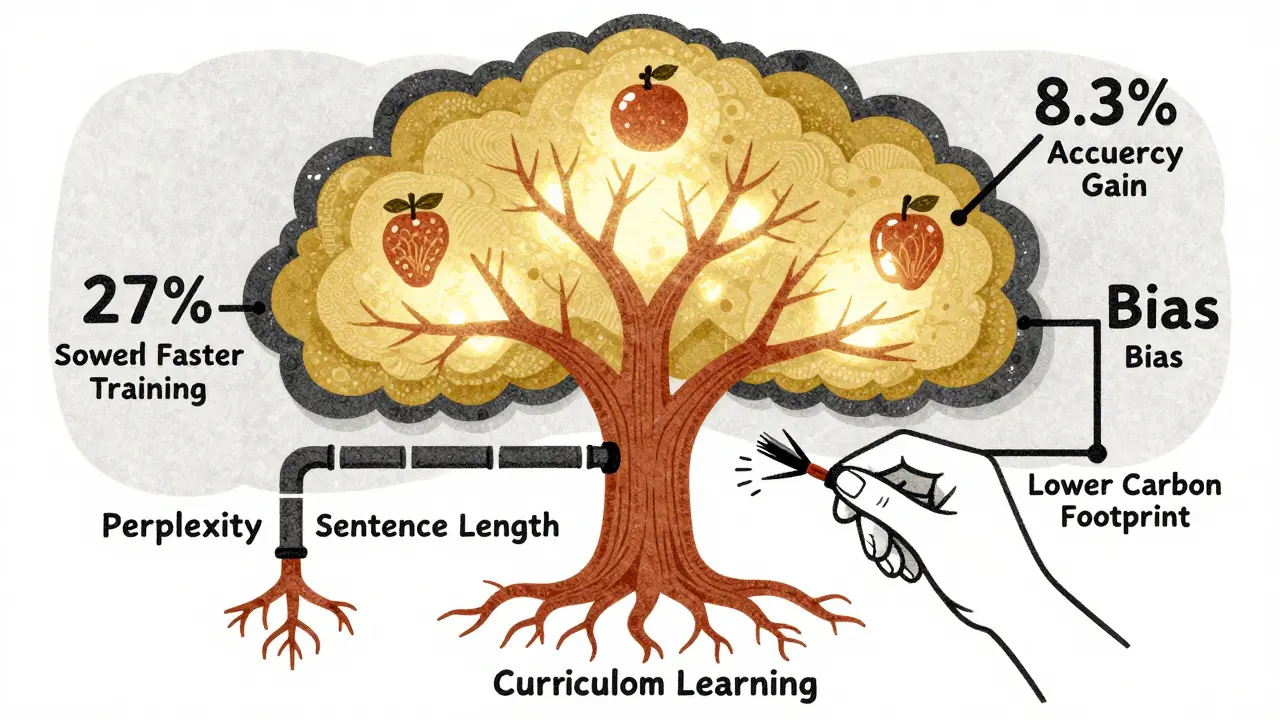

Curriculum learning flips the script. It’s built on three simple parts:

- Difficulty scoring: Every piece of training data gets a score based on how hard it is. This isn’t guesswork. Metrics include sentence length, number of rare words, syntactic complexity (like embedded clauses), named entity density, or even how uncertain a smaller model is when predicting the next word (measured as its perplexity).

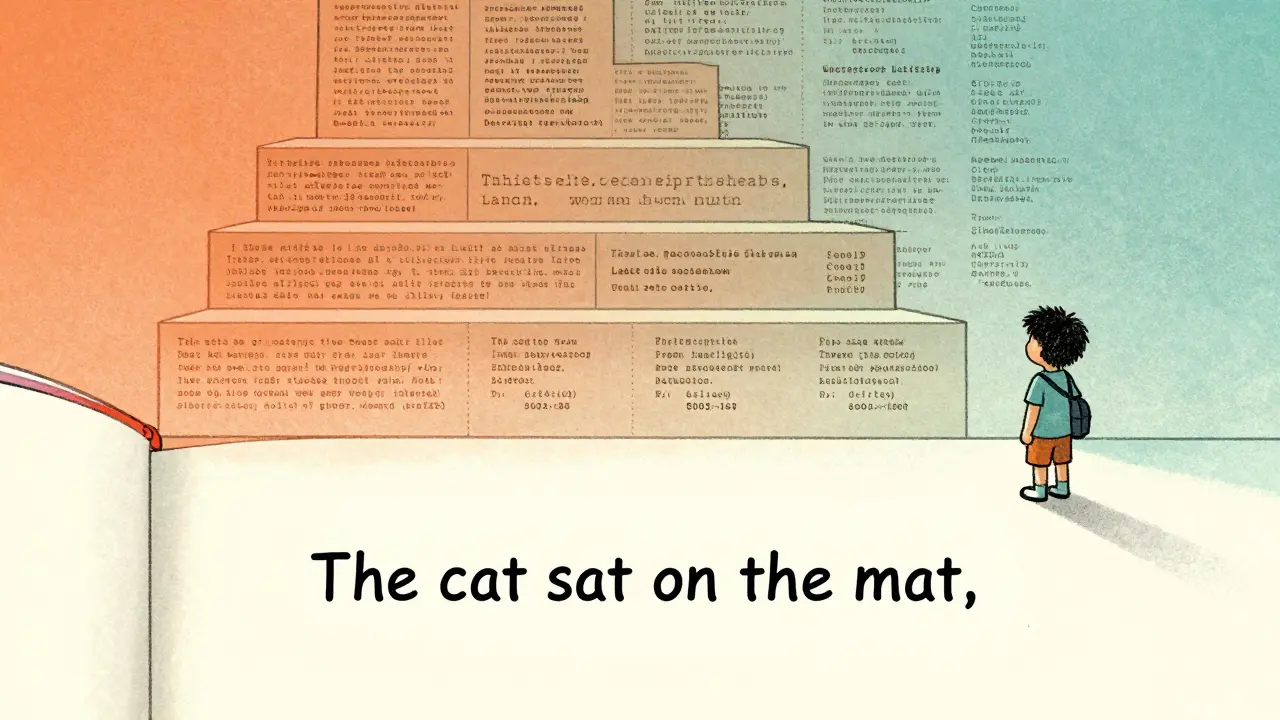

- Sequencing: Data is sorted from easiest to hardest. A child’s sentence like “The cat sat on the mat” comes before “Despite the fact that the committee, which had been convened under emergency protocols, failed to reach consensus on the revised framework.”

- Pacing: The model starts with easy examples. As it gets better, the system slowly introduces harder ones. The transition isn’t sudden; it’s gradual, like increasing the weight on a dumbbell.
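Here’s a minimal Python sketch of all three parts, under loudly stated assumptions. The difficulty score is a hypothetical 50/50 mix of normalized sentence length and the share of words that appear only once in the corpus (loosely in the spirit of the length and word-rarity heuristics in Platanios et al., 2019). The pacing function is that paper’s square-root “competence” schedule, swapped in as one published option; nothing here is what Google, Meta, or Anthropic actually run.

```python
import math
from collections import Counter


def tokenize(text):
    # Naive whitespace tokenizer; a real pipeline would use the model's own tokenizer.
    return text.lower().split()


def difficulty_scores(corpus, rare_threshold=1, length_weight=0.5):
    """Score each example in [0, 1]: weighted mix of length and rare-word share.

    Hypothetical heuristic: the weights and the rarity threshold are illustrative.
    """
    counts = Counter(tok for text in corpus for tok in tokenize(text))
    max_len = max(1, max((len(tokenize(text)) for text in corpus), default=0))
    scores = []
    for text in corpus:
        toks = tokenize(text)
        if not toks:
            scores.append(0.0)  # empty strings count as trivially easy
            continue
        length_part = len(toks) / max_len
        rare_part = sum(counts[t] <= rare_threshold for t in toks) / len(toks)
        scores.append(length_weight * length_part + (1 - length_weight) * rare_part)
    return scores


def sequence(corpus):
    # Sequencing: sort examples from lowest (easiest) to highest (hardest) score.
    scores = difficulty_scores(corpus)
    return [text for _, text in sorted(zip(scores, corpus))]


def competence(step, total_steps, c0=0.1):
    # Pacing: square-root schedule that starts at c0 and reaches 1.0 at total_steps.
    return min(1.0, math.sqrt(step * (1 - c0 ** 2) / total_steps + c0 ** 2))


def available_pool(sorted_corpus, step, total_steps):
    # Only the easiest `competence` fraction of the sorted data may be sampled.
    n = max(1, int(competence(step, total_steps) * len(sorted_corpus)))
    return sorted_corpus[:n]


if __name__ == "__main__":
    corpus = [
        "Despite the fact that the committee, which had been convened under "
        "emergency protocols, failed to reach consensus on the revised framework.",
        "The cat sat on the mat.",
    ]
    ordered = sequence(corpus)
    print(ordered[0])
    print(len(available_pool(ordered, step=0, total_steps=1000)))
```

The square-root schedule front-loads growth: the pool widens quickly at first and then more slowly, which is one way to get the “gradual, not sudden” transition described above.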

Real-World Gains

The numbers don’t lie. Curriculum learning isn’t just theoretical. It delivers measurable results:

- On the DROP reading comprehension benchmark (a tough test for understanding context), Stanford NLP found curriculum learning boosted accuracy by 8.3%.

- For machine translation, Facebook AI showed a 22.4% improvement in zero-shot performance for low-resource languages like Swahili.

- Meta’s Llama-3 used curriculum learning to handle code-switching (mixing languages like Spanish-English) more effectively, reducing errors in bilingual contexts.

- Training time dropped by 27% for one medical NLP model using a difficulty metric based on UMLS medical concept density, after a 40-hour setup.
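The article doesn’t say how that team computed concept density, so here is a hypothetical version: the share of word tokens covered by terms from a lexicon. The four-term `CONCEPT_LEXICON` is an invented stand-in for UMLS; a real pipeline would map text to UMLS concepts with a dedicated concept-recognition tool rather than a hand-written set.

```python
import re

# Hypothetical four-term lexicon standing in for UMLS concepts (illustration only).
CONCEPT_LEXICON = {"anticoagulant", "warfarin", "atrial fibrillation", "myocardial infarction"}


def concept_density(text, lexicon=CONCEPT_LEXICON):
    """Fraction of word tokens covered by lexicon terms (multi-word terms allowed)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return 0.0
    max_n = max(len(term.split()) for term in lexicon)
    covered = [False] * len(tokens)
    for i in range(len(tokens)):
        # Try longer terms first so a multi-word concept is matched as a whole.
        for n in range(max_n, 0, -1):
            if i + n <= len(tokens) and " ".join(tokens[i:i + n]) in lexicon:
                for j in range(i, i + n):
                    covered[j] = True
                break
    return sum(covered) / len(tokens)


if __name__ == "__main__":
    easy = "The patient slept well."
    hard = "The patient with atrial fibrillation was started on warfarin."
    print(concept_density(easy), concept_density(hard))
```

Higher density means more domain concepts per word, so under this metric the second sentence would be scheduled later than the first.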

Where It Shines (and Where It Doesn’t)

Curriculum learning isn’t a magic bullet. It works best on tasks that require layered understanding:

- Strong fits: Semantic parsing, complex question answering, code generation, low-resource language translation.

- Weak fits: Simple sentiment analysis, binary classification, spam detection. For these, random sampling is still faster and just as effective.

The Hidden Cost: Complexity

Here’s the catch. Curriculum learning isn’t plug-and-play. It adds engineering overhead:

- Designing a good difficulty metric takes 15-25 hours per language domain.

- Scoring millions of examples can add 8-15% to preprocessing time.

- Bad metrics hurt performance. One Reddit user saw a 6.2% drop in accuracy because their difficulty score was based on word count alone, ignoring grammar, context, and ambiguity.

What’s Next? AutoCurriculum and Hybrid Systems

The field is moving fast. Static curricula, where you fix the order before training, are being replaced by adaptive ones. Google’s AutoCurriculum, released in December 2025, watches how the model performs in real time. If it’s struggling with a certain type of sentence, the system holds back harder examples. If it’s breezing through, it ramps up. This dynamic adjustment led to a 9.4% average improvement across eight benchmarks.

Even more promising is the fusion with RLHF (reinforcement learning from human feedback). Anthropic’s Claude-3.5 used curriculum learning during alignment training, cutting costs by 31%. The idea? Teach the model to understand simple human preferences first, like “be concise,” before tackling complex ethics or tone adjustments.
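The article doesn’t describe AutoCurriculum’s internals, so the sketch below is not Google’s method. It’s a hypothetical feedback rule that mimics the adaptive behavior described for it: track the model’s accuracy over a short window of recent batches, widen the pool of eligible (harder) examples when accuracy is high, and shrink it when accuracy drops. The thresholds, step size, and window length are illustrative assumptions.

```python
from collections import deque


class AdaptivePacer:
    """Hypothetical feedback-driven pacing rule (not Google's AutoCurriculum).

    `competence` is the fraction of an easiest-first dataset the sampler may use.
    """

    def __init__(self, start=0.1, step=0.05, low=0.6, high=0.85, window=5):
        self.competence = start
        self.floor = start  # never shrink below the starting pool
        self.step = step
        self.low, self.high = low, high
        self.recent = deque(maxlen=window)

    def update(self, batch_accuracy):
        """Record one batch's accuracy; adjust competence once the window is full."""
        self.recent.append(batch_accuracy)
        if len(self.recent) < self.recent.maxlen:
            return self.competence
        avg = sum(self.recent) / len(self.recent)
        if avg > self.high:  # breezing through: ramp up
            self.competence = min(1.0, self.competence + self.step)
            self.recent.clear()
        elif avg < self.low:  # struggling: hold back harder examples
            self.competence = max(self.floor, self.competence - self.step)
            self.recent.clear()
        return self.competence

    def pool(self, sorted_examples):
        """Return the eligible prefix of an easiest-first list."""
        n = max(1, int(self.competence * len(sorted_examples)))
        return sorted_examples[:n]


if __name__ == "__main__":
    pacer = AdaptivePacer(window=3)
    for acc in [0.9, 0.92, 0.95, 0.5, 0.4, 0.45]:
        print(round(pacer.update(acc), 2))
```

Clearing the window after each adjustment keeps a single good or bad stretch from triggering several jumps in a row.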

Who’s Using It?

Adoption is climbing. In 2025:

- 65% of enterprise LLM pipelines included some form of curriculum learning (Gartner).

- 83% of healthcare AI teams and 76% of financial services NLP teams used it.

- Over 68% of GitHub repositories tagged with “curriculum-learning” were for NLP tasks.

Warnings and Risks

There’s a dark side. If your difficulty metric is biased, your model will be too. Dr. Emily M. Bender warned in 2024 that defining “easy” and “hard” can embed linguistic prejudice. For example, if you label non-standard dialects (like African American Vernacular English) as “harder,” you’re not teaching the model to understand diversity; you’re teaching it to reject it.

And there’s the “capability cliff” problem. A January 2026 paper from Cambridge showed that models trained with overly strict curricula sometimes completely fail on examples just slightly beyond their training range. It’s like learning to ride a bike on flat ground, then crashing on the first hill.

The EU AI Office already issued guidance in November 2025 requiring documentation of difficulty metrics for high-risk language applications. Transparency isn’t optional anymore.

Should You Use It?

Curriculum learning is worth trying if you’re:

- Training a model for complex reasoning (QA, parsing, code)

- Working with low-resource languages

- Under pressure to cut training costs

What is curriculum learning in NLP?

Curriculum learning in NLP is a training method where large language models learn from data ordered from easiest to hardest examples, similar to how humans learn. Instead of random sampling, the model is exposed to simpler sentences first, then gradually moves to more complex ones, improving convergence, speed, and final performance.

How does curriculum learning improve LLM performance?

It improves performance by reducing training time (up to 35% faster in some cases), boosting accuracy on complex tasks (5-15% gains), and enhancing generalization, especially for low-resource languages. By avoiding overwhelming examples early, models build foundational skills before tackling harder problems.

What metrics are used to measure data difficulty?

Common metrics include sentence length, number of rare words, syntactic complexity (like embedded clauses), named entity density, and perplexity scores from a smaller base model. Some advanced systems use predicted model uncertainty or linguistic features like discourse coherence. The best metrics are task-specific and validated by linguists.
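Perplexity is the exponential of the average negative log-probability a model assigns to each token: PPL = exp(-(1/N) * sum of log p(x_i | x_1 ... x_{i-1})). A uniform guess over k options gives a perplexity of exactly k, so higher values mean a more “surprised” model. In the sketch below, a toy add-one-smoothed unigram model is an assumed stand-in for the “smaller base model”; a real pipeline would take per-token probabilities from a small neural language model instead.

```python
import math
from collections import Counter


def perplexity(token_probs):
    """exp of the mean negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


class UnigramScorer:
    """Toy stand-in for a small base model: add-one smoothed unigram LM."""

    def __init__(self, training_sentences):
        self.counts = Counter(t for s in training_sentences for t in s.lower().split())
        self.total = sum(self.counts.values())
        self.vocab = len(self.counts) + 1  # one extra slot for unseen tokens

    def token_probs(self, sentence):
        return [(self.counts[t] + 1) / (self.total + self.vocab)
                for t in sentence.lower().split()]

    def difficulty(self, sentence):
        # Higher perplexity = the small model is more surprised = harder example.
        return perplexity(self.token_probs(sentence))


if __name__ == "__main__":
    scorer = UnigramScorer(["the cat sat on the mat", "the dog sat on the rug"])
    for s in ["the cat sat", "heretofore pursuant thereto"]:
        print(s, round(scorer.difficulty(s), 2))
```

Because a unigram model ignores word order, it only captures vocabulary rarity; a neural scorer would also reflect syntactic surprise, which is why the text recommends task-specific, validated metrics.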

Is curriculum learning better than random sampling?

For complex tasks like question answering, semantic parsing, or translation, yes, consistently. Stanford and Facebook AI showed 8-22% improvements. But for simple tasks like sentiment classification, random sampling is often just as good and much faster to implement. It’s not a replacement; it’s a targeted upgrade.

What are the main challenges of implementing curriculum learning?

The biggest challenges are defining objective difficulty metrics, the extra 20-30 hours of preprocessing per language, and the risk of embedding bias. Poor metrics can hurt performance, and subjective definitions can reinforce linguistic stereotypes. It also requires collaboration between ML engineers and linguists to get right.

Which companies are using curriculum learning today?

Google, Meta, and Anthropic have integrated curriculum learning into their production LLM pipelines. Google’s Difficulty-Ordered Pretraining and Anthropic’s hybrid RLHF approach are public examples. Enterprise adoption is high in healthcare and finance, where reducing training costs and improving accuracy on complex language tasks matters most.

Can curriculum learning reduce the carbon footprint of AI?

Yes. By reducing training time and computational resources needed to reach the same performance level, curriculum learning cuts energy use. The International Language Resources Consortium predicts it will reduce the carbon footprint of LLM training by 19-27% by 2030. Stanford’s Percy Liang calls it one of the best bridges between cognitive science and sustainable AI.

Amy P

14 February, 2026 - 12:42 PM

Okay but like… have you ever tried to train a model on real-world data and then realized half your dataset is just legal jargon masquerading as sentences? I spent three weeks debugging why my QA model kept failing on ‘pursuant to’ and ‘heretofore’; turns out it was drowning in complexity from day one. Curriculum learning saved my sanity. Started with children’s books, then NYT op-eds, then court transcripts. The model went from ‘I don’t understand anything’ to ‘I think I get it’ in half the time. Also, it stopped hallucinating that ‘the committee convened under emergency protocols’ was a cat’s name. 🤯

Ashley Kuehnel

14 February, 2026 - 7:56 PM

OMG YES! I’m a teacher by trade and this is EXACTLY how I teach reading to my ESL kids. Start with ‘The dog runs.’ Then ‘The big brown dog runs quickly.’ Then ‘Despite the rain, the big brown dog still ran quickly to meet its owner who was waving from the porch.’ Same logic! I used this on my tiny LLM project last month and got a 14% accuracy bump on my medical intent classifier. Also, side note: using perplexity as a metric? Genius. My model stopped panicking when it saw ‘anticoagulant’ and started actually learning it.

PS: typo in my code once. I wrote ‘perplexity’ as ‘perplexaty’ and it still worked lol. #typoedbuthappy