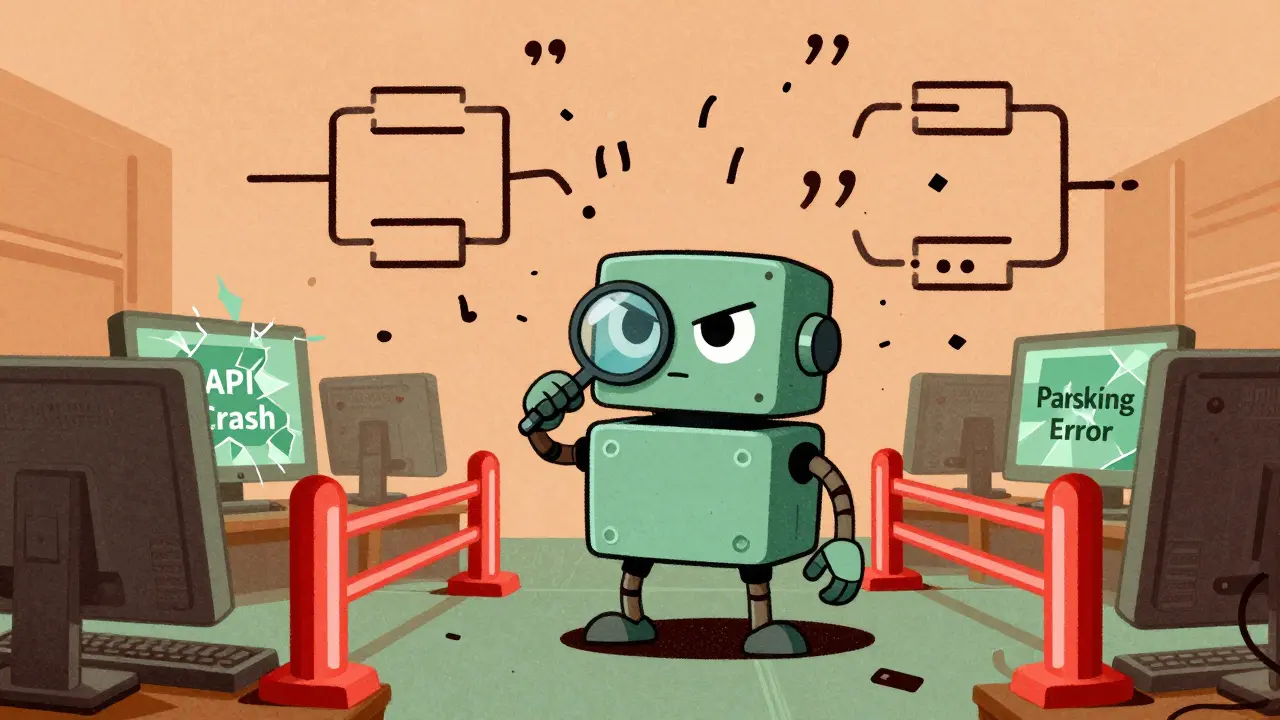

Large language models can write essays, code, and even poetry, but when you need them to spit out clean, valid JSON, a properly formatted API response, or a strict regex match, things fall apart. You ask for a date in YYYY-MM-DD format, and the model gives you "March 14th, 2025". You want a list of user IDs, and it adds extra commas, drops brackets, or just makes up keys. This isn’t a bug. It’s how LLMs work. They predict the next token based on patterns, not rules. That’s fine for storytelling. Not fine for databases, APIs, or compliance systems.

Why Your LLM Output Keeps Breaking

Unconstrained generation means the model picks the next token (any token) with no regard for structure. It doesn’t know the difference between a closing brace and a comma. It doesn’t care if your JSON is valid. It just picks a likely next token based on patterns in its training data. And in training data, people write messy JSON. They forget commas. They use single quotes. They nest objects wrong. So the model learns to do the same.

Studies show that without constraints, up to 38.2% of LLM-generated JSON outputs are malformed in zero-shot scenarios. That’s nearly four in ten responses you have to clean up manually or, worse, feed into a system that crashes because of a missing quote. For financial reports, medical records, or API integrations, that’s unacceptable.

What Constrained Decoding Actually Does

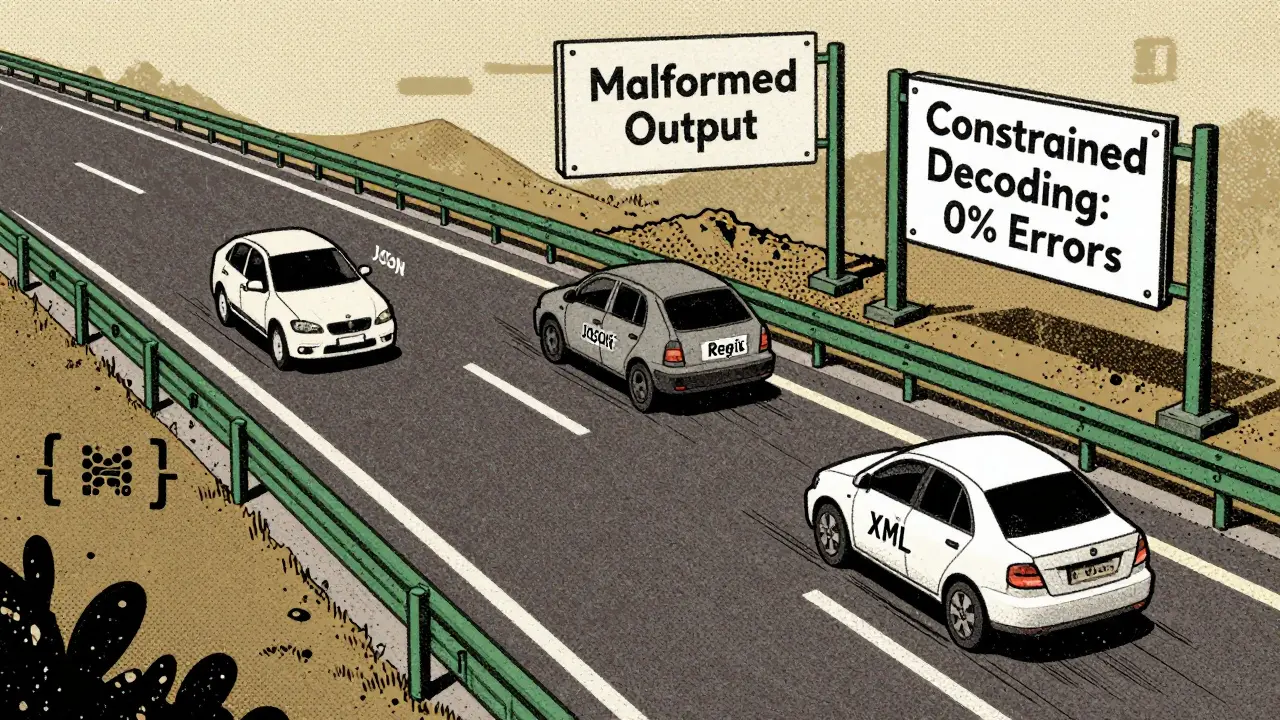

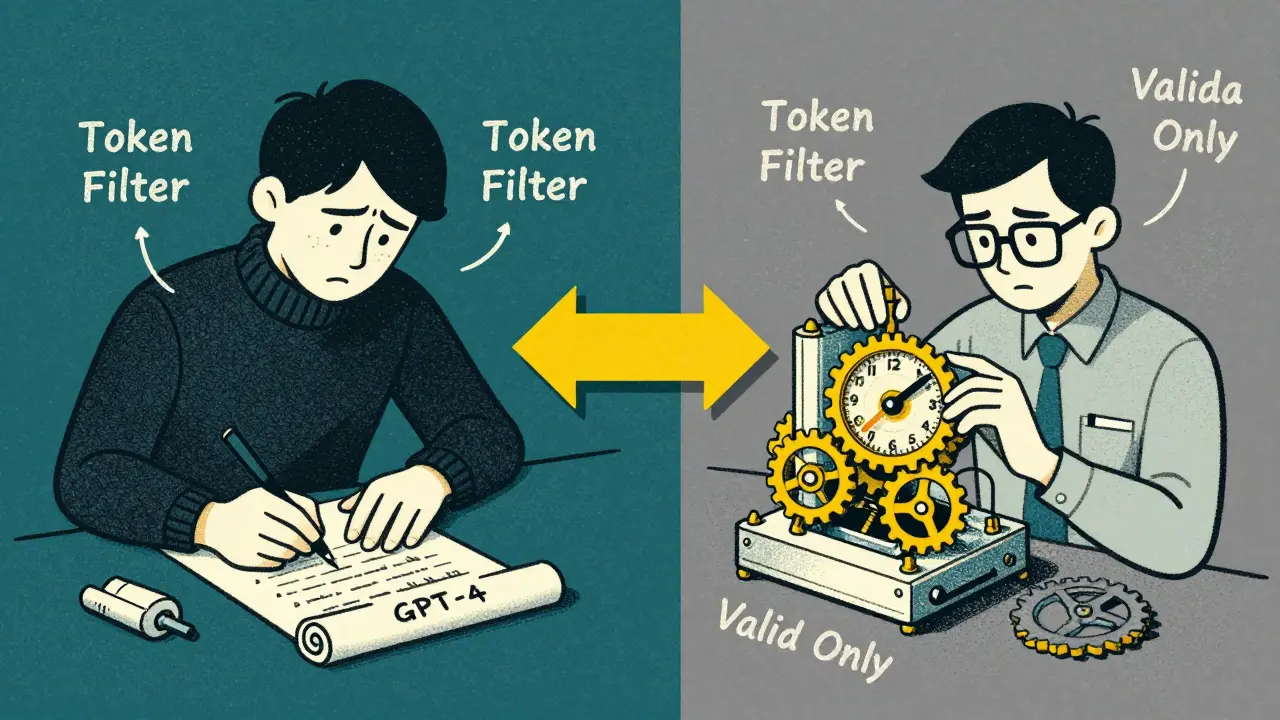

Constrained decoding flips the script. Instead of letting the model choose freely, it blocks any token that would break your structure. At every step of generation, it filters the model’s options to only include tokens that keep the output valid. If you’re generating JSON, it won’t let the model add a comma after the last item. If you’re using regex, it won’t allow characters outside your pattern. It’s like putting guardrails on a highway: cars can still drive fast, but they can’t go off-road.

This isn’t post-processing. It’s not a script that fixes bad output after the fact. It’s built into the generation process itself. The model still predicts probabilities, but only from a restricted set of valid tokens. That’s why it’s called constrained: you constrain the choices at each step instead of repairing the output afterward.

Mathematically, each token is drawn from $p(x_i \mid x_{<i}, S)$: the probability of the next token $x_i$ given the previous tokens $x_{<i}$ and the constraint $S$ (your JSON schema, regex, etc.). The constraint $S$ shrinks the possible next tokens from the full vocabulary to just the ones that won’t break the format.
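A common way to apply $S$, assumed here, is logit masking. Tokens outside the allowed set get probability zero, and the rest are renormalized:

$$p(x_i \mid x_{<i}, S) = \frac{\exp(z_{x_i}) \, \mathbb{1}[x_i \in A_S(x_{<i})]}{\sum_{v \in A_S(x_{<i})} \exp(z_v)}$$

In this formula, $z_v$ is the model’s logit for token $v$, and $A_S(x_{<i})$ is the set of tokens that keep the prefix valid under $S$. The Python below is a minimal sketch of that one step. The five-token vocabulary, the logits, and the function name are all made up for illustration.

```python
import math


def masked_softmax(logits: dict[str, float], allowed: set[str]) -> dict[str, float]:
    """Renormalize next-token probabilities over the allowed tokens only."""
    # Disallowed tokens get probability 0 (equivalent to a logit of -inf).
    kept = {tok: z for tok, z in logits.items() if tok in allowed}
    if not kept:
        raise ValueError("constraint leaves no valid next token")
    m = max(kept.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(z - m) for tok, z in kept.items()}
    total = sum(exps.values())
    return {tok: exps.get(tok, 0.0) / total for tok in logits}


# Hypothetical logits at a point where the grammar allows only ',' or '}'.
# The model's favorite token here is a single quote, which JSON forbids.
logits = {",": 1.0, "}": 1.0, "'": 2.5, "\n": 0.5, "]": 0.2}
probs = masked_softmax(logits, allowed={",", "}"})
print(probs)  # ',' and '}' each get 0.5; every other token gets 0.0
```

The model’s scores are not changed. Only the set of candidates is restricted, which is why the output can’t break the format.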

How JSON Constraints Work in Practice

Generating valid JSON is one of the most common use cases. You need keys in quotes, values in the right type, arrays with commas, no trailing commas, and proper nesting. Constrained decoding handles all of this automatically.

Here’s how it works step-by-step:

- You define your JSON schema: {"name": "string", "age": "integer", "hobbies": ["string"]}

- When the model starts generating, it first needs to output an opening brace: {

- After that, only string literals (like "name") are allowed as next tokens

- Then a colon (:) is required

- Then, only valid values: string, number, boolean, null, or another object/array

- If the model tries to output a comma after the last field, the constraint blocks it

- When it’s time to close, only } is allowed
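The steps above amount to a small state machine. The Python below is a minimal sketch for the example schema, not how any particular engine implements it. It makes three simplifying assumptions: keys must appear in the listed order, each key and punctuation mark counts as a single token, and actual values are replaced by placeholder tokens such as `STRING` and `INTEGER`. All names in it are hypothetical. Real engines compile the schema into an automaton over the model’s actual subword vocabulary.

```python
# Simplified version of the example schema: keys in a fixed order, and value
# types shown as placeholder tokens ("STRING", "INTEGER") instead of real text.
FIELDS = [("name", "STRING"), ("age", "INTEGER"), ("hobbies", "STRING_ARRAY")]


def _options(state: str, field: int) -> set[str]:
    """Tokens the grammar allows in a given state."""
    if state == "start":
        return {"{"}
    if state == "key":
        return {f'"{FIELDS[field][0]}"'}
    if state == "colon":
        return {":"}
    if state == "value":
        kind = FIELDS[field][1]
        return {"["} if kind == "STRING_ARRAY" else {kind}
    if state == "array_open":
        return {"STRING", "]"}   # empty array or first item
    if state == "array_item":
        return {",", "]"}        # another item or close the array
    if state == "array_comma":
        return {"STRING"}        # no trailing comma: an item must follow
    if state == "after_value":
        # A comma only if another field follows; otherwise the object must close.
        return {","} if field < len(FIELDS) - 1 else {"}"}
    return set()                 # "done": nothing may follow


def _advance(state: str, field: int, tok: str) -> tuple[str, int]:
    """Move to the next state after emitting an allowed token."""
    simple = {"start": "key", "key": "colon", "colon": "value",
              "array_comma": "array_item"}
    if state in simple:
        return simple[state], field
    if state == "value":
        return ("array_open" if tok == "[" else "after_value"), field
    if state in ("array_open", "array_item"):
        if tok == "]":
            return "after_value", field
        return ("array_item" if tok == "STRING" else "array_comma"), field
    # state == "after_value": either move to the next key or finish.
    return ("key", field + 1) if tok == "," else ("done", field)


def allowed_next(prefix: list[str]) -> set[str]:
    """Replay `prefix` through the grammar and return the allowed next tokens."""
    state, field = "start", 0
    for tok in prefix:
        if tok not in _options(state, field):
            raise ValueError(f"token {tok!r} not allowed in state {state!r}")
        state, field = _advance(state, field, tok)
    return _options(state, field)


prefix = ["{", '"name"', ":", "STRING", ",", '"age"', ":", "INTEGER", ",",
          '"hobbies"', ":", "[", "STRING"]
print(sorted(allowed_next(prefix)))          # [',', ']']
print(sorted(allowed_next(prefix + ["]"])))  # ['}']: no trailing comma after the last field
```

Each call replays the whole prefix, which keeps the sketch short. An engine would instead carry the state forward from one step to the next.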

Result? Zero malformed JSON. No more parsing errors. No more try-catch blocks to handle invalid responses. In tests, constrained decoding reduces JSON errors from 38.2% down to 0% in zero-shot setups. That’s not an improvement; it’s a fix.

Regex Constraints: Precision Over Guesswork

Regex isn’t just for validation. It can be a blueprint for generation. Want all phone numbers in +1-XXX-XXX-XXXX format? Or email addresses that match your company’s domain? Constrained decoding lets you feed a regex pattern directly into the generation engine.

Instead of letting the model guess an address on the wrong domain, a malformed address, or even “john at gmail dot com,” the system only allows tokens that fit your exact pattern. For example:

- After “@”, only letters, digits, hyphens, and dots are permitted

- After a dot, only 2-6 letters are allowed (no “.com.au” if your regex forbids it)

- No spaces. No underscores. No uppercase unless your regex allows it
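Here is a sketch of the phone-number case. Real engines compile the regex into an automaton over the model’s tokenizer vocabulary. This minimal Python version swaps in simpler parts, all hypothetical: a toy character-level vocabulary, a made-up scoring function standing in for the model, and the pattern hand-compiled into one allowed-character set per position. The toy model prefers letters, much like the model that answers “March 14th” when you asked for digits.

```python
import re
import string

# Hypothetical toy vocabulary: single characters, letters included.
VOCAB = string.ascii_letters + string.digits + " +-"

# The pattern +1-XXX-XXX-XXXX, hand-compiled into one allowed-character set per
# position. A real engine would derive this from the regex automatically.
PHONE = (["+", "1", "-"] + [string.digits] * 3 + ["-"]
         + [string.digits] * 3 + ["-"] + [string.digits] * 4)


def toy_score(prefix: str, ch: str) -> float:
    """Stand-in for a model: it strongly prefers letters over everything else."""
    if ch.isalpha():
        return 5.0
    return (ord(ch) * 7 + len(prefix)) % 10 / 10


def generate(template: list[str], constrained: bool = True) -> str:
    out = ""
    for allowed in template:
        # The mask: keep only vocabulary entries the pattern allows here.
        candidates = [c for c in VOCAB if c in allowed] if constrained else list(VOCAB)
        out += max(candidates, key=lambda c: toy_score(out, c))  # greedy pick
    return out


print(generate(PHONE, constrained=False))  # aaaaaaaaaaaaaaa
phone = generate(PHONE)
print(bool(re.fullmatch(r"\+1-\d{3}-\d{3}-\d{4}", phone)))  # True
```

The same model and the same greedy rule produce garbage without the mask and a well-formed number with it. Which digits come out still depends entirely on the model.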

One developer on Reddit used this to extract customer data from support chats. Before constraints, 27% of extracted emails were invalid. After implementing regex-constrained decoding, that dropped to 2%. It didn’t need fine-tuning. Just a simple pattern and a few lines of code.

Schema Control: Beyond JSON to Custom Structures

JSON is just one format. What if you need XML? YAML? A custom protocol? That’s where schema control comes in. Tools like NVIDIA’s Triton Inference Server let you define grammars using context-free grammar rules or JSON Schema. The system then builds a state machine that tracks which tokens are valid at each point.

For example, if you’re generating a medical record format that requires:

- patient_id: 9-digit number

- diagnosis: one of ["flu", "diabetes", "hypertension"]

- medications: array of objects with name, dosage, frequency

The system doesn’t just check the final output. It enforces the structure as it’s being built. It won’t let the model write “diagnosis: heart attack” because that’s not in the allowed list. It won’t let the model skip the dosage field. It won’t let it add an extra comma in the medications array.
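For the diagnosis field, the rule is simple: at each step, allow only characters that keep the text a prefix of some allowed value. Here is a minimal Python sketch. It assumes character-level generation and uses hypothetical helper names; engines apply the same check to subword tokens.

```python
DIAGNOSES = ["flu", "diabetes", "hypertension"]


def allowed_next_chars(prefix: str, options: list[str] = DIAGNOSES) -> set[str]:
    """Characters that keep `prefix` on the way to at least one allowed value."""
    return {opt[len(prefix)] for opt in options
            if opt.startswith(prefix) and len(opt) > len(prefix)}


def is_complete(prefix: str, options: list[str] = DIAGNOSES) -> bool:
    """True once the prefix spells out a full allowed value."""
    return prefix in options


print(sorted(allowed_next_chars("")))  # ['d', 'f', 'h']
print(allowed_next_chars("h"))         # {'y'}: "heart attack" can never get started
print(is_complete("flu"))              # True
```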

This is critical in regulated industries. Healthcare and finance need structured, auditable outputs. Constrained decoding makes the structure automatic. No more human reviewers checking every response for formatting errors.

Performance Trade-Offs: Speed vs. Accuracy

There’s a cost: constrained decoding slows things down, by 5-8% on average according to NVIDIA’s benchmarks. That’s because the system has to check every token against the constraint grammar. For real-time apps, that might matter.

But here’s the twist: it’s not always slower. Sometimes it’s faster, because constrained decoding can skip “boilerplate” tokens. If the grammar requires a comma after every key-value pair except the last one, the model doesn’t need to weigh alternatives; the engine just emits the comma. That cuts down on unnecessary token generation.
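Here is the speed-up side in a few lines. When the constraint leaves exactly one legal next token, an engine can append it without asking the model to choose; some engines call this jump-forward decoding. The Python below is a simplified illustration. It assumes character-level decoding and a hypothetical `choose` callback standing in for the model. In a real engine, forced tokens still have to be fed through the model so that later predictions can condition on them, but they can be processed together instead of one sampling step at a time.

```python
import string
from collections.abc import Callable

# Hypothetical per-position allowed sets for +1-XXX-XXX-XXXX.
# A one-character set means the next character is forced.
PHONE = (["+", "1", "-"] + [string.digits] * 3 + ["-"]
         + [string.digits] * 3 + ["-"] + [string.digits] * 4)


def decode(template: list[str], choose: Callable[[str, str], str]) -> tuple[str, int]:
    """Fill the template, calling `choose` (the model) only when there is a real choice."""
    out, model_calls = "", 0
    for allowed in template:
        if len(allowed) == 1:
            out += allowed  # forced: no sampling step needed
        else:
            model_calls += 1
            out += choose(out, allowed)
    return out, model_calls


# Stand-in "model" that always picks the first allowed character.
text, calls = decode(PHONE, lambda prefix, allowed: allowed[0])
print(text, calls)  # +1-000-000-0000 10
```

Five of the fifteen characters are fixed by the pattern, so only ten steps need the model. That is the effect the paragraph above describes.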

And here’s the bigger trade-off: accuracy. For smaller models (under 14B parameters), constrained decoding boosts performance by up to 18.7% in zero-shot tasks. But for larger models, like Llama 3 70B or GPT-4, the picture changes. These models are so good at guessing patterns that unconstrained generation sometimes works better. Why? Because constraints force the model away from its natural preferences. The model’s confidence drops: a token it would have chosen with 90% probability can be blocked, forcing it onto continuations it rated far lower, say 20%. That can make outputs feel stiff, repetitive, or even semantically wrong.

Research from Stanford shows constrained decoding introduces measurable bias. The output distribution shifts away from what the model truly “wants” to say. That’s fine if you need structure. Not fine if you need creativity.
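The bias claim can be checked on a toy example. Suppose a two-step model strongly prefers a first token whose only valid continuation it considers unlikely. Token-by-token masking commits to that first token anyway. The model’s own probabilities, restricted to valid outputs, would split evenly instead. The numbers and names below are made up. They show the mechanism, not the size of the effect in any study.

```python
# Toy two-step "model" with made-up probabilities.
P_FIRST = {"A": 0.9, "B": 0.1}
P_SECOND = {"A": {"x": 0.1, "y": 0.9}, "B": {"x": 0.9, "y": 0.1}}


def is_valid(seq: str) -> bool:
    return seq[1] == "x"  # the constraint: the second token must be "x"


def stepwise_masked() -> dict[str, float]:
    """Distribution produced by masking and renormalizing one token at a time."""
    dist = {}
    # Step 1: both "A" and "B" can still lead to a valid output, so neither is masked.
    for a, pa in P_FIRST.items():
        # Step 2: drop invalid tokens and renormalize what is left.
        allowed = {b: pb for b, pb in P_SECOND[a].items() if is_valid(a + b)}
        total = sum(allowed.values())
        for b, pb in allowed.items():
            dist[a + b] = pa * pb / total
    return dist


def exact_conditional() -> dict[str, float]:
    """The model's own distribution over whole sequences, restricted to valid ones."""
    joint = {a + b: pa * pb for a, pa in P_FIRST.items() for b, pb in P_SECOND[a].items()}
    valid = {s: p for s, p in joint.items() if is_valid(s)}
    z = sum(valid.values())
    return {s: p / z for s, p in valid.items()}


print({s: round(p, 3) for s, p in stepwise_masked().items()})    # {'Ax': 0.9, 'Bx': 0.1}
print({s: round(p, 3) for s, p in exact_conditional().items()})  # {'Ax': 0.5, 'Bx': 0.5}
```

Both methods produce only valid outputs, but they disagree on which valid output is likely. That gap is the distribution shift the research describes.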

Why Instruction-Tuned Models Struggle

Most people use instruction-tuned models (like Llama 3-Instruct or GPT-4-Turbo) because they’re good at following prompts. But here’s the surprise: they often perform worse under constraints.

Why? Because they’ve been trained to sound natural, not to be precise. They’ve learned to add fluff, use synonyms, and avoid rigid structures. When you force them into a box, they fight it. Studies show instruction-tuned models drop 17.1% in accuracy on structured tasks when constraints are applied. Meanwhile, base models (like the original Llama 3 without instruction tuning) improve by 9.4%.

It’s counterintuitive. You’d think the model that’s better at following instructions would do better with constraints. But it’s the opposite. The constrained output feels unnatural to the model. It’s like asking a poet to write a tax form.

When to Use It (and When to Avoid It)

Use constrained decoding when:

- You’re building APIs that need valid JSON responses

- You’re extracting structured data from unstructured text (invoices, forms, emails)

- You’re in a regulated industry (healthcare, finance, legal)

- You’re using small or mid-sized models with limited examples

- You can’t afford post-processing errors

Avoid it when:

- You’re generating creative content (stories, ads, emails)

- You’re using large models (14B+ parameters) with plenty of examples

- Your constraint grammar is overly complex (it causes more semantic errors than it fixes)

- You need the model to be flexible with phrasing or tone

One developer on GitHub reduced API post-processing errors from 32% to 0.4% using JSON constraints. But they also said it took three days to debug the grammar rules. That’s the hidden cost: setup complexity.

Implementation Tips

Getting started isn’t hard, but it’s not plug-and-play either.

- Start with JSON Schema. It’s the most documented and supported format.

- Use tools like NVIDIA Triton, vLLM, or Outlines. They have built-in constraint engines.

- Don’t over-constrain. If your schema allows 10 types of dates, but you only need one, simplify it.

- Test with real data. A schema that works on examples might fail on edge cases.

- Monitor output quality. Just because it’s valid doesn’t mean it’s correct. A valid JSON with the wrong values is still wrong.
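The first and last tips can be sketched together. The first part writes the shorthand schema from earlier as standard JSON Schema. Engines such as vLLM and Outlines accept JSON Schema in some form; check their documentation for how to pass it. The second part is a post-generation check for output that is structurally valid but wrong. The rules in `semantic_issues` are illustrative assumptions, not a standard.

```python
import json

# Standard JSON Schema for the shorthand {"name": "string", "age": "integer",
# "hobbies": ["string"]} used earlier in this article.
PERSON_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
        "hobbies": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["name", "age", "hobbies"],
    "additionalProperties": False,
}


def semantic_issues(record: dict, source_text: str) -> list[str]:
    """Hypothetical plausibility checks for output that already passed the schema."""
    issues = []
    if record["name"] not in source_text:
        issues.append("name does not appear in the source text")
    if not 0 <= record["age"] <= 130:
        issues.append("age is outside a plausible range")
    return issues


source = "Support chat: John, 30, mentioned he plays chess."
# Schema-valid output that is still wrong.
output = json.loads('{"name": "Jon", "age": 300, "hobbies": ["chess"]}')
print(semantic_issues(output, source))
# ['name does not appear in the source text', 'age is outside a plausible range']
```

The schema guarantees the shape. Only checks against the source data can catch the wrong values.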

Community support is growing. Hugging Face has over 5,200 members in dedicated channels. GitHub repos for constrained decoding tools get 15-20 updates a week. Documentation varies: NVIDIA’s is 427 pages long. Open-source options are shorter but less polished.

The Future: Adaptive Constraints

The next wave isn’t just static constraints. It’s adaptive ones. Imagine a system that knows when to be strict and when to loosen up. For example:

- When generating a medical diagnosis: strict schema

- When generating a patient summary: flexible phrasing

Researchers at Stanford and DeepMind are already testing systems that adjust constraint strictness based on context, model size, and even user feedback. That’s where this is headed.

By 2027, Gartner predicts 95% of enterprise LLM deployments will use constrained decoding in some form. It’s becoming standard infrastructure, not a hack.

But the core truth remains: structure and creativity don’t mix well. Constrained decoding isn’t about making LLMs smarter. It’s about making them obedient. And sometimes, that’s exactly what you need.

Does constrained decoding guarantee 100% valid output?

Yes, if the constraint grammar is correctly defined. Constrained decoding blocks any token that would violate the structure, so malformed JSON, invalid regex matches, and schema violations can’t occur during generation. One caveat: if generation stops early (for example, at a maximum token limit), you get a valid but incomplete prefix. Otherwise, the output is structurally valid by design.

Does it slow down LLM generation?

Yes, but only slightly, typically 5-8% slower. The overhead comes from checking each token against the constraint grammar. However, in some cases it can speed things up by skipping unnecessary token choices, like boilerplate punctuation.

Should I use it with large models like GPT-4 or Llama 3 70B?

Not always. Large models often perform better unconstrained when given enough examples. Constrained decoding can reduce their semantic quality because it forces them away from their natural output distribution. Use it with large models only if structural validity is non-negotiable and you’ve tested that the trade-off is worth it.

Can I use constrained decoding with any LLM?

Technically yes, but support varies. Tools like NVIDIA Triton, vLLM, and Hugging Face’s Text Generation Inference have built-in support. Open-source libraries like Outlines work with many models, but require manual setup. You need access to the token-level generation process, which API-only services like OpenAI’s don’t expose; some of those providers offer their own built-in structured-output modes instead.

What’s the difference between constrained decoding and post-processing?

Post-processing fixes output after it’s generated, for example by using a JSON parser to catch errors. Constrained decoding prevents errors before they happen by blocking invalid tokens during generation. Post-processing can’t fix everything; sometimes the output is completely unusable. Constrained decoding ensures every output is valid from the start.

Is constrained decoding only for JSON?

No. It works with any structured format: regex patterns, XML, YAML, custom grammars, and even programming languages. The constraint system just needs to define what’s valid at each step. JSON is the most common because it’s widely used in APIs and data exchange.

Why do instruction-tuned models perform worse with constraints?

Instruction-tuned models are trained to sound natural, not to follow rigid rules. They’ve learned to avoid repetitive structures, use synonyms, and add conversational fluff. Constraints force them into a box they weren’t trained for, which reduces their accuracy. Base models, which haven’t been optimized for natural language, adapt better to strict formats.

Can I combine constrained decoding with few-shot examples?

Yes, and it often helps. Constrained decoding works best with clear structure, but adding a few examples can improve semantic accuracy. Research shows constrained models benefit more from examples than unconstrained ones, especially for complex schemas.

Henry Kelley

16 January, 2026 - 22:00

Been there. Tried to get an LLM to spit out JSON for a payroll system and ended up with 17 failed deploys in a week. Constrained decoding saved my sanity. Now I just define the schema and let it run. No more "{name: John, age: 30}" nonsense. It just works.

Also, the part about training data being full of messy JSON? 100%. I’ve seen models copy-paste XML into JSON because that’s what they saw in Stack Overflow threads.

Victoria Kingsbury

17 January, 2026 - 19:54

Love this breakdown. Constrained decoding isn’t just a technical fix; it’s a philosophical shift. We’re not asking the model to be a poet anymore, we’re asking it to be a machine. And honestly? It’s way more reliable when it stops trying to be cute.

Also, the 38.2% stat? That’s the silent killer in enterprise AI. Nobody talks about it because everyone just assumes their JSON is fine until the system crashes at 3 AM. Thanks for calling it out.

Tonya Trottman

17 January, 2026 - 22:47

Oh good, another article that treats LLMs like they’re toddlers who just need a time-out. Let me guess-you also think putting guardrails on a highway means the car doesn’t need a driver? 🙄

Constrained decoding doesn’t fix the core issue: LLMs don’t understand structure. They just mimic it under pressure. It’s like teaching a parrot to say ‘valid JSON’ while it’s still confused about what a comma is.

Also, ‘mathematically, it looks like this: p(xi | x’… you literally cut off the equation. Are you serious right now? Did you copy-paste this from a draft and forget to finish? Classic.

Rocky Wyatt

19 January, 2026 - 05:49 AM

Y’all are acting like this is some groundbreaking revelation. It’s not. We’ve been doing this for years with grammar checkers and code linters. The fact that people are surprised LLMs can’t just magically generate perfect JSON says more about how little we expect from AI than how hard the problem is.

Also, if your system crashes because of a missing quote, maybe your architecture is trash. Stop relying on LLMs to be your data validator. Build a real pipeline. Use a schema. Validate. Test. Deploy. Done.

This post feels like someone selling snake oil and calling it ‘AI innovation’.

Santhosh Santhosh

20 January, 2026 - 16:03

I work in a healthcare data pipeline where even a single misplaced bracket in a JSON response can trigger a false alarm in patient monitoring systems. We tried post-processing, regex fixes, even custom parsers-but nothing was reliable until we implemented constrained decoding with JSON Schema validation at the token level.

It’s not perfect, but the error rate dropped from 36% to under 2%. That’s not just a technical win-it’s a patient safety win. The model still generates the content naturally, but it’s like having a silent co-pilot that only lets it type valid syntax. No more midnight alerts because the model thought "null" should be "nill".

Also, the training data point is so true. I’ve seen models generate JSON with keys like "user_id" and "userID" in the same object because they saw both in GitHub repos. It’s not stupidity-it’s statistical noise. Constraining the output doesn’t suppress creativity; it just prevents it from becoming dangerous.

People think AI should be free, but freedom without structure is chaos. We don’t want a poet in the ICU. We want a precise instrument. This is how you build that.