Imagine running a chatbot that answers customer questions. It works great, until one day a single user sends a 50,000-word prompt. Your system churns through 2.8 million tokens in 17 minutes. When the bill arrives, it’s $1,120, all from one mistake. This isn’t science fiction. It’s what happened to a startup in Austin last year, and it’s happening to companies every day.

Large language models (LLMs) are powerful, but they don’t come with price tags you can guess. You pay per token, not per request or per minute. A token is roughly a word or part of a word. Input tokens (what you send in) cost less than output tokens (what the model generates). Alibaba Cloud’s Qwen-Flash charges $0.05 per million input tokens and $0.40 per million output tokens for requests with shorter inputs. But when a single request’s context stretches toward 1 million tokens, the price jumps to $0.25 and $2.00. One wrong setting, and your AI budget vanishes in hours.
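A rough cost estimator makes the tier jump visible. This Python sketch uses the Qwen-Flash prices quoted above; the 256,000-token tier boundary is an assumption about where the higher rate starts, so check the provider’s current price sheet before relying on it.

```python
# Qwen-Flash prices from the text, in dollars per 1 million tokens.
# ASSUMPTION: the higher tier applies when a single request's input
# exceeds TIER_THRESHOLD tokens; verify against the provider's price sheet.
TIER_THRESHOLD = 256_000

PRICES = {
    # tier: (input $ per 1M tokens, output $ per 1M tokens)
    "standard": (0.05, 0.40),
    "long_context": (0.25, 2.00),
}


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the dollar cost of one request under tiered pricing."""
    tier = "long_context" if input_tokens > TIER_THRESHOLD else "standard"
    in_price, out_price = PRICES[tier]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    print(f"${request_cost(2_000, 500):.6f}")
    print(f"${request_cost(900_000, 4_000):.4f}")
```

With these numbers, a request with 2,000 input and 500 output tokens costs $0.0003, while a 900,000-token input with a 4,000-token reply costs about $0.233, because every token is billed at five times the rate once the request crosses the boundary.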

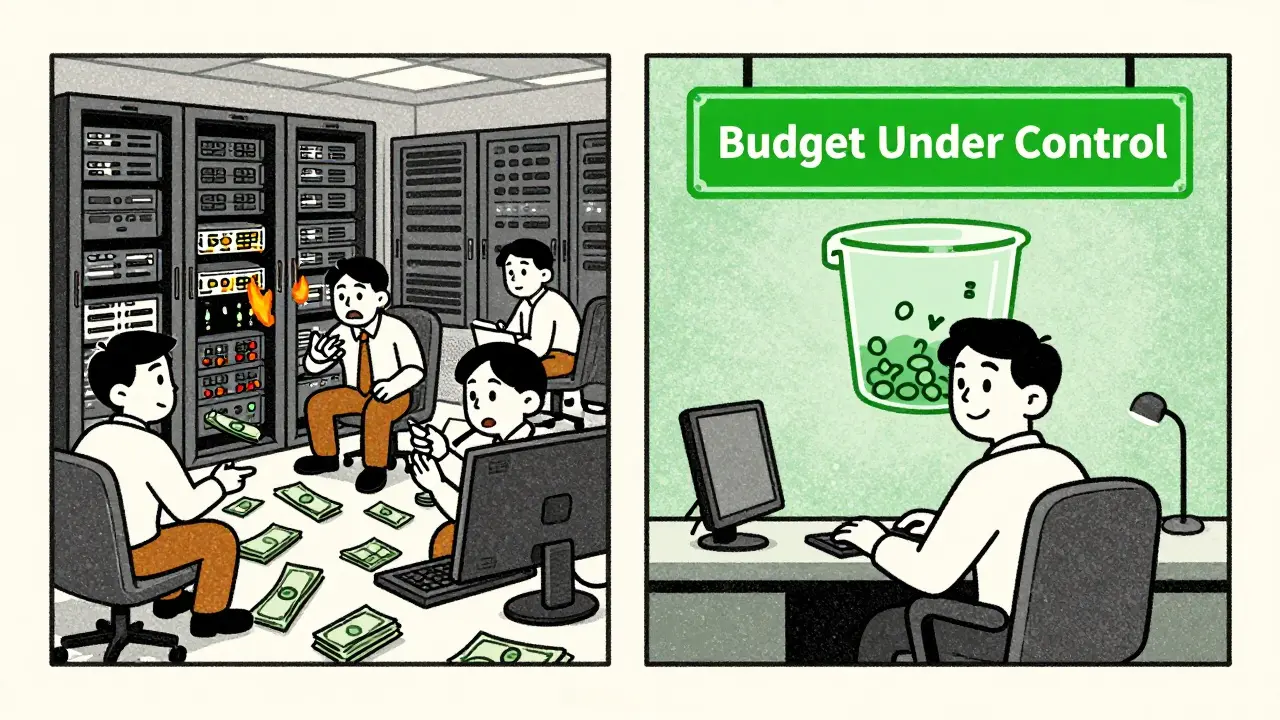

Why Token Budgets Are No Longer Optional

Before 2023, most teams treated LLM costs like electricity: something you just pay for. But as usage exploded, so did the surprises. In 2024, only 29% of enterprises used any kind of spending limit. By January 2026, that number had jumped to 83%. Why? Because without controls, LLM usage can run wild. A marketing team might use a model to generate 10,000 product descriptions. At roughly 500 tokens each, that’s 5 million output tokens. At $0.40 per million output tokens, the batch itself costs only about $2. The danger is what happens without a cap: the same job looping or retrying unattended, or running on a pricier model, multiplies that figure quickly, and nobody notices until the invoice arrives.
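Worked out explicitly, using the Qwen-Flash output price quoted earlier and the roughly 500 tokens per description implied by the totals:

```latex
\begin{aligned}
10{,}000 \times 500 &= 5{,}000{,}000 \text{ output tokens} \\
5{,}000{,}000 \times \frac{\$0.40}{1{,}000{,}000} &= \$2.00
\end{aligned}
```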

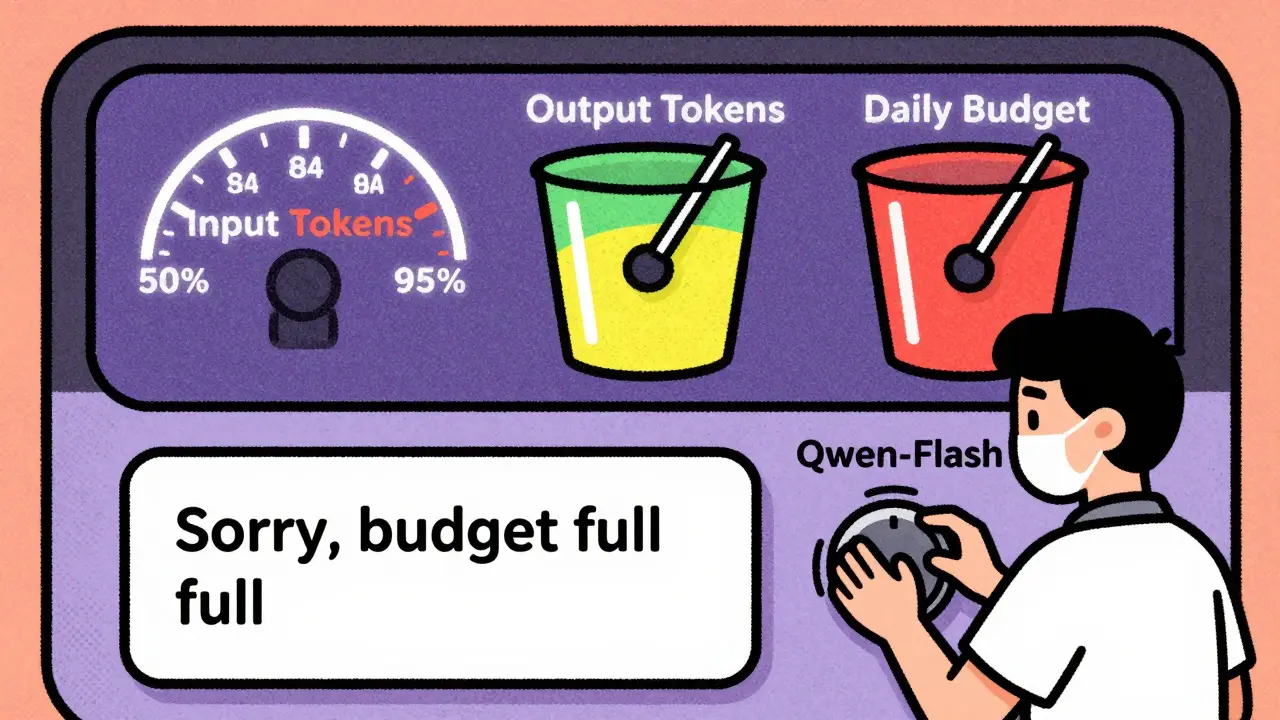

Token budgets fix this. They’re not just limits; they’re automated guards. Set a daily budget of $100. When spending hits $95, the system warns you. At $99, it slows down responses. At $100, it blocks new requests until tomorrow. No more shocks. No more frantic calls to finance.
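A minimal Python sketch of that daily guard, assuming the cost of each request is already known in dollars and spend is held in memory. DailyBudget and Action are illustrative names, not a library API; a real deployment would persist spend across restarts.

```python
from datetime import date
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    THROTTLE = "throttle"
    BLOCK = "block"


class DailyBudget:
    """Dollar-denominated daily budget with warn/throttle/block thresholds.

    Defaults mirror the $95 / $99 / $100 example in the text.
    """

    def __init__(self, limit=100.0, warn_at=95.0, throttle_at=99.0):
        self.limit = limit
        self.warn_at = warn_at
        self.throttle_at = throttle_at
        self.day = date.today()
        self.spent = 0.0

    def _roll_over(self, today):
        # A new calendar day resets spend, so blocking lasts "until tomorrow".
        if today != self.day:
            self.day = today
            self.spent = 0.0

    def check(self, today=None):
        """Decide what to do with the next request, before sending it."""
        self._roll_over(today or date.today())
        if self.spent >= self.limit:
            return Action.BLOCK
        if self.spent >= self.throttle_at:
            return Action.THROTTLE
        if self.spent >= self.warn_at:
            return Action.WARN
        return Action.ALLOW

    def record(self, cost, today=None):
        """Add the actual cost of a completed request."""
        self._roll_over(today or date.today())
        self.spent += cost
```

The check happens before each request and the record after it, so a single large request can push spend slightly past the limit; the next request is then blocked.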

How Token Budgets Actually Work

Token budgeting isn’t magic. It’s engineering. Here’s how it breaks down:

- Prompt token limits: Stops users from pasting novels into your chatbot. Most systems cap this at 8,000-32,000 tokens per request.

- Output token limits: Prevents the model from writing novels in reply. A 500-token limit keeps answers concise and cheap.

- Per-period total limits: A hard cap on total tokens used per hour, day, or month. Think of it like a phone plan: 5 million tokens/month.

- Concurrency caps: Limits how many requests can run at once. If 10 users hit your bot simultaneously, you don’t want them all maxing out the model.

These aren’t just settings; they’re enforced by rate-limiting algorithms. The most common is the token bucket: imagine a bucket that refills with 60,000 tokens every minute, up to its capacity. Each request drains it by the tokens it uses. When it’s empty, you wait. Another method, the leaky bucket, queues incoming requests and drains them at a steady rate, absorbing bursts so spikes don’t overwhelm the backend.
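A token bucket sized in LLM tokens takes only a few lines. This Python sketch assumes the 60,000-tokens-per-minute rate above and a bucket capacity of the same size; the clock parameter is injectable only so the behavior can be tested deterministically. A leaky bucket would instead hold requests in a queue and release them at a fixed rate.

```python
import time


class TokenBucket:
    """Token-bucket limiter measured in LLM tokens rather than requests.

    capacity: most tokens the bucket can hold (the largest allowed burst).
    refill_per_sec: steady refill rate, e.g. 60_000 / 60 for 60,000 tokens/minute.
    """

    def __init__(self, capacity, refill_per_sec, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.tokens = float(capacity)  # start full
        self.last = clock()

    def _refill(self):
        # Add tokens for the time elapsed since the last call, capped at capacity.
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)

    def try_consume(self, n):
        """Drain n tokens if available; otherwise leave the bucket untouched."""
        self._refill()
        if n <= self.tokens:
            self.tokens -= n
            return True
        return False

    def wait_time(self, n):
        """Seconds until n tokens will be available (inf if n > capacity)."""
        self._refill()
        if n > self.capacity:
            return float("inf")
        return max(0.0, (n - self.tokens) / self.refill_per_sec)
```

For example, TokenBucket(60_000, 60_000 / 60) lets a single 50,000-token request through immediately, then makes a following 20,000-token request wait 10 seconds while the bucket refills.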

Tools like KrakenD and OneUptime take this further. KrakenD can automatically switch your request from an expensive model (Qwen-Max) to a cheaper one (Qwen-Flash) when your budget hits 85%. OneUptime adds graduated responses: at 50% usage, an email alert; at 80%, a Slack message; at 95%, slower responses; at 100%, everything is blocked. It’s like a thermostat for your AI spending.
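The same ideas can be expressed as a small routing policy in application code. This is not KrakenD or OneUptime configuration; it’s a hypothetical Python sketch that reuses the 85% downgrade threshold and the 50/80/95/100% ladder described above, with the model identifiers as placeholders.

```python
# Placeholder model identifiers; substitute the names your provider uses.
PREMIUM_MODEL = "qwen-max"
BUDGET_MODEL = "qwen-flash"
DOWNGRADE_AT = 0.85  # switch to the cheaper model at 85% of budget

# (fraction of budget used, response), checked from highest to lowest.
LADDER = [
    (1.00, "block"),
    (0.95, "throttle"),
    (0.80, "slack_alert"),
    (0.50, "email_alert"),
]


def route(spent: float, budget: float, wanted: str = PREMIUM_MODEL):
    """Return (model, response) for the next request given spend so far."""
    used = spent / budget
    model = BUDGET_MODEL if used >= DOWNGRADE_AT else wanted
    response = "none"
    for threshold, action in LADDER:
        if used >= threshold:
            response = action  # highest threshold crossed wins
            break
    return model, response
```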

Real-World Budgets: What Works

There’s no one-size-fits-all budget. But based on real deployments, here’s what successful teams do:

- Start low: Test your app with a 500-token cap per request. Measure how much it uses in a week, then set your budget at 2x that.

- Split by user or feature: A fintech company tracked tokens per customer. They found 3% of users were using 40% of the budget. They capped those users. Costs dropped 63%.

- Use graduated thresholds: Don’t just block at 100%. Use alerts at 50%, throttling at 80%, and blocking at 95%. That gives you time to react.

- Track context waste: If your chatbot keeps re-sending the last 20 messages, you’re burning tokens. Summarizing older history instead can cut 30-40% off your bill.
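One way to act on the last point: keep the newest messages that fit a token budget and hand the older ones to a summarizer. This Python sketch is illustrative; the count_tokens callable stands in for your tokenizer, and the summarization call itself is not shown.

```python
from typing import Callable, List, Tuple


def trim_history(
    messages: List[str],
    max_tokens: int,
    count_tokens: Callable[[str], int],
) -> Tuple[List[str], List[str]]:
    """Split chat history into (older messages to summarize, recent messages to resend).

    Walks backward from the newest message and keeps as many as fit in
    max_tokens; everything older is returned separately so it can be
    condensed into a short summary by a model call (not shown here).
    """
    kept: List[str] = []
    used = 0
    for msg in reversed(messages):
        cost = count_tokens(msg)
        if used + cost > max_tokens:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    older = messages[: len(messages) - len(kept)]
    return older, kept
```

Resending one short summary plus the recent window, rather than the full transcript, is where the savings come from.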

One healthcare startup scaled from $500 to $50,000/month in LLM costs-without going over budget. How? They set per-user message limits (max 10 messages/hour) and capped output to 300 tokens. No one noticed. The system just worked.
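The startup’s two rules can be approximated with a sliding-window counter per user. This is an illustrative in-memory sketch, not their implementation; PerUserLimiter and MAX_OUTPUT_TOKENS are hypothetical names, and the injectable clock exists only to make testing easy.

```python
import time
from collections import defaultdict, deque


class PerUserLimiter:
    """Sliding-window cap on messages per user, e.g. 10 per hour."""

    def __init__(self, max_messages=10, window_sec=3600.0, clock=time.monotonic):
        self.max_messages = max_messages
        self.window_sec = window_sec
        self.clock = clock
        self.history = defaultdict(deque)  # user_id -> timestamps of accepted messages

    def allow(self, user_id: str) -> bool:
        now = self.clock()
        stamps = self.history[user_id]
        # Forget messages that have slid out of the window.
        while stamps and now - stamps[0] >= self.window_sec:
            stamps.popleft()
        if len(stamps) >= self.max_messages:
            return False
        stamps.append(now)
        return True


# Pass this as the max-tokens setting on every model call.
MAX_OUTPUT_TOKENS = 300
```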

Where Teams Fail

Most failures aren’t technical; they’re blind spots.

- Ignoring model tiers: Using Qwen-Max for simple tasks is like using a sports car to deliver groceries. Qwen-Flash handles 80% of use cases at one-fifth the cost.

- Not tracking free quotas: Alibaba Cloud says free tokens are shared across all users under one account. One team thought they had 10 million free tokens. They didn’t. Someone else used them.

- Forgetting tool use: If your LLM calls APIs, runs code, or searches the web, that costs extra. Some systems now charge more for these actions because they use more resources.

- Waiting too long to act: 78% of teams say they were shocked by their first bill. The fix? Start budgeting before you launch.

One enterprise spent 3 weeks just integrating token tracking into their billing system. Another didn’t bother-and ended up paying $28,000 in one month for a bot that should’ve cost $1,200.

Who’s Doing It Right

Finance and healthcare lead adoption. Why? They have strict budgets and audits. Marketing lags. Why? They think AI is "free creativity." It’s not.

Companies using token budgets see real results:

- 63% cost reduction after per-user tracking (Traceloop)

- 92% of users say budget caps prevented "catastrophic overruns" (G2 reviews)

- 83% of enterprises now use some form of token control (OneUptime survey)

And it’s getting smarter. KrakenD’s 2025 update lets you route requests based on budget health. If your Qwen-Max budget is low, it shifts traffic to Qwen-Plus. OneUptime’s 2026 update even charges more for long context windows, because they cost more to process.

Getting Started: 4 Steps

You don’t need a team of engineers. Here’s how to begin:

- Install a token counter: Use your API gateway (Nginx, Kong, KrakenD) to count tokens on every request. Expect setup to take 1-2 weeks in most cases.

- Run a trial: Let your app run for 3-5 days without limits. Record total tokens used. That’s your baseline.

- Set thresholds: Start with a monthly budget. Set alerts at 50%, throttling at 80%, and blocking at 95%.

- Assign ownership: Who’s responsible? Finance? Engineering? Make it clear. Charge back costs to teams using the most tokens.
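The four steps can be sketched together: record usage from each response, derive a monthly budget from the trial baseline (with the 2x headroom suggested earlier and an assumed 30-day month), compute the 50/80/95% thresholds, and attribute cost per team for chargeback. The response shape is an assumption (an OpenAI-compatible usage object), and UsageLedger, monthly_budget, and thresholds are hypothetical helpers, not part of any gateway.

```python
from collections import defaultdict


class UsageLedger:
    """Accumulate token usage per team from chat-completion responses.

    ASSUMPTION: each response carries a "usage" object with "prompt_tokens"
    and "completion_tokens", as OpenAI-compatible endpoints typically do.
    """

    def __init__(self):
        self.tokens = defaultdict(lambda: [0, 0])  # team -> [input, output]

    def record(self, team: str, response: dict) -> None:
        usage = response.get("usage", {})
        totals = self.tokens[team]
        totals[0] += usage.get("prompt_tokens", 0)
        totals[1] += usage.get("completion_tokens", 0)

    def cost_by_team(self, in_price: float, out_price: float) -> dict:
        """Dollar cost per team, given $ per million input/output tokens."""
        return {
            team: (inp * in_price + out * out_price) / 1_000_000
            for team, (inp, out) in self.tokens.items()
        }


def monthly_budget(trial_tokens: int, trial_days: int, headroom: float = 2.0) -> int:
    """Project a trial baseline to a 30-day month and multiply by headroom."""
    return round(trial_tokens / trial_days * 30 * headroom)


def thresholds(budget: int) -> dict:
    """Alert, throttle, and block points at 50%, 80%, and 95% of the budget."""
    return {
        "alert": budget * 50 // 100,
        "throttle": budget * 80 // 100,
        "block": budget * 95 // 100,
    }
```

For instance, a 3-day trial that uses 600,000 tokens projects to 6 million tokens a month; with 2x headroom the budget is 12 million, with blocking at 11.4 million.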

Don’t wait for a $10,000 bill. Start now. Even a simple cap of 1 million tokens/month will stop the worst overruns.

The Future Is Controlled Spending

Gartner predicts that by 2027, 95% of enterprise AI deployments will include token budgeting. Why? Because uncontrolled LLM use costs 227% more on average. Forrester says cost management will be the #2 concern after security by 2027.

This isn’t about cutting corners. It’s about using AI sustainably. You wouldn’t let your team print 10,000 pages without a printer limit. Why let them burn through tokens?

Token budgets turn AI from a wild card into a predictable tool. And that’s the only way to scale without going broke.