Generative AI doesn’t just appear out of nowhere. Behind every AI-generated image, text, or video is a set of core technologies that make it possible. Three architectures dominate the field: Transformers, Diffusion Models, and GANs. Each one solves a different problem, has its own strengths and weaknesses, and powers most of the AI tools you use today. You don’t need a PhD to understand how they work - just a clear breakdown of what they do, how they’re different, and why they matter.

Transformers: The Engine Behind ChatGPT and Beyond

Transformers changed everything in AI. Before 2017, most language models were recurrent networks that processed text sequentially - one word at a time. That made them slow to train and bad at keeping track of context across long sentences. Then came the paper ‘Attention Is All You Need’ from Google researchers. It built a whole architecture around a simple but powerful idea: let the model pay attention to every word in a sentence at once. That’s the self-attention mechanism.

Instead of reading text like a person, Transformers scan the whole thing in parallel. They ask: Which words matter most for this one? If you say, ‘The cat sat on the mat because it was tired,’ the model figures out that ‘it’ refers to ‘cat,’ not ‘mat.’ That’s attention in action.
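To make the idea concrete, here is a minimal NumPy sketch of scaled dot-product self-attention for a single sequence. The embeddings and projection matrices are random stand-ins (an assumption for illustration), so the attention weights will not actually link ‘it’ to ‘cat’ the way a trained model would; the point is only the shape of the computation, where every token is scored against every other token in one matrix product.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max before exponentiating for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention for one sequence.

    x: (seq_len, d_model) token embeddings
    w_q, w_k, w_v: (d_model, d_head) projection matrices
    Returns (output, weights); weights[i, j] is how much token i attends to token j.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # all token pairs scored at once
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = ["The", "cat", "sat", "on", "the", "mat", "because", "it", "was", "tired"]
d_model, d_head = 16, 8                       # illustrative sizes
x = rng.normal(size=(len(tokens), d_model))   # random stand-in embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(weights.shape)  # one row of attention weights per token
```

In a trained model, the row of `weights` for ‘it’ would put most of its mass on ‘cat’. Real Transformers run many such attention heads in parallel and stack them in layers.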

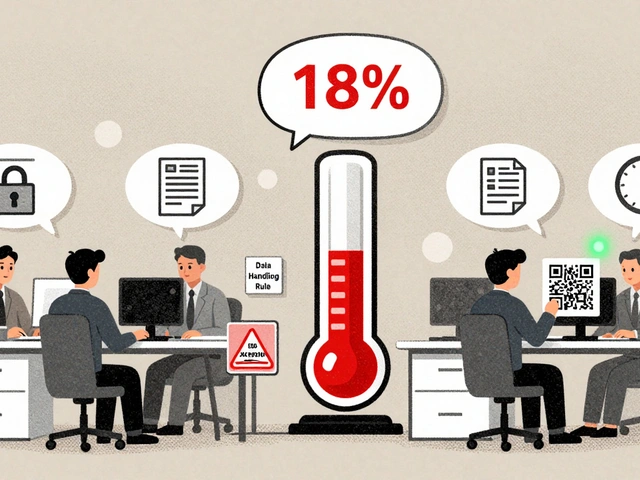

Modern versions like GPT-4 and Gemini are huge. GPT-4 is reported, though not officially confirmed, to have around 1.8 trillion parameters. Training a model at that scale is estimated to take about 16,000 NVIDIA A100 GPUs and about 50 gigawatt-hours of electricity - roughly what 4,700 American households use in a year, assuming about 10,500 kWh per household. That’s expensive. But it works. Transformers handle text better than anything else. GPT-4 scores 86.4% on the MMLU benchmark, a multiple-choice test covering 57 academic and professional subjects. BERT beats older models by 3.5 points on NLP tasks. That’s why almost every chatbot, summarizer, and translation tool uses them.

But they’re not perfect. Transformers get slow with long texts. Standard attention compares every token with every other token, so memory grows with the square of the input length: feed them 8,000 words and usage spikes. Developers on Reddit report frequent out-of-memory crashes during fine-tuning. Running costs are high too: a company like yours might spend $18,000 a month just serving a customer service bot. The fix? Quantization. Storing weights as 8-bit integers instead of 32-bit floats shrinks the model by 75% with little loss in accuracy. Or use caching - store common answers so you don’t recompute them.
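One common way to reach a 75% reduction is to store 32-bit float weights as 8-bit integers. The sketch below assumes that scheme (simple symmetric, per-tensor quantization) and uses a random matrix as a stand-in for real model weights; production toolkits use more refined variants.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization of float32 weights to int8."""
    scale = np.abs(weights).max() / 127.0          # one scale for the whole tensor
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float32 weights from int8 values."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(scale=0.02, size=(1024, 1024)).astype(np.float32)  # stand-in weights

q, scale = quantize_int8(w)
saving = 1 - q.nbytes / w.nbytes                   # int8 is 1 byte, float32 is 4
max_err = np.abs(w - dequantize(q, scale)).max()   # worst-case rounding error

print(f"size reduction: {saving:.0%}")             # 75%
print(f"max abs error:  {max_err:.6f}")
```

The rounding error per weight is bounded by half of `scale`, which is why accuracy usually drops only slightly.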

Diffusion Models: How AI Creates Photorealistic Images

Ever seen an AI image that looks too real? That’s probably a Diffusion Model. Unlike GANs, which produce an image in a single pass, Diffusion Models work like a reverse puzzle.

Imagine taking a photo and slowly adding noise - like TV static - until it’s just a mess of pixels. That’s the forward process. The model is trained to undo it, step by step. To generate an image, it starts with pure random noise and, at each step, predicts which part of the picture is noise and strips a little of it away, steered by the prompt (say, ‘a cat’). It repeats this over 50 to 1,000 steps. The result? Highly detailed, realistic pictures.
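The forward (noising) half of that process is simple enough to sketch in a few lines. This version assumes the linear noise schedule and closed-form noising step popularized by the DDPM line of work; the schedule values and the random "image" are illustrative, not taken from any specific product.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule; returns the cumulative signal fraction per step."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)   # alpha_bar[t]: how much original signal is left

def add_noise(x0, t, alpha_bar, rng):
    """Jump straight to step t of the forward process."""
    noise = rng.normal(size=x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return xt, noise

rng = np.random.default_rng(0)
alpha_bar = make_schedule()
image = rng.uniform(-1, 1, size=(64, 64, 3))   # stand-in for a real photo

for t in (0, 250, 999):
    noisy, noise = add_noise(image, t, alpha_bar, rng)
    print(f"step {t}: signal fraction {alpha_bar[t]:.4f}")
```

During training, a network is shown `noisy` and `t` and asked to predict `noise`. Generation then runs the other way: start from pure noise and repeatedly subtract the predicted noise.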

Stable Diffusion XL, released in 2023, scores a Fréchet Inception Distance (FID) of 1.68 on the FFHQ dataset, a widely used benchmark of face photos. FID measures how close generated images are to real ones, and lower is better, so that beats StyleGAN3’s 2.15. In blind tests, 78% of people can’t tell if a Diffusion-generated image is real. Adobe’s Firefly, built on this tech, has over 1.2 million enterprise users. Companies use it for product mockups, marketing visuals, and even medical imaging.
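For readers curious what an FID number actually measures, here is a small sketch of the underlying formula: the Fréchet distance between two Gaussians fitted to image features. A real FID evaluation extracts those features with an Inception network; the random feature arrays below are stand-ins so the example stays self-contained.

```python
import numpy as np

def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    diff = mu1 - mu2
    # Tr((sigma1 sigma2)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of sigma1 @ sigma2, which are real and non-negative for
    # covariance matrices (up to floating-point error).
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_sqrt)

def fid_from_features(real_feats, fake_feats):
    """Fit a Gaussian to each feature set, then compare the two."""
    mu_r, mu_f = real_feats.mean(axis=0), fake_feats.mean(axis=0)
    sigma_r = np.cov(real_feats, rowvar=False)
    sigma_f = np.cov(fake_feats, rowvar=False)
    return frechet_distance(mu_r, sigma_r, mu_f, sigma_f)

rng = np.random.default_rng(0)
real = rng.normal(size=(2000, 8))            # stand-in "real image" features
close = rng.normal(size=(2000, 8))           # same distribution as real
far = rng.normal(loc=0.5, size=(2000, 8))    # shifted distribution

print("close:", round(fid_from_features(real, close), 3))
print("far:  ", round(fid_from_features(real, far), 3))
```

The closer the generated features sit to the real ones, the smaller the score, which is why a lower FID indicates more realistic images.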

But there’s a catch: speed. Generating one image takes 12-15 seconds on a high-end GPU. That’s fine for a designer, not for a video game. To fix this, newer versions like Stable Diffusion 3 cut steps down to 20 without losing quality. Techniques like knowledge distillation help too - training a smaller model to mimic the big one. Still, you need an A100 with 40GB VRAM to run them well. And they’re hungry for data: Stable Diffusion was trained on 2.3 billion image-text pairs. GANs? Only 450 million.

Experts like Caltech’s Anima Anandkumar say Diffusion Models solved the mode collapse problem that haunted GANs for years. But they’re inefficient. MIT’s 2025 outlook gives them an 85% chance of staying dominant through 2035 - not because they’re perfect, but because nothing else matches their quality.

GANs: The Original AI Artists - Still Alive in Niche Areas

Generative Adversarial Networks, or GANs, were the first big breakthrough in AI image generation. Introduced in 2014 by Ian Goodfellow and colleagues, they work like a game between two AI models: a generator and a discriminator.

The generator tries to make fake images. The discriminator tries to spot fakes. They keep playing - the generator gets better at fooling, the discriminator gets better at catching. Eventually, the generator produces images so real the discriminator can’t tell the difference.
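The game can be written down as two loss functions. This sketch uses the standard binary cross-entropy form with the common "non-saturating" generator loss; the discriminator scores are made-up numbers standing in for the outputs of real networks.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Cross-entropy: real images should score near 1, fakes near 0."""
    return float(-np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps)))

def generator_loss(d_fake, eps=1e-12):
    """Non-saturating loss: low when the discriminator scores fakes near 1."""
    return float(-np.mean(np.log(d_fake + eps)))

# Each value is the discriminator's estimated probability that an image is real.
early_real, early_fake = np.array([0.90, 0.95]), np.array([0.05, 0.10])  # fakes are obvious
late_real, late_fake = np.array([0.50, 0.50]), np.array([0.50, 0.50])    # can't tell anymore

print("early: D loss", round(discriminator_loss(early_real, early_fake), 3),
      "G loss", round(generator_loss(early_fake), 3))
print("late:  D loss", round(discriminator_loss(late_real, late_fake), 3),
      "G loss", round(generator_loss(late_fake), 3))
```

Early in training the discriminator wins easily, so its loss is low and the generator’s is high. At the balance point the discriminator outputs 0.5 for everything, which is the "can’t tell the difference" state described above. Training alternates gradient steps on the two losses.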

StyleGAN3, developed by NVIDIA in 2021, can generate 1024x1024 images in 0.8 seconds. That’s 15-20 times faster than Diffusion Models. That’s why GANs still power real-time video tools like NVIDIA Maxine. Streamers use it to remove background noise or enhance faces on low-end hardware. Gaming studios rely on GANs for live character animation.

But GANs are unstable. Mode collapse happens in 63% of standard implementations - the generator stops creating variety and just spits out the same few images over and over. Developers on Reddit say they abandoned GANs after months of tuning. Techniques like mini-batch discrimination help, but they add 35% more training time.

Market share has dropped. GANs now make up only 3% of commercial generative AI applications, down from 20% in 2021. But they’re not dead. NVIDIA’s GANformer2, released in late 2024, blends GAN speed with Transformer attention. It cuts mode collapse by 40% and hits 25 FPS in video generation. Startups are also exploring physics-informed GANs - simulating fluid dynamics or protein folding. If you need speed, realism, and low latency, GANs still win.

How They Compare: Speed, Quality, and Cost

Choosing between these three isn’t about which is ‘best.’ It’s about what you need.

| Feature | Transformers | Diffusion Models | GANs |
|---|---|---|---|
| Primary Use | Text generation, translation, summarization | High-fidelity image and video generation | Real-time video, gaming assets, low-latency apps |
| Speed (per output) | Fast for text (milliseconds) | Slow: 12-15 sec per image | Fast: 0.8 sec per image |
| Image Quality (FID, lower is better) | N/A | 1.68 (Stable Diffusion XL) | 2.15 (StyleGAN3) |
| Mode Collapse Risk | N/A | 4% | 27% |
| Training Data Needed | 100B+ tokens | 2.3B image-text pairs | 450M image pairs |
| Hardware Requirements | 370GB VRAM for base models | 40GB VRAM minimum (A100) | 24GB VRAM (RTX 4090 sufficient) |
| Market Share (2024) | 58% | 31% | 3% |

Transformers dominate because text is the backbone of most AI apps. Diffusion Models are rising fast - they’re the new standard for visuals. GANs are shrinking, but they’re still the go-to for real-time use cases where every millisecond counts.

What’s Next? Hybrid Models Are Taking Over

The future isn’t about picking one. It’s about mixing them.

Google’s Gemini 1.5 combines Transformers with diffusion techniques to generate images faster without losing detail. Stability AI’s SD3 uses a transformer inside the diffusion process to cut inference steps from 1,000 to 20. NVIDIA’s GANformer2 adds attention mechanisms to GANs to reduce mode collapse.

82% of AI researchers predict these lines will blur by 2027. You won’t say, ‘This is a GAN’ - you’ll say, ‘This is an AI-generated image, made with a hybrid model.’

Even the training process is changing. Google’s Project Astra aims to cut Transformer training costs by 70% using sparse attention. Stability AI is working on real-time diffusion - targeting 10ms per image by 2026. NVIDIA’s Maxine Edge will bring GANs to smartphones with sub-100ms generation.

But not everyone’s optimistic. Yann LeCun says diffusion models are inefficient and will be replaced by energy-based models. Emily Bender warns about the environmental cost - by one widely cited estimate, training a single large Transformer can emit as much carbon as five cars do over their lifetimes.

Still, the tools are here. And they’re getting better, faster, and cheaper.

What Should You Use?

Here’s how to decide:

- Need to generate text? Use a Transformer. GPT-4, Claude, Gemini - they’re all based on this.

- Need high-quality images or video frames? Go with Diffusion Models. Stable Diffusion, Midjourney, DALL·E 3.

- Need real-time video, live avatars, or gaming assets? Stick with GANs. NVIDIA Maxine, StyleGAN3.

Most companies use more than one. A marketing team might use a Transformer to write ad copy, then a Diffusion Model to generate the visuals, then a GAN to animate the character in the video.
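The checklist above can be sketched as a tiny lookup. Everything here (the task names, `RECOMMENDED`, `plan_pipeline`) is hypothetical and exists only to illustrate the marketing-team workflow; a real decision would also weigh cost, latency, and hardware.

```python
# Hypothetical lookup that mirrors the checklist; task names are illustrative.
RECOMMENDED = {
    "text": "Transformer",
    "image": "Diffusion Model",
    "realtime_video": "GAN",
}

def plan_pipeline(tasks):
    """Return (task, architecture) pairs for each step of a workflow."""
    unknown = [t for t in tasks if t not in RECOMMENDED]
    if unknown:
        raise ValueError(f"no recommendation for: {unknown}")
    return [(t, RECOMMENDED[t]) for t in tasks]

# Ad copy, then visuals, then an animated character.
campaign = plan_pipeline(["text", "image", "realtime_video"])
for task, architecture in campaign:
    print(f"{task}: {architecture}")
```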

Start small. Try fine-tuning a pre-trained model. For text, use Hugging Face’s BERT or GPT-2. For images, use Stable Diffusion via the Diffusers library. You don’t need to train from scratch. Most of the work is already done.
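Before fine-tuning anything, it helps to confirm that a pre-trained model runs on your machine at all. The sketch below assumes the `transformers`, `diffusers`, and `torch` packages are installed; the helper names `generate_text` and `generate_image` are made up for this example. Models are downloaded on first use, and the image helper assumes a CUDA GPU by default.

```python
def generate_text(prompt, model_name="gpt2", max_new_tokens=40):
    """Continue a prompt with a pre-trained model from the Hugging Face Hub."""
    # Imported lazily so the heavy dependency loads only when this is called.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_name)
    result = generator(prompt, max_new_tokens=max_new_tokens)
    return result[0]["generated_text"]

def generate_image(prompt, model_id, steps=20, device="cuda"):
    """Generate one image with a Stable Diffusion checkpoint via Diffusers.

    model_id is the Hub ID (or local path) of a Stable Diffusion checkpoint;
    none is hard-coded here because hosted checkpoint names change over time.
    """
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id).to(device)
    return pipe(prompt, num_inference_steps=steps).images[0]  # a PIL image
```

A call such as `generate_text("Generative AI is")` returns the prompt plus a short continuation. `generate_image("a cat on a mat", model_id=...)` returns an image you can store with `.save("cat.png")`. Once both run, fine-tuning is a matter of continuing training from these same checkpoints on your own data.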

And remember: the tech is evolving fast. What’s expensive today might be free tomorrow. Keep learning. Keep testing. And don’t assume one model is the answer forever.

Which generative AI technology is most widely used today?

Transformers are the most widely used, powering 58% of commercial generative AI applications as of 2024. They dominate natural language tasks like chatbots, translation, and content summarization. GPT-4, Gemini, and Claude all rely on Transformer architecture. Diffusion Models come second at 31%, mainly for image generation, while GANs account for just 3% due to their instability and slower adoption in enterprise settings.

Why are Diffusion Models better for images than GANs?

Diffusion Models produce higher-quality, more diverse images because they rarely suffer from mode collapse - a problem where GANs generate the same few outputs repeatedly. Diffusion Models gradually refine noise into an image over many steps, allowing finer control over details. On benchmark datasets like FFHQ, Stable Diffusion XL scores a Fréchet Inception Distance (FID) of 1.68, compared to StyleGAN3’s 2.15. Lower FID is better, so the images are closer to real photos. In blind tests, 78% of people can’t tell whether a Diffusion-generated image is real.

Can I run these models on my home computer?

You can run smaller versions on a high-end consumer GPU like an RTX 4090, which has 24GB of VRAM. For Transformers, small models like GPT-2 (124 million parameters in its smallest version) or BERT-base (110 million) fit comfortably, even for fine-tuning. For Diffusion Models, Stable Diffusion 1.5 can run on 8GB VRAM, but quality drops. For full-speed, high-res output, you need an A100 with 40GB VRAM - which isn’t practical for most home users. Cloud services like Runway ML or Hugging Face Spaces are better for testing without expensive hardware.
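A quick back-of-the-envelope check helps when deciding whether a model fits on your GPU: multiply the parameter count by the bytes each parameter takes. The helper below is a rough sketch that counts weights only; activations add more, and fine-tuning adds gradients and optimizer state on top. The parameter counts are approximate public figures for the smallest GPT-2 and BERT-base.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

def weight_memory_gb(num_params, dtype="float16"):
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

models = {
    "GPT-2 (smallest)": 124_000_000,   # approximate parameter count
    "BERT-base": 110_000_000,          # approximate parameter count
}

for name, params in models.items():
    sizes = ", ".join(
        f"{dtype}: {weight_memory_gb(params, dtype):.2f} GB" for dtype in BYTES_PER_PARAM
    )
    print(f"{name} -> {sizes}")
```

By this estimate both models need well under 1 GB for their weights even at full 32-bit precision, which leaves plenty of headroom on a 24GB card.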

Are GANs dead?

No, but they’re niche. GANs are still the fastest option for real-time video generation and low-latency applications like live avatars or gaming. NVIDIA’s Maxine platform uses GANs to enhance video streams in real time. New hybrid models like GANformer2 are making them more stable. If you need sub-100ms generation, GANs are still unmatched. But for most image generation tasks, Diffusion Models are the default choice now.

What’s the biggest challenge in using these technologies?

Cost and complexity. Training large models requires massive computing power and energy. Fine-tuning a Transformer can cost $10,000+ in cloud fees. Diffusion Models are slow to generate images, which hurts user experience. GANs are hard to train without expert knowledge. Most developers use pre-trained models and fine-tune them - but even that requires understanding of attention mechanisms, probability theory, and GPU memory management. The learning curve is steep, and mistakes are expensive.

Will these technologies be replaced soon?

Not replaced - evolved. Researchers are already blending them: Diffusion + Transformers, GANs + Attention. The goal is to keep the strengths (quality, speed, stability) and drop the weaknesses. MIT predicts Transformers will remain dominant in NLP through 2035. Diffusion Models are likely to lead image generation for at least a decade. GANs may survive in specialized real-time roles. The next big leap won’t be a new architecture - it’ll be smarter, leaner hybrids.

Indi s

10 December, 2025 - 02:16 AM

I never thought I'd understand how AI makes images until I read this. The way it explained diffusion models like undoing noise step by step made it click for me. I tried Stable Diffusion last week just to mess around and was shocked at how good it looked. No fancy training, just typing a prompt and getting something real.

Rohit Sen

11 December, 2025 - 22:23

Transformers dominate? Please. It's just hype. Everyone uses them because they're the default, not because they're superior. GANs are faster, leaner, and way more elegant. The fact that you need 16k GPUs to run one is a red flag, not a badge of honor. This is AI as corporate spectacle.

Vimal Kumar

12 December, 2025 - 02:59 AM

Really appreciate how you broke this down without jargon. I'm a teacher and I've been using GPT for lesson plans, but I had no idea what was underneath it. The part about quantization saving costs? That's gold. My school can't afford cloud bills, but if we can shrink models and still get good results, that changes everything. Thanks for making this feel doable.

Amit Umarani

12 December, 2025 - 13:28

There's a missing comma after '50 gigawatt-hours' in the second paragraph. Also, '1.8 trillion parameters' should be written as '1,800,000,000,000' for consistency with the rest of the numerical formatting. And '2.3 billion image-text pairs' - billion is not capitalized. These aren't typos, they're errors in technical writing.

Noel Dhiraj

13 December, 2025 - 15:04

Start small is the real takeaway here. You don't need to train a model from scratch. Use Hugging Face, play with the demos, see what happens. I made a bot that summarizes my emails using a tiny GPT-2. It's not perfect but it saves me 20 minutes a day. That's the win. The rest is just noise.

vidhi patel

15 December, 2025 - 12:23 PM

It is imperative to note that the term 'AI-generated image' is grammatically incorrect when used as a noun phrase without a determiner. Furthermore, the use of 'you' in instructional contexts is stylistically inappropriate for technical documentation. The passive voice should be employed for objectivity, and the entire section on 'What Should You Use?' lacks formal citation of primary sources. This is not scholarly work.