When your product team needs to make a generative AI model behave in a specific way-like extracting structured data from customer emails, grading short answers, or summarizing legal documents-you’re faced with a simple but critical question: should you use few-shot learning or fine-tuning? It’s not about which is better. It’s about which fits your situation right now.

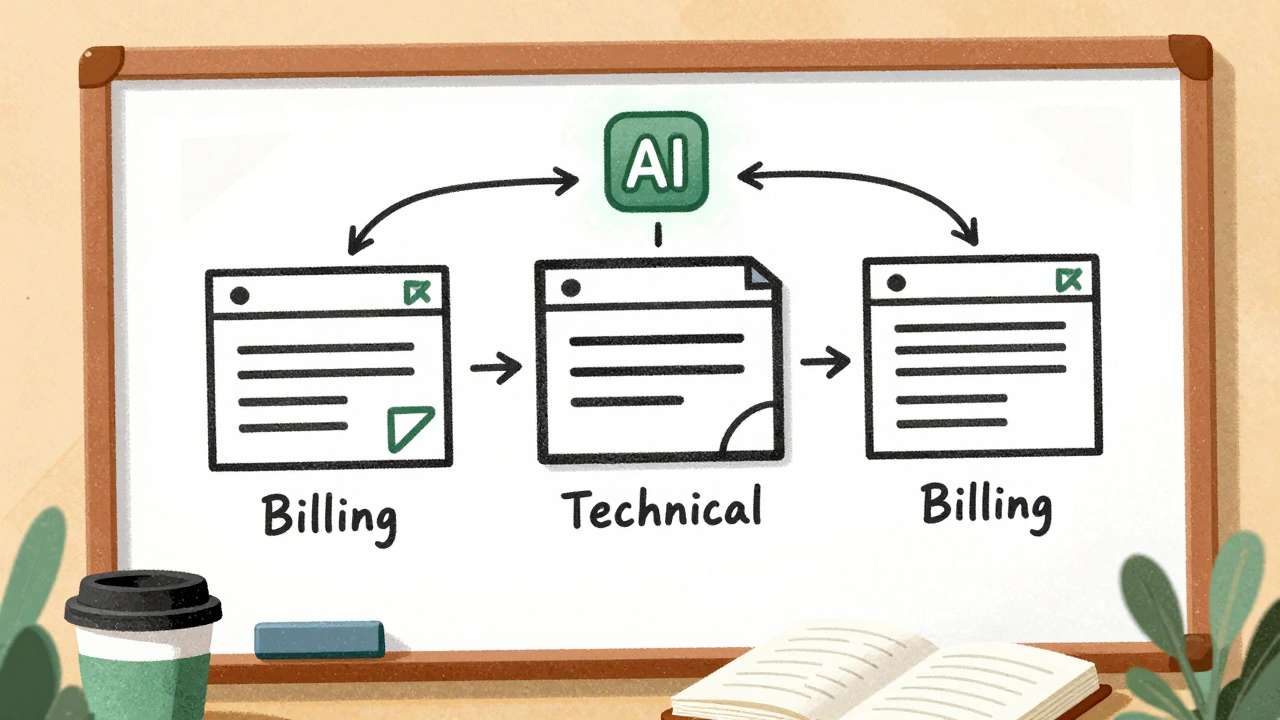

What Few-Shot Learning Actually Does

Few-shot learning doesn’t change the model. It changes the prompt. You give the model a few clear examples inside the input, and it uses those as a template to guess what you want. For instance, if you want it to classify support tickets as "Billing" or "Technical," you might write:- "My invoice was charged twice." → Billing

- "The app crashes when I open the settings." → Technical

- "Can I reset my password?" → Billing

The big win? You can test this in minutes. No training. No engineers needed. Just a product manager, a good example set, and a prompt editor. Most teams get their first working version in under an hour.

What Fine-Tuning Actually Does

Fine-tuning changes the model itself. You take a pre-trained model-say, gpt-3.5-turbo-and you feed it hundreds or thousands of labeled examples. The model adjusts its internal weights so it becomes better at your specific task. Think of it like retraining a chef who knows how to cook Italian food, but now you want them to specialize in vegan Thai curry. You don’t just give them a recipe-you change how they think about flavor combinations.This requires more work. You need:

- A clean dataset of at least 100-500 examples (more for complex tasks)

- Time to format the data correctly

- Access to a fine-tuning API (like OpenAI’s, AWS Bedrock, or Azure ML)

- At least a few days to train, test, and iterate

But the payoff? Consistency. Speed. Control.

After fine-tuning, your model doesn’t need examples in every prompt. It’s already learned the pattern. That means:

- Lower latency (320-450ms vs. 650-820ms for few-shot)

- Lower cost per request (25-40% cheaper)

- More reliable output formats (no more random JSON structures)

One financial services team reduced hallucinations in earnings call summaries from 23% to 7% after fine-tuning with 350 examples. That’s not a small improvement-it’s the difference between a tool that’s usable and one that’s risky.

When Few-Shot Learning Wins

Few-shot learning is your go-to when:- You have fewer than 50 labeled examples

- Your task is simple: binary classification, keyword extraction, or short-answer matching

- You need to move fast-like testing a hypothesis in a week

- You’re working with a closed model like GPT-4 or Claude 3, where you can’t fine-tune

For example, a startup building a customer feedback analyzer used 20 few-shot examples to classify reviews as "Positive," "Negative," or "Neutral." They hit 87% accuracy. Fine-tuning on the same data gave them 82%. Why? The model overfitted. Few-shot avoided the noise.

Another team building a legal document summarizer used few-shot to extract clause types ("NDA," "Termination," "Liability"). They didn’t have enough labeled data yet, so they tested 10 different prompt formats. Within 48 hours, they found one that worked reliably. That’s the power of few-shot: it’s a rapid prototyping tool.

When Fine-Tuning Wins

Fine-tuning is worth the effort when:- You’re processing over 10,000 requests per month

- Your output needs to be strictly structured (JSON, XML, fixed templates)

- You’re doing multi-step reasoning (e.g., "Summarize this contract, then flag any clauses that conflict with GDPR")

- You need consistent, auditable behavior (e.g., healthcare, finance, legal)

Stanford SCALE’s research found fine-tuned models outperformed few-shot by 18-22 percentage points on structured grading tasks with 500+ examples. Why? Few-shot prompts struggle with complex formats. A model might output:

- "Grade: A"

- "This is an A response."

- "The answer is excellent → A"

That’s messy. Fine-tuning forces the model to learn: "Output only the letter. No explanations."

One e-learning company fine-tuned a model to grade 10,000 student responses per week. Before, they needed human reviewers to clean up output. After fine-tuning, 94% of outputs were usable without editing. Their QA team cut work by 60%.

Cost Comparison: Upfront vs. Ongoing

Let’s break down real costs as of Q4 2024:| Factor | Few-Shot Learning | Fine-Tuning |

|---|---|---|

| Training Cost | $0 | $3-$6 per million tokens (OpenAI) |

| Setup Time | Hours | 2-3 weeks (for new teams) |

| Inference Cost (per 1M tokens) | $0.20 (GPT-4 Turbo) | $0.12-$0.15 (after fine-tuning) |

| Latency (avg. response) | 650-820ms | 320-450ms |

| Team Skill Required | Prompt engineering | ML engineering, data labeling |

| Scalability | Good for low volume | Excellent for high volume |

Here’s the math: if you’re doing 1 million requests per month, few-shot costs about $200/month in inference. Fine-tuning costs $50 to train, then $120/month in inference. You break even at around 4 months. After that, you save $80/month. If you’re scaling to 5 million requests, the savings jump to $400/month.

Common Mistakes Teams Make

With few-shot:- Using too many examples. More isn’t better. Past 20-30, performance often drops because the context window gets cluttered.

- Not controlling output format. Always include: "Output only the answer in JSON format. No explanations."

- Assuming all examples are equal. A few high-quality, diverse examples beat 50 random ones.

- Using noisy or inconsistent labels. If two annotators label the same example differently, the model learns confusion.

- Not validating on unseen data. A fine-tuned model can memorize your training set and fail on real-world inputs.

- Ignoring hyperparameters. Dropout rate, learning rate, and epochs matter. Most teams guess these.

One team fine-tuned a model on 120 customer service tickets-but 30 of them had conflicting labels. The model became erratic. They fixed it by re-labeling with a third reviewer and reducing the dataset to 90 clean examples. Accuracy jumped from 71% to 89%.

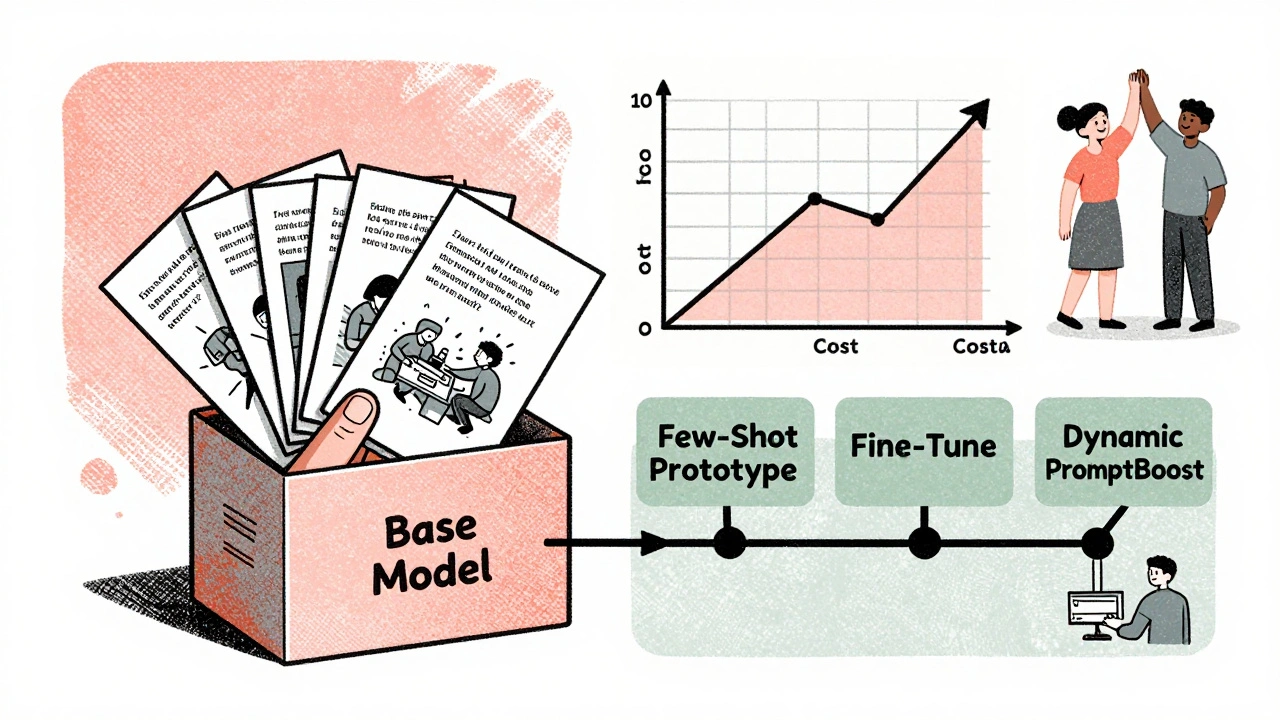

Hybrid Approaches Are the New Standard

The best teams aren’t choosing one or the other. They’re combining them.Here’s what’s working in 2025:

- Start with few-shot to validate the task and collect real-world examples.

- Use those examples to fine-tune a base model.

- After fine-tuning, keep adding 3-5 dynamic examples in prompts for edge cases.

This is called "fine-tune then prompt." It gives you the stability of fine-tuning with the flexibility of few-shot.

For example, a healthcare chatbot fine-tuned on 500 patient queries about medication side effects. Then, during deployment, it added 5 few-shot examples of rare symptoms (e.g., "I feel dizzy after taking metformin-could this be related?") to handle edge cases. Accuracy stayed above 92% even for unusual inputs.

What to Do Next

If you’re a product team deciding right now:- Start with few-shot. Build a prototype in 2 days. Test it with real users.

- If it works well and you have 100+ labeled examples, consider fine-tuning.

- If you’re processing over 10,000 requests/month, fine-tuning is almost always worth it.

- If your output must be perfectly structured (JSON, XML, forms), go fine-tuned.

- If you’re in a regulated industry (finance, health, legal), fine-tuning gives you auditability.

Don’t wait for perfection. Start small. Test fast. Scale smart.

Can I fine-tune GPT-4?

No, OpenAI doesn’t allow fine-tuning on GPT-4. You can only fine-tune gpt-3.5-turbo or use open-weight models like Llama 3 or Mistral. If you need GPT-4’s reasoning power, stick with few-shot prompting.

How many examples do I need for fine-tuning?

For simple tasks like classification, 100-200 clean examples are enough. For complex tasks-like generating structured reports or multi-step reasoning-you’ll need 500-2,000. Quality matters more than quantity. A dataset of 200 perfectly labeled examples beats 1,000 messy ones.

Is fine-tuning cheaper than using GPT-4?

Only after volume. Fine-tuning gpt-3.5-turbo costs $3-$6 per million tokens to train, then about $0.12 per million tokens for inference. GPT-4 Turbo costs $0.20 per million tokens for inference. So if you’re doing more than 1 million requests/month, fine-tuning saves money. For under 500k requests, few-shot is cheaper.

Can I use fine-tuning with open-source models?

Yes-and it’s often easier. Models like Llama 3 8B-Instruct or Mistral 7B can be fine-tuned on a single consumer GPU (like an RTX 4090) using QLORA. This reduces training cost to under $10 and time to under 2 hours. Open-weight models give you full control, but require more technical setup.

Why does my few-shot model work on some inputs but not others?

Because few-shot relies on the model’s ability to generalize from examples. If your examples are too narrow, the model won’t handle variations. Try adding 2-3 more examples that represent edge cases. Also, check your prompt formatting-sometimes a missing period or extra space breaks the pattern. Use explicit instructions: "Always output in this format: {\"label\": \"value\"}".

What’s the biggest risk with fine-tuning?

Overfitting. If your training data is too small or biased, the model will perform well on your examples but fail on real-world data. Always test on a holdout set you didn’t use for training. Use techniques like dropout, early stopping, and data augmentation to reduce this risk.