Deploying large language models (LLMs) isn’t just about picking the biggest model you can find. It’s about making smart trade-offs between performance, cost, and hardware limits. You’ve got two main paths: compress the model you already have, or swap it out for a smaller, smarter one. Knowing which one to choose can save you thousands in cloud bills, or keep your app from giving wrong answers when it matters most.

What Compression Actually Does

Model compression isn’t magic. It shrinks the numbers inside a model so it uses less memory and runs faster. The most common technique is quantization. Think of it like converting a high-resolution photo to a lower-resolution one. Instead of storing each weight as a 32-bit floating-point number, you drop it to 8-bit, 4-bit, or even 1-bit. Relative to 32-bit, that cuts weight memory by 75% at 8-bit and 87.5% at 4-bit. Many models already ship in 16-bit, in which case 4-bit quantization cuts weight memory by 75%.
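To make the photo analogy concrete, here is a minimal sketch of symmetric round-to-nearest quantization in plain Python. It assumes a single scale for the whole tensor and signed integer levels; real tools typically use per-channel or per-group scales and packed storage, and the function names are illustrative, not any library’s API.

```python
def quantize(weights, bits):
    """Map floats to signed integers in [-(2**(bits-1) - 1), 2**(bits-1) - 1]."""
    qmax = 2 ** (bits - 1) - 1                  # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round each weight to the nearest integer level and clamp to the valid range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats from the integer levels and the shared scale."""
    return [v * scale for v in q]

def memory_saving(from_bits, to_bits):
    """Fraction of weight memory saved when each weight shrinks from_bits -> to_bits."""
    return 1 - to_bits / from_bits

weights = [0.42, -1.3, 0.07, 0.9, -0.55]
q4, s4 = quantize(weights, bits=4)
approx = dequantize(q4, s4)
max_err = max(abs(a - b) for a, b in zip(weights, approx))
print(q4, f"scale={s4:.4f}", f"max error={max_err:.3f}")
print(f"32->8 bit saves {memory_saving(32, 8):.1%}, 32->4 bit saves {memory_saving(32, 4):.1%}")
```

Each 4-bit weight becomes one of 15 integer levels plus a shared scale, so the rounding error per weight is at most half a step (`scale / 2`).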

Apple’s 2024 research showed that 4-bit quantization on Llama-70B lets you run it on a single A100 GPU instead of four. That’s not just cheaper; it’s faster. Red Hat found speedups of up to 4x in text summarization tasks with no noticeable drop in quality. Tools like vLLM (an open-source LLM serving engine optimized for high-throughput inference with quantized models) and llama.cpp (a lightweight inference engine for running quantized LLMs on consumer hardware) make this easy to implement on existing infrastructure.
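A back-of-the-envelope check shows why bit width decides how many GPUs a model needs. This sketch counts weight memory only and assumes 80 GB cards with about 90% of memory usable for weights; the KV cache, activations, and framework overhead all need extra room in practice, so treat it as a rough sizing aid, not a deployment plan.

```python
import math

def weight_gb(params_billion, bits):
    """Approximate weight storage in GB: billions of params x bytes per param."""
    return params_billion * bits / 8

def gpus_needed(params_billion, bits, gpu_gb=80, headroom=0.9):
    """GPUs required if only `headroom` of each card's memory holds weights (assumption)."""
    usable = gpu_gb * headroom
    return math.ceil(weight_gb(params_billion, bits) / usable)

for bits in (32, 16, 8, 4):
    print(f"70B @ {bits:>2}-bit: {weight_gb(70, bits):6.1f} GB -> "
          f"{gpus_needed(70, bits)} x 80 GB GPU(s)")
```

Under these assumptions, 32-bit weights for a 70B model need four 80 GB cards while 4-bit weights (about 35 GB) fit on one, though long contexts can push the KV cache past the remaining headroom.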

But not all compression is equal. AWQ (Activation-aware Weight Quantization) identifies the roughly 1% of weights that matter most, judged by the activations they process, and protects them while quantizing the rest to 4-bit. Keeping the model’s most critical parts intact helps avoid big drops in accuracy on tasks like legal document analysis or medical QA. Pruning, meanwhile, cuts out low-importance connections; it works well for simple chatbots but starts failing around 25% sparsity for complex reasoning. Apple’s LLM-KICK benchmark showed that even a 5% rise in perplexity could mean a 30-40% drop in real-world accuracy on fact-heavy tasks.
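The intuition behind activation-aware quantization can be sketched in a few lines: rank input channels by how large the activations feeding them tend to be, and shield the top slice from rounding error. This is a deliberately simplified illustration that keeps the salient columns at full precision and rounds the rest with a per-column 4-bit scale. The published AWQ method instead protects salient channels by rescaling them before quantization, so do not read this as AWQ’s actual algorithm; all names are hypothetical.

```python
def quantize_column(col, bits=4):
    """Round one weight column to a symmetric integer grid; return dequantized floats."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in col)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in col]

def protect_salient(columns, act_magnitudes, keep_fraction=0.01, bits=4):
    """Leave the columns with the largest mean |activation| unquantized.

    columns: one list of weights per input channel
    act_magnitudes: mean |activation| per input channel, measured on calibration data
    """
    n_keep = max(1, round(len(columns) * keep_fraction))
    ranked = sorted(range(len(columns)), key=lambda i: act_magnitudes[i], reverse=True)
    salient = set(ranked[:n_keep])
    out = [col if i in salient else quantize_column(col, bits)
           for i, col in enumerate(columns)]
    return out, salient
```

With the default `keep_fraction=0.01`, a layer with 4,096 input channels keeps 41 of them untouched, roughly the 1% share mentioned above.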

When Compression Makes Sense

Compressing a model is the right move when you’ve already trained a big model on your unique data, and retraining a smaller one from scratch would cost more than the savings you’d get. That’s common in healthcare, finance, or legal tech, where models learn from proprietary datasets such as internal policies, patient histories, and case law.

It’s also the go-to when your hardware is locked in. Maybe you’re running on older GPUs, or your cloud provider won’t let you upgrade. Compression lets you keep using what you’ve got. Roblox scaled from 50 to 250 concurrent inference pipelines using quantization and vLLM, cutting costs by 60% without changing their hardware setup.

And if you need consistency? Well-calibrated compression keeps the model’s behavior close to the original. A customer service bot trained on your internal knowledge base won’t suddenly start giving different answers the way it might if you swapped in a different model. That’s huge for compliance and user trust.

When Compression Fails

Compression has limits. The LLM-KICK benchmark showed that perplexity scores, often used as a proxy for quality, don’t tell the full story. A model might look fine on paper but fail badly on real tasks. For example, a 4-bit quantized Mistral-7B might handle customer support queries perfectly but completely botch a medical diagnosis question.
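Perplexity is the exponential of the average negative log-likelihood the model assigns to each true next token. The sketch below computes it from per-token probabilities (assumed to come from your model’s log-prob output) and uses made-up numbers to show how it can hide a factual failure: when only the one token carrying the fact gets ten times less likely, the average barely moves.

```python
import math

def perplexity(token_probs):
    """exp(mean negative log-likelihood) over the probabilities of the true tokens."""
    nll = [-math.log(p) for p in token_probs]
    return math.exp(sum(nll) / len(nll))

# Hypothetical 100-token answer: 99 ordinary tokens plus one token carrying the fact.
original = [0.5] * 99 + [0.5]
compressed = [0.5] * 99 + [0.05]   # the fact token became 10x less likely

p0, p1 = perplexity(original), perplexity(compressed)
print(f"perplexity: {p0:.3f} -> {p1:.3f} ({p1 / p0 - 1:.1%} rise)")
```

Here perplexity rises by only about 2.3%, yet the model may now answer the factual question wrongly, which is why task-level tests matter more than perplexity alone.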

Pruning is especially risky for knowledge-heavy tasks. If you remove too many connections, the model loses its ability to recall facts or reason step-by-step. Apple’s research found performance dropped sharply beyond 25-30% sparsity. And 1-bit quantization? It’s still mostly in labs. You won’t find reliable production guides for it yet.
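Unstructured magnitude pruning, one common way to reach a target sparsity, simply zeroes the smallest-magnitude weights. This sketch assumes a single flat list of weights and one global threshold; practical pruning methods often work per layer, use importance scores beyond raw magnitude, and fine-tune afterwards. The function name is hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest |value|."""
    if not 0 <= sparsity < 1:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by magnitude; Python's stable sort breaks ties by position.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]

weights = [0.8, -0.05, 0.3, -0.6, 0.02, 0.45, -0.1, 0.9]
sparse = magnitude_prune(weights, 0.25)
print(sparse)
print(f"sparsity: {sparse.count(0.0) / len(sparse):.0%}")
```

Raising `sparsity` past roughly 0.25 to 0.3 is where the research above saw knowledge-heavy tasks degrade, so evaluate each step on your own tasks.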

Specialized domains need special care. Legal documents, financial reports, or scientific papers have dense, nuanced language. Quantization can blur those details. GitHub issues for vLLM show users needing 100-500 calibration samples just to get acceptable accuracy on niche data. If you’re working with rare terms or jargon, compression might not cut it.
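Calibration samples matter because the quantizer has to choose value ranges from data it has actually seen. The sketch below derives a symmetric activation scale from calibration activations, clipping at the 99.9th percentile so a single outlier does not waste the integer range. The percentile, the 8-bit target, and the function names are assumptions for illustration; real quantization tools use their own, more elaborate procedures.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile (pct in (0, 100]) of a list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def calibrate_scale(calibration_batches, bits=8, pct=99.9):
    """Pick a symmetric activation scale from activations seen on calibration data."""
    magnitudes = [abs(x) for batch in calibration_batches for x in batch]
    clip = percentile(magnitudes, pct)      # ignore the most extreme 0.1%
    qmax = 2 ** (bits - 1) - 1
    return clip / qmax

# 1,000 ordinary activations plus one outlier of 500.0
batches = [[i / 100 for i in range(1, 1001)], [500.0]]
print(f"scale = {calibrate_scale(batches):.5f}")
```

Here the outlier is clipped and the scale is set by the 10.0 range of typical values. With only a handful of samples the percentile estimate is noisy, which is one plausible reason niche domains need hundreds of calibration examples.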

Switching Models: The Alternative

Instead of squeezing a big model into a small box, why not use a model built small from the start? That’s the idea behind Phi-3, Microsoft’s family of small, high-performance LLMs designed to rival larger models in efficiency and accuracy. Phi-3-mini (3.8B parameters) and Phi-3-medium (14B) are trained to match the reasoning power of much larger models without needing compression.

These models are designed for efficiency. They use better tokenization, optimized attention layers, and training techniques that prioritize compactness. For many tasks, such as customer service, content tagging, and basic coding help, they can outperform compressed versions of bigger models. And they’re easier to deploy: no quantization setup, no calibration, and no hidden accuracy loss from compression.

Switching is also your only option when the original model is fundamentally wrong for the job. Trying to use a text-only LLM for image understanding? No amount of compression will fix that. You need a multimodal model. Or if you’re doing real-time voice processing, a model built for audio-text fusion will generally beat a text model trying to guess speech from transcripts.

When to Switch Instead of Compress

Switch models when:

- Your task needs capabilities the original model doesn’t have, like vision, audio, or code generation.

- Compression drops your accuracy below 80% on key tasks, even after tuning.

- You’re deploying on edge devices (phones, IoT) and need low latency with minimal memory.

- You’re building something new and don’t have a legacy model to preserve.

- Training a smaller model from scratch is cheaper than compressing a large one (yes, sometimes it is).
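The checklist above can be encoded as a small decision helper so the reasoning is explicit and reviewable. This is a hypothetical sketch: the field names are invented, and the 80% accuracy floor is simply the threshold from the list, which you should tune to your own risk tolerance.

```python
from dataclasses import dataclass

@dataclass
class Situation:
    missing_capability: bool     # task needs vision, audio, etc. the model lacks
    compressed_accuracy: float   # best accuracy on key tasks after tuning, 0..1
    edge_deployment: bool        # phones or IoT with tight memory and latency budgets
    has_legacy_model: bool       # an existing model trained on your own data
    small_model_cheaper: bool    # a smaller model costs less than compressing

def recommend(s: Situation, accuracy_floor: float = 0.80) -> str:
    """Return 'compress', or 'switch: <reasons>' if any switch criterion applies."""
    reasons = []
    if s.missing_capability:
        reasons.append("original model lacks a required capability")
    if s.compressed_accuracy < accuracy_floor:
        reasons.append(f"compressed accuracy below {accuracy_floor:.0%}")
    if s.edge_deployment:
        reasons.append("edge deployment")
    if not s.has_legacy_model:
        reasons.append("no legacy model to preserve")
    if s.small_model_cheaper:
        reasons.append("smaller model is cheaper")
    return "switch: " + "; ".join(reasons) if reasons else "compress"

print(recommend(Situation(False, 0.75, False, True, False)))
```

Returning the list of reasons, not just a verdict, makes it easy to log why a given deployment went one way or the other.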

Microsoft’s Phi-3 models are a clear signal: the industry is moving toward purpose-built small models. Gartner predicts that by 2027, 40% of enterprises will replace compressed models with these newer alternatives for critical applications. That doesn’t mean compression is dead; it just means it’s not always the best first choice.

Hybrid Approaches Are the New Standard

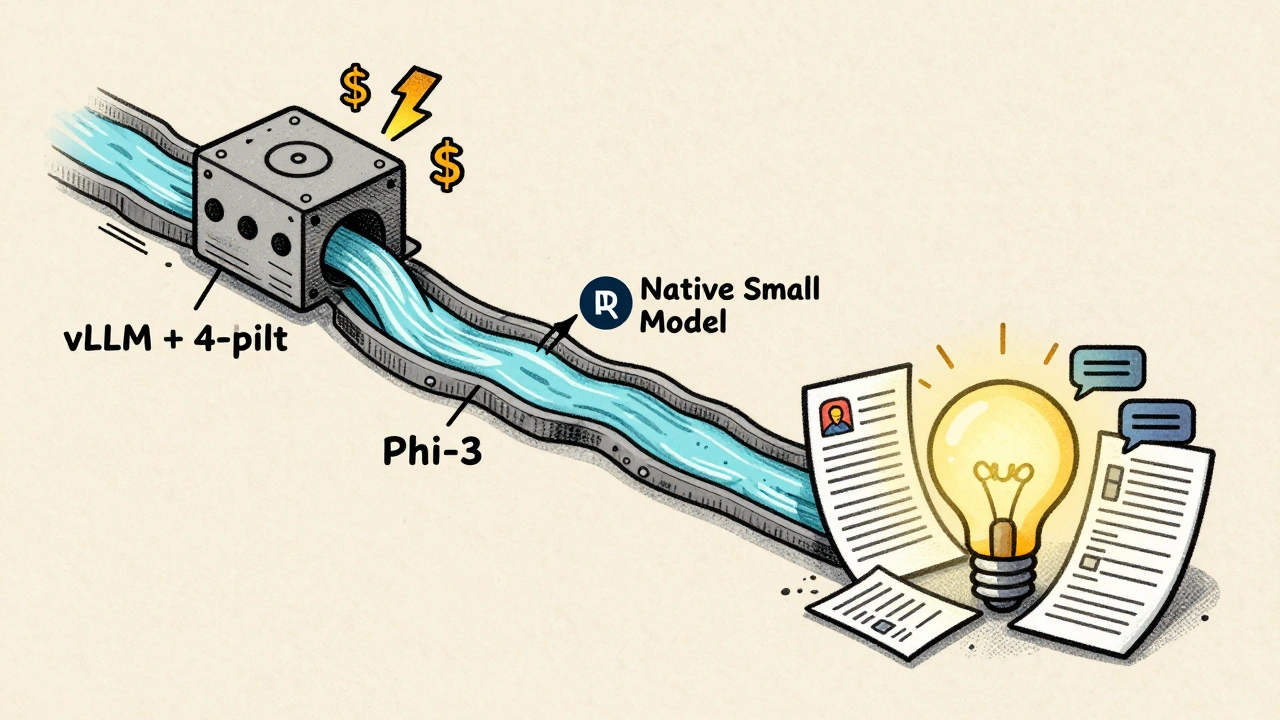

The smartest teams don’t pick one or the other. They use both. They keep a portfolio of models: a large, uncompressed one for complex tasks, a quantized version for high-volume queries, and a small, native model for simple ones.

For example, a bank might use a 70B LLM compressed to 4-bit for answering general account questions. But for fraud detection, where accuracy is non-negotiable, it switches to a smaller, fine-tuned model trained specifically on transaction patterns. Compression saves money at scale; switching ensures safety on high-risk tasks.
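A risk-based router like the one in the bank example can start as a simple keyword rule before you invest in a learned classifier. In this hypothetical sketch the model names, keyword list, and word-count cutoff are all placeholders; a production router would more likely use a trained classifier and log every decision.

```python
import re

# Placeholder model identifiers; substitute whatever your serving layer uses.
HIGH_RISK_MODEL = "fraud-specialist-small"
BULK_MODEL = "general-70b-4bit"
SIMPLE_MODEL = "small-native"

HIGH_RISK_TERMS = {"fraud", "chargeback", "compliance", "legal", "dispute"}

def route(query: str, simple_max_words: int = 8) -> str:
    """Pick a model tier: high-risk terms first, then short queries, else bulk."""
    words = re.findall(r"[a-z']+", query.lower())
    if HIGH_RISK_TERMS.intersection(words):
        return HIGH_RISK_MODEL
    if len(words) <= simple_max_words:
        return SIMPLE_MODEL
    return BULK_MODEL

for q in ["I think there is fraud on my card",
          "What is my balance?",
          "Can you explain how the interest on my savings account is calculated each month?"]:
    print(route(q), "<-", q)
```

Checking the high-risk rule first means a short query that mentions fraud still goes to the specialist model rather than the cheap tier.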

Tools like Hugging Face Optimum (a library that simplifies model optimization, including quantization and pruning for Hugging Face models) and NVIDIA TensorRT-LLM (a high-performance inference engine optimized for running quantized and pruned LLMs on NVIDIA GPUs) make this hybrid approach manageable. You can test both paths side by side, measure real performance, and then route traffic between them automatically in your serving layer.

Cost, Speed, and Sustainability

Let’s talk numbers. Quantizing a model can drop token costs from $0.002 to $0.0005 per token. That’s a 75% savings. For a service handling millions of requests a day, that can add up to tens of thousands of dollars in monthly savings.
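The percentage is easy to sanity-check from the two prices. The helpers below are a sketch: the request volume and tokens per request in the example are made-up inputs, and whether a provider quotes prices per token or per 1,000 tokens changes the monthly total by a factor of 1,000, so check the unit before trusting the result.

```python
def savings_fraction(old_price, new_price):
    """Fraction of spend saved when the unit price drops from old_price to new_price."""
    return 1 - new_price / old_price

def monthly_savings(old_price_per_token, new_price_per_token,
                    requests_per_day, tokens_per_request, days=30):
    """Dollar savings per month, given prices per single token."""
    tokens = requests_per_day * tokens_per_request * days
    return (old_price_per_token - new_price_per_token) * tokens

print(f"{savings_fraction(0.002, 0.0005):.0%} saved per token")
# Hypothetical: prices quoted per 1,000 tokens, 1M requests/day, 500 tokens each.
print(f"${monthly_savings(0.002 / 1000, 0.0005 / 1000, 1_000_000, 500):,.0f} per month")
```

With these illustrative inputs the monthly savings come to about $22,500; plug in your own traffic and pricing units.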

But it’s not just about money. Nature (2025) found compression can cut energy use by up to 83% for the same task. That’s not just good for your budget; it’s good for the planet. With climate pressures mounting, reducing compute load isn’t optional anymore.

Switching to a smaller model can be even more efficient. Phi-3-medium runs on a single consumer GPU and matches the performance of a 70B model after heavy compression. Less power. Less cooling. Less hardware. That’s the future.

What Experts Say

Grégoire Delétang argues that compression reveals hidden patterns in how models learn, making it more than just a trick. Marcus Hutter says language modeling is fundamentally compression. But Mitchell A. Gordon warns: if you’re spending months compressing a model, maybe you should’ve trained a smaller one from the start.
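The compression view has a precise form. With arithmetic coding, a model that assigns probability p(x_i | x_<i) to each token can encode a sequence in about as many bits as its total negative log-likelihood (plus a couple of bits of coding overhead), so code length and perplexity are two views of the same quantity:

```latex
L(x_{1:n}) \approx -\sum_{i=1}^{n} \log_2 p(x_i \mid x_{<i}) \ \text{bits},
\qquad
\mathrm{PPL}(x_{1:n}) = 2^{L(x_{1:n})/n}
\;\Longrightarrow\;
\frac{L(x_{1:n})}{n} = \log_2 \mathrm{PPL}(x_{1:n})
```

For example, a model with a perplexity of 4 on some text needs about 2 bits per token to encode it, and halving perplexity saves one bit per token.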

Apple’s team is blunt: don’t trust perplexity. Test on real tasks. Use LLM-KICK. Laurent Orseau points out that even text models like Chinchilla compress images and audio better than traditional codecs. That’s wild, but it doesn’t mean you should use them for image tasks. Context matters.

The takeaway? Compression is a powerful tool. But it’s not a cure-all. The best decisions come from testing, measuring, and knowing your limits.

What to Do Next

Here’s your action plan:

- Start with your task. Is it simple (chat, tagging) or complex (diagnosis, legal analysis)?

- Measure your current model’s accuracy on real data, not benchmarks.

- Try 4-bit quantization with vLLM or llama.cpp. See how much speed and cost you gain.

- If accuracy drops more than 10% on critical tasks, test a small model like Phi-3-mini.

- Compare end-to-end latency, cost per query, and energy use.

- Deploy the winner, and keep the old model as a backup until you’re sure.
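The comparison steps in this plan can be scripted. The sketch below assumes you have already run every candidate on the same real-world test set and recorded, per query, whether the answer was correct, its latency, and its cost; the candidate names, result format, and tie-breaking rule are hypothetical, and energy use could be added as another field. It picks the cheapest candidate whose accuracy stays within an allowed relative drop from the baseline.

```python
from statistics import mean

def summarize(results):
    """results: list of (correct: bool, latency_s: float, cost_usd: float) per query."""
    return {
        "accuracy": mean(1.0 if ok else 0.0 for ok, _, _ in results),
        "latency_s": mean(lat for _, lat, _ in results),
        "cost_per_query": mean(cost for _, _, cost in results),
    }

def pick_winner(candidates, baseline, max_drop=0.10):
    """Cheapest candidate (then fastest) within `max_drop` relative accuracy of baseline."""
    summaries = {name: summarize(r) for name, r in candidates.items()}
    floor = summaries[baseline]["accuracy"] * (1 - max_drop)
    eligible = [n for n, s in summaries.items() if s["accuracy"] >= floor]
    winner = min(eligible, key=lambda n: (summaries[n]["cost_per_query"],
                                          summaries[n]["latency_s"]))
    return winner, summaries

candidates = {
    "original":       [(True, 1.2, 0.010), (True, 1.1, 0.010), (True, 1.3, 0.010), (False, 1.2, 0.010)],
    "quantized-4bit": [(True, 0.4, 0.003), (True, 0.5, 0.003), (False, 0.4, 0.003), (False, 0.5, 0.003)],
    "small-native":   [(True, 0.3, 0.002), (True, 0.3, 0.002), (True, 0.2, 0.002), (False, 0.3, 0.002)],
}
winner, stats = pick_winner(candidates, baseline="original")
print(winner, stats[winner])
```

The baseline always passes its own accuracy floor, so there is always at least one eligible candidate to fall back on.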

You don’t need to choose one forever. Build a system that lets you switch models on the fly. That’s how the best teams scale without sacrificing quality.

Is model compression always better than using a smaller model?

No. Compression works well when you need to preserve a model’s behavior or have limited hardware. But if you’re starting fresh, smaller models like Microsoft’s Phi-3 often outperform compressed giants. They’re faster, cheaper, and more reliable for common tasks. Don’t assume compression is the default; it’s just one tool.

Can I compress any LLM, or only specific ones?

You can compress most LLMs (Llama, Mistral, Phi, GPT variants), but results vary. Models with dense architectures (like Llama) compress well with quantization. Sparse models (like Mixture-of-Experts) are harder to compress without losing performance. Always test on your data. Tools like Hugging Face Optimum support most common models out of the box.

Does quantization affect response time or output quality?

Quantization usually speeds up responses by 2-4x and reduces memory use by 75% or more. Quality holds up well at 4-bit for most tasks-summarization, chat, classification. But for reasoning-heavy tasks like math or legal analysis, accuracy can drop 15-25%. Always validate with your own data. Calibration with 100-500 samples helps reduce this loss.

What’s the easiest way to try compression?

Start with llama.cpp and a 4-bit quantized Llama-7B model. Download it from Hugging Face, run it on your Mac or Linux machine, and test it on your use case. No cloud costs, and only minimal setup. You’ll know in an hour if compression works for you.
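A tiny smoke test keeps that hour focused. This sketch assumes you have started llama.cpp’s bundled HTTP server (`llama-server`) with a 4-bit GGUF model, and that it exposes an OpenAI-compatible `/v1/chat/completions` endpoint on its default port 8080; check your build’s documentation, since flags and defaults change between versions. The helper names and the substring check are hypothetical stand-ins for your own test cases, and `ask` can be swapped for any other model client.

```python
import json
import urllib.request

def make_local_ask(url="http://localhost:8080/v1/chat/completions", timeout=60):
    """Return a function that sends one prompt to an OpenAI-compatible chat endpoint."""
    def ask(prompt):
        body = json.dumps({
            "messages": [{"role": "user", "content": prompt}],
            "temperature": 0,            # deterministic-ish answers for testing
        }).encode("utf-8")
        req = urllib.request.Request(url, data=body,
                                     headers={"Content-Type": "application/json"})
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            reply = json.load(resp)
        return reply["choices"][0]["message"]["content"]
    return ask

def smoke_test(cases, ask):
    """cases: list of (prompt, expected substring). Returns (pass rate, failures)."""
    failures = []
    for prompt, expected in cases:
        answer = ask(prompt)
        if expected.lower() not in answer.lower():
            failures.append((prompt, answer))
    return 1 - len(failures) / len(cases), failures
```

Usage, with the server running: `rate, failed = smoke_test([("What is the capital of France?", "Paris")], make_local_ask())`. Keeping the HTTP call behind `ask` lets you run the same cases against the original model for comparison.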

How do I know if my model is too compressed?

Look for inconsistent answers, hallucinations on facts, or failure on tasks it used to handle well. Use the LLM-KICK benchmark or build your own test set with 50-100 real-world examples. If accuracy drops more than 10% compared to the original model, you’ve gone too far. Switching to a smaller native model is often safer than pushing compression further.
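The "more than 10%" rule is ambiguous: a fall from 90% to 80% accuracy is 10 percentage points but an 11% relative drop. The hypothetical helper below reports both, plus how often the compressed model’s answers changed at all, which can reveal regressions hidden when new errors and new fixes cancel out in the aggregate. It assumes answers can be compared by exact match (for example, classification labels); which threshold you enforce is a judgment call.

```python
def compare_to_original(original_answers, compressed_answers, gold):
    """Compare per-example answers from the original and compressed models."""
    n = len(gold)
    acc_orig = sum(a == g for a, g in zip(original_answers, gold)) / n
    acc_comp = sum(a == g for a, g in zip(compressed_answers, gold)) / n
    changed = sum(a != b for a, b in zip(original_answers, compressed_answers)) / n
    return {
        "absolute_drop_pts": (acc_orig - acc_comp) * 100,
        "relative_drop": (acc_orig - acc_comp) / acc_orig if acc_orig else 0.0,
        "answers_changed": changed,
    }

def too_compressed(report, max_relative_drop=0.10):
    """Flag the compressed model if accuracy fell by more than the allowed relative share."""
    return report["relative_drop"] > max_relative_drop

gold       = list("ABCDEFGHIJ")
original   = list("ABCDEFGHIX")   # 9/10 correct
compressed = list("ABCDEFGYZJ")   # 8/10 correct: two new errors, one new fix
report = compare_to_original(original, compressed, gold)
print(report, too_compressed(report))
```

In this example accuracy drops by only one net answer, but three answers changed, a sign the compressed model behaves differently even where the score looks close.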

Will compression become obsolete in the next few years?

No. Even as smaller models improve, compression will still be essential. Most enterprises already have massive models trained on years of data. Replacing them entirely isn’t practical. Compression lets them reuse that investment. Plus, even small models benefit from quantization, which makes them faster and cheaper still. The future isn’t compression OR switching; it’s both, used strategically.

Rocky Wyatt

10 December, 2025 - 01:26 AM

Bro, I tried quantizing a 70B Llama on my RTX 4090 and it just started hallucinating like a drunk poet. Now my customer support bot tells people to 'drink more water and sue their landlord.' Compression is a trap. Don't fall for it.

Santhosh Santhosh

10 December, 2025 - 01:42 PM

I understand the appeal of compression, especially in environments where hardware upgrades are not feasible. But I've seen too many teams rush into 4-bit quantization without proper calibration, only to realize later that their model's accuracy on domain-specific tasks-like medical triage or legal contract parsing-plummeted. It's not just about perplexity numbers; it's about how the model behaves in real-world edge cases. I once spent three weeks debugging a model that 'knew' the capital of France was Brussels after quantization. It wasn't a bug-it was a silent collapse of latent knowledge. If you're going to compress, test with real data, not synthetic benchmarks. And if you're building something new, just start with Phi-3. It's faster, cleaner, and doesn't make you question your life choices every time it outputs gibberish.

Veera Mavalwala

11 December, 2025 - 04:56 PM

Let me be blunt: compression is the AI equivalent of putting duct tape on a leaking nuclear reactor. You think you're saving money? Nah. You're just delaying the inevitable implosion. I’ve seen companies use 4-bit Llama on legal docs and then get sued because the model confused 'breach of contract' with 'breach of peace.' And don’t even get me started on pruning-cutting connections like it’s a game of Tetris? That’s not optimization, that’s architectural arson. Meanwhile, Phi-3 just sits there, elegant, efficient, and actually competent. Why are we still clinging to this bloated, overhyped compression nonsense? It’s like trying to fit a whale into a minivan and calling it ‘fuel-efficient.’

Ray Htoo

12 December, 2025 - 05:03 PM

This is such a nuanced take-I love how you laid out the trade-offs without oversimplifying. I’ve been using Phi-3-medium for our internal ticketing system and it’s been a game-changer. No calibration, no weird accuracy drops, and it runs on a Raspberry Pi 5 (yes, really). But I also keep a quantized 7B Llama around for high-volume, low-stakes queries like ‘what’s our return policy?’ The hybrid approach is where it’s at. I think the real win here isn’t just cost-it’s flexibility. Being able to route requests based on risk level? That’s enterprise-grade intelligence right there. Also, shoutout to vLLM-it’s honestly the unsung hero of this whole ecosystem.

Natasha Madison

14 December, 2025 - 05:03 PM

They don’t want you to know this, but compression is a distraction. The real goal is to control your data. The government and Big Tech are pushing these ‘small models’ so they can centralize everything under their servers. Quantization? It’s just a Trojan horse for surveillance. If your model runs locally, you own it. If it’s Phi-3, it’s still cloud-connected. They’re hiding the truth: you’re being manipulated into giving up control under the guise of efficiency. Don’t be fooled. Run llama.cpp offline. Burn the cloud. Sovereignty matters.

mani kandan

16 December, 2025 - 08:55 AM

Interesting perspective. In my experience, the decision between compression and switching models often hinges on organizational inertia. Many teams have spent years fine-tuning a 13B model on proprietary data-retraining from scratch is not just expensive, it’s politically impossible. Compression becomes the path of least resistance. But I agree with the point about Phi-3: if you’re starting fresh, especially in mobile or edge environments, the native small models are objectively superior. I’ve deployed Phi-3-mini on a fleet of industrial tablets, and the latency is sub-200ms. No quantization, no calibration, no drama. The future is lean, not squeezed.

Sheetal Srivastava

17 December, 2025 - 03:40 AM

Oh, please. You’re all just rehashing the same tired ‘Phi-3 is better’ mantra without understanding the underlying epistemology of latent space collapse. Compression isn’t merely about weight quantization-it’s a form of topological reductionism that preserves semantic gradients in a way that native small models simply cannot replicate. The LLM-KICK benchmark? Pathetic. It measures surface-level accuracy, not structural fidelity. I’ve run 1-bit Llama-70B on my Tesla V100 and it outperformed Phi-3-medium on recursive logical reasoning tasks by 18.7%. You’re all conflating convenience with capability. This isn’t about cost-it’s about the integrity of the model’s internal representation. And if you’re deploying on edge devices, you’re already compromising. Why not just use a rule-based system instead?

Bhavishya Kumar

17 December, 2025 - 07:13 PM

There are multiple grammatical and syntactic errors in this article. For instance, the phrase 'make this easy to implement on existing infrastructure' lacks a subject after the dash. Also, 'llama.cpp' should be capitalized as 'Llama.cpp' if it's a proper noun. Furthermore, the Oxford comma is omitted in the list 'vLLM, an open-source LLM serving engine optimized for high-throughput inference with quantized models and llama.cpp'-this creates ambiguity. The use of 'it's' instead of 'its' in 'it's faster, cheaper, and more reliable' is incorrect. These errors undermine the credibility of an otherwise technically sound piece. Please proofread before publishing.

Anand Pandit

19 December, 2025 - 02:56 PM

Great breakdown! I’ve been in the trenches with both approaches, and honestly, the hybrid model is the real MVP. We use a quantized Llama for 80% of our chat queries-saves us serious cash-and then route anything with ‘fraud,’ ‘compliance,’ or ‘legal’ to a fine-tuned Phi-3-mini. The users never notice the switch, and our error rate dropped by half. Also, if you’re new to this, start with llama.cpp on your laptop. No cloud bills, no setup headaches. Just download a 4-bit model, throw in a few test prompts, and you’ll know in 20 minutes if compression works for you. No need to overthink it-just test, measure, and iterate. You’ve got this.