Business Technology AI Scripts: Deploy LLMs, Cut Costs, and Stay Compliant

When you're building AI into your business tech, you're not just writing code. You're managing large language models (LLMs): AI systems that process and generate human-like text based on massive datasets. They power chatbots, automate reports, and even guide field technicians, but only if you handle them right. Many companies fail because they treat LLMs like regular software. They spin up a model, throw in some prompts, and hope for the best. Then the bills spike, the legal team panics, and the engineers are stuck debugging a black box that costs $2,000 a day to run.

That’s where smart business tech comes in. It’s not about having the fanciest model. It’s about knowing how cloud cost optimization works: strategies like autoscaling and spot instances reduce AI infrastructure expenses without losing performance. It’s about understanding how AI compliance (the rules around data use, export controls, and state-level laws that govern how AI models are trained and deployed) affects your bottom line. And it’s about using LLM autoscaling, automated systems that adjust computing power based on real-time demand, so you’re not paying for idle GPUs during slow hours. These aren’t theoretical ideas. They’re the difference between a prototype that dies and a tool that makes your team 10x more efficient.
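To make the autoscaling idea concrete, here is a minimal sketch of the core decision: how many GPU replicas to run for the current load. The function name, the parameters, and the default limits are illustrative assumptions, not the API of any particular cloud or orchestration tool.

```python
import math


def desired_replicas(requests_per_second: float,
                     capacity_per_replica: float,
                     min_replicas: int = 0,
                     max_replicas: int = 8) -> int:
    """Return how many replicas to run for the current load.

    All names and defaults here are hypothetical; a real autoscaler
    would also smooth the signal and add cooldown periods.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    # Replicas needed to serve the load, rounded up.
    needed = math.ceil(requests_per_second / capacity_per_replica)
    # Clamp to the configured floor and ceiling.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    for load in (0, 12, 100):
        print(load, "req/s ->", desired_replicas(load, capacity_per_replica=5))
```

With a floor of zero, a quiet period scales the service down to no replicas at all, which is where the savings on idle GPUs come from. The trade-off is cold-start latency when traffic returns, so some teams would set `min_replicas` to 1.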

You’ll find posts here that show you exactly how to fix the biggest headaches in business AI: why your LLM bill jumps when users ask long questions, how California’s new law forces you to track training data, how spot instances can slash your cloud costs by 60%, and how field service teams use AI to cut repair times in half. No fluff. No buzzwords. Just real strategies used by teams running AI in production—where mistakes cost money, time, and trust.
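The link between long questions and a higher bill comes down to simple arithmetic when pricing is per token. The sketch below assumes per-token pricing with separate input and output rates; the prices are made-up placeholders, not any provider's real rates.

```python
import math


def request_cost(input_tokens: int,
                 output_tokens: int,
                 input_price_per_1k: float = 0.001,
                 output_price_per_1k: float = 0.002) -> float:
    """Estimate the cost of one request in dollars.

    The default prices are hypothetical placeholders.
    """
    input_cost = input_tokens / 1000 * input_price_per_1k
    output_cost = output_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


if __name__ == "__main__":
    short = request_cost(input_tokens=1000, output_tokens=500)
    long = request_cost(input_tokens=8000, output_tokens=500)
    # Same answer length, but the longer prompt costs more.
    print(f"short prompt: ${short:.4f}, long prompt: ${long:.4f}")
    assert math.isclose(long - short, 0.007)
```

Under these assumed rates, the answer length is the same in both cases, so the entire difference comes from the longer prompt.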

What follows isn’t a list of tools. It’s a roadmap. A way to move from guessing what your AI will do next to knowing exactly how it behaves, how much it costs, and whether you’re breaking any rules. If you’re building, managing, or paying for AI in your business, this is where you start.

Enterprise Adoption, Governance, and Risk Management for Vibe Coding

Enterprise vibe coding accelerates development but introduces new risks. Learn how to govern AI-generated code, enforce compliance, and manage security without slowing innovation.

Infrastructure Requirements for Serving Large Language Models in Production

Serving large language models in production requires specialized hardware, dynamic scaling, and smart cost optimization. Learn the real infrastructure needs (VRAM, GPUs, quantization, and hybrid cloud strategies) that make LLMs work at scale.

Knowledge Management with Generative AI: Answer Engines Over Enterprise Documents

Generative AI is transforming enterprise knowledge management by turning document repositories into intelligent answer engines that deliver accurate, sourced responses to natural language questions - cutting search time by up to 75% and accelerating onboarding by 50%.

LLM Evaluation Gates Before Switching from API to Self-Hosted

Before switching from an LLM API to self-hosted, organizations must pass strict performance, cost, and security gates. Learn the key thresholds, real-world failure rates, and the 7-step evaluation process that separates success from costly mistakes.

Latency and Cost in Multimodal Generative AI: How to Budget Across Text, Images, and Video

Multimodal AI can boost accuracy, but it also sharply raises costs and latency. Learn how to budget across text, images, and video by optimizing token use, choosing the right hardware, and avoiding common overspending traps.

Privacy-Aware RAG: How to Protect Sensitive Data When Using Large Language Models

Privacy-Aware RAG protects sensitive data in AI systems by removing PII before it reaches large language models. Learn how it works, why it's critical for compliance, and how to implement it without losing accuracy.

Human-in-the-Loop Operations for Generative AI: Review, Approval, and Exceptions

Human-in-the-loop operations for generative AI ensure AI outputs are reviewed, approved, and corrected by people before deployment. Learn how top companies use structured workflows to balance speed, safety, and compliance.

Human-in-the-Loop Review for Generative AI: Catching Errors Before Users See Them

Human-in-the-loop review catches AI hallucinations before users see them, reducing errors by up to 73%. Learn how top companies use confidence scoring, domain experts, and smart workflows to prevent costly mistakes.

Human-in-the-Loop Review for Generative AI: Catching Errors Before Users See Them

Human-in-the-loop review catches dangerous AI hallucinations before users see them. Learn how it works, where it saves money and lives, and why automated filters alone aren't enough.

Measuring Hallucination Rate in Production LLM Systems: Key Metrics and Real-World Dashboards

Learn how to measure hallucination rates in production LLM systems using real-world metrics like semantic entropy and RAGAS. Discover what works, what doesn’t, and how top companies are reducing factuality risks in 2025.

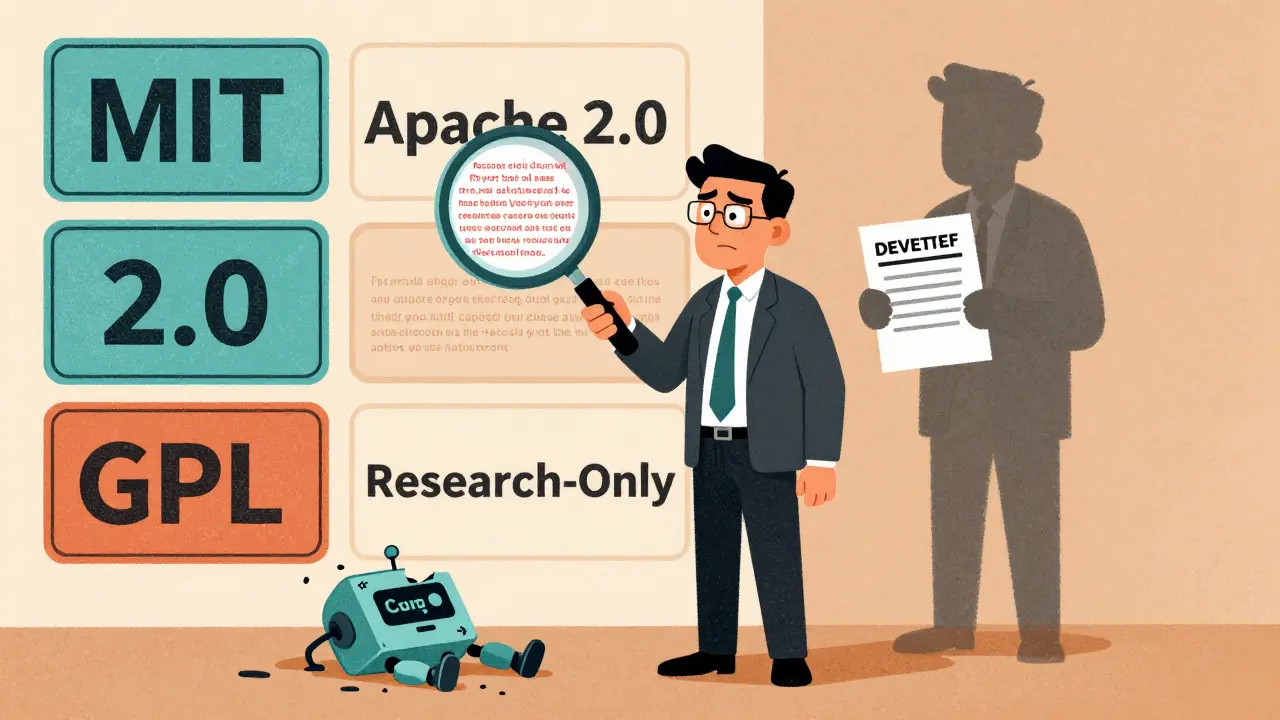

Legal and Licensing Considerations for Deploying Open-Source Large Language Models

Open-source LLMs can save millions in API costs, but only if you follow the license rules. Learn how MIT, Apache 2.0, and GPL licenses affect commercial use, what risks training data carries, and which compliance steps help you avoid lawsuits.

Model Compression Economics: How Quantization and Distillation Cut LLM Costs by 90%

Quantization and distillation cut LLM inference costs by up to 95%, enabling affordable AI on edge devices and budget clouds. Learn how these techniques work, when to use them, and what hardware you need.
