Generative AI: What It Is, How It Works, and How to Use It Smartly

When you ask an AI to write a poem, design a logo, or summarize a 50-page report, you’re using generative AI, a type of artificial intelligence that creates new content instead of just analyzing existing data. Sometimes called creative AI, it isn’t magic: it’s math, data, and careful engineering working together to produce human-like output. Old-school rule-based systems follow instructions written by hand. Generative AI instead learns patterns from massive datasets and then produces something new, like a writer who has read an enormous library and now writes in the styles they absorbed.

But here’s the catch: generative AI doesn’t know what’s true. It predicts what comes next based on patterns, not facts. Large language models, the backbone of most text-generating AI, are trained on billions of sentences to predict the next word (more precisely, the next token). That is exactly why they still hallucinate: a fluent, plausible continuation is not necessarily a correct one. That’s where prompt engineering, the art of crafting inputs that guide AI toward better, more accurate outputs, comes in. A well-written prompt that supplies context, sources, and clear constraints can substantially reduce hallucinations. A bad one? You’ll get convincing nonsense.

And it’s not just about writing better prompts. Deploying generative AI safely means thinking about AI safety, the set of practices that prevent harmful, biased, or illegal outputs. Think content filters, redaction tools, and compliance checks—because if your AI spits out fake medical advice or deepfake videos, you’re on the hook. That’s why companies now track truthfulness benchmarks, audit training data, and even lock models inside encrypted environments so no one can sneak in and steal them.
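Redaction tools are one of the simpler safety layers to picture. Below is a minimal sketch, assuming a regex-based filter that masks email addresses and US-style phone numbers in model output before anyone sees it. The patterns and the `redact` helper are illustrative only; production systems typically rely on dedicated PII-detection tooling rather than a couple of regular expressions.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


output = "Contact Dr. Lee at lee@example.com or 555-123-4567."
print(redact(output))
```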

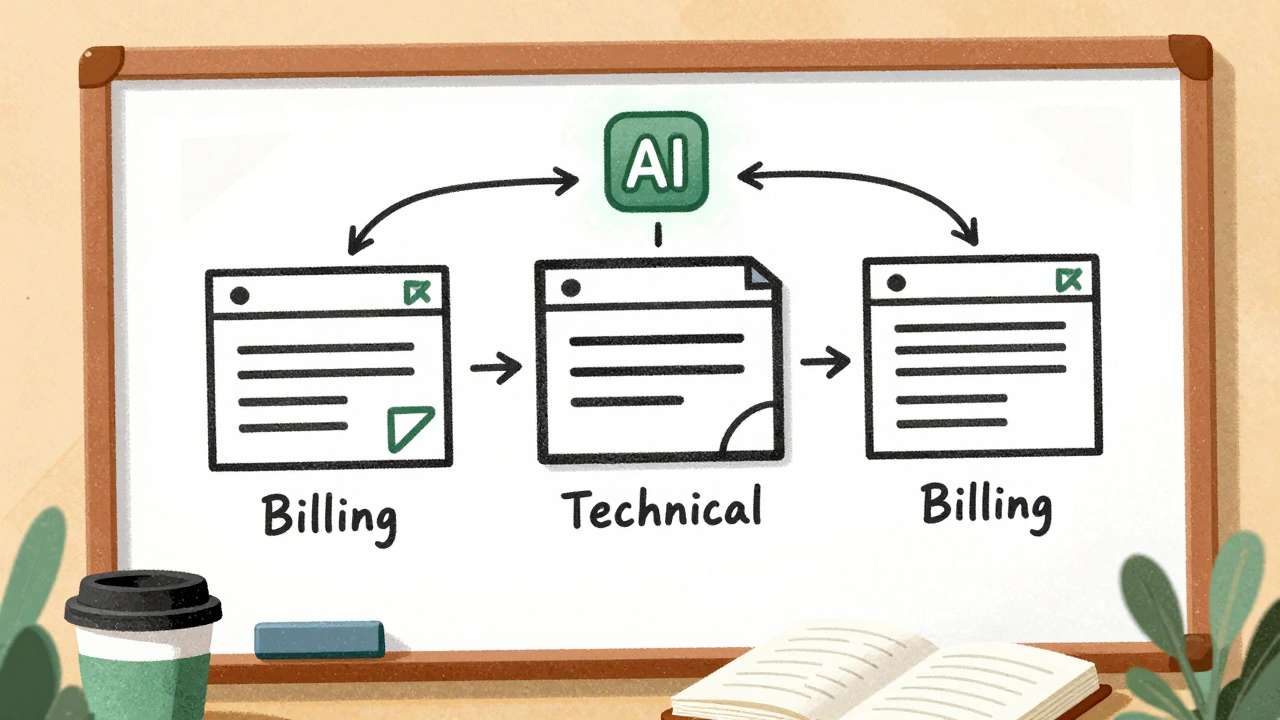

Then there’s cost. Running generative AI isn’t cheap. Providers typically bill per token (roughly a word fragment) for both the input you send and the output you get back, so costs scale with how big the model is, how often it’s used, and where it’s hosted: fractions of a cent for a short request on a small model, but real money at scale or on the largest models. That’s why smart teams treat LLM deployment, the process of putting AI models into production with scaling, monitoring, and cost controls, as a discipline of its own, using strategies like autoscaling, spot instances, and switching to smaller models when full power isn’t needed. You don’t need a Ferrari to drive to the grocery store.
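The arithmetic behind "you don’t need a Ferrari" is simple: providers typically bill per token, with separate rates for input and output. The sketch below estimates a request’s cost and routes simple requests to a cheaper model. The model names, per-million-token prices, and the 2,000-token cutoff are all hypothetical placeholders, not real price lists; substitute your provider’s current rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    name: str
    input_per_million: float   # USD per 1M input tokens (hypothetical)
    output_per_million: float  # USD per 1M output tokens (hypothetical)


# Hypothetical tiers; real prices vary by provider and change often.
SMALL = ModelPrice("small-model", input_per_million=0.50, output_per_million=1.50)
LARGE = ModelPrice("large-model", input_per_million=5.00, output_per_million=15.00)


def estimate_cost(model: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one request: token counts times per-token rates."""
    return (input_tokens * model.input_per_million
            + output_tokens * model.output_per_million) / 1_000_000


def choose_model(input_tokens: int, needs_reasoning: bool) -> ModelPrice:
    """Use the large model only when the task seems to need it.

    The 2,000-token cutoff is an arbitrary illustrative threshold.
    """
    if needs_reasoning or input_tokens > 2_000:
        return LARGE
    return SMALL


model = choose_model(input_tokens=800, needs_reasoning=False)
cost = estimate_cost(model, input_tokens=800, output_tokens=200)
print(model.name, f"${cost:.6f}")
```

At these placeholder rates, the same 800-in/200-out request costs $0.0007 on the small tier and $0.007 on the large one, a tenfold gap that compounds across millions of calls.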

And it’s not just tech teams using this stuff anymore. Founders without coding skills are building working apps in days. Designers are using AI to generate UI components that stay on-brand. Legal teams are checking state laws to avoid fines. Developers are building systems that work across OpenAI, Anthropic, and open-source models without getting locked in. All of it—every tool, every tactic, every mistake—is covered in the posts below.
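Avoiding lock-in usually comes down to coding against your own interface instead of a vendor SDK. Here is a minimal sketch, assuming a hypothetical `ChatBackend` protocol: each provider (OpenAI, Anthropic, a self-hosted open-source model) gets a thin adapter, and application code only ever talks to the `Router`. The adapters below are stubs that echo the prompt; connecting them to the real vendor SDKs is left out of this sketch.

```python
from typing import Protocol


class ChatBackend(Protocol):
    """The only surface application code depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class StubOpenAIBackend:
    """Placeholder adapter; a real one would call the vendor SDK here."""

    def complete(self, prompt: str) -> str:
        return f"[openai-stub] {prompt}"


class StubLocalBackend:
    """Placeholder adapter for a self-hosted open-source model."""

    def complete(self, prompt: str) -> str:
        return f"[local-stub] {prompt}"


class Router:
    """Try backends in order, falling back to the next one when a call raises."""

    def __init__(self, backends: list[ChatBackend]) -> None:
        self.backends = backends

    def complete(self, prompt: str) -> str:
        errors: list[Exception] = []
        for backend in self.backends:
            try:
                return backend.complete(prompt)
            except Exception as exc:  # broad on purpose: any failure triggers fallback
                errors.append(exc)
        raise RuntimeError(f"all backends failed: {errors}")


router = Router([StubOpenAIBackend(), StubLocalBackend()])
print(router.complete("Summarize this report."))
```

Swapping vendors then means writing one new adapter, not touching every call site.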

What you’ll find here isn’t theory. It’s real-world guidance on how to build, govern, secure, and pay for generative AI without blowing up your budget or your reputation. Whether you’re tweaking prompts, auditing model outputs, or fighting vendor lock-in, you’ll find the exact strategies teams are using right now.

Knowledge Management with Generative AI: Answer Engines Over Enterprise Documents

Generative AI is transforming enterprise knowledge management by turning document repositories into intelligent answer engines that deliver accurate, sourced responses to natural language questions - cutting search time by up to 75% and accelerating onboarding by 50%.
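At its core, an answer engine retrieves the passages most relevant to a question and hands them, with their sources, to the model. The sketch below covers only the retrieval-and-citation half, using plain word overlap as a stand-in for the embedding search that real systems typically use. The document IDs and the `retrieve` and `build_prompt` helpers are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; crude, but enough to illustrate ranking."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (source_id, passage) pairs ranked by word overlap."""
    q = tokenize(question)
    scored = [(len(q & tokenize(text)), doc_id, text) for doc_id, text in docs.items()]
    scored.sort(key=lambda item: item[0], reverse=True)
    # Drop passages that share no words with the question.
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]


def build_prompt(question: str, hits: list[tuple[str, str]]) -> str:
    """Ask the model to answer only from the cited passages."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using only the sources below and cite them by ID. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


docs = {
    "hr-policy-04": "Employees accrue 20 vacation days per year.",
    "it-guide-12": "Reset your VPN password from the self-service portal.",
    "onboarding-01": "New hires receive a laptop on their first day.",
}
question = "How many vacation days do employees get?"
hits = retrieve(question, docs)
print(build_prompt(question, hits))
```

Requiring citations by ID is what makes the answers "sourced": a reviewer can check every claim against the passage it came from.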

Human-in-the-Loop Operations for Generative AI: Review, Approval, and Exceptions

Human-in-the-loop operations for generative AI ensure AI outputs are reviewed, approved, and corrected by people before deployment. Learn how top companies use structured workflows to balance speed, safety, and compliance.
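Review, approval, and exception handling map naturally onto a small state machine. This is a minimal sketch, assuming three outcomes (approve, reject, or escalate as an exception) and a rule that nothing is published until a named reviewer approves it. The state names, reviewer IDs, and the `ReviewItem` class are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"
    EXCEPTION = "exception"  # escalated to a specialist


# Allowed transitions; anything else is treated as a workflow error.
TRANSITIONS = {
    Status.PENDING: {Status.APPROVED, Status.REJECTED, Status.EXCEPTION},
    Status.EXCEPTION: {Status.APPROVED, Status.REJECTED},
    Status.APPROVED: set(),
    Status.REJECTED: set(),
}


@dataclass
class ReviewItem:
    ai_output: str
    status: Status = Status.PENDING
    history: list[tuple[str, Status]] = field(default_factory=list)

    def move(self, reviewer: str, new_status: Status) -> None:
        """Record who changed the status; refuse illegal transitions."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status.value} to {new_status.value}")
        self.status = new_status
        self.history.append((reviewer, new_status))

    @property
    def publishable(self) -> bool:
        return self.status is Status.APPROVED


item = ReviewItem("Draft reply to a refund request.")
item.move("agent.kim", Status.EXCEPTION)   # unusual case, escalate
item.move("lead.ortiz", Status.APPROVED)   # specialist signs off
print(item.publishable, item.history)
```

The `history` list doubles as an audit trail, which is usually what compliance reviews ask for first.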

Human-in-the-Loop Review for Generative AI: Catching Errors Before Users See Them

Human-in-the-loop review catches AI hallucinations before users see them, reducing errors by up to 73%. Learn how top companies use confidence scoring, domain experts, and smart workflows to prevent costly mistakes.
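Confidence scoring decides which outputs a human sees at all. A minimal sketch, assuming the model or a separate checker yields a score between 0 and 1, and that high-risk topics always go to a domain expert. The 0.85 threshold, the topic list, and the queue names are hypothetical and would need tuning against your own error data.

```python
from dataclasses import dataclass

HIGH_RISK_TOPICS = {"medical", "legal", "financial"}  # hypothetical policy
AUTO_APPROVE_THRESHOLD = 0.85                          # hypothetical cutoff


@dataclass
class Draft:
    text: str
    confidence: float  # assumed to be in [0, 1]
    topic: str


def route(draft: Draft) -> str:
    """Return the queue a draft goes to before any user sees it."""
    if draft.topic in HIGH_RISK_TOPICS:
        return "expert_review"   # always human-checked, whatever the score
    if draft.confidence >= AUTO_APPROVE_THRESHOLD:
        return "auto_publish"
    return "general_review"


drafts = [
    Draft("Your order ships Tuesday.", 0.93, "shipping"),
    Draft("Take 400mg twice daily.", 0.97, "medical"),
    Draft("Your plan may include roaming.", 0.61, "billing"),
]
for d in drafts:
    print(route(d), "<-", d.text)
```

The medical draft goes to an expert despite its 0.97 score: a confident hallucination is exactly the case a score-only filter misses.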

Human-in-the-Loop Review for Generative AI: Catching Errors Before Users See Them

Human-in-the-loop review catches dangerous AI hallucinations before users see them. Learn how it works, where it saves money and lives, and why automated filters alone aren't enough.

Why Multimodality Is the Next Big Leap in Generative AI

Multimodal AI combines text, images, audio, and video to understand context like humans do, making generative AI smarter, faster, and more accurate than text-only systems. Here's how it's already changing healthcare, customer service, and marketing.

Multimodal Generative AI: Models That Understand Text, Images, Video, and Audio

Multimodal generative AI understands text, images, audio, and video together, making it smarter than older AI systems. Learn how models like GPT-4o and Llama 4 work, where they’re used, and why they’re changing industries in 2025.

Few-Shot vs Fine-Tuned Generative AI: How Product Teams Should Decide

Learn how product teams should choose between few-shot learning and fine-tuning for generative AI. Real cost, performance, and time comparisons for practical decision-making.
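Few-shot learning needs no training run: you place a handful of labeled examples directly in the prompt. The sketch below assembles such a prompt for a hypothetical ticket-classification task; the labels, examples, and `few_shot_prompt` helper are invented for illustration. Fine-tuning would instead bake many such examples into the model’s weights, which costs a training job up front but can shorten every prompt afterward.

```python
# Hypothetical labeled examples for a support-ticket classifier.
EXAMPLES = [
    ("I was charged twice this month.", "billing"),
    ("The app crashes when I upload a photo.", "bug"),
    ("Can you add dark mode?", "feature_request"),
]


def few_shot_prompt(query: str, examples: list[tuple[str, str]]) -> str:
    """Format examples as ticket/label pairs, then leave the final label blank."""
    lines = ["Classify each support ticket as billing, bug, or feature_request.", ""]
    for text, label in examples:
        lines += [f"Ticket: {text}", f"Label: {label}", ""]
    lines += [f"Ticket: {query}", "Label:"]
    return "\n".join(lines)


print(few_shot_prompt("My invoice shows the wrong amount.", EXAMPLES))
```

Every example is resent on every call, so the few-shot approach trades training cost for recurring input-token cost.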

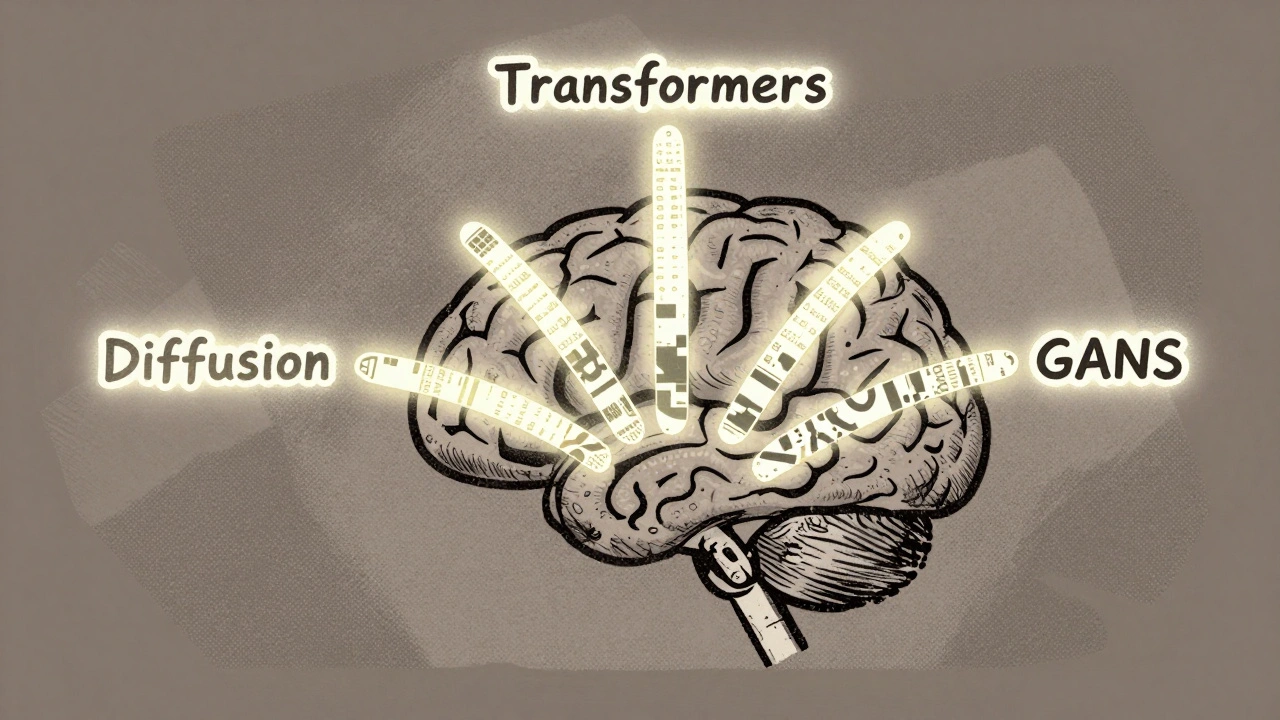

Foundational Technologies Behind Generative AI: Transformers, Diffusion Models, and GANs Explained

Transformers, Diffusion Models, and GANs are the three core technologies behind today's generative AI. Learn how each works, where they excel, and which one to use for text, images, or real-time video.
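The transformer’s central operation is scaled dot-product attention: each position scores every other position, normalizes the scores with a softmax, and takes a weighted average of the value vectors, i.e. softmax(Q K^T / sqrt(d)) V. Below is a plain-Python sketch of a single attention head with no learned projections, masking, or batching, so it shows the arithmetic rather than a usable model layer.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: list[list[float]], k: list[list[float]],
              v: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention over lists of vectors."""
    d = len(k[0])
    out = []
    for qi in q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        # Weighted average of the value vectors, one output dimension at a time.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


# Three tokens with 2-dimensional embeddings; Q = K = V for simplicity.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(x, x, x):
    print([round(val, 3) for val in row])
```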

How Generative AI, Blockchain, and Cryptography Are Together Building Trust in Digital Systems

Generative AI, blockchain, and cryptography are merging to create systems that prove AI decisions are trustworthy, private, and unchangeable. This combo is already reducing fraud in healthcare and finance - and it’s just getting started.
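The "unchangeable" part usually rests on a simple cryptographic idea: chain each log record to the hash of the one before it, so altering any past entry breaks the link. Here is a minimal sketch of such a tamper-evident log of AI decisions using SHA-256 from Python’s standard library. It is not a blockchain (there is no distributed consensus), and the record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous hash together with a canonical JSON form of the record."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list[dict], record: dict) -> None:
    """Add a record, linking it to the hash of the latest entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "hash": entry_hash(prev, record)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain; an edited record no longer matches its stored hash."""
    prev = GENESIS
    for entry in log:
        if entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


log: list[dict] = []
append(log, {"claim_id": "C-1001", "model_decision": "approve", "score": 0.91})
append(log, {"claim_id": "C-1002", "model_decision": "flag", "score": 0.34})
print(verify(log))                           # True
log[0]["record"]["model_decision"] = "flag"  # tamper with history
print(verify(log))                           # False
```

On its own, a chain like this only catches tampering by someone who cannot also recompute every later hash; anchoring the latest hash somewhere external, such as a public ledger, is what the blockchain half of the combination adds.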

From Proof of Concept to Production: Scaling Generative AI Without Surprises

Only 14% of generative AI proofs of concept make it to production. Learn how to bridge the gap with real-world strategies for security, monitoring, cost control, and cross-functional collaboration - without surprises.
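One of the cheapest production safeguards is a hard spend cap that fails closed. A minimal sketch, assuming you already compute a cost per request; the `BudgetGuard` class, the daily limit, and the 80% alert level are hypothetical, and resetting the counter each day is left out.

```python
from dataclasses import dataclass


@dataclass
class BudgetGuard:
    daily_limit_usd: float
    alert_fraction: float = 0.8  # warn at 80% of the limit (hypothetical)
    spent_usd: float = 0.0

    def charge(self, cost_usd: float) -> str:
        """Record a request's cost and return 'ok' or 'alert'.

        Raises instead of charging if the request would exceed the limit.
        """
        if self.spent_usd + cost_usd > self.daily_limit_usd:
            raise RuntimeError("daily AI budget exceeded; request blocked")
        self.spent_usd += cost_usd
        if self.spent_usd >= self.alert_fraction * self.daily_limit_usd:
            return "alert"
        return "ok"


guard = BudgetGuard(daily_limit_usd=10.0)
print(guard.charge(5.0))  # ok
print(guard.charge(3.5))  # alert: 8.5 is at least 80% of 10.0
```

Failing closed means a runaway loop or traffic spike produces an error you can see rather than a surprise invoice.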
