Generative AI can write emails, draft reports, and even answer customer questions, but left unchecked, it can also spread misinformation, sound rude, or violate compliance rules. That’s why companies aren’t letting AI run wild. They’re putting humans back in the loop. Not as supervisors, but as active decision-makers. This isn’t about slowing things down. It’s about making sure AI does the right thing, every time.

What Human-in-the-Loop Really Means

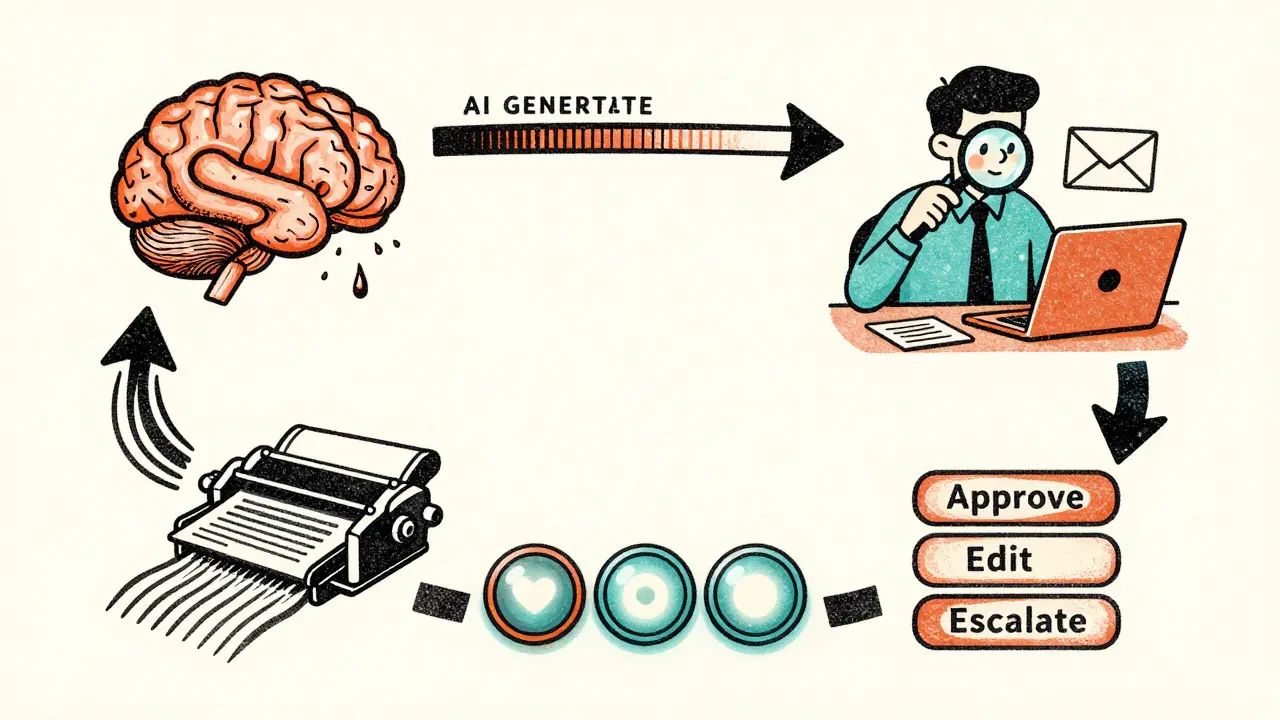

Human-in-the-loop (HITL) isn’t just having someone glance at an AI output. It’s a structured system where humans make final calls on what gets sent out, what gets corrected, and what gets escalated. AWS, in its December 2023 technical blog, defined it clearly: humans play an active role in decision-making alongside the AI. That means the system doesn’t just flag something; it waits for a person to say, “Yes, send this,” or “No, fix this.”

Take customer service. An AI might draft a reply to a negative review. But if the tone feels off, or the response shows uncertainty about whether the product was defective, the system automatically pauses and routes it to a human. In AWS’s own tests, this setup correctly flagged content needing review 92.7% of the time. That’s not luck. That’s design.

And it’s not optional anymore. According to Forrester’s Q2 2024 AI Governance Report, 78% of enterprises now use some form of HITL. In healthcare, it’s 85%. In finance, it’s 89%. Why? Because regulators demand it. The FDA requires human review of all AI-generated patient communications. SEC Regulation AI-2023 says customer-facing AI outputs must be overseen by people. If you’re in these industries, skipping HITL isn’t a risk. It’s a violation.

The Four-Step Workflow

A solid HITL system follows four clear stages. Get these right, and you avoid chaos.

- AI processes and scores confidence. The model generates content and assigns a confidence score, usually expressed between 0% and 100%. If the score falls below a set threshold, typically somewhere in the 85-90% range, the item gets flagged. This isn’t arbitrary. Parseur’s 2024 guide shows this range catches most errors without overwhelming reviewers.

- Low-confidence items go to humans. The system doesn’t just notify someone. It sends the exact content, the context, and the reason for the flag, such as “Tone detected as potentially hostile” or “Uncertainty in product warranty details.” This saves time and reduces guesswork.

- Humans review with clear options. Reviewers don’t get a blank form. They see buttons: “Approve,” “Edit and Resubmit,” “Reject and Escalate,” or “Send to Senior Reviewer.” KPMG trains every employee on these options. Their “Trusted AI” policy makes it clear: no output goes live without a human click.

- Feedback loops back to the model. Every decision (approve, edit, reject) is logged. That data retrains the AI. Over time, the model learns what humans reject and adjusts. Tredence’s case studies show this reduces review volume by 22-29% over six months. The AI gets smarter. The humans get less tired.
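The four stages above can be sketched as a small routing-and-logging loop. This is a minimal illustration in Python, not AWS’s, Parseur’s, or KPMG’s implementation: the `Draft` and `Action` types, the 0.85 cutoff, and the rule that any attached reason (such as a tone warning) also forces review are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Action(Enum):
    # The four reviewer options described above.
    APPROVE = "Approve"
    EDIT_AND_RESUBMIT = "Edit and Resubmit"
    REJECT_AND_ESCALATE = "Reject and Escalate"
    SEND_TO_SENIOR = "Send to Senior Reviewer"


@dataclass
class Draft:
    content: str
    context: str
    confidence: float                            # model-assigned score in [0.0, 1.0]
    reasons: list = field(default_factory=list)  # e.g. "Tone detected as potentially hostile"


THRESHOLD = 0.85  # assumed starting value within the 85-90% range


def route(draft: Draft) -> str:
    """Steps 1-2: release confident, unflagged drafts; send everything else to a human."""
    if draft.confidence < THRESHOLD:
        # Record why the draft was flagged so the reviewer sees the reason.
        draft.reasons.append(f"Confidence {draft.confidence:.0%} below {THRESHOLD:.0%}")
    return "human_review" if draft.reasons else "auto_release"


feedback_log: list = []  # in-memory stand-in for durable storage


def record_decision(draft: Draft, action: Action, edited: Optional[str] = None) -> dict:
    """Steps 3-4: log every human decision so it can feed retraining later."""
    entry = {
        "content": draft.content,
        "context": draft.context,
        "confidence": draft.confidence,
        "reasons": list(draft.reasons),
        "action": action.value,
        "edited": edited,
    }
    feedback_log.append(entry)
    return entry
```

In a real system the `reasons` list would be filled by whatever tone or compliance checks you already run, and the log would be written somewhere your retraining pipeline can read it.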

This isn’t theory. It’s how companies like Parexel handle pharmacovigilance reports. Instead of reviewing every single case manually, AI filters out low-risk entries. Humans only see the ones with red flags. Result? 47% faster processing without losing accuracy.

HITL vs. HOTL: The Big Difference

Don’t confuse human-in-the-loop with human-on-the-loop. They sound similar, but they’re worlds apart.

Human-on-the-loop (HOTL) means humans watch the AI from afar. They step in only if something goes wrong, like when a fire alarm goes off. But generative AI doesn’t always fail loudly. It quietly gets worse. It starts sounding sarcastic. It misquotes regulations. It makes up sources. By the time a human notices, the damage is done.

HITL is different. It’s like having a co-pilot who checks every turn. The system doesn’t wait for disaster. It asks for permission at every critical step. AWS’s customer response system uses HITL because they can’t afford a single bad reply to go out unreviewed. In contrast, a self-driving car might use HOTL, where humans only take over if the system fails. But customer service? Marketing? Legal docs? Those need HITL.
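The practical difference is where the human sits relative to the send step. In this hypothetical sketch, `approve`, `send`, `alarm`, and `notify` are placeholder callbacks you would wire to your own review UI, delivery channel, and monitoring; only the ordering of the calls is the point.

```python
from typing import Callable


def hitl_send(draft: str,
              approve: Callable[[str], bool],
              send: Callable[[str], None]) -> bool:
    """Human-in-the-loop: nothing is sent until a person explicitly approves."""
    if approve(draft):
        send(draft)
        return True
    return False  # held back; the draft never reaches the customer


def hotl_send(draft: str,
              send: Callable[[str], None],
              alarm: Callable[[str], bool],
              notify: Callable[[str], None]) -> None:
    """Human-on-the-loop: send immediately; a person is only alerted afterwards."""
    send(draft)
    if alarm(draft):
        notify(draft)  # too late to stop this message, but a human now knows
```

With HITL a rejected draft never leaves the building; with HOTL the same draft has already been delivered by the time the alert fires.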

What Happens When HITL Fails

It’s not enough to just set up a review system. If you don’t do it right, it becomes a bottleneck or, worse, a false sense of security.

Professor Fei-Fei Li’s Stanford HAI report studied 127 enterprise HITL deployments. She found three common failures:

- Inconsistent review standards. 68% of teams had different rules for what counted as “acceptable.” One reviewer approved a vague response. Another rejected it. That’s not control. That’s chaos.

- Inadequate training. 42% of reviewers got no formal training. They didn’t know how to spot bias, tone issues, or compliance risks. They just clicked “approve” to keep up.

- Poor feedback integration. 57% of systems didn’t use review data to improve the AI. So the same mistakes kept happening. The AI never learned.

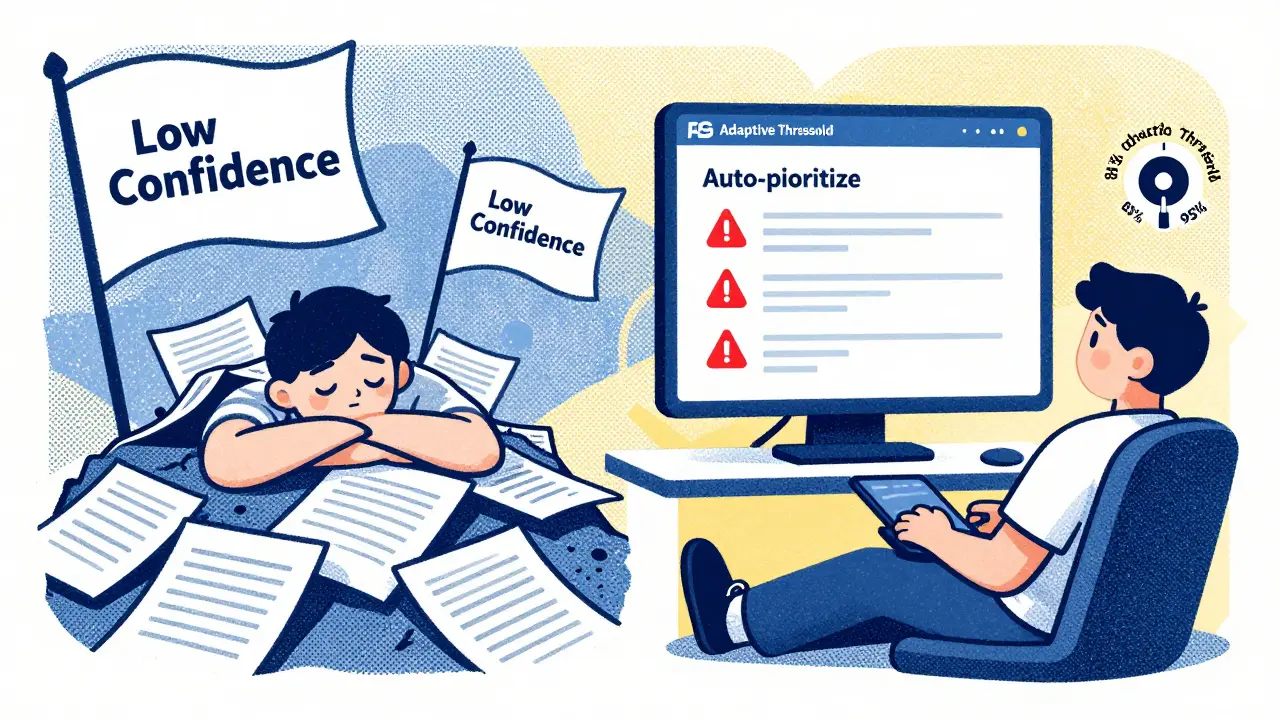

And then there’s volume. KPMG saw review times jump 22% during peak hours. Why? Too many flags, not enough reviewers. Their fix? AI-powered pre-filtering. The system now highlights the most likely issues-like toxicity or legal risk-and automates routine edits. That cut unnecessary reviews by 30%.
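A pre-filter like the one KPMG describes can be approximated by ranking flagged items by their highest risk score and diverting the lowest-risk ones to an automated fix. Everything here is an assumption for illustration: the per-category `risk` scores would come from a toxicity or legal-risk classifier you already have, the 0.3 floor is arbitrary, and `routine_fix` is a hypothetical stand-in for whatever routine edit your team automates.

```python
from dataclasses import dataclass


@dataclass
class FlaggedItem:
    item_id: str
    text: str
    risk: dict  # hypothetical classifier scores, e.g. {"toxicity": 0.1, "legal": 0.7}


RISK_FLOOR = 0.3  # assumed: below this in every category, treat as routine


def routine_fix(text: str) -> str:
    # Placeholder routine edit: collapse repeated whitespace.
    return " ".join(text.split())


def prefilter(items: list) -> tuple:
    """Split flagged items into a risk-ordered human queue and auto-fixed routine items."""
    queue, auto_fixed = [], []
    for item in items:
        # Highlight the single most likely issue for the reviewer.
        category, score = max(item.risk.items(), key=lambda kv: kv[1])
        if score < RISK_FLOOR:
            auto_fixed.append((item.item_id, routine_fix(item.text)))
        else:
            queue.append((item.item_id, category, score))
    queue.sort(key=lambda entry: entry[2], reverse=True)  # riskiest first
    return queue, auto_fixed
```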

How to Build a Real HITL System

You don’t need a team of engineers to start. But you do need a plan.

Start with a pilot. Pick one use case, like drafting support replies or summarizing internal memos. Measure three things: accuracy improvement, turnaround time, and human effort. AWS recommends this approach. It keeps things small, measurable, and safe.
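To keep the pilot measurable, it helps to compute the three numbers the same way every week. The sketch below assumes a hypothetical per-output record format and a baseline accuracy measured before HITL was introduced; it is one reasonable way to define the metrics, not a standard.

```python
from statistics import mean


def pilot_summary(baseline_accuracy: float, pilot_records: list) -> dict:
    """Summarize a pilot on accuracy improvement, turnaround time, and human effort.

    Each record is assumed to look like:
        {"correct": bool, "turnaround_min": float, "review_min": float}
    where review_min is 0 for outputs that were never sent to a human.
    """
    accuracy = mean(1.0 if r["correct"] else 0.0 for r in pilot_records)
    return {
        "accuracy_gain": accuracy - baseline_accuracy,
        "avg_turnaround_min": mean(r["turnaround_min"] for r in pilot_records),
        "human_minutes_total": sum(r["review_min"] for r in pilot_records),
    }
```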

Train your team. KPMG spent 16-24 hours per employee on “Trusted AI” training. They didn’t just teach them how to review. They taught them why it matters. And they created clear SOPs (standard operating procedures) for every type of content. No guesswork. No ambiguity.

Choose the right tools. AWS Step Functions handles complex workflows with multiple checkpoints. Parseur’s system is built for document extraction with confidence thresholds. LXT.ai specializes in annotation pipelines. Don’t build your own unless you have to. Custom solutions failed 13.5% more often than cloud-native tools in Forrester’s Q3 2024 test.

Set thresholds wisely. Start at 85%, then adjust. Tredence’s survey found 63% of companies needed 2-3 rounds of tuning before they got it right. Because everything scoring below the threshold goes to a person, the direction matters. Too high? Reviewers drown. Too low? Mistakes slip through.
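One tuning round can be written as a simple rule over two measured rates: the share of audited errors that slipped through unreviewed, and the share of outputs sent to reviewers. The target miss rate, maximum review share, step size, and clamping band below are illustrative assumptions, not figures from Tredence or anyone else.

```python
def tune_threshold(threshold: float, miss_rate: float, review_share: float,
                   max_miss_rate: float = 0.02, max_review_share: float = 0.30,
                   step: float = 0.02) -> float:
    """One tuning round for a 'flag if confidence < threshold' rule."""
    if miss_rate > max_miss_rate:
        threshold += step   # errors slipping through: flag more items
    elif review_share > max_review_share:
        threshold -= step   # reviewers overloaded: flag fewer items
    return min(max(threshold, 0.50), 0.99)  # keep within an assumed sane band
```

The rule checks misses before load on purpose: when both targets are blown, it errs toward more review rather than less.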

The Future: Less Review, More Intelligence

Will humans always be in the loop? Maybe not. But they’ll always be needed, for the right reasons.

Adaptive confidence scoring, introduced by AWS in October 2024, changes the game. Instead of a fixed 85% threshold, the system now adjusts the threshold based on content type. A legal memo? Flag it for review below 95% confidence. A product description? Below 80%. Historical error rates guide the call. Result? 37% fewer unnecessary reviews.
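A per-type threshold table is the simplest way to approximate this behavior. The sketch uses the two example thresholds from the paragraph above and the 85% default; the content-type keys are hypothetical, and it does not model how AWS derives thresholds from historical error rates.

```python
# Hypothetical content-type keys mapped to the example thresholds above.
THRESHOLDS = {"legal_memo": 0.95, "product_description": 0.80}
DEFAULT_THRESHOLD = 0.85  # fallback for any other content type


def needs_review(content_type: str, confidence: float) -> bool:
    """Flag for human review when confidence falls below the type's threshold."""
    return confidence < THRESHOLDS.get(content_type, DEFAULT_THRESHOLD)
```

The same 90% confidence score is sent to a reviewer for a legal memo but released for a product description.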

And then there’s human-in-the-loop reinforcement learning. Humans don’t just approve. They teach. Every edit becomes a lesson. The AI learns what “good” looks like, not just what’s wrong. Early results show review volume dropping 22-29% in six months.
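One common way to make “every edit becomes a lesson” concrete is to store each edit as a preference pair: the reviewer’s version as the preferred answer and the AI draft as the rejected one, which is the shape of data used by preference-tuning methods such as reward modeling or DPO. The log format and field names below are assumptions; approvals and no-op edits are skipped because they carry no contrast between a better and a worse answer.

```python
def preference_pairs(review_log: list) -> list:
    """Turn reviewer edits into {"prompt", "chosen", "rejected"} examples.

    Assumed log entry format:
        {"prompt": str, "draft": str, "action": str, "edited": str or None}
    """
    pairs = []
    for entry in review_log:
        edited = entry.get("edited")
        # Only real edits produce a pair: human version preferred over the AI draft.
        if entry["action"] == "edit" and edited and edited != entry["draft"]:
            pairs.append({
                "prompt": entry["prompt"],
                "chosen": edited,
                "rejected": entry["draft"],
            })
    return pairs
```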

By 2028, MIT predicts context-aware HITL will dominate. Humans won’t review everything. They’ll review exceptions, stepping in only when the AI hits a domain-specific edge case. That’s the goal: less busywork, more judgment.

But Gartner’s Whit Andrews is clear: “High-stakes decisions will always require human review.” And by 2026, 90% of enterprise AI applications will need formal HITL. That’s not hype. That’s regulation, reputation, and reality.

Final Thought: It’s Not About Control. It’s About Trust.

People don’t fear AI. They fear what happens when AI is left unchecked. A bot sending a rude reply to a grieving customer. A report misstating a drug interaction. A contract clause rewritten by AI without legal oversight.

HITL isn’t a workaround. It’s the foundation of trustworthy AI. It’s the bridge between speed and safety. Between automation and accountability.

You don’t need to replace humans. You need to empower them-with better tools, clearer rules, and smarter systems. Because the best AI isn’t the one that works alone. It’s the one that knows when to ask for help.

What’s the difference between human-in-the-loop and human-on-the-loop?

Human-in-the-loop (HITL) means humans actively review and approve every output before it’s used. The system waits for a human decision. Human-on-the-loop (HOTL) means humans only step in if something goes wrong, such as an alert or error. HITL is proactive. HOTL is reactive. For generative AI in customer service, legal, or healthcare, HITL is required. HOTL is risky.

Do I need a team of specialists to run HITL?

No. Many companies use existing staff, such as customer service reps, compliance officers, or content editors, as reviewers. The key isn’t hiring more people. It’s training them well and giving them clear tools. KPMG trains all employees on their “Trusted AI” policy. AWS Step Functions lets you assign roles without new hires. Focus on process, not headcount.

How do I know if my confidence threshold is set right?

Start at 85%. Then track two things: how many errors slip through (false negatives) and how many reviews are unnecessary (false positives). Since anything below the threshold is flagged, raising it sends more items to reviewers and lowering it sends fewer. So if reviewers are overwhelmed, lower the threshold. If mistakes are slipping through unreviewed, raise it. Tredence’s data shows most companies need 2-3 rounds of tuning. Use your own data, not someone else’s guess.
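Both counts can be computed from an audit of past outputs, where each record pairs the model’s confidence with whether a human later judged the output wrong. This is a minimal sketch under that assumption; the `(confidence, had_error)` record format is hypothetical.

```python
def threshold_report(records: list, threshold: float) -> dict:
    """Count review outcomes at a given threshold.

    Each record is (confidence, had_error). An item is flagged when
    confidence < threshold.
    """
    fn = sum(1 for conf, bad in records if conf >= threshold and bad)     # errors that slipped through
    fp = sum(1 for conf, bad in records if conf < threshold and not bad)  # unnecessary reviews
    flagged = sum(1 for conf, _ in records if conf < threshold)           # total review load
    return {"false_negatives": fn, "false_positives": fp, "flagged": flagged}


audit = [(0.95, False), (0.90, True), (0.80, False), (0.70, True), (0.60, False)]
print(threshold_report(audit, 0.85))  # {'false_negatives': 1, 'false_positives': 2, 'flagged': 3}
print(threshold_report(audit, 0.92))  # {'false_negatives': 0, 'false_positives': 2, 'flagged': 4}
```

On this toy audit, moving from 0.85 to 0.92 catches the missed error at the cost of one more item in the review queue.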

Can AI ever fully replace human review?

In low-risk areas, like internal memos or draft social posts, maybe. But for anything that affects customers, compliance, or safety, no. Gartner predicts 100% of regulated industries will keep formal HITL through 2030. AI can reduce review volume, but it can’t replace judgment. Humans understand context, tone, and consequence in ways AI still can’t.

What happens if my reviewers fall behind?

You’ll get delays, and possibly bad decisions. KPMG saw review times spike 22% during peak hours. The fix? Add AI pre-filtering. Let the system highlight only the highest-risk items. Automate routine edits. Use tiered review: simple cases go to junior reviewers, complex ones to experts. Don’t just add more people. Make the system smarter.
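Tiered review can start as a single routing rule. The expert content types and the 0.7 risk cutoff here are hypothetical placeholders; in practice they would come from your own SOPs.

```python
def assign_tier(risk: float, content_type: str,
                expert_types: frozenset = frozenset({"legal", "medical", "financial"}),
                risk_cutoff: float = 0.7) -> str:
    """Route a flagged item to junior or expert reviewers (illustrative rules)."""
    # High-stakes content always goes to experts, regardless of its risk score.
    if content_type in expert_types or risk >= risk_cutoff:
        return "expert"
    return "junior"
```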

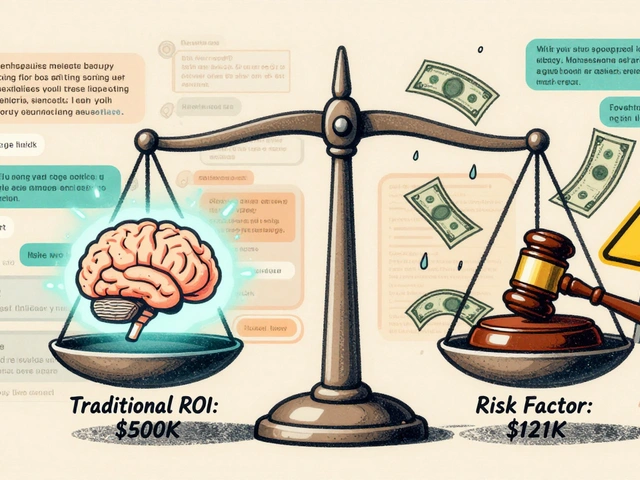

Is HITL expensive to implement?

Not compared to the cost of a single AI mistake. The HITL market grew from $1.2B in 2023 to $2.8B in 2024. Cloud tools like AWS Step Functions are affordable and scalable. The real cost is in training and process design, not software. A well-built HITL system pays for itself by avoiding fines, lawsuits, and reputational damage. One bad customer response can cost more than a year of review tools.

Jess Ciro

4 January, 2026 - 08:40 AM

So let me get this straight-we're paying humans to click buttons so AI doesn't accidentally call a grieving customer 'chill out bro'? Next they'll make us sign a form saying we didn't tell the bot to write a poem for a funeral. This is capitalism's answer to laziness: make humans the glue holding together a system that should've been smarter from day one.

saravana kumar

4 January, 2026 - 16:08

The entire premise is flawed. HITL is not a solution-it is a bandage on a severed artery. If your AI requires human intervention for 90% of outputs, you built it wrong. The industry is conflating compliance with competence. Regulatory mandates are not engineering benchmarks. You don't fix bad AI with more humans-you fix it with better training data, better architectures, and less corporate FUD.

Mark Brantner

5 January, 2026 - 12:14 PM

ok so i read this whole thing and like… the ai is just gonna sit there waiting for a human to say ‘yep that’s fine’?? bro its 2025. why are we still doing this like its 2012? i mean… i guess if you wanna pay people $20/hr to read bot emails… go nuts. but i’m just here waiting for the day ai can be trusted to not be a dumbass without a babysitter.

Kate Tran

5 January, 2026 - 23:01

i think the real issue is nobody trains the humans. i’ve seen teams where the reviewer just clicks approve because they’re overwhelmed. its not the ai’s fault. its the process. if you give someone a checklist and 10 seconds per item, you’re not doing HITL-you’re doing HITS (human-in-the-same-spot-trying-to-survive)

amber hopman

5 January, 2026 - 23:30

I actually think this is the most pragmatic approach we’ve got right now. AI isn’t sentient-it doesn’t understand nuance, sarcasm, or grief. It can mimic empathy, but it can’t feel it. I’ve seen bots apologize for a death with a GIF. Humans catch that. And yes, it’s slower. But it’s safer. We’re not trying to be efficient-we’re trying to be humane.

Jim Sonntag

6 January, 2026 - 13:52

so we’re paying humans to be AI’s emotional support animals now? cool. next they’ll make us hug the chatbot when it’s sad. i mean… if this is what ‘trustworthy AI’ looks like, i’d rather just have the bot say ‘idk ask a human’ and be done with it.

Deepak Sungra

7 January, 2026 - 19:26

honestly? i think the whole thing is overcomplicated. if your ai is making mistakes that need human review, maybe stop using it for customer comms. i work in a call center-we use ai for scheduling and FAQs. no human in the loop. works fine. but for complaints? we hand it to a real person. simple. no buttons. no thresholds. no ‘trusted ai’ policies. just people. because people care.

Krzysztof Lasocki

8 January, 2026 - 17:55

you know what’s wild? the fact that we’re even having this conversation. we spent 20 years trying to make AI autonomous… and now we’re building a whole new industry around making sure it doesn’t act like a drunk intern. congrats, we broke AI. now we’re just patching it with human labor. i’m not mad… i’m just disappointed.