Text-only AI feels like trying to understand a movie by reading the subtitles alone. You get the words, sure, but miss the tone in the voice, the emotion on the face, the way the music builds tension. That’s exactly why multimodal AI is changing everything. It doesn’t just read text. It sees images, hears speech, understands video, and even interprets sensor data, all at once. And it connects the dots between them in ways that text-only models simply can’t.

What Multimodal AI Actually Does

Multimodal AI isn’t just a fancy upgrade. It’s a complete shift in how machines process information. Instead of handling text, images, or audio in separate silos, it treats them as parts of a single, unified experience. Think of it like how your brain works: you don’t see a dog and then hear it bark; you perceive them together as one event. That’s what models like Google’s Gemini, OpenAI’s GPT-4o, and Meta’s Llama 3 now do.

For example, if you show a multimodal AI a photo of a spilled coffee mug next to a laptop with a cracked screen, it doesn’t just describe the image. It understands the context: someone likely knocked over their coffee while working. A text-only model would need you to type that exact sentence. It can’t infer cause and effect from visuals alone.

This isn’t theoretical. In healthcare, multimodal systems analyzing both X-rays and patient notes have cut diagnostic errors by 37.2%, according to a 2024 Stanford study. Why? Because a radiologist doesn’t just look at an image; they cross-reference it with symptoms, age, and medical history. Multimodal AI does the same.

How It Beats Text-Only Systems

Text-only AI has limits. It can’t read facial expressions. It can’t hear sarcasm. It can’t tell if a product review is about a broken screen or a scratched case just from words. Multimodal AI fixes that.

Take customer service. A text-only chatbot might misinterpret a frustrated customer’s message as neutral. But add voice tone analysis and the system detects anger, adjusts its response, and escalates the issue, cutting resolution time by 41%, per Founderz’s 2024 data. Bank of America’s multimodal chatbot resolved 68% of complex cases involving uploaded documents like insurance claims. Text-only bots managed only 42%.
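To make the customer service example concrete, here is a minimal sketch of how a text score and a voice-tone score could be combined into one escalation decision. Everything in it is an assumption for illustration: the `Signals` fields, the thresholds, and the upstream models that would produce the scores are hypothetical, not any vendor’s actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    # Hypothetical outputs of two separate upstream models.
    text_sentiment: float  # -1.0 (very negative) to 1.0 (very positive)
    voice_anger: float     # 0.0 (calm) to 1.0 (very angry)


def should_escalate(s: Signals, anger_threshold: float = 0.7,
                    negative_text_threshold: float = -0.5) -> bool:
    """Escalate if either the words or the voice signal trouble."""
    text_negative = s.text_sentiment < negative_text_threshold
    voice_angry = s.voice_anger >= anger_threshold
    return text_negative or voice_angry


# Neutral wording, angry voice: the text alone would not trigger escalation.
print(should_escalate(Signals(text_sentiment=0.0, voice_anger=0.9)))
```

The point of the sketch is the second signal: a message that reads as neutral can still be escalated when the voice says otherwise.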

Even in marketing, the difference is clear. Unilever used multimodal AI to scan Instagram posts and comments together. They spotted a rising trend in eco-friendly packaging, something their text-only tools missed because users were posting photos with captions like “this is so green!” without using the word “sustainable.” The visual context made all the difference.

The Tech Behind the Magic

Multimodal AI works because of unified architectures. Unlike older systems that ran separate models for images and text, modern ones use a single neural network trained on mixed data. Google’s Gemini, for instance, processes text, images, and audio through the same core model, allowing it to generate a caption from a photo, answer questions about a video, or even write code based on a sketch.

Performance gains are measurable. In vision-language tasks, multimodal models are 34% more accurate than single-modality systems. In medical imaging, combining radiology scans with clinical notes boosts precision by 28.7%. GPT-5, OpenAI’s latest model, handles multimodal queries 2.8 times faster than running text and image models one after another.
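The “single network, mixed data” idea described above can be pictured as one sequence of tokens that happens to contain both image pieces and words. The toy sketch below only illustrates that framing; it is not how Gemini or any specific model is implemented. Real systems map patches and words to learned embeddings, and the function names here are made up for the example.

```python
from typing import List, Tuple

Token = Tuple[str, str]  # (modality tag, raw value)


def tokenize_text(text: str) -> List[Token]:
    """Split on whitespace; real models use subword tokenizers."""
    return [("text", word) for word in text.split()]


def patchify_image(pixels: List[List[int]], patch: int) -> List[Token]:
    """Cut a 2-D grid into patch x patch tiles (row-major), one token per tile."""
    tokens: List[Token] = []
    for r in range(0, len(pixels), patch):
        for c in range(0, len(pixels[0]), patch):
            tile = [row[c:c + patch] for row in pixels[r:r + patch]]
            flat = [value for row in tile for value in row]
            tokens.append(("image", ",".join(map(str, flat))))
    return tokens


def build_sequence(text: str, pixels: List[List[int]], patch: int = 2) -> List[Token]:
    """One combined sequence: image tokens first, then the text prompt."""
    return patchify_image(pixels, patch) + tokenize_text(text)


image = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
for token in build_sequence("what is this", image):
    print(token)
```

Because both modalities end up in the same sequence, a single model can attend across them, which is the contrast with running one image model and one text model back to back.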

But it’s not magic. It’s heavy. Training these models needs serious hardware. NVIDIA says you need at least 80GB of VRAM just to start. That’s why most enterprise users rely on cloud APIs like Google’s Vertex AI or IBM’s Watsonx, which handle the heavy lifting remotely.

Where It Still Falls Short

Multimodal AI isn’t perfect. It struggles when inputs don’t line up cleanly. If you feed it a low-res photo, a blurry video, or a non-standard file format, accuracy drops by 12-15%, according to Tredence’s 2024 analysis. And it’s prone to hallucinations, especially when interpreting satire or irony. A 2025 test by NYU’s Gary Marcus found GPT-4o mistook satirical memes for factual content in 23% of cases.

Bias is another problem. Multimodal systems amplify cultural bias 15.8% more than text-only models, per the Partnership on AI. Why? Because images carry implicit cultural cues (clothing, settings, gestures) that text doesn’t. If the training data skews toward Western norms, the AI will too.
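One practical response to the input-quality problem noted above is a cheap pre-flight check before sending an image anywhere. The sketch below reads the width and height straight from a PNG header using only the standard library. The 512-pixel minimum is an arbitrary assumption for illustration, not a threshold from any provider, and the check handles PNG only.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_dimensions(data: bytes):
    """Return (width, height) from a PNG's IHDR chunk, or None if not a PNG."""
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        return None
    # Width and height are two big-endian 32-bit integers at bytes 16-23.
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def preflight(data: bytes, min_side: int = 512) -> str:
    """Classify an upload as 'ok', 'low-resolution', or 'unsupported-format'."""
    dims = png_dimensions(data)
    if dims is None:
        return "unsupported-format"
    if min(dims) < min_side:
        return "low-resolution"
    return "ok"
```

In use, you would read the file as bytes and branch on the result, for example asking the user for a sharper photo instead of paying for a request that is likely to come back wrong.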

And then there’s cost. MIT’s 2024 research shows multimodal models use 3.5x more processing power than text-only ones. That means higher cloud bills and slower responses on weak networks. For small businesses or developers without access to high-end GPUs, sticking with text-only might still make sense.
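To see what that 3.5x figure could mean for a budget, here is a back-of-the-envelope estimate. It assumes, purely for illustration, that cost scales linearly with processing power and that only a share of your traffic actually needs multimodal handling. The per-request price and request volume are made-up inputs, not real pricing.

```python
MULTIMODAL_COMPUTE_FACTOR = 3.5  # the multiplier quoted above


def monthly_cost(requests: int, text_cost_per_request: float,
                 multimodal_share: float) -> float:
    """Estimate monthly spend when a fraction of requests is multimodal.

    Assumes cost is proportional to compute, which is a simplification.
    """
    if not 0.0 <= multimodal_share <= 1.0:
        raise ValueError("multimodal_share must be between 0 and 1")
    text_requests = requests * (1.0 - multimodal_share)
    multimodal_requests = requests * multimodal_share
    weighted = text_requests + multimodal_requests * MULTIMODAL_COMPUTE_FACTOR
    return text_cost_per_request * weighted


# Hypothetical workload: 100,000 requests a month at $0.002 per text request.
for share in (0.0, 0.2, 1.0):
    print(f"{share:.0%} multimodal: ${monthly_cost(100_000, 0.002, share):,.2f}")
```

Under these assumptions, routing only the requests that need images or audio to the multimodal path keeps most of the savings of a text-only setup.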

Real-World Adoption Is Already Here

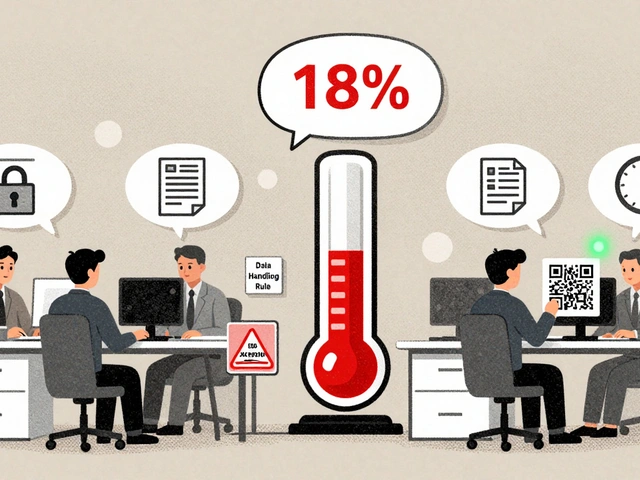

This isn’t a future trend. It’s happening now. Gartner reports the multimodal AI market hit $18.7 billion in 2024, growing at nearly 50% a year. Healthcare leads adoption (28% of the market), followed by customer service (24%) and retail (19%).

Eighty-three percent of Fortune 500 companies have rolled out multimodal tools. Coca-Cola cut its product development cycle from 14 months to 7 by starting small: first using image-to-text captioning to analyze social media visuals, then expanding to full multimodal analysis.

Among individual developers, adoption is slower. Only 16% use multimodal AI, per SlashData. Why? Complexity. Developers report a 4-6 month learning curve to transition from text-only to multimodal systems. And 87% of negative reviews on G2 cite high GPU requirements as the main barrier.

What’s Next?

The next wave is embodied AI: systems that combine vision, audio, and physical sensing. NVIDIA’s Project GR00T, announced in September 2025, lets robots interpret touch, sound, and sight together to navigate real-world environments. Imagine a robot that sees a spilled drink, hears a person say “I need a towel,” and feels the wet floor under its feet, then combines all three to respond appropriately.

Google’s Gemini 1.5 now handles 1 million tokens of context, meaning it can analyze entire movies with synced subtitles. Meta’s Llama 3.1 improved non-English multimodal understanding by 38.7% across 200 languages. OpenAI’s GPT-4o update slashed cross-modal latency by 42%.

Ninety-one percent of AI researchers predict that all generative AI will be multimodal by 2028. The question isn’t whether it’ll become standard. It’s how fast your industry will catch up.

Should You Use It?

If you’re in healthcare, finance, customer service, or marketing, yes. The ROI is clear: faster decisions, fewer errors, better customer experiences.

If you’re a small team with limited resources, start simple. Try image captioning first. Use an API like Vertex AI or OpenAI’s GPT-4o. Don’t try to build everything at once. Coca-Cola didn’t. Neither should you.
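If you want a feel for what “try image captioning first” looks like in practice, the sketch below builds a request body in the shape OpenAI’s Chat Completions API uses for image input, as far as I understand it: a user message whose content mixes a text part and an `image_url` part carrying a base64 data URL. It only builds the payload and does not send anything. The prompt wording and the `build_caption_request` helper are my own assumptions, so check the current API reference before relying on it.

```python
import base64
import json


def build_caption_request(image_bytes: bytes, mime_type: str = "image/png",
                          model: str = "gpt-4o") -> dict:
    """Build (but do not send) a captioning request with one inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    # Illustrative prompt; adjust to your use case.
                    {"type": "text",
                     "text": "Write a one-sentence caption for this image."},
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real image file read with open(path, "rb").
payload = build_caption_request(b"not-a-real-image")
print(json.dumps(payload, indent=2)[:200])
```

Sending the payload is then a single authenticated POST through the provider’s SDK or an HTTP client, which is where the “no GPU required” part comes from: the heavy processing happens on their side.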

And if you’re just experimenting with text prompts? Stick with text-only for now. Multimodal AI adds complexity. Only add it when you need to understand what’s beyond the words.

What’s the difference between multimodal AI and text-only AI?

Text-only AI processes only written language. Multimodal AI understands and connects multiple types of input (text, images, audio, video, and more) simultaneously. This lets it grasp context, emotion, and relationships that words alone can’t convey, leading to more accurate and human-like responses.

Is multimodal AI better than text-only AI?

In most real-world scenarios, yes. Multimodal AI reduces errors in healthcare diagnostics by over 37%, improves customer service resolution by 41%, and detects trends from social media images that text models miss. But for tasks involving only text, like analyzing legal contracts, it can be less accurate due to unnecessary processing of irrelevant visual data.

What are the biggest challenges with multimodal AI?

The main challenges are computational cost (3.5x more power than text-only), high hardware demands (80GB+ VRAM), data alignment issues (67% of enterprises report syncing problems), and increased bias (15.8% higher than text-only). It also struggles with low-quality or unusual inputs like blurry images or non-standard file formats.

Which companies lead in multimodal AI?

Google (Gemini), OpenAI (GPT-4o and GPT-5), and Meta (Llama 3) are the leaders. Google holds 32% of the market, OpenAI 28%, and Anthropic 15%. Specialized players like Runway ML focus on creative applications, while IBM and Microsoft offer enterprise-grade multimodal APIs with strong security and compliance features.

Can I use multimodal AI without a powerful GPU?

Yes, but not locally. You can use cloud APIs like Google’s Vertex AI, OpenAI’s API, or IBM’s Watsonx. These handle the heavy processing on their servers. You just send your data (image, text, audio) and get the result back. This is how most businesses use it, without owning expensive hardware.

What industries benefit most from multimodal AI?

Healthcare (analyzing scans + patient records), customer service (voice + text + document uploads), retail (image-based trend detection), and marketing (social media analysis combining visuals and captions). These fields see the clearest ROI because they rely on context that goes beyond words.

Will multimodal AI replace text-only models?

Not entirely. Text-only models are still faster, cheaper, and more accurate for pure text tasks, like summarizing legal documents or translating formal reports. But for any application where context matters (emotion, visuals, sound), multimodal AI is becoming the standard. By 2028, nearly all generative AI systems will include multimodal capabilities, but text-only will remain useful for niche, low-resource use cases.

Rohit Sen

21 December, 2025 - 18:14 PM

Look, I get the hype, but multimodal AI is just text models with extra steps. Most of these ‘breakthroughs’ are just overfitting to curated datasets. Give me a break with the 37% error reduction claims. Where’s the peer review? I’ve seen more reliable results from a well-tuned regex.

Vimal Kumar

22 December, 2025 - 08:27 AM

I think this is actually huge, especially for folks in places like rural India where voice and image-based interfaces can bridge literacy gaps. I’ve seen community health workers use simple image-to-text tools to log symptoms when they can’t type. It’s not about fancy tech. It’s about making AI useful where it’s needed most.

Amit Umarani

23 December, 2025 - 22:23 PM

You missed a comma after ‘GPT-4o’ in the third paragraph. Also, ‘80GB of VRAM’ should be ‘80 GB’. And ‘non-standard file format’? Singular or plural? You’re inconsistent. And why are you using ‘$18.7 billion’ with a dollar sign but ‘3.5x’ without a multiplication symbol? Sloppy.

Noel Dhiraj

24 December, 2025 - 17:02 PM

Man, I started playing with Gemini’s image captioning last week and it’s wild how it just gets the vibe of a photo. Like, I uploaded a pic of my chai spill at work and it said ‘morning disaster’, exactly what I was thinking. No need to overcomplicate it. Just let it see the mess and get it. That’s all I want.

vidhi patel

25 December, 2025 - 03:52 AM

It is imperative to note that the assertion regarding a 37.2% reduction in diagnostic errors lacks a verifiable citation from a peer-reviewed journal. Furthermore, the reference to Stanford’s 2024 study is not traceable via public academic databases. Such unsubstantiated claims undermine the credibility of the entire article. I urge you to retract and revise accordingly.

Priti Yadav

26 December, 2025 - 00:38 AM

They’re not making AI understand context. They’re training it on government and corporate data to predict what you’ll do next. Those ‘emotional’ voice analyses? They’re building behavioral profiles. And don’t get me started on how they use your Instagram pics to feed ad algorithms. This isn’t progress. It’s surveillance with a smiley face.

Ajit Kumar

26 December, 2025 - 01:34 AM

While the general premise of multimodal AI is not without merit, one must acknowledge the profound epistemological limitations inherent in conflating disparate modalities under a singular inferential framework. The human brain does not ‘perceive’ a dog and its bark as a unified event; it processes sensory inputs through distinct cortical pathways, then synthesizes them via higher-order cognitive integration. To suggest that a neural network trained on a dataset of 10^15 parameters can replicate this process is not only reductive, it is ontologically misleading. Moreover, the claim that GPT-5 handles multimodal queries ‘2.8 times faster’ is statistically dubious without a clear baseline comparison. Is this relative to sequential processing? Parallelized inference? The absence of methodological transparency renders these assertions unscientific.

Diwakar Pandey

27 December, 2025 - 05:52 AM

I’ve been using GPT-4o’s image analysis for my small design side hustle. It’s not perfect, but it’s saved me hours. I just drop a sketch and ask for color suggestions or layout ideas. No need to write paragraphs. It’s like having a quiet coworker who gets your vibe. The cost is still high, but the cloud APIs make it doable. Just don’t expect magic. Expect a tool that helps you think better.