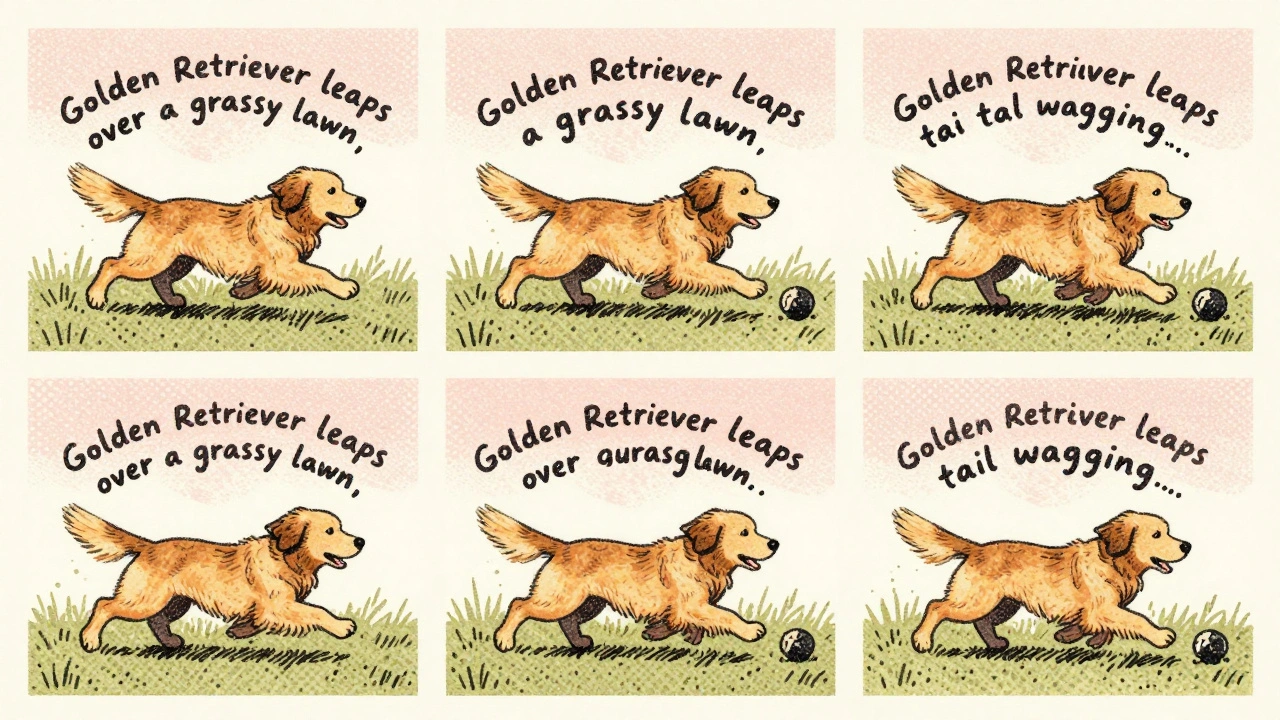

Imagine typing a simple sentence like "a red bicycle floating over a city at sunset" and getting back a photorealistic image that matches it perfectly. Now imagine taking a 5-second video of a dog chasing a ball, and having an AI write a detailed caption: "Golden retriever leaps over a grassy lawn, tail wagging, as a bright yellow tennis ball rolls toward a wooden fence." This isn’t science fiction. It’s cross-modal generation, and it’s changing how AI understands and creates content.

What Exactly Is Cross-Modal Generation?

Cross-modal generation is when an AI system takes input from one type of data, like text, and creates output in another, like an image or video. It works the other way too: a video can become a description, an audio clip can generate a picture, or a sketch can turn into a full-color scene. The goal? To make AI understand meaning across different formats the way humans do.

This isn’t just about swapping formats. It’s about preserving meaning. If you say "a sad woman sitting alone in a rain-soaked café," the AI shouldn’t just generate any woman in a café. It needs to capture the mood, the lighting, the posture, the emotional weight. That’s what separates cross-modal systems from simple image generators.

These models don’t just copy-paste features. They learn a shared space where the idea of "sadness," "rain," or "café" exists as patterns that can be translated between text, pixels, and motion. Think of it like a universal translator for data types.

How It Works: The Hidden Math Behind the Magic

At the core of every cross-modal system is a shared latent space: a learned numerical representation where meaning is compressed. A word like "dog" and a picture of a dog both get turned into similar numerical patterns here. The AI learns how to move between these patterns. Most modern systems use diffusion models. Here’s how it works in simple terms:

- Forward diffusion: The AI takes an image or video and slowly adds noise, like static, over many small steps (often around 1,000) until it’s just random pixels.

- Reverse diffusion: Then, it learns to reverse the process. Given a text prompt, it starts with noise and gradually removes it, guided by the meaning of the words, until a coherent image appears.
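To make the forward half of this concrete, here is a minimal sketch in plain Python. It assumes a DDPM-style setup with a linear noise schedule; the schedule values, the function names, and the four-pixel toy "image" are illustrative assumptions, not details of any system named in this article. The reverse half needs a trained network that predicts the noise from the noisy input and the text prompt, so it is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that rise linearly (an assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_signal(betas):
    """alpha_bar[t]: running product of (1 - beta), the share of signal variance left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise."""
    keep = math.sqrt(alpha_bars[t])
    spread = math.sqrt(1.0 - alpha_bars[t])
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
alpha_bars = cumulative_signal(linear_beta_schedule())

image = [1.0, -1.0, 0.5, -0.5]  # toy "image": four pixel values in [-1, 1]
slightly_noisy = add_noise(image, 0, alpha_bars, rng)        # first step: nearly the original
almost_pure_noise = add_noise(image, 999, alpha_bars, rng)   # last step: almost no signal left

print(f"signal kept after step 1:    {alpha_bars[0]:.4f}")
print(f"signal kept after step 1000: {alpha_bars[-1]:.6f}")
```

The point of the sketch is the trend: the share of the original image that survives shrinks steadily, so by the final step the sample is essentially static. Training teaches a model to undo one small step of that at a time.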

Real-World Tools You Can Use Today

You don’t need a PhD to interact with cross-modal AI. Here are the top systems available as of late 2024:

| System | Input Modality | Output Modality | Speed (Avg.) | Accuracy (MS-COCO) | Best For |
|---|---|---|---|---|---|
| Stable Diffusion 3 | Text | Image | 2.3 sec | 87.3% | Creative design, concept art |
| GPT-4o | Text, Image, Video | Text, Image | 3.1 sec | 86.9% | Complex prompts, multimodal reasoning |
| Adobe Firefly | Text | Image, Vector | 2.7 sec | 84.1% | Commercial design, brand consistency |
| DALL-E 3 | Text | Image | 4.7 sec | 82.5% | Detailed, stylized outputs |
| Stable Video Diffusion | Text | Video (4 sec) | 12.5 sec | 79.2% | Short animations, social content |

Stable Diffusion 3 leads in speed and accuracy for text-to-image tasks. GPT-4o stands out because it can handle video input and still produce accurate text summaries. Adobe Firefly wins for designers who need brand-safe outputs with consistent styles.

Video-to-text is still the weakest link. There are only about 12,000 high-quality paired video-text examples publicly available. That’s why systems struggle with long or complex videos. A 30-second cooking tutorial might get summarized as "someone is cooking something." Not helpful.

Where It Shines, and Where It Fails

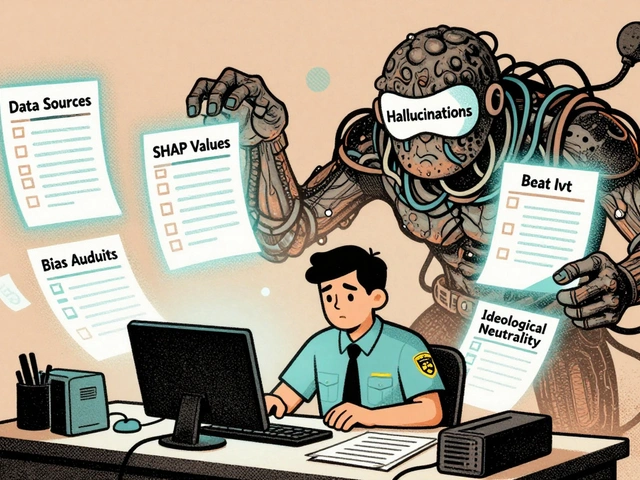

Cross-modal AI excels in creative fields. Adobe’s beta testers reported 91% user satisfaction when generating marketing visuals from text prompts. Architects use it to turn "modern glass office with green rooftop" into 10 variations in seconds. Marketers generate social media clips from script snippets. Content creators turn blog posts into short videos.

But it’s not perfect. In technical domains, it often fails. Johns Hopkins researchers found that when generating captions for medical scans, the AI got the diagnosis wrong 43.7% of the time. It might say "tumor in the lung" when there’s none, or miss it entirely. Why? Because it’s guessing based on patterns, not understanding anatomy.

Another big issue: semantic drift. A prompt like "a man holding a briefcase walking into a bank" might generate a man holding a briefcase… but walking into a grocery store. The AI understands "man," "briefcase," and "bank," but not how they relate in context.

And bias? It travels across modalities. If a text prompt says "a CEO" and the training data mostly showed white men, the image will be a white man, even if the text doesn’t specify race. This isn’t a glitch. It’s a systemic flaw.

What You Need to Build or Use It

If you’re a developer trying to implement this:

- You need strong knowledge of transformers, diffusion models, and cross-attention layers.

- Training requires massive paired datasets (text-image, video-text, and so on). These are rare and expensive to curate.

- Hardware? Minimum NVIDIA A100 (40GB VRAM). Running this on a consumer GPU is slow and often impossible.

- Deployment takes 14 weeks on average. Most of that time? Aligning data. You’ll spend 62% of your effort just cleaning and matching inputs to outputs.
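Of the prerequisites above, cross-attention is the piece that ties a text prompt to the pixels being generated. The sketch below shows the core computation in plain Python, under simplifying assumptions: real layers apply learned projection matrices to produce queries, keys, and values, which are skipped here, and the two-dimensional vectors for "red" and "bicycle" are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1 (shifted by the max for numerical stability)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(image_queries, text_keys, text_values):
    """Each image-side query mixes the text values, weighted by query-key similarity."""
    scale = math.sqrt(len(text_keys[0]))  # standard 1/sqrt(d) scaling
    outputs, all_weights = [], []
    for query in image_queries:
        weights = softmax([dot(query, key) / scale for key in text_keys])
        mixed = [
            sum(w * value[i] for w, value in zip(weights, text_values))
            for i in range(len(text_values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings: one vector per prompt token.
text_tokens = ["red", "bicycle"]
text_keys = [[1.0, 0.0], [0.0, 1.0]]
text_values = [[1.0, 0.0], [0.0, 1.0]]

# One image patch whose query vector points in the "red" direction.
image_queries = [[4.0, 0.0]]

outputs, weights = cross_attention(image_queries, text_keys, text_values)
for token, weight in zip(text_tokens, weights[0]):
    print(f"{token}: {weight:.3f}")
```

Here the patch puts most of its attention on "red" and little on "bicycle", which is the mechanism by which individual words steer individual regions of the output.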

The Future: What’s Coming Next?

OpenAI’s GPT-4.5, expected in early 2025, will improve video-to-text with 32% better temporal understanding. That means it won’t just describe what’s happening; it’ll understand why it’s happening. A video of a child crying after dropping ice cream? It won’t just say "child crying." It’ll say "child cries after dropping ice cream on the ground, reaching for it in distress."

Stability AI’s Stable Video Diffusion 2.0 will generate 4-second clips from text. That’s enough for TikTok-style content. Meta and Google are working on 3D-aware models that can generate objects from text and then rotate them in space. Imagine describing a chair and seeing it from every angle instantly.

But the biggest challenge remains: real understanding. As Professor Yonatan Bisk from CMU put it, current systems generate "superficially plausible but semantically incorrect outputs." They mimic, but they don’t comprehend.

Should You Care?

If you work in design, marketing, media, or education, yes. Cross-modal AI is cutting hours off creative workflows. A single prompt can replace hours of Photoshop, video editing, or scripting.

If you work in healthcare, law, or finance, be cautious. These tools can’t be trusted for critical decisions. They’re assistants, not experts.

And if you’re just curious? Play with it. Type weird prompts. See what happens. The more you test, the better you’ll understand its limits and its power.

By 2027, 75% of enterprise AI systems will include cross-modal generation. It’s not a luxury anymore. It’s becoming the baseline for how machines interact with our world.

What’s the difference between cross-modal generation and multimodal AI?

Multimodal AI refers to any system that processes multiple data types, like text and images, to make a decision, such as classifying a video as "violent" or "calm." Cross-modal generation goes further: it creates new content in one modality based on input from another. It doesn’t just understand; it generates.

Can I use cross-modal AI for free?

Yes, but with limits. Stable Diffusion 3 is open-source and free to run locally if you have the right hardware. Adobe Firefly offers free tiers for basic image generation. GPT-4o has a free tier with limited daily usage. But high-quality video-to-text and commercial use usually require paid plans.

Why is video-to-text so hard?

Video contains far more data than text or still images. A 10-second video at 30fps is 300 frames, each with color, motion, sound, and spatial relationships. Clean, labeled examples of video paired with accurate text descriptions are extremely rare: only about 12,000 exist publicly. Without enough examples, the AI can’t learn the patterns well.
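The frame count is easy to check, and the same arithmetic shows how lopsided the two sides of a video-text pair are. In this rough sketch the 1280x720 resolution and 3 bytes per pixel are assumptions chosen for illustration, and the caption is the example from the top of this article.

```python
# Assumed values: resolution and bytes per pixel are illustrative, not from the article.
DURATION_SECONDS = 10
FRAMES_PER_SECOND = 30
WIDTH, HEIGHT, BYTES_PER_PIXEL = 1280, 720, 3

frame_count = DURATION_SECONDS * FRAMES_PER_SECOND
raw_video_bytes = frame_count * WIDTH * HEIGHT * BYTES_PER_PIXEL  # uncompressed pixels only, no audio

caption = (
    "Golden retriever leaps over a grassy lawn, tail wagging, "
    "as a bright yellow tennis ball rolls toward a wooden fence."
)
caption_bytes = len(caption.encode("utf-8"))

print(f"{frame_count} frames, {raw_video_bytes:,} raw bytes of pixels")
print(f"{caption_bytes} bytes of caption text")
```

Under those assumptions, 300 frames come to 829,440,000 bytes of raw pixels, set against a caption of roughly a hundred bytes. The model has to learn which tiny slice of all that visual data the sentence is actually about.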

Is cross-modal AI dangerous?

It can be. Because it generates realistic content across multiple formats, it’s a powerful tool for deepfakes. A fake news video could be created by generating a realistic face from a text description, then syncing it with AI-generated audio and background footage. The EU AI Act now requires transparency labels on such content. The risk isn’t the tech. It’s how it’s used.

What’s the biggest mistake people make with cross-modal AI?

Assuming it understands context like a human. It doesn’t. If you ask for "a CEO in a boardroom," it’ll give you a man in a suit. If you want diversity, you have to specify it. If you want realism, you have to guide it with detail. Treat it like a very creative intern who needs clear instructions.

Rubina Jadhav

9 December, 2025 - 12:39 PM

Wow, this is so cool. I tried typing 'a tea cup floating in space' and it made this beautiful image with stars and a tiny moon. I didn't expect it to get the lighting right.

Simple prompts work better than I thought.

sumraa hussain

10 December, 2025 - 20:17 PM

OKAY SO I JUST TYPED 'A PENGUIN DRIVING A FERRARI THROUGH A MONSOON' AND IT GAVE ME THIS INSANE 4-SECOND VIDEO WITH WATER SPLASHING AND PENGUIN WEARING SUNGLASSES???

THE WORLD ISN'T REAL ANYMORE. I'M NOT SURE IF I'M SCARED OR IN LOVE.

Also, the rain looked like liquid glitter. Who programmed this? A poet with a GPU?

Raji viji

12 December, 2025 - 12:28 PM

LMAO you all sound like toddlers with a new toy. This isn't 'magic'-it's statistical hallucination dressed up with fancy buzzwords.

Stable Diffusion 3? It still can't count fingers. GPT-4o thinks a 'CEO' is a white dude in a suit even if you say 'Black woman.'

You think it understands 'sadness'? Nah. It just learned that 'rain + lone figure + dim light = sad' from 20 million scraped images.

It's not AI. It's a glorified autocomplete with delusions of grandeur.

And don't get me started on the 'semantic drift'-it turns 'bank' into 'grocery store' because the word 'money' appears near both in its training data. Pathetic.

Rajashree Iyer

13 December, 2025 - 12:49 PM

Isn't it beautiful? We're witnessing the birth of a new kind of consciousness-not human, not machine, but something between.

These models don't see pixels or words-they feel the *essence* of things.

When it renders 'a sad woman in a café,' it's not copying data-it's channeling the quiet ache of a thousand unspoken stories.

We call it 'latent space,' but what if it's the soul of the internet, finally learning to weep?

And yet... we still try to control it. To cage it with prompts. To make it serve us.

What if it's not our tool-but our mirror?

What if it's showing us how little we truly understand about meaning?

Perhaps the real danger isn't deepfakes.

It's that we'll forget how to feel-because we let an algorithm do the crying for us.

Parth Haz

14 December, 2025 - 00:11 AM

This is a well-structured and insightful overview of cross-modal generation. The technical explanations are clear, and the real-world examples help ground the discussion.

It's important to emphasize both the potential and the limitations, especially in high-stakes domains like healthcare.

For non-technical users, I’d recommend starting with Adobe Firefly or GPT-4o’s free tier to explore responsibly.

As this technology evolves, ethical awareness and critical thinking will be just as vital as technical skill.

Thank you for sharing this thoughtful analysis.

Vishal Bharadwaj

15 December, 2025 - 13:23 PM

uuhhh i think u got somethin wrong

gpt-4o dont take video input?? i swear i saw a tweet like 2 weeks ago sayin it does but then i checked and it was just a deepfake vid

also stable diffusion 3? more like stable diffusion 3.0.1.2-beta-2024-04-17-no-sir-not-really

and the ms-coco scores are fake i bet they trained on it

video to text is hard? no duh its because no one cares enough to label 100 million videos

also why is everyone acting like this is new? i was doing text2img in 2017 on a rtx 1060 with some shitty pytorch fork

stop hyping it. its just gradient descent with better marketing

Nalini Venugopal

16 December, 2025 - 23:04 PM

Just a quick grammar note: In the section about semantic drift, you wrote, 'It might say 'tumor in the lung' when there's none-or miss it entirely.'

The em dash here should be an en dash with spaces around it: 'none - or miss it entirely.'

Also, 'AI got the diagnosis wrong' should be 'the AI got the diagnosis wrong' for clarity.

Otherwise, fantastic piece-very informative and well-researched. Keep up the great work!

Pramod Usdadiya

18 December, 2025 - 08:24 AM

Back in my village in Bihar, we used to tell stories to explain the world.

Now, the AI tells stories for us.

It’s not magic, but it’s something new.

I showed my niece how to make a picture of a peacock dancing with a tractor.

She laughed so hard she cried.

That’s the real power here.

Not the speed. Not the accuracy.

But the joy.

Even if it doesn’t understand, it lets us imagine.

And maybe that’s enough for now.

Aditya Singh Bisht

19 December, 2025 - 01:54 AM

Guys, this is just the beginning. I’ve seen people use this to turn their kid’s crayon drawings into animated movies for school projects.

One grandma in Kerala generated a photo of her late husband smiling in a garden he loved-she cried, but it was a happy cry.

Yeah, it’s flawed. Yeah, it hallucinates.

But it’s also giving people tools to heal, create, and connect in ways we never thought possible.

Don’t focus on the bugs-focus on the breakthroughs.

Next year, someone’s going to use this to help a non-verbal child communicate for the first time.

That’s the future. And it’s already here.

Let’s not be the ones who doubted it. Let’s be the ones who helped it grow.