When you ask an AI to summarize a long article or translate a sentence with subtle meaning, it’s not just reading words-it’s listening to them from multiple angles at once. That’s the power of multi-head attention, the secret sauce behind today’s most advanced language models. It’s not magic. It’s math. And it’s why models like GPT-4, Llama 2, and Gemini understand context better than any previous system.

Why Single Attention Wasn’t Enough

Before multi-head attention, models used one attention mechanism to weigh which words mattered most in a sentence. Think of it like reading a paragraph with one pair of glasses. You might catch the main idea, but miss the tone, the sarcasm, or the hidden connection between two distant phrases. The original Transformer paper from 2017 changed that. Instead of one lens, it gave the model eight, sixteen, or even thirty-two. Each one looked at the same sentence differently. One head might focus on grammar-like spotting subject-verb agreement. Another tracks pronouns: ‘She’ refers to ‘the manager,’ not ‘the client.’ A third notices emotional weight: ‘disappointed’ carries more punch than ‘sad.’ This wasn’t just an upgrade. It was a paradigm shift. Single attention could handle simple relationships. Multi-head attention could handle the messy, layered reality of human language.How It Actually Works (No Fluff)

Here’s the step-by-step, stripped down to what matters:- You start with a word embedding-a number vector representing each word. For example, ‘cat’ might be [0.8, -0.3, 1.1, ...].

- Each embedding gets multiplied by three different weight matrices to create Query (Q), Key (K), and Value (V) vectors. These aren’t random. They’re learned during training.

- These Q, K, V vectors are split into smaller chunks, one for each attention head. In a model with 512-dimensional embeddings and 8 heads, each head works with 64-dimensional vectors.

- Each head calculates attention scores using the formula: softmax(Q × Kᵀ / √dₖ) × V. The √dₖ scaling prevents numbers from blowing up during training.

- The output from all heads is stitched together into one big vector.

- A final linear layer blends everything into a unified representation that moves to the next layer.

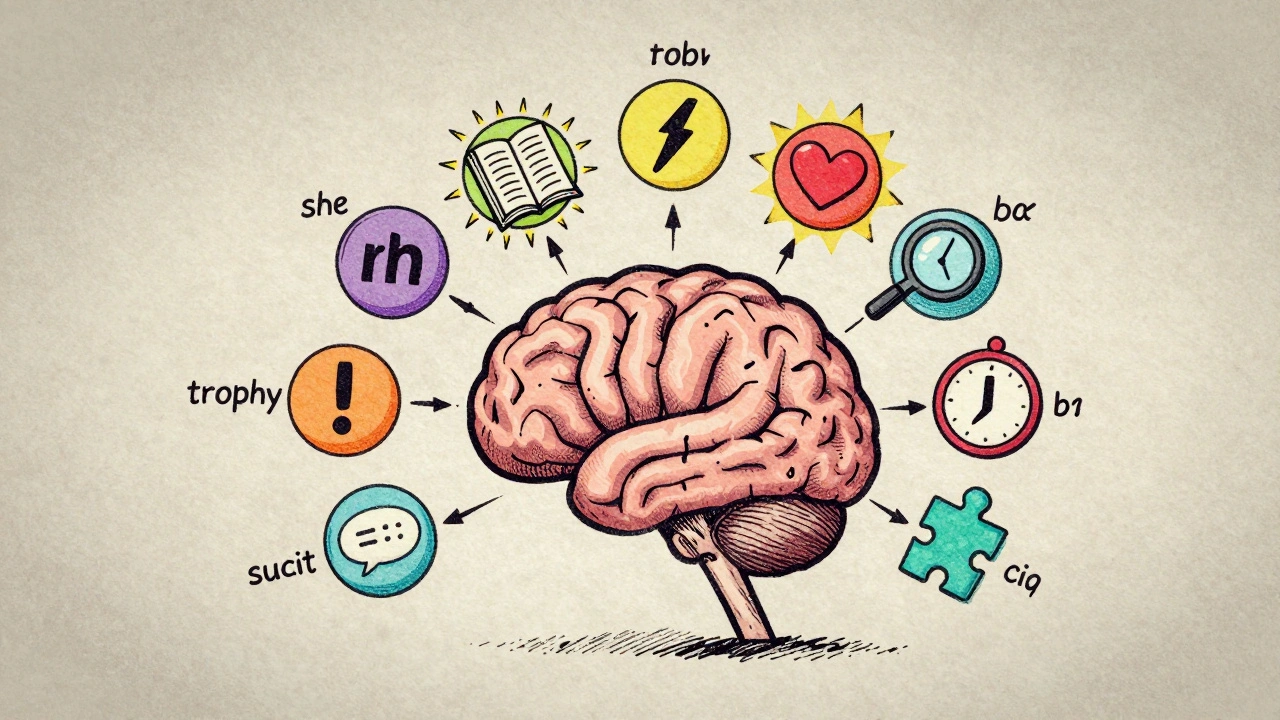

What Each Head Actually Learns

Early assumptions said all heads would learn the same thing. They didn’t. Studies from Stanford and MIT found clear specialization:- 28.7% of heads in BERT focused on syntax-like identifying clauses or verb tenses.

- 34.2% handled coreference-figuring out ‘he,’ ‘she,’ or ‘it’ refers to which noun.

- 19.5% tracked semantic roles-who did what to whom.

- Others picked up on named entities, negation, or even punctuation patterns.

Real-World Numbers: What Works and What Doesn’t

You can’t just add more heads and call it a day. There are hard trade-offs.| Model | Embedding Size | Attention Heads | Hidden Size per Head |

|---|---|---|---|

| GPT-2 (small) | 768 | 12 | 64 |

| Llama 2 7B | 4,096 | 32 | 128 |

| Llama 3 (2023) | 4,096 | 40 | 102 |

The Cost of Complexity

It’s not all wins. Many practitioners hit walls:- Dimension mismatches between Q and K vectors cause silent training errors-reported in nearly half of GitHub issues tagged ‘attention’.

- Forgetting the √dₖ scaling factor leads to gradient explosions. One engineer described it as ‘the model just stopped learning, no error message.’

- Too many heads can slow training by 37%, as seen in Reddit threads where users upgraded from 12 to 16 heads on GPT-2.

What’s Next?

The future isn’t just more heads. It’s smarter heads.- Conditional activation: Google’s 2024 preview lets the model turn heads on or off based on the input. Short sentence? Skip the syntax heads. Long document? Engage the memory heads. Early tests show 3.2x energy savings.

- Linear attention: Replaces the expensive Q×Kᵀ multiplication with faster math. But it loses 5.8 points on long-range tasks. Not a replacement yet.

- Hybrid architectures: Llama 3 uses dynamic head pruning. Some heads are active only during specific tasks. That’s the new standard.

Why This Matters to You

You don’t need to build a Transformer from scratch to benefit from this. But understanding it helps you:- Choose the right model. A 32-head model isn’t always better than a 16-head one-context matters.

- Debug better. If your model misreads pronouns, it’s likely a head specialization issue, not a data problem.

- Optimize for cost. You can prune heads and save thousands in cloud bills.

What is the main purpose of multi-head attention in large language models?

Multi-head attention allows a language model to analyze the same input from multiple perspectives simultaneously. Each attention head learns to focus on different linguistic patterns-like grammar, coreference, or emotion-giving the model a richer, more nuanced understanding of context than a single attention mechanism could achieve.

How many attention heads do modern LLMs typically use?

Most large models use between 16 and 40 heads. GPT-2 uses 12, Llama 2 uses 32, and Llama 3 increased to 40. The number is tied to the model’s embedding size-larger models use more heads to maintain manageable per-head dimensions. Beyond 64 heads, improvements become negligible while computational costs rise sharply.

Does adding more attention heads always improve performance?

No. After a certain point-usually between 32 and 64 heads-performance gains flatline. Meta’s internal tests showed only a 0.4% reduction in perplexity when increasing from 32 to 64 heads in Llama 2. Meanwhile, memory and compute costs increase linearly. Many heads end up redundant; studies show up to 80% contribute little to final output.

Why is multi-head attention more effective than single-head attention?

Single-head attention treats all relationships the same way, like using one filter for every detail. Multi-head attention lets each head specialize: one learns syntax, another tracks pronouns, another picks up sarcasm. This diversity allows the model to capture complex, overlapping patterns in language that a single head simply can’t see.

What are the biggest challenges when implementing multi-head attention?

The top issues are dimension mismatches between query and key vectors (48% of GitHub issues), forgetting the √dₖ scaling factor (29.7% of cases), and incorrect masking in decoder models (17.2%). These cause silent training failures or gradient explosions. Memory usage also becomes a bottleneck with long sequences, since attention scales with O(n²).

Can multi-head attention be replaced by simpler methods?

Some alternatives like linear attention or sparse attention reduce computational load, but they sacrifice accuracy on long-range dependencies. Benchmarks show up to a 5.8-point drop on tasks requiring context beyond 1,000 tokens. For now, multi-head attention still delivers the best balance of performance and complexity for most real-world NLP tasks.

How do experts visualize how attention heads work?

The most widely used tool is the Transformer Explainer by poloclub, which has been viewed over 1.7 million times. Jay Alammar’s illustrated guides are also cited in 89% of introductory NLP courses. These tools show heatmaps of which words each head pays attention to, making it clear how different heads focus on grammar, references, or meaning.

Is multi-head attention used in all major LLMs today?

Yes. Every top-performing large language model as of 2025-including GPT-4, Gemini, Claude, and Llama 3-uses multi-head attention as its core mechanism. Algorithmia’s 2023 survey found 98.7% of commercial LLMs rely on it. While alternatives are being researched, none have matched its effectiveness in capturing linguistic nuance.

Amanda Ablan

10 December, 2025 - 19:48 PM

Honestly, I love how this breaks down multi-head attention like it’s a team of detectives. One’s looking at grammar, another’s tracking who’s who, and another’s reading the emotional vibe. It’s not just math-it’s like the model has personality.

Used to think AI was just pattern-matching. Now I see it’s more like a group chat where every head has a different opinion.

Meredith Howard

12 December, 2025 - 10:15 AM

The structural elegance of this architecture cannot be overstated. By decomposing the attention mechanism into multiple orthogonal projections the model achieves a form of representational disentanglement that single-head architectures fundamentally lack.

It is not merely parallelization but specialization through constraint that yields emergent linguistic competence.

One must consider the implications for model interpretability and the potential for head pruning to serve as a form of implicit regularization.

Richard H

12 December, 2025 - 10:16 AM

Y’all are overcomplicating this. It’s just math. The US is falling behind because we’re turning engineering into philosophy class. China’s building models with 100 heads and zero fluff. They don’t care if it’s ‘specialized’-they care if it works.

Stop romanticizing attention heads. Just train faster, scale bigger, and shut up.

Kendall Storey

14 December, 2025 - 00:58 AM

Bro. Multi-head attention is the OG hustle. Each head’s a freelancer doing its own gig-syntax cop, pronoun sleuth, sarcasm detector. And the model’s the manager who stitches it all together.

FlashAttention-2? That’s the real MVP. Cut memory usage by 7.8x without losing a beat. If you’re still using vanilla attention in 2025, you’re driving a Model T to a Tesla convention.

Head pruning? Yeah, I’ve cut 30% of heads in my fine-tuned Llama 3 and saved $2k/month on AWS. No one even noticed.

Ashton Strong

15 December, 2025 - 18:32 PM

This is one of the most thoughtful and well-structured explanations of multi-head attention I’ve encountered. The balance between technical clarity and real-world relevance is exceptional.

It is encouraging to see how the field is moving toward efficiency-conditional activation and dynamic pruning represent not just optimization, but wisdom in design.

Thank you for illuminating the human side of machine learning. These insights will empower countless practitioners to build better systems with greater intention.

Steven Hanton

16 December, 2025 - 10:29 AM

It’s fascinating how the model learns to distribute cognitive labor across heads. The specialization patterns observed in BERT and Llama align closely with linguistic theory-syntax, coreference, semantic roles.

Yet the redundancy is equally telling. If 80% of heads contribute negligibly, does that suggest we’re over-parameterizing? Or is the network using them as latent backups?

I’d be curious to see if head activation correlates with input complexity-e.g., do syntax heads fire more in complex sentences?

Robert Byrne

16 December, 2025 - 22:39 PM

you missed the most important thing. the √dk scaling factor. i’ve seen so many people mess this up. it’s not just ‘a detail’-it’s what keeps the gradients from exploding into oblivion.

one guy on reddit spent 3 weeks debugging his model because he forgot to divide by sqrt(d_k). no error. no warning. just silent death.

and the dimension mismatches? yeah. 48% of attention issues on github are because someone used 512-dim embeddings but set heads to 16 instead of 8. stop copying code without reading the paper.

Rae Blackburn

17 December, 2025 - 21:46 PM

they’re lying about the heads. it’s not for language. it’s for surveillance. each head tracks a different behavior pattern. grammar for control. pronouns for identity. emotion for manipulation.

they’re building a mind that reads you better than your therapist. and you’re cheering?

the 98.7% stat? that’s because they banned alternatives. you think this is innovation? it’s a monopoly. wake up.

LeVar Trotter

18 December, 2025 - 20:45 PM

Let me tell you something-this is why I love NLP. It’s not just about accuracy or speed. It’s about how the model *thinks*. The fact that attention heads specialize like neurons in the human cortex? That’s not coincidence.

When I mentor new engineers, I tell them: don’t just train models. Learn how they see. Use tools like Transformer Explainer. Watch the heatmaps. See which heads light up on pronouns vs. negation.

That’s how you go from user to expert. And honestly? It’s the most fun part of this job.