Author: Calder Rivenhall - Page 2

RAG Failure Modes: Diagnosing Retrieval Gaps That Mislead Large Language Models

RAG systems often appear to work but quietly fail due to retrieval gaps that mislead large language models. Learn the 10 hidden failure modes, from embedding drift to citation hallucination, and how to detect them before they cause real damage.

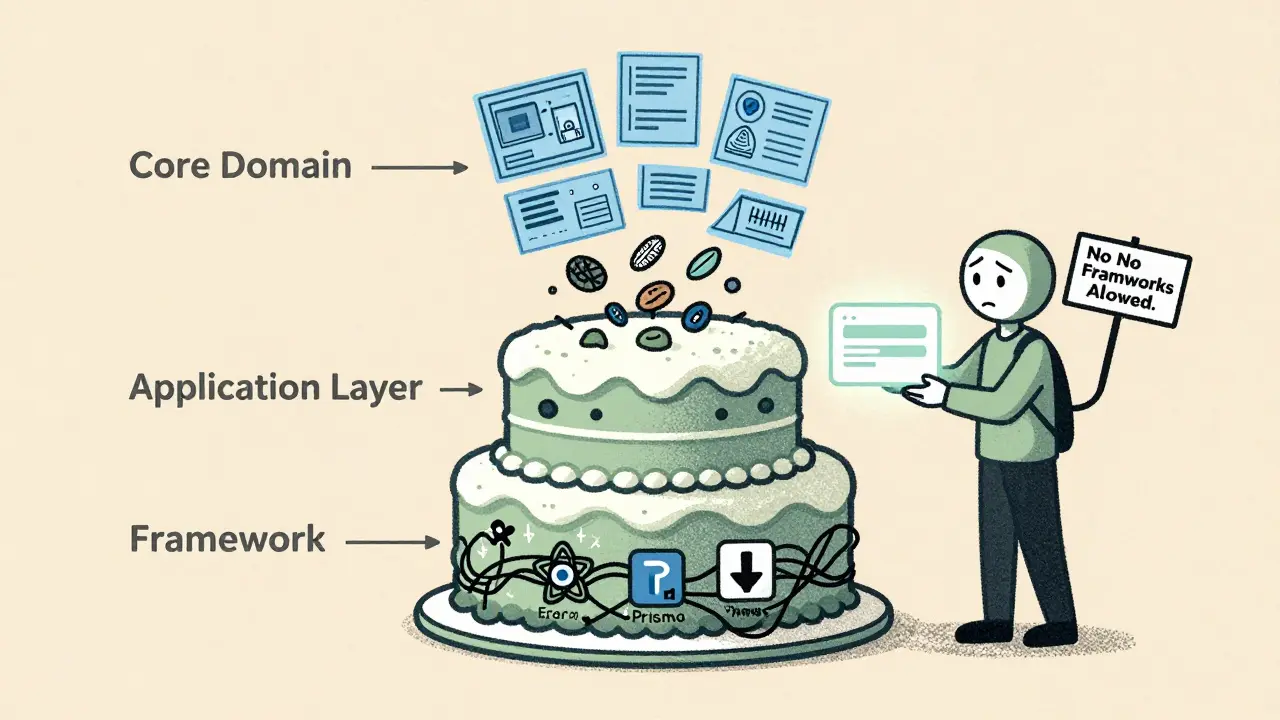

Clean Architecture in Vibe-Coded Projects: How to Keep Frameworks at the Edges

Clean Architecture keeps business logic separate from frameworks like React or Prisma. In vibe-coded projects, AI tools often mix them, leading to unmaintainable code. Learn how to enforce boundaries early and avoid framework lock-in.

Privacy-Aware RAG: How to Protect Sensitive Data When Using Large Language Models

Privacy-Aware RAG protects sensitive data in AI systems by removing PII before it reaches large language models. Learn how it works, why it's critical for compliance, and how to implement it without losing accuracy.

Productivity Uplift with Vibe Coding: What 74% of Developers Report

74% of developers report productivity gains with vibe coding, but real-world results vary wildly. Learn how AI coding tools actually impact speed, quality, and skill growth, and who benefits most.

Human-in-the-Loop Operations for Generative AI: Review, Approval, and Exceptions

Human-in-the-loop operations for generative AI ensure AI outputs are reviewed, approved, and corrected by people before deployment. Learn how top companies use structured workflows to balance speed, safety, and compliance.

Human-in-the-Loop Review for Generative AI: Catching Errors Before Users See Them

Human-in-the-loop review catches AI hallucinations before users see them, reducing errors by up to 73%. Learn how top companies use confidence scoring, domain experts, and smart workflows to prevent costly mistakes.

Human-in-the-Loop Review for Generative AI: Catching Errors Before Users See Them

Human-in-the-loop review catches dangerous AI hallucinations before users see them. Learn how it works, where it saves money and lives, and why automated filters alone aren't enough.

Scaling for Reasoning: How Thinking Tokens Are Rewriting LLM Performance Rules

Thinking tokens are transforming how LLMs reason by targeting inference-time bottlenecks. Unlike traditional scaling, they boost accuracy on math and logic tasks without retraining, but at a high compute cost.

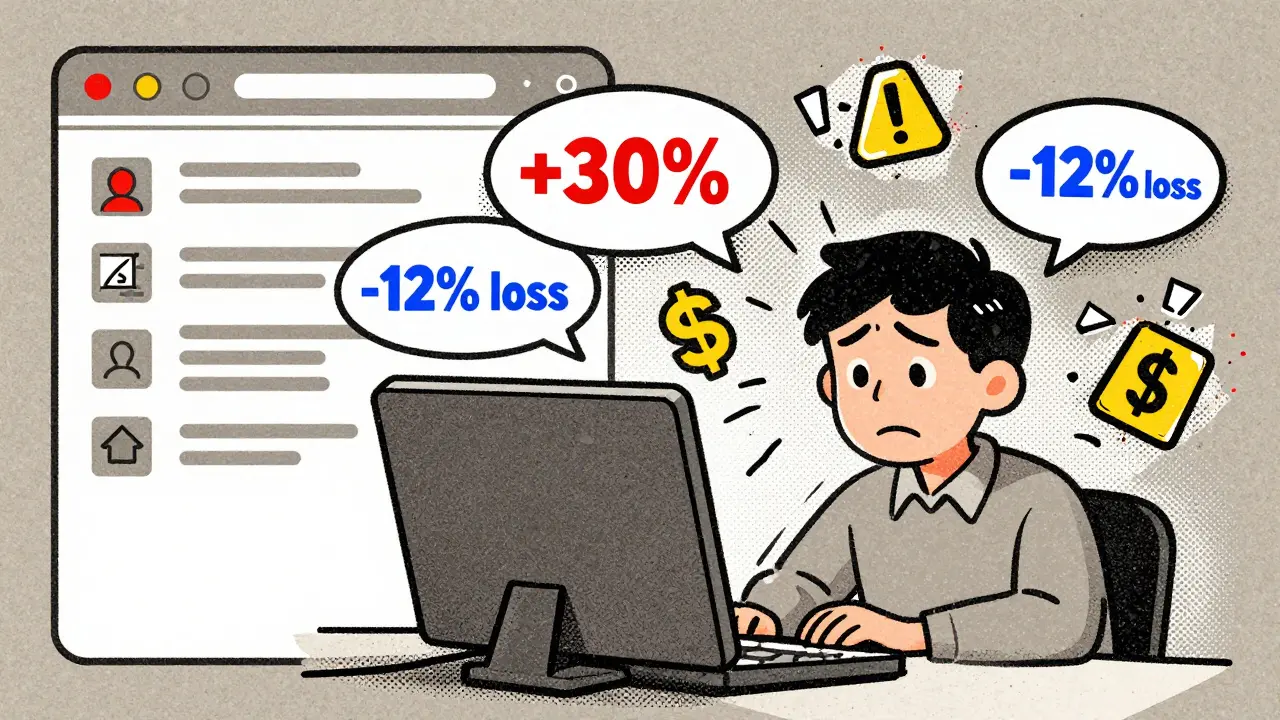

Measuring Hallucination Rate in Production LLM Systems: Key Metrics and Real-World Dashboards

Learn how to measure hallucination rates in production LLM systems using real-world metrics like semantic entropy and RAGAS. Discover what works, what doesn’t, and how top companies are reducing factuality risks in 2025.

Why Multimodality Is the Next Big Leap in Generative AI

Multimodal AI combines text, images, audio, and video to understand context like humans do, making generative AI smarter, faster, and more accurate than text-only systems. Here's how it's already changing healthcare, customer service, and marketing.

Code Ownership Models for Vibe-Coded Repos: Avoiding Orphaned Modules

Vibe coding with AI tools like GitHub Copilot is speeding up development but leaving behind orphaned modules no one understands or owns. Learn the three proven ownership models that prevent production disasters and how to enforce them today.

Threat Modeling for Vibe-Coded Applications: A Practical Workshop Guide

Vibe coding speeds up development but introduces serious security risks. This guide shows how to run a lightweight threat modeling workshop to catch AI-generated flaws before they reach production.
