Best PHP AI Scripts for LLM Integration and Automation

Large language models (LLMs) are AI systems that understand and generate human-like text, and they let PHP apps reason over requests and respond much like a human assistant. When you need to bring them into your stack, the right PHP AI scripts turn your backend into an intelligent engine, whether you’re building chatbots, processing documents, or automating customer support. You don’t need to be an AI researcher. You just need clean, tested code that talks to OpenAI, Anthropic, or open-source models without breaking.
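As a minimal sketch of that kind of code, the PHP below builds a request for OpenAI's Chat Completions endpoint (`POST https://api.openai.com/v1/chat/completions`) and sends it with cURL. The helper names `buildChatRequest` and `sendChatRequest` are hypothetical, not part of any SDK, and sending assumes the cURL extension is installed. Anthropic and self-hosted models use different endpoints and payload shapes, so treat this as one provider's example rather than a universal client.

```php
<?php
// Sketch: build and send a request to OpenAI's Chat Completions endpoint.
// buildChatRequest() and sendChatRequest() are hypothetical helpers, not
// part of any SDK. Sending requires the cURL extension and a real API key.

const CHAT_COMPLETIONS_URL = 'https://api.openai.com/v1/chat/completions';

/**
 * Assemble URL, headers, and JSON body without touching the network,
 * so the payload can be unit-tested offline.
 */
function buildChatRequest(string $apiKey, string $model, string $systemPrompt, string $userMessage): array
{
    $payload = [
        'model' => $model,
        'messages' => [
            ['role' => 'system', 'content' => $systemPrompt],
            ['role' => 'user', 'content' => $userMessage],
        ],
    ];

    return [
        'url' => CHAT_COMPLETIONS_URL,
        'headers' => [
            'Content-Type: application/json',
            'Authorization: Bearer ' . $apiKey,
        ],
        'body' => json_encode($payload, JSON_THROW_ON_ERROR),
    ];
}

/**
 * POST the request and return the assistant's reply text.
 * Throws on transport errors and non-200 responses instead of failing silently.
 */
function sendChatRequest(array $request, int $timeoutSeconds = 30): string
{
    $ch = curl_init($request['url']);
    curl_setopt_array($ch, [
        CURLOPT_POST => true,
        CURLOPT_HTTPHEADER => $request['headers'],
        CURLOPT_POSTFIELDS => $request['body'],
        CURLOPT_RETURNTRANSFER => true, // return the body instead of printing it
        CURLOPT_TIMEOUT => $timeoutSeconds,
    ]);

    $raw = curl_exec($ch);
    if ($raw === false) {
        throw new RuntimeException('Request failed: ' . curl_error($ch));
    }

    $status = curl_getinfo($ch, CURLINFO_HTTP_CODE);
    if ($status !== 200) {
        // Keep the raw body: the provider's error message is usually in it.
        throw new RuntimeException("API returned HTTP {$status}: {$raw}");
    }

    $data = json_decode($raw, true, 512, JSON_THROW_ON_ERROR);
    // The reply text sits at choices[0].message.content.
    return $data['choices'][0]['message']['content'] ?? '';
}
```

A typical call would be `sendChatRequest(buildChatRequest($apiKey, 'your-model-name', 'You are a support assistant.', 'Where is my order?'))`, where the model name is a placeholder for one your account can access. Keeping request building separate from sending lets you test the payload without network access.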

Many real projects use retrieval-augmented generation (RAG), a method that lets an LLM pull answers from your own data instead of guessing. RAG usually relies on a vector database, which stores text snippets as numeric embeddings and retrieves them by meaning rather than by keyword. Other projects use function calling, where the model returns a structured request to run one of your functions, such as fetching an order or sending an email, and your PHP code executes it. These techniques aren’t theory: they’re in production, reducing support tickets and improving answer accuracy. And they all start with PHP code that just works.
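The retrieval step at the heart of RAG can be sketched in plain PHP. This is a minimal, in-memory stand-in for a vector database: it scores stored text chunks against a query embedding with cosine similarity, keeps the top matches, and folds them into a prompt. The helper names (`cosineSimilarity`, `retrieveTopK`, `buildGroundedPrompt`) are hypothetical. Real embeddings come from an embedding model and have hundreds or thousands of dimensions, and a production system would normally let the vector database do the ranking.

```php
<?php
// Minimal in-memory stand-in for the retrieval step of RAG.
// Each chunk is ['text' => string, 'embedding' => list<float>]; the
// embeddings are assumed to come from an embedding model (not shown here).

/**
 * Cosine similarity: dot(a, b) / (|a| * |b|), in [-1, 1].
 * Higher means the two texts are closer in meaning.
 */
function cosineSimilarity(array $a, array $b): float
{
    if (count($a) !== count($b)) {
        throw new InvalidArgumentException('Vectors must have the same length.');
    }
    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;
    $n = count($a);
    for ($i = 0; $i < $n; $i++) {
        $dot += $a[$i] * $b[$i];
        $normA += $a[$i] * $a[$i];
        $normB += $b[$i] * $b[$i];
    }
    // A zero vector has no direction, so treat it as unrelated to everything.
    if ($normA == 0.0 || $normB == 0.0) {
        return 0.0;
    }
    return $dot / (sqrt($normA) * sqrt($normB));
}

/**
 * Score every chunk against the query and return the $k best matches,
 * highest similarity first. This is a linear scan, not an index.
 */
function retrieveTopK(array $queryVector, array $chunks, int $k): array
{
    $scored = [];
    foreach ($chunks as $chunk) {
        $scored[] = [
            'text' => $chunk['text'],
            'score' => cosineSimilarity($queryVector, $chunk['embedding']),
        ];
    }
    usort($scored, fn (array $l, array $r): int => $r['score'] <=> $l['score']);
    return array_slice($scored, 0, $k);
}

/**
 * Put the retrieved chunks into the prompt so the model answers from
 * your data rather than from memory.
 */
function buildGroundedPrompt(string $question, array $retrieved): string
{
    $context = implode("\n", array_map(fn (array $c): string => '- ' . $c['text'], $retrieved));
    return "Answer using only the context below. If the answer is not there, say so.\n\n"
        . "Context:\n{$context}\n\nQuestion: {$question}";
}
```

For example, with made-up three-dimensional embeddings [0.9, 0.1, 0.0] for "Refunds are issued within 14 days.", [0.0, 0.2, 0.9] for "Our office is in Berlin.", and [0.8, 0.3, 0.1] for "Refund requests need an order number.", the query vector [1.0, 0.2, 0.0] ranks the two refund chunks first. A linear scan like this is fine for small collections. Beyond that, a vector database with an approximate nearest-neighbor index is the usual choice.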

Below, you’ll find the most practical scripts: open-source, premium, and ready to deploy. No fluff. Just working examples that connect PHP to AI, handle costs, keep data safe, and scale without headaches.

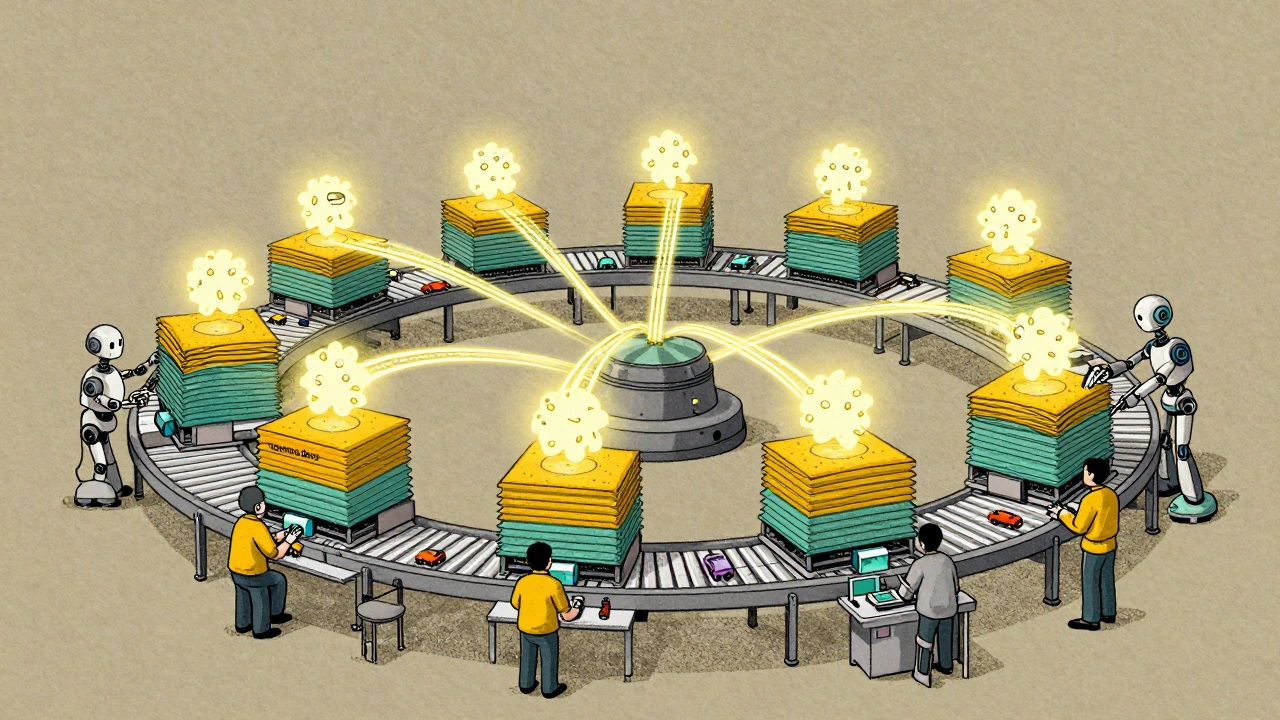

Model Parallelism and Pipeline Parallelism in Large Generative AI Training

Model and pipeline parallelism enable training of massive generative AI models by splitting them across multiple GPUs. Learn how these techniques overcome GPU memory limits and power models like GPT-3 and Claude 2.

Few-Shot vs Fine-Tuned Generative AI: How Product Teams Should Decide

Learn how product teams should choose between few-shot learning and fine-tuning for generative AI. Real cost, performance, and time comparisons for practical decision-making.

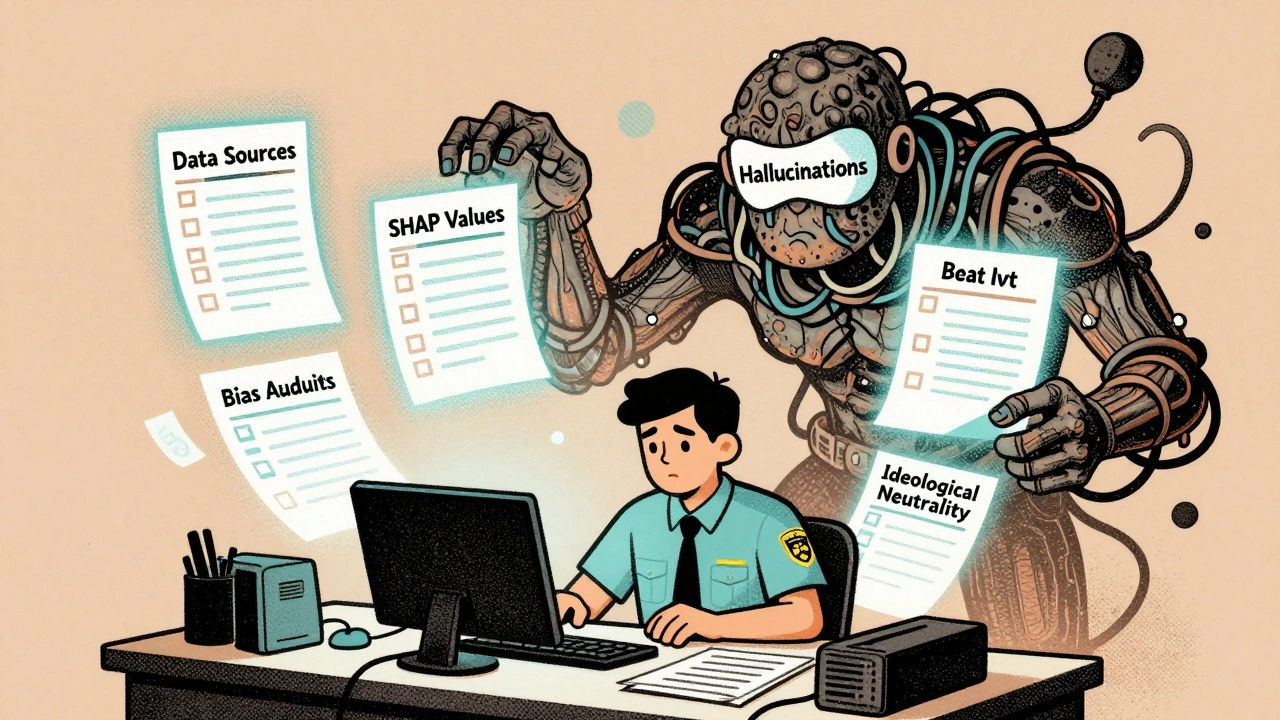

Governance Policies for LLM Use: Data, Safety, and Compliance in 2025

In 2025, U.S. governance policies for LLMs demand strict controls on data, safety, and compliance. Federal rules push innovation, but states like California enforce stricter safeguards. Know your obligations before you deploy.

Multi-Head Attention in Large Language Models: How Parallel Perspectives Power Modern AI

Multi-head attention lets large language models understand language from multiple angles at once, enabling breakthroughs in context, grammar, and meaning. Learn how it works, why it dominates AI, and what's next.

Confidential Computing for LLM Inference: How TEEs and Encryption-in-Use Protect AI Models and Data

Confidential computing uses hardware-based Trusted Execution Environments to protect LLM models and user data during inference. Learn how encryption-in-use with TEEs from NVIDIA, Azure, and Red Hat solves the AI privacy paradox for enterprises.

Export Controls and AI Models: How Global Teams Stay Compliant in 2025

In 2025, exporting AI models is tightly regulated. Global teams must understand thresholds, deemed exports, and compliance tools to avoid fines and keep operations running smoothly.

Error Analysis for Prompts in Generative AI: Diagnosing Failures and Fixes

Error analysis for prompts in generative AI helps diagnose why AI models give wrong answers and shows how to fix them. Learn the five-step process, key metrics, and tools that cut hallucinations by up to 60%.

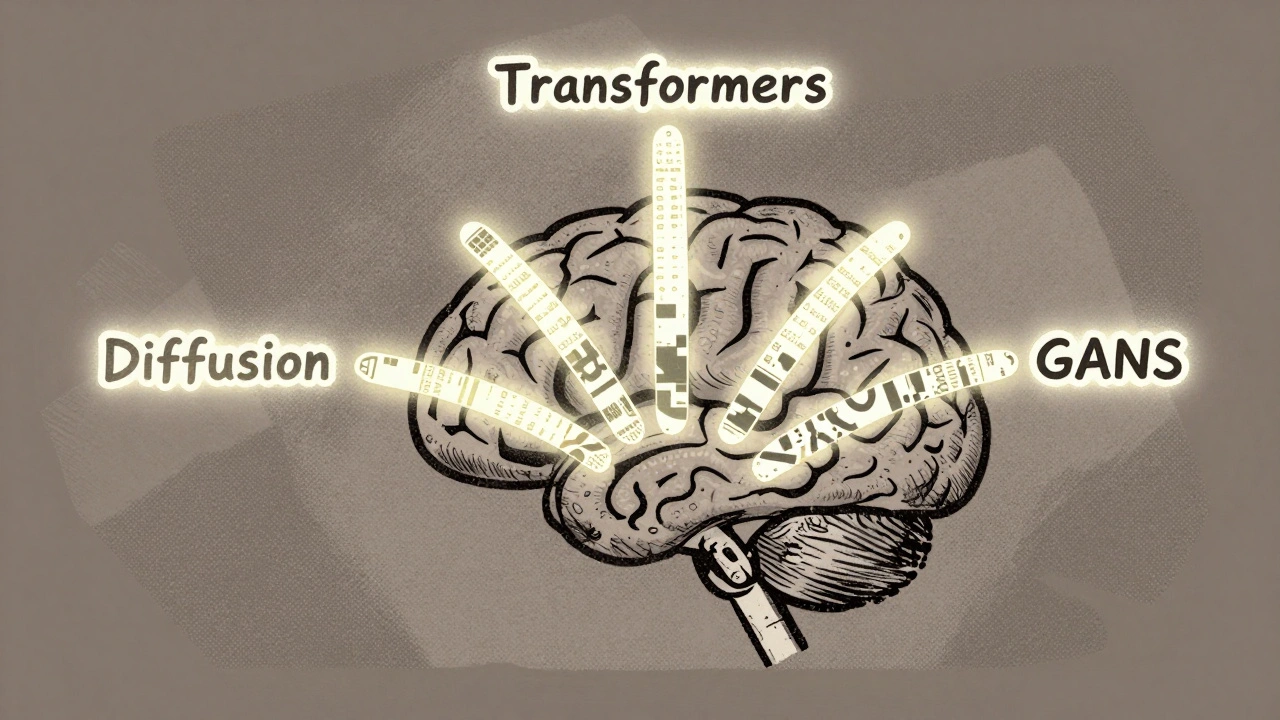

Foundational Technologies Behind Generative AI: Transformers, Diffusion Models, and GANs Explained

Transformers, Diffusion Models, and GANs are the three core technologies behind today's generative AI. Learn how each works, where they excel, and which one to use for text, images, or real-time video.

How Generative AI, Blockchain, and Cryptography Are Together Building Trust in Digital Systems

Generative AI, blockchain, and cryptography are merging to create systems that prove AI decisions are trustworthy, private, and immutable. This combination is already reducing fraud in healthcare and finance, and it’s just getting started.

Design Systems for AI-Generated UI: How to Keep Components Consistent

AI-generated UI can speed up design, but only if you lock in your design system. Learn how to use design tokens, training, and human oversight to keep components consistent across your product.

When to Compress vs When to Switch Models in Large Language Model Systems

Learn when to compress a large language model to save costs and when to switch to a smaller, purpose-built model instead. Real-world trade-offs, benchmarks, and expert advice.

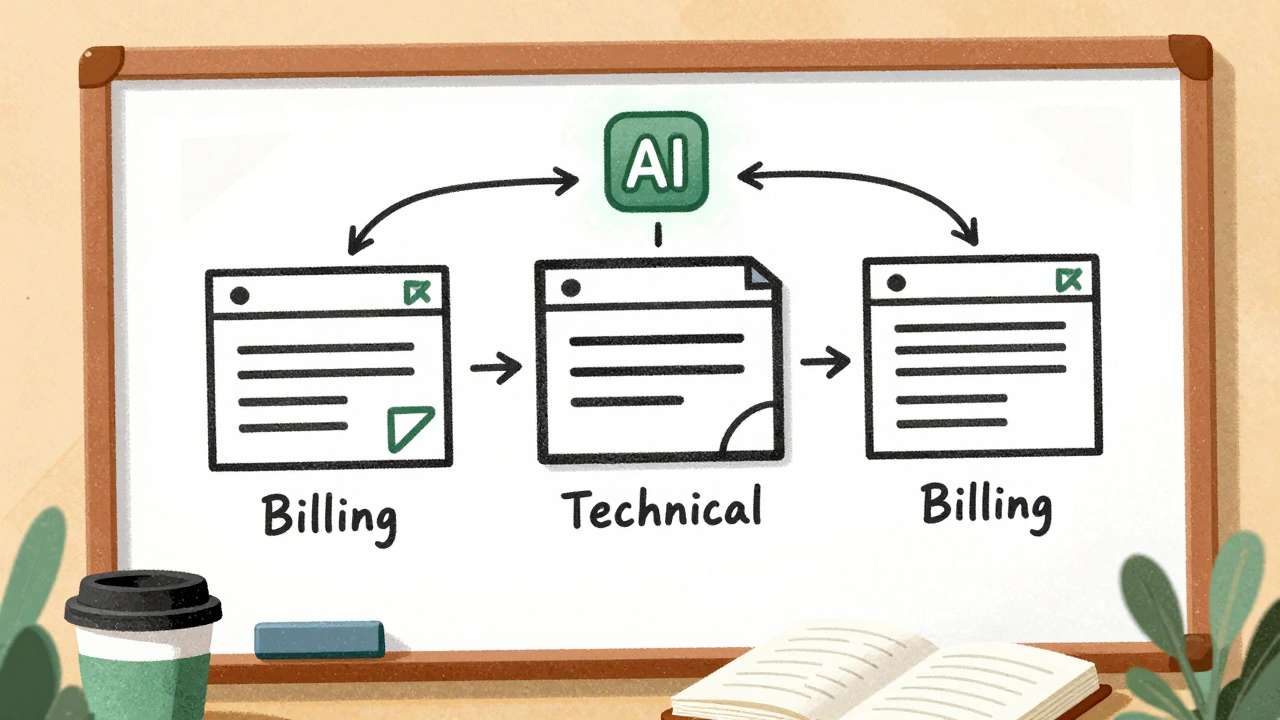

Interoperability Patterns to Abstract Large Language Model Providers

Learn how to abstract large language model providers using proven interoperability patterns and tools such as LiteLLM and LangChain to avoid vendor lock-in, reduce costs, and maintain reliability across model changes.
