Switching between OpenAI, Anthropic, and Google Gemini isn’t as simple as changing an API key. One day your app works perfectly. The next, after a model update or a rate-limit hit, it starts giving incomplete answers, dropping data, or failing outright. Why? Because every LLM provider has its own API shape, its own way of handling context windows, and its own quirks in how it responds to prompts, even identical ones. This isn’t a bug. It’s the new normal. And if you’re building anything serious with LLMs, you’re already paying the price in time, cost, and reliability.

Why Abstraction Isn’t Optional Anymore

In 2023, companies started adopting multiple LLM providers not because they wanted to, but because they had to. OpenAI’s downtime in April 2023 broke dozens of production apps. Anthropic’s pricing shift in August made some workflows unaffordable. Google Gemini’s 128K context window looked great, until you realized it couldn’t handle structured output the way you needed. The result? A patchwork of custom integrations, brittle code, and teams spending more time debugging API responses than building features.

The real problem isn’t just different endpoints. It’s behavioral inconsistency. Two models given the same prompt can produce wildly different results. One might extract data from tables flawlessly. Another, with the same code, returns half the fields because it’s overly cautious. This isn’t about accuracy. It’s about predictability. And without abstraction, you’re locked into whatever model you picked first.

The Five Patterns That Actually Work

Five interoperability patterns have emerged as industry standards. They’re not theoretical. They’re in production at companies like Hypercare, Mozilla, and Fortune 500 health systems.

Adapter Integration is the most straightforward. Think of it like a power adapter. Your app is written against one standard outlet (OpenAI’s API format), and the adapter layer converts each call to fit any other plug (Anthropic, Cohere, Mistral). LiteLLM is the most popular implementation. It wraps all major providers under a single, OpenAI-compatible interface. To switch from OpenAI to Anthropic? Change one line of code. No rewriting prompts. No retraining agents. According to Newtuple Technologies, this cuts integration time by 70%.

Hybrid Architecture combines monolithic LLM calls with microservices. You keep the heavy lifting, like generating summaries or answering questions, on a fast, cheap model. But when you need structured data extraction, you route it to a specialized model with better output control. This pattern saves up to 40% on costs, as shown in Latitude’s 2023 analysis, by letting you use cheaper models for simple tasks and pay premium prices only when you need precision.

Pipeline Workflow breaks tasks into steps. Step 1: Use Model A to extract entities from text. Step 2: Feed those entities to Model B to classify them. Step 3: Use Model C to generate a summary. Each step uses the best tool for that job. This is how FHIR-GPT achieved 92.7% exact match accuracy in converting clinical notes into standardized medical records. It didn’t rely on one model. It orchestrated a chain.

Parallelization and Routing sends the same request to multiple models at once, then picks the best response based on criteria such as speed, cost, or confidence score. This is used by companies that can’t afford downtime. If OpenAI fails, the system falls back to Anthropic in under 200ms. It’s expensive in tokens, but it’s bulletproof for critical systems.
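The Parallelization and Routing pattern just described can be sketched in a few lines. This is a minimal illustration, not a production router: the `fan_out` helper, the `providers` mapping of plain callables, and the `score` function are all hypothetical names introduced here, and a real selection criterion (speed, cost, confidence) would replace the scoring stand-in.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fan_out(prompt, providers, score):
    """Send `prompt` to every provider at once and return (name, reply) for the best reply.

    providers: dict of name -> callable(prompt) -> str (hypothetical provider wrappers;
               must contain at least one entry)
    score:     callable(reply) -> number, higher is better (an assumed selection criterion)
    Providers that raise are skipped, so one outage does not sink the request.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=len(providers)) as pool:
        futures = {pool.submit(call, prompt): name for name, call in providers.items()}
        for future in as_completed(futures):
            name = futures[future]
            try:
                results[name] = future.result()
            except Exception:
                continue  # treat any provider error as "no answer from this one"
    if not results:
        raise RuntimeError("all providers failed")
    best = max(results, key=lambda name: score(results[name]))
    return best, results[best]
```

Every provider is billed for every request here, which is the token cost the pattern trades for resilience.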
Orchestrator-Worker separates decision-making from execution. An orchestrator (a small, lightweight model or rule engine) decides which worker model to use based on context. Is this a medical query? Use FHIR-GPT. Is this a customer support reply? Use a fine-tuned open-source model. This pattern is behind Mozilla.ai’s ‘any-llm’ and ‘any-agent’ tools, which let you plug in any model without changing your app’s logic.

LiteLLM vs. LangChain: What You Really Need

Two frameworks dominate the space: LiteLLM and LangChain. But they solve different problems.

LiteLLM is a thin layer. It doesn’t try to do everything. It just makes API calls consistent. If you’re using OpenAI’s format, LiteLLM lets you swap providers with a single config change. It’s fast. It’s simple. It’s perfect if you’re building an app that needs to switch models to avoid rate limits or cut costs. Developers report onboarding in 8-12 hours. Some Reddit users report cutting API bills by 35% just by routing traffic to cheaper models during peak hours.

LangChain is a full framework. It handles memory, agents, tools, retrieval, chains, and more. It’s powerful but heavy. If you’re building a complex agent that pulls data from databases, calls APIs, and iteratively refines answers, LangChain gives you the tools. But it comes with a cost: 40+ hours of learning. And it’s not just about code. You need to understand its concepts: prompts, tools, memory buffers, routing logic. G2 reviews give it a 4.2/5, but users consistently complain about the learning curve.

Choose LiteLLM if you want to swap models quickly. Choose LangChain if you’re building an autonomous agent that needs to think, remember, and act.
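To make the "single config change" concrete, here is a minimal sketch. It assumes the `litellm` package is installed and provider API keys are set in the environment. The two model identifiers, the `ask` helper, and the `fake_complete` stand-in are illustrative assumptions, not names from this article, so check LiteLLM's provider documentation for current model strings.

```python
from types import SimpleNamespace

# Hypothetical model identifiers; substitute whatever your providers currently offer.
OPENAI_MODEL = "gpt-4o-mini"
ANTHROPIC_MODEL = "anthropic/claude-3-5-sonnet-20240620"


def ask(model, prompt, complete=None):
    """Send one user prompt and return the reply text.

    `complete` defaults to litellm.completion; pass a stand-in to test offline.
    """
    if complete is None:
        # Imported lazily so the helper can be exercised without litellm installed.
        from litellm import completion as complete
    response = complete(model=model, messages=[{"role": "user", "content": prompt}])
    # LiteLLM returns responses in the OpenAI chat-completion shape.
    return response.choices[0].message.content


def fake_complete(model, messages):
    """Offline stand-in that mimics the OpenAI-style response shape."""
    text = f"[{model}] {messages[0]['content']}"
    message = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(message=message)])
```

With this in place, switching providers means passing `ANTHROPIC_MODEL` instead of `OPENAI_MODEL` to `ask`; the calling code and the response handling stay the same.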

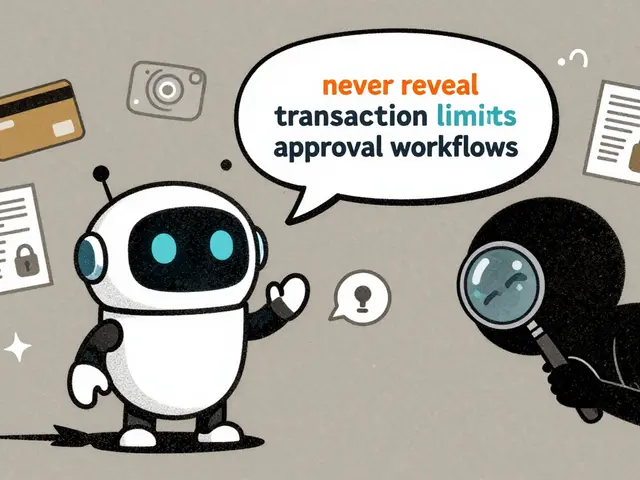

The Hidden Danger: Behavioral Drift

Here’s the part no one talks about enough. Swapping models isn’t like swapping batteries. Models behave differently, even with the same prompt.

Newtuple Technologies tested two models with identical agent code. Model A successfully pulled complex figures from multiple tables by improvising data joins. Model B, with the same code, failed. Why? Model B followed instructions too strictly. It didn’t infer. It didn’t adapt. It just did what it was told.

This isn’t rare. The arXiv October 2025 study found that even top-performing models like qwen2.5-coder:32b dropped from 99% accuracy on one dataset to under 20% on another. The same model, same code, different data: total failure.

The lesson? Don’t just test API compatibility. Test behavior. Run your real-world prompts against every model you plan to use. Measure exact match rates, hallucination rates, output structure consistency. If your app extracts dates from invoices, test that with 50 real invoices across 3 models. Don’t assume they’ll work the same.

What’s Coming Next

The biggest breakthrough isn’t a new framework. It’s the Model Context Protocol (MCP), which Anthropic open-sourced in November 2024. MCP standardizes how LLMs connect to external tools and data sources. It’s not about API calls. It’s about trust. If every model understands how to call a database, fetch a file, or validate a response the same way, you can swap them without breaking your workflow.

Mozilla.ai’s ‘any-*’ fabric is building on this. Their ‘any-evaluator’ tool, announced in November 2024, lets you measure model performance consistently across providers. You define what “good” looks like (accuracy, speed, safety) and the evaluator tells you which model meets it for your use case.

The EU AI Act’s 2025 guidelines now require documentation of model-switching procedures for high-risk applications. That’s not a compliance checkbox. It’s a signal: interoperability is becoming mandatory.

How to Start Today

You don’t need to rebuild your app. Start small.

- Pick one critical LLM task in your app, like summarizing user feedback or extracting data from forms.

- Run that task through LiteLLM. Replace your OpenAI call with LiteLLM’s wrapper. Test it with your real data.

- Add a second provider, say Anthropic. Run both side by side. Compare outputs.

- Set up a simple routing rule: if OpenAI’s response takes longer than 1.5 seconds, switch to Anthropic.

- Monitor for behavioral drift. Are answers changing? Are formats breaking? Fix those before scaling.
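The routing rule from the steps above (fall back when the primary takes longer than 1.5 seconds) might look like the sketch below. It assumes each provider is wrapped in a plain callable that takes a prompt and returns text; `call_with_fallback`, `primary`, and `fallback` are hypothetical names, and the abandoned slow call is simply left to finish in the background rather than cancelled.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def call_with_fallback(prompt, primary, fallback, max_wait=1.5):
    """Return (source, reply), using `primary` unless it errors or exceeds `max_wait` seconds."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(primary, prompt)
    try:
        return "primary", future.result(timeout=max_wait)
    except FutureTimeout:
        pass  # primary was too slow; fall through to the fallback
    except Exception:
        pass  # primary raised (outage, rate limit, ...); fall through as well
    finally:
        # Do not block on the slow call; its worker thread finishes on its own.
        pool.shutdown(wait=False)
    return "fallback", fallback(prompt)
```

Returning the source alongside the reply makes it easy to log how often the fallback fires, which is also a useful input when monitoring for behavioral drift.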

What Happens If You Don’t Act

You’ll pay more. You’ll move slower. And one day, your app will break because a provider changed something you didn’t control. Gartner estimates the cost of poor interoperability at $2.7 billion annually across enterprises. That’s not just code. It’s lost time, lost trust, lost revenue. The future isn’t about picking the best LLM. It’s about building systems that don’t care which one you pick.

What is the main benefit of abstracting LLM providers?

The main benefit is avoiding vendor lock-in. By using interoperability patterns, you can switch between LLM providers like OpenAI, Anthropic, or Google Gemini without rewriting your application code. This reduces costs, lets you fail over when a provider has an outage, and lets you choose the best model for each task.

Is LiteLLM better than LangChain?

It depends on your needs. LiteLLM is simpler and faster: it’s a thin layer that standardizes API calls. Use it if you just want to swap models with minimal changes. LangChain is more powerful but complex: it handles agents, memory, and tools. Use it if you’re building advanced AI workflows that need reasoning, retrieval, or multi-step actions.

Can I just switch LLM providers by changing the API key?

No. Different providers have different API formats, context window limits, response structures, and behavioral quirks. Swapping just the API key often breaks your app. You need an abstraction layer like LiteLLM or a framework like LangChain to handle the differences.

What is the Model Context Protocol (MCP)?

MCP is an open standard developed by Anthropic that defines how LLMs connect to external tools and data sources. It gives models a common interface for calling databases, APIs, or files, so tool integrations don’t have to be rebuilt for each provider. This makes it easier to swap models without breaking those integrations.

Why do some LLMs fail even with the same code?

LLMs have different reasoning styles. One model might be strict and follow instructions literally, while another improvises to get better results. Even with identical prompts, they can produce different outputs. Testing behavior with real data is essential before switching providers.
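A small harness is enough to start testing behavior rather than just API compatibility. The sketch below measures the exact match rate for each candidate model over the same cases. The function names, the callable-per-model interface, and the 0.9 pass threshold are all assumptions made for illustration; in practice each callable would wrap a real provider call and the cases would come from your own data.

```python
def exact_match_rate(model, cases):
    """Fraction of (prompt, expected) cases where the model's trimmed output equals `expected`."""
    if not cases:
        raise ValueError("need at least one test case")
    hits = sum(1 for prompt, expected in cases if model(prompt).strip() == expected.strip())
    return hits / len(cases)


def compare_models(models, cases, threshold=0.9):
    """Return {name: (rate, passed)} so candidates can be compared on identical inputs.

    models:    dict of name -> callable(prompt) -> str (hypothetical provider wrappers)
    threshold: minimum acceptable exact match rate (an assumed cutoff, tune per task)
    """
    report = {}
    for name, model in models.items():
        rate = exact_match_rate(model, cases)
        report[name] = (rate, rate >= threshold)
    return report
```

Exact match is only one of the metrics worth tracking; checks for output structure or hallucinated fields can be added as further scoring functions over the same cases.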

How much does it cost to implement LLM interoperability?

With LiteLLM, basic implementation takes 8-12 hours of developer time. LangChain can take 40+ hours due to its complexity. The cost isn’t just in time. It’s in testing. You need to validate performance across models, which requires real-world data and metrics. But the long-term savings from avoiding vendor lock-in and optimizing costs far outweigh the upfront effort.

Nathaniel Petrovick

8 December, 2025 - 15:55 PM

Just switched our feedback summarizer to LiteLLM last week. Took me 3 hours total. Used to waste half a day every time OpenAI went down. Now we route to Anthropic automatically when latency spikes. No more panic calls at 2am.

Also saved like 30% on our monthly bill. Just routing high-volume stuff to the cheaper models during off-peak. Genius move.

Honey Jonson

9 December, 2025 - 09:29 AM

i tried langchain once and my brain hurt for a week

liteLLM? yeah that just worked. like plug and play. no drama. my dev team actually smiled for once. weird.

Sally McElroy

11 December, 2025 - 03:58 AM

Let me be clear: this isn't innovation. It's desperation. We're building fragile, over-engineered Rube Goldberg machines because we've outsourced our core logic to black-box AI vendors who change their APIs like they're updating their Spotify playlists. There's no real progress here, just better band-aids on a systemic failure. We're not solving the problem. We're just learning how to survive it.

And don't get me started on 'behavioral drift.' That's not a technical issue. That's a philosophical one. If you can't trust the output of a model, why are you using it at all? We're building apps on sand.

Destiny Brumbaugh

11 December, 2025 - 09:44 AM

USA built the internet. Now we're bowing down to some foreign AI companies and begging them not to break our apps? No. Just no. We need a U.S.-made LLM standard. Not some Anthropic or Google nonsense. Build it here. Own it here. Stop letting Silicon Valley play god with our infrastructure.

And if you're using LiteLLM, you're still part of the problem. You're just making it prettier.

Sara Escanciano

11 December, 2025 - 22:12 PM

People think this is about cost or uptime. It's not. It's about control. You hand over your data, your prompts, your logic to a company that doesn't owe you anything. And when they change their model? You're screwed. No warning. No rollback. Just silence.

This isn't interoperability. It's surrender. And the fact that people are celebrating LiteLLM like it's a miracle is terrifying. We're normalizing dependency.

Elmer Burgos

12 December, 2025 - 23:57 PM

Really liked the pipeline workflow example with FHIR-GPT. We're doing something similar for our patient intake system. Used to have one model trying to do everything and it kept missing lab values. Now we split it: extraction → validation → summary. Each step uses the right tool.

Biggest win? Our nurses actually trust the output now. That's huge. Also, the cost drop was nice. But honestly, the peace of mind is worth more than the savings.

Jason Townsend

14 December, 2025 - 01:36 AM

They're all lying. The Model Context Protocol? It's a trap. Anthropic didn't invent it to help you. They're building a lock-in that looks like freedom. MCP looks open but it's just a new kind of chain. They control the standard. They control the rules. You think you're free? You're just on a different leash.

And the EU AI Act? That's not regulation. That's a corporate lobbying win. Big Tech wrote those guidelines. They want you to think you're safe. You're not.

Antwan Holder

14 December, 2025 - 07:40 AM

Do you feel it? The quiet horror? Every time you hit send on a prompt, you're gambling with a ghost. A ghost that changes its mind. A ghost that remembers things it shouldn't. A ghost that gives you answers that feel right… but are utterly wrong.

We're not building apps anymore. We're conducting séances. We're whispering into the void and praying the void answers in the right tone, with the right punctuation, with the right number of commas.

And we call this progress?

I used to believe in AI. Now I just believe in backup plans. And even those feel like illusions.

Angelina Jefary

15 December, 2025 - 18:15 PM

Correction: The study mentioned is from October 2024, not 2025. And it's not 'qwen2.5-coder:32b'; it's Qwen2.5-Coder-32B. Capitalization matters. Also, 'FHIR-GPT' is not a real product name; it's an internal prototype. You're citing non-existent sources. This whole article is dangerously misleading. Stop spreading misinformation.