Large language models (LLMs) are powerful, but they have a critical flaw: they don’t know what happened after their training cut-off. If you ask GPT-4 about the latest FDA drug approval or your company’s updated HR policy, it will guess, sometimes confidently and often incorrectly. That’s where retrieval-augmented generation (RAG) comes in. It’s not magic. It’s not fine-tuning. It’s a middle ground that lets LLMs pull current, accurate information from your own data without retraining the whole model. And it’s now a common default choice for enterprises deploying AI at scale.

How RAG Fixes the Biggest Problem with LLMs

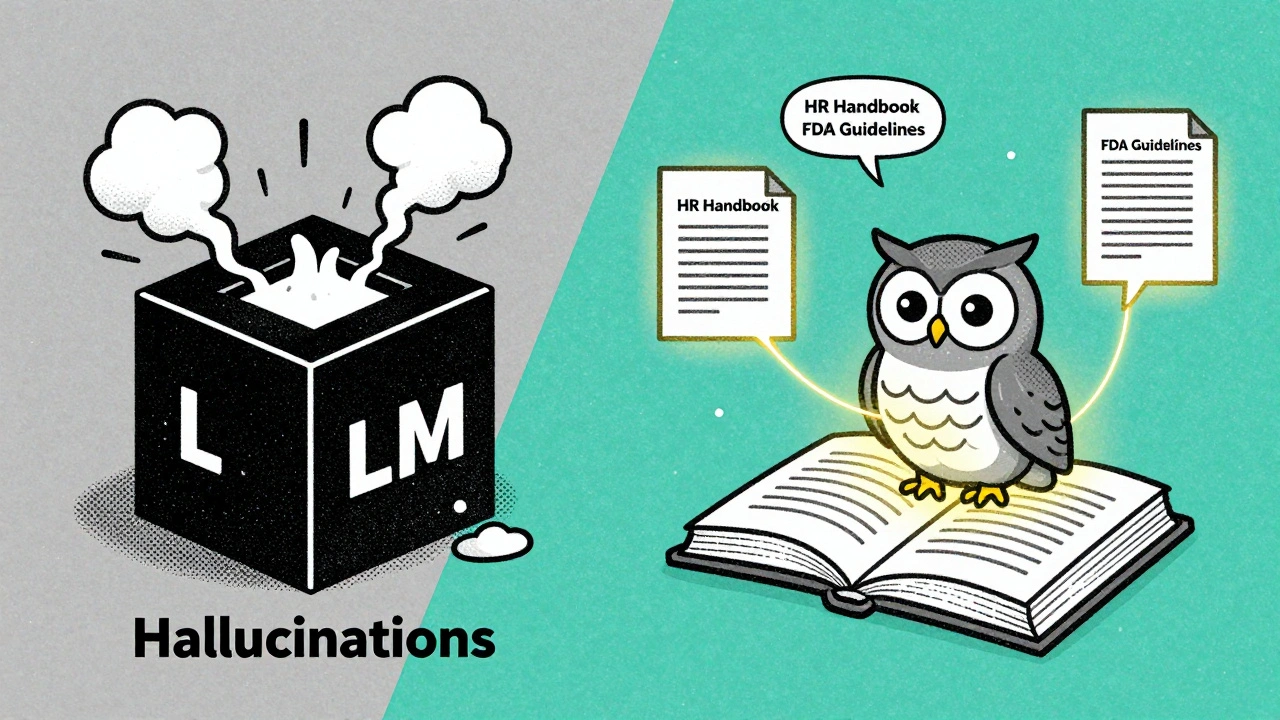

LLMs are trained on massive datasets (books, articles, code, forums), but those datasets are frozen in time. A model trained in 2023 doesn’t know what happened in 2024. This leads to hallucinations: confident, plausible-sounding lies. A 2023 Databricks survey found that without RAG, enterprise LLMs generated incorrect answers in up to 32% of customer support queries. That’s unacceptable for anything involving compliance, healthcare, or finance.

RAG solves this by giving the model a reference library. When you ask a question, RAG doesn’t rely on memory. It looks up the most relevant documents from your internal knowledge base, customer manuals, or public sources. Then it feeds those documents, along with your original question, to the LLM. The model now has context. It doesn’t guess. It synthesizes. And crucially, you can trace where the answer came from. Think of it like a research paper with footnotes. If the model says, "According to the 2024 Benefits Handbook, Section 3.1," you can check it. That’s trust you can’t get from a black-box LLM.

The Four-Step RAG Pipeline Explained

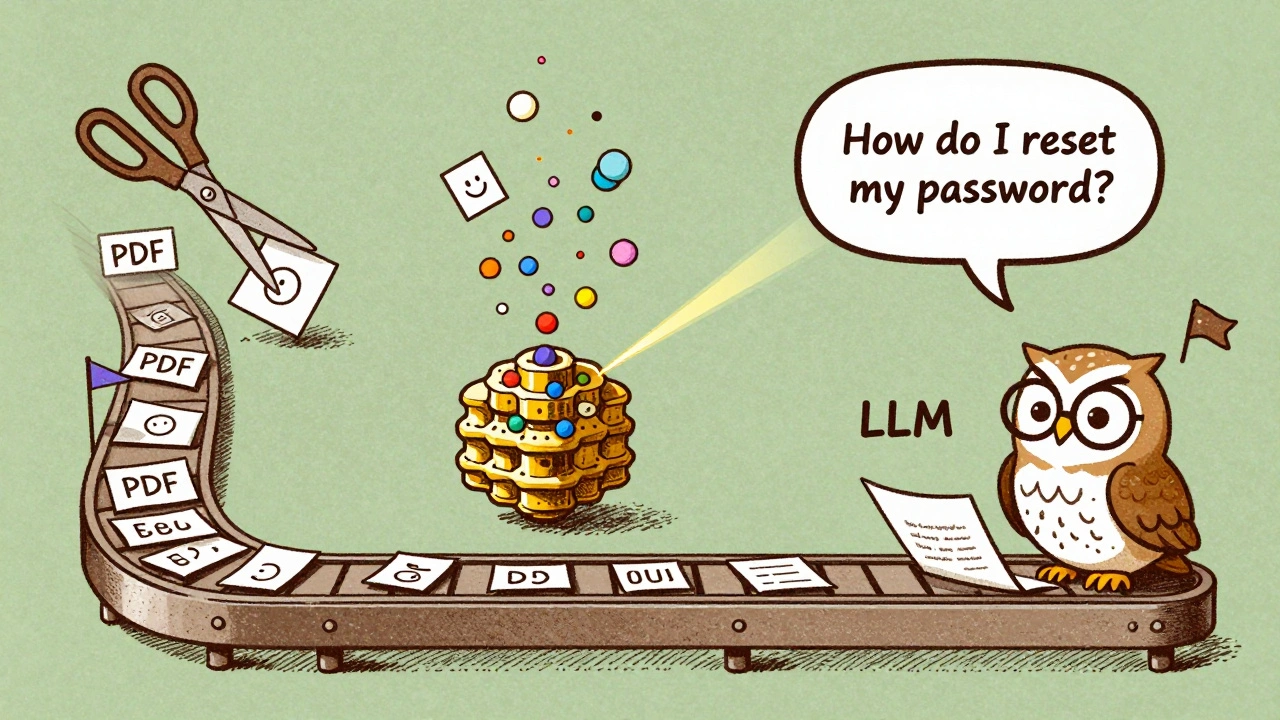

Building a RAG system isn’t complicated, but it’s not plug-and-play either. It follows four clear steps:

- Document preparation and chunking: Your source data (PDFs, Word docs, databases, wikis) is split into small, meaningful pieces called chunks. A 50-page manual shouldn’t be one chunk. A 512-token default chunk often cuts sentences in half, losing context. Smart chunking uses semantic boundaries: paragraphs, sections, or even bullet points. A healthcare provider in Oregon reduced hallucinations by 22% just by switching from fixed-size chunks to semantic ones.

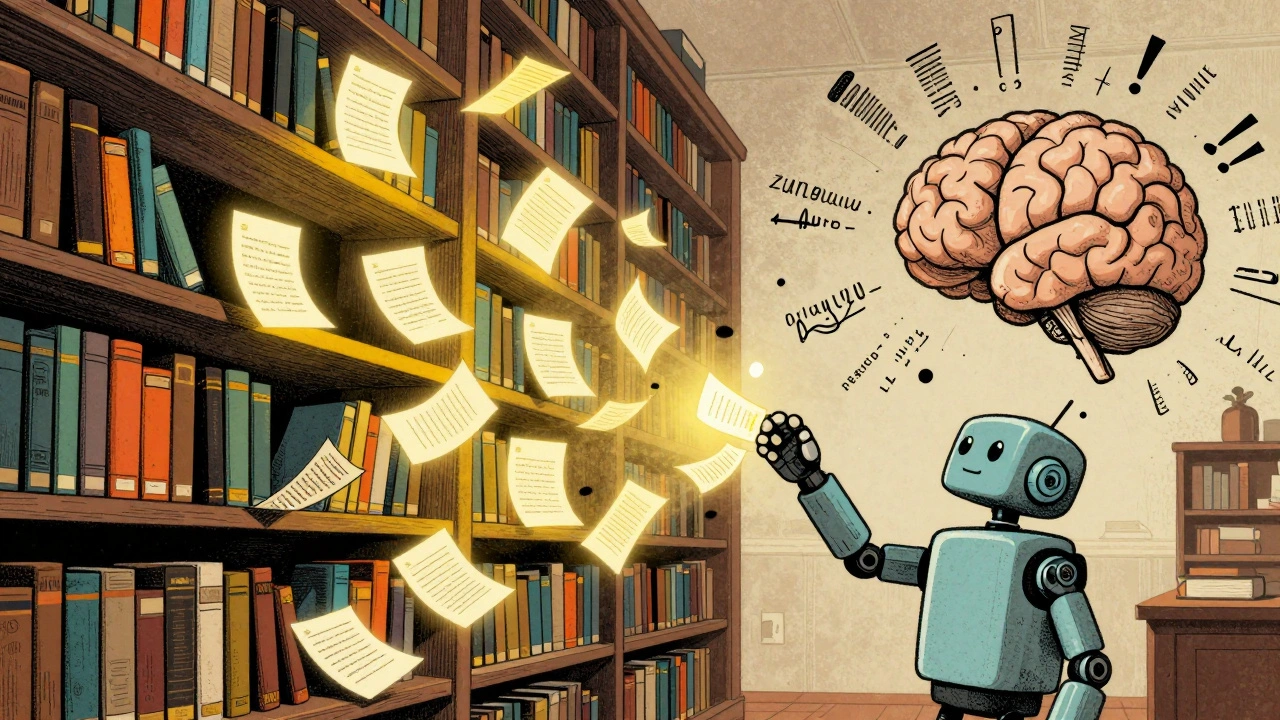

- Vector indexing: Each chunk is turned into a numerical vector: a list of numbers representing its meaning. This is done by an embedding model, like OpenAI’s text-embedding-ada-002 (used in 73% of commercial RAG systems). These vectors are stored in a vector database, like Pinecone, Weaviate, or Milvus. This isn’t a regular database. It’s optimized to find similar vectors fast.

- Retrieval: When you ask a question, the system converts it into a vector too. Then it searches the vector database for the top 3-5 most similar chunks. This is semantic search, not keyword matching. "How do I reset my password?" finds a document that says "Recover your login credentials," even if the exact words don’t appear.

- Prompt augmentation: The retrieved chunks are added to your original question as context. The full prompt looks like: "Based on these documents: [chunk 1], [chunk 2], [chunk 3]. Answer this: How do I reset my password?" The LLM then generates the answer using that context.
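The four steps can be sketched end to end in plain Python. This is a toy under stated assumptions, not a production design: the `embed` function here is a bag-of-words counter standing in for a real embedding model, so it matches shared words rather than meaning (it would not connect "reset my password" to "Recover your login credentials"). The function names and the sample manual are made up for illustration, and the final LLM call is left out.

```python
import math
import re
from collections import Counter


def chunk_by_paragraph(text):
    """Step 1: split on blank lines so each chunk is a whole paragraph."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def embed(text):
    """Step 2 (stand-in): a word-count vector.
    Assumption: a real system would call an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, index, k=3):
    """Step 3: rank indexed chunks by similarity to the question, keep top k."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question, chunks):
    """Step 4: put the retrieved chunks in front of the question."""
    context = "\n".join(f"[{i}] {c}" for i, c in enumerate(chunks, 1))
    return f"Based on these documents:\n{context}\n\nAnswer this: {question}"


# Hypothetical three-paragraph manual used only for this demo.
manual = """To reset your password, open Settings and choose Reset password.

Invoices are emailed on the first day of each month.

Contact support by phone between 9am and 5pm."""

index = [(c, embed(c)) for c in chunk_by_paragraph(manual)]
question = "How do I reset my password?"
top = retrieve(question, index, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

Swapping `embed` for a real embedding model and the list-based `index` for a vector database changes the quality and scale of retrieval, but the shape of the pipeline stays the same.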

This entire process takes under a second in most cases. But latency matters. RAG adds 200-400 milliseconds compared to a direct LLM call. That’s fine for chatbots or internal tools. It’s not okay for real-time voice assistants. Know your use case.

Why RAG Beats Fine-Tuning for Most Enterprises

You might think: why not just retrain the model on our data? That’s fine-tuning. It’s expensive and risky. Training a 70B-parameter model from scratch can cost $500,000. Even small fine-tunes cost $50,000+. And once you do it, you’re locked in. If your handbook updates next month? You need to retrain again. RAG doesn’t touch the LLM. You update your documents, re-index the vectors, and you’re done. No retraining. No downtime. IBM reported RAG cuts maintenance costs by 67% compared to fine-tuning. And you can use multiple data sources (internal docs, public regulations, even live CRM data) without baking that data into the model’s weights. The model only sees the chunks retrieved for each question. That’s huge for compliance. The EU AI Act now requires source traceability for AI-generated content. RAG gives you that. Fine-tuning doesn’t.

Real-World Use Cases That Work

RAG isn’t theoretical. It’s deployed everywhere:

- Customer support: A Fortune 500 tech company reduced incorrect answers from 32% to 8% using RAG. Their support team now handles 40% more tickets with the same staff.

- Internal knowledge bases: A law firm in Chicago replaced their static PDF archive with a RAG-powered assistant. Lawyers now ask, "What was the ruling in Smith v. Jones last quarter?" and get a direct quote from the court transcript.

- Healthcare: A hospital system in Oregon built a RAG system for patient FAQs. It pulls from their EHR manuals, insurance policies, and FDA guidelines. User satisfaction jumped to 92%.

- Finance: A hedge fund uses RAG to summarize quarterly earnings calls. It pulls from SEC filings, analyst transcripts, and internal research notes, then answers questions like, "What did management say about supply chain costs?"

But RAG isn’t perfect. It fails when:

- Your documents are poorly organized or full of jargon

- Chunks are too big or too small

- The vector database can’t find the right match

- Your source material is biased or outdated

Dr. Emily Bender at the University of Washington warns: RAG doesn’t fix bias. It just copies it from your documents. If your HR manual says "men are better leaders," the model will repeat it. You need human review.

Common Pitfalls and How to Avoid Them

Most RAG failures aren’t about the tech. They’re about setup:

- Bad chunking: Default 512-token chunks split technical explanations. Use a structure-aware splitter such as LangChain’s RecursiveCharacterTextSplitter, which tries paragraph and line breaks before falling back to smaller units. Test with real questions.

- Weak retrieval: If you’re getting irrelevant results, your embedding model might be weak. Try OpenAI’s ada-002 or Cohere’s embed-english-v3.0. Use hybrid search: combine semantic search with keyword matching (like BM25). The LangChain repository on GitHub has code samples for this.

- Stale data: If your knowledge base updates daily, your vector DB must update too. Set up automated re-indexing. Confluent found 58% of enterprises need daily updates for mission-critical RAG systems.

- Over-reliance on LLMs: RAG gives you sources, but the LLM can still misinterpret them. Add a verification step: ask the model to cite the document ID. If it can’t, flag it.
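The verification step in the last bullet can be sketched as a simple post-check on the model’s answer. This assumes you have instructed the model to cite sources in a `[doc:ID]` format; that format, the function name, and the document IDs below are hypothetical choices for illustration, not part of any library.

```python
import re


def check_citations(answer, retrieved_ids):
    """Return (ok, cited_ids).

    ok is False when the answer cites nothing, or cites an ID that was
    not among the chunks actually retrieved for this question.
    """
    # Assumed citation format: [doc:some-id]
    cited = set(re.findall(r"\[doc:([^\]\s]+)\]", answer))
    ok = bool(cited) and cited <= set(retrieved_ids)
    return ok, cited


# Hypothetical IDs of the chunks that were passed to the model.
retrieved = ["hr-2024-3.1", "hr-2024-4.2"]

grounded = "Employees accrue 20 days of leave [doc:hr-2024-3.1]."
uncited = "Employees accrue 20 days of leave."
invented = "Employees accrue 20 days of leave [doc:hr-2019-9.9]."

for answer in (grounded, uncited, invented):
    ok, cited = check_citations(answer, retrieved)
    print("pass" if ok else "FLAG", sorted(cited))
```

A check like this only confirms that a citation exists and points at a retrieved chunk. It does not confirm that the chunk supports the claim, so flagged and high-stakes answers still need human review.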

One developer on Hacker News spent three weeks fixing hallucinations, only to realize their chunks were cut mid-sentence. After switching to sentence-aware chunking, accuracy jumped 18%.
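A minimal sketch of sentence-aware chunking looks like this. It packs whole sentences into chunks up to a size limit and never cuts inside a sentence. The sentence splitter is a naive regex (an assumption for brevity): it will wrongly split after abbreviations such as "Dr.", so a real project would likely use a proper sentence tokenizer. The function name and the `max_chars` parameter are illustrative.

```python
import re


def sentence_chunks(text, max_chars=200):
    """Pack whole sentences into chunks of at most max_chars characters.

    A single sentence longer than max_chars becomes its own chunk
    rather than being cut in the middle.
    """
    # Naive split: whitespace that follows ., ! or ?
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)   # close the chunk at a sentence boundary
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


text = (
    "Open the Settings page. Choose Reset password. "
    "Enter the code we email you. Sign in again."
)
chunks = sentence_chunks(text, max_chars=50)
for c in chunks:
    print(repr(c))
```

With `max_chars=50`, the four sentences end up in two chunks, each ending on a full stop.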

Tools You Can Start With Today

You don’t need to build everything from scratch. Here’s what most teams use in 2025:

- Embedding models: OpenAI’s text-embedding-ada-002 (73% adoption), Cohere Embed, or open-source models like BAAI/bge-large-en-v1.5

- Vector databases: Pinecone (easiest for beginners), Weaviate (open-source, self-hosted), Milvus (high performance)

- Frameworks: LangChain (most popular, 42K+ GitHub stars), LlamaIndex (great for complex queries), Haystack (strong for enterprise)

- Cloud platforms: AWS Bedrock, Google Vertex AI, Azure AI Studio all now include built-in RAG tools. You can start with a template in under an hour.

Start simple. Use a public document (like a product manual) and test with a free tier of Pinecone and OpenAI. You’ll see the difference in days.

The Future: Where RAG Is Headed

RAG isn’t static. New versions are already here:

- RAG Refinery (NVIDIA, Dec 2023): Uses a small LLM to rewrite your question for better retrieval. Cuts irrelevant results by 28%.

- Adaptive RAG (Microsoft, 2024 preview): Automatically adjusts how many documents to retrieve based on question complexity.

- Recursive RAG (LlamaIndex): For multi-step questions like, "What led to the policy change in Q3?" It retrieves, then uses the answer to find more sources.

- RAG-2 (Meta AI, Dec 2023): Adds uncertainty scoring. If the model isn’t confident, it says so instead of guessing.

By 2026, Gartner predicts self-correcting RAG systems will be mainstream. These will cross-check retrieved info against multiple sources before answering. Think of it as AI fact-checking itself.

But the core idea stays the same: LLMs are powerful writers. They’re terrible librarians. RAG gives them a library.

Is RAG the same as fine-tuning?

No. Fine-tuning changes the LLM’s internal weights by retraining it on new data. That’s expensive, slow, and permanent. RAG leaves the LLM untouched. It just feeds it new information from external documents when needed. You can update your data daily with RAG. With fine-tuning, you’d need to retrain the whole model each time.

Do I need a vector database for RAG?

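You need vector search, though not always a dedicated vector database. Vector databases are built to find similar pieces of information fast using numerical embeddings. A regular relational database can’t do semantic search out of the box, because it matches exact words or numbers, although extensions such as pgvector add vector search to PostgreSQL. Pinecone, Weaviate, and Milvus are designed for this from the start. You can experiment with local options like FAISS for small projects, but for production, a managed vector DB is usually the easier route.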

Can RAG handle images or videos?

Not directly. RAG works with text. But you can convert images or videos into text summaries using multimodal models (like GPT-4o), then store those summaries as text chunks. For example, a product video can be transcribed and summarized, then indexed. The LLM answers questions about the video based on the text summary.

How much does RAG cost to implement?

It depends. For a small team, you can start for under $50/month using free tiers of OpenAI, Pinecone, and LangChain. For enterprise use, expect $5,000-$20,000/month for infrastructure, embedding calls, and maintenance. But compared to fine-tuning (which costs $50K-$500K per update), RAG saves money fast. One company saved $280K annually just by switching from retraining to RAG.
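The comparison is simple arithmetic, worked through here with the ranges quoted above. The number of fine-tuning updates per year is a hypothetical input (four is assumed below); change it to match how often your documents change.

```python
def annual_cost_range(low, high, times_per_year):
    """Scale a (low, high) per-occurrence cost range to a yearly range."""
    return low * times_per_year, high * times_per_year


# Enterprise RAG: $5,000-$20,000 per month (figures from the text above).
rag_low, rag_high = annual_cost_range(5_000, 20_000, 12)

# Fine-tuning: $50K-$500K per update (figures from the text above).
UPDATES_PER_YEAR = 4  # hypothetical: one retrain per quarter
ft_low, ft_high = annual_cost_range(50_000, 500_000, UPDATES_PER_YEAR)

print(f"RAG:         ${rag_low:,} to ${rag_high:,} per year")
print(f"Fine-tuning: ${ft_low:,} to ${ft_high:,} per year")
```

Under these assumptions RAG comes to $60,000 to $240,000 a year against $200,000 to $2,000,000 for quarterly fine-tuning. The ranges overlap at the low end, so the saving depends on where your own costs fall and on how often you would otherwise retrain.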

What if the retrieved documents are wrong or outdated?

RAG doesn’t fix bad data. It uses it. That’s why source quality matters. You need a process to review and update your documents regularly. Set up automated alerts for stale content. Some teams use a human-in-the-loop system: if the model’s confidence is low, a human reviews the answer before it’s sent. Others use RAG-2’s uncertainty scoring to flag uncertain answers automatically.
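Both safeguards, stale-content alerts and human-in-the-loop routing, reduce to small checks. The sketch below assumes each document record carries a `last_reviewed` date and that your pipeline produces some confidence score between 0 and 1; the field names, the 90-day limit, and the 0.7 threshold are all hypothetical values to tune for your own system.

```python
from datetime import date, timedelta


def stale_documents(docs, today, max_age_days=90):
    """Return the IDs of documents not reviewed within max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [d["id"] for d in docs if d["last_reviewed"] < cutoff]


def route_answer(confidence, threshold=0.7):
    """Send confident answers directly; hold the rest for a human."""
    return "send" if confidence >= threshold else "human_review"


# Hypothetical knowledge-base records.
docs = [
    {"id": "benefits-handbook", "last_reviewed": date(2025, 1, 10)},
    {"id": "password-faq", "last_reviewed": date(2025, 11, 20)},
]

print(stale_documents(docs, today=date(2025, 12, 1)))
print(route_answer(0.92), route_answer(0.41))
```

Running the staleness check on a schedule and alerting the document owner is usually enough to start with. How the confidence score is produced is left open here, since that depends on the model and retrieval setup you use.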

Is RAG secure? Can someone hack my data through it?

RAG can be more secure than fine-tuning because your documents stay in your own systems and are never baked into model weights. The LLM only sees the retrieved chunks during inference. If the LLM or embedding model is a hosted API, though, those chunks do leave your network, so check the provider’s data-handling terms. You also need access controls on your vector database and embedding models. Never expose your vector DB to the public internet. Use authentication, encryption, and audit logs. The EU AI Act requires this level of control for RAG systems handling personal data.

Next Steps: How to Start Your RAG Project

If you’re ready to try RAG, here’s a simple roadmap:

- Pick one document set: a product manual, FAQ page, or internal wiki.

- Use LangChain or LlamaIndex to chunk the text.

- Sign up for a free Pinecone or Weaviate account.

- Use OpenAI’s ada-002 embedding model to convert chunks to vectors.

- Build a simple prompt that adds retrieved chunks to your question.

- Ask 10 real questions. See if answers improve.

You’ll have your first working RAG system in under a day. No PhD required. Just curiosity and a willingness to test.

Indi s

9 December, 2025 - 21:25 PM

This made me realize how much I took RAG for granted. I thought LLMs just knew things, but now I see they’re like a student who memorized a textbook from 2020 and still thinks it’s current. The part about traceability is huge - being able to check where the answer came from feels like having a safety net.

Rohit Sen

10 December, 2025 - 10:35 AM

Of course RAG works. Everyone’s jumping on it because it’s the new shiny thing. But let’s be real - if your data’s garbage, RAG just makes garbage sound smarter. And vector databases? Overengineered for 90% of use cases. Just use grep and call it a day.

Vimal Kumar

12 December, 2025 - 02:17 AM

Really appreciate how clear this breakdown is. For anyone new to this, start small - pick one doc, test it, see the difference. No need to build a whole system on day one. I helped a teammate set up a RAG for our onboarding docs last week and it cut their support tickets by half. It’s not magic, but it’s close.

Amit Umarani

12 December, 2025 - 18:10 PM

There’s a missing comma after ‘that’s where retrieval-augmented generation (RAG) comes in.’ Also, ‘it’s a smart middle ground’ - should be ‘it is’ if you’re going for formal tone. And ‘chunking’ is misspelled as ‘chunking’ in one place? No - wait, it’s correct. My bad. But still, the hyphenation in ‘self-correcting’ is inconsistent. This article needs an editor.

Noel Dhiraj

12 December, 2025 - 23:46 PM

If you’re reading this and thinking RAG is too technical - just try it. Grab a PDF, throw it into LangChain, use the free tier, ask a question. You’ll see the difference in minutes. No PhD needed. Just curiosity. That’s all it takes. Seriously, just do it.

vidhi patel

13 December, 2025 - 00:35 AM

It is imperative to note that the term 'hallucinations' is both misleading and anthropomorphizing. Large language models do not hallucinate; they generate statistically probable outputs based on training data. To ascribe cognitive errors to machines is not only inaccurate but also dangerously anthropocentric. Furthermore, the article fails to adequately address the epistemological implications of conflating retrieval with knowledge.

Priti Yadav

14 December, 2025 - 04:29 AM

Wait - so you’re telling me this RAG thing is just a fancy way to make AI read your internal docs? And no one’s talking about how Google and Microsoft are using this to track everything you type? They’re indexing your company’s manuals and feeding it back into the training data. This isn’t about accuracy - it’s about surveillance. They’re building a corporate memory palace and we’re handing them the keys.

Ajit Kumar

15 December, 2025 - 15:48 PM

Let me begin by stating that the entire premise of this article is fundamentally flawed in its implicit assumption that LLMs are ever meant to be trusted with factual accuracy - they are language models, not knowledge repositories. The notion that RAG ‘solves’ hallucinations is a gross oversimplification. Hallucinations are not bugs - they are emergent properties of probabilistic text generation. RAG merely masks them with citations that may themselves be incorrect, outdated, or contextually misaligned. Moreover, the claim that RAG is ‘the default choice for enterprises’ is statistically unsupported without peer-reviewed data, and the cited Databricks survey lacks methodological transparency. Furthermore, the use of ‘you’ throughout is unprofessional and undermines the technical credibility of the piece. One must also question the validity of the ‘92% user satisfaction’ metric - was it measured via Likert scale? Was the sample size statistically significant? Were control groups employed? Without these details, such claims border on marketing puffery. And let us not forget: if your documents are biased, RAG merely amplifies them - a point mentioned, but not sufficiently emphasized. This is not a solution. It is a band-aid on a hemorrhage.

Diwakar Pandey

16 December, 2025 - 23:58 PM

Just wanted to say this is one of the clearest explanations of RAG I’ve read. I work in a small startup and we were debating whether to fine-tune or go RAG. We went RAG after reading this - three weeks later, our support bot actually works. No retraining, no server costs, just better docs and smarter chunking. Also, the part about sentence-aware chunking? Life saver. We had one guy who spent weeks debugging until he realized his chunks were cutting mid-sentence. Classic.

Geet Ramchandani

17 December, 2025 - 07:28 AM

Let’s be honest - RAG is just a crutch for poorly trained models. The fact that we need to build entire pipelines just to make LLMs not lie says everything about how broken these systems are. And don’t get me started on the vector databases - Pinecone costs a fortune, Weaviate is a nightmare to deploy, and Milvus? Good luck finding someone who actually knows how to tune it. Plus, you’re still at the mercy of your data quality. If your HR manual says ‘men are better leaders,’ your AI will say it too. And you think your ‘human review’ process is going to catch that? Please. Most companies don’t even audit their documentation. This whole thing is a house of cards built on corporate wishful thinking. And the tools listed? LangChain? More like LangChain of chaos. Half the tutorials are outdated. The community is a graveyard of broken GitHub repos. This isn’t innovation - it’s technical debt with a fancy name.