When you ask an LLM a question about your medical records, financial history, or proprietary business data, where does that information go? If you’re using a standard cloud-based AI service, the answer is: it’s decrypted, processed in plain text, and potentially exposed to anyone with access to the server, even if that’s just a system administrator or a compromised script. This isn’t theoretical. It’s happening right now in hundreds of enterprises trying to use powerful AI models without realizing they’re handing over their most sensitive data in plain sight.

Why LLM Inference Is a Security Nightmare

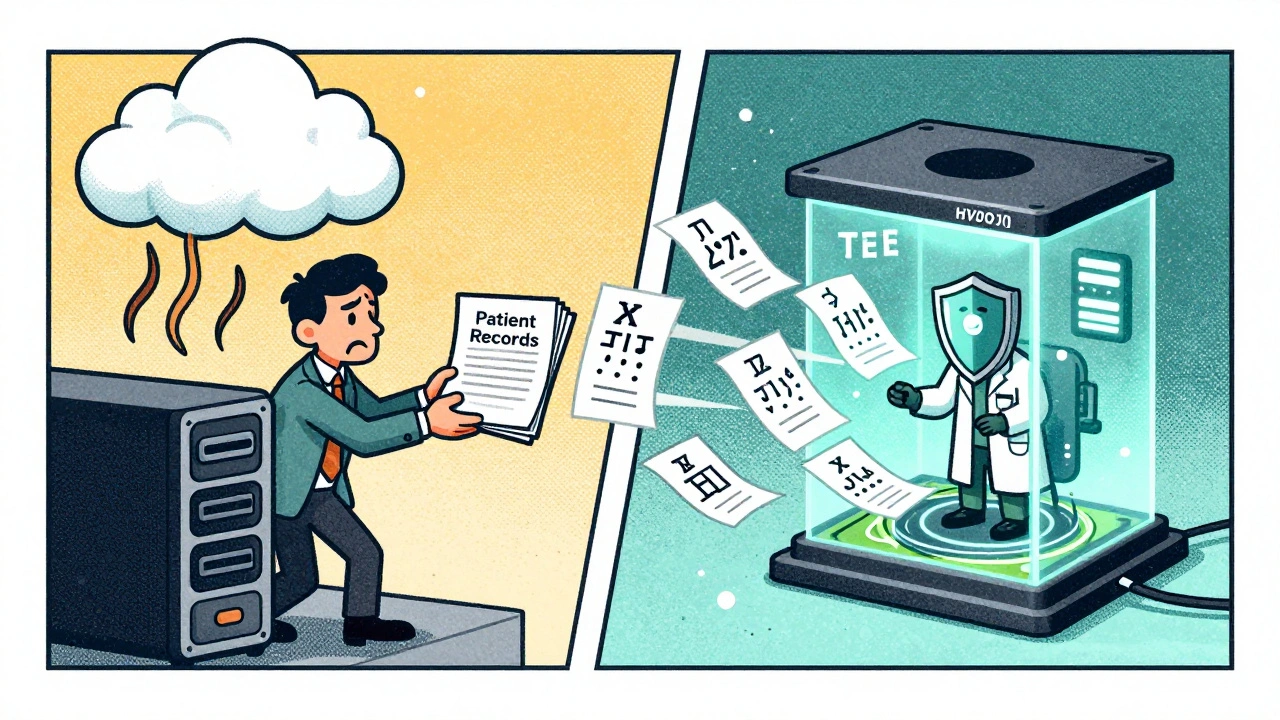

Large Language Models aren’t just big software. They’re expensive, proprietary assets. Training a 70B-parameter model can cost over $50 million. On the other side, users are feeding in personally identifiable information, trade secrets, legal documents, and health records. The traditional model (send data to the cloud, let the model run on untrusted hardware, get the answer back) is broken for anything that matters. This is the AI privacy paradox: you need powerful AI to make sense of sensitive data, but using that AI means exposing the data. For years, encryption was the go-to answer, but encryption only protects data at rest or in transit. Once the data lands on the server, it has to be decrypted to be processed. That’s the gap. Confidential computing closes it.

What Confidential Computing Actually Does

Confidential computing isn’t magic. It’s hardware. Specifically, it’s Trusted Execution Environments (TEEs): secure, isolated areas inside a processor where code and data are protected even from the operating system, hypervisor, or cloud provider. Think of it like a vault inside the CPU. Even if someone owns the server, they can’t peek inside the vault. In LLM inference, this means:

- Your prompt is encrypted before it leaves your device

- It travels through the cloud network still encrypted

- Only inside the TEE does it get decrypted

- The LLM runs its calculations on that data inside the TEE, where the hardware keeps it isolated from the host and encrypted in system memory

- The response is re-encrypted before it leaves the secure environment
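The five steps above can be sketched in a few lines of Python. This is a toy under stated assumptions, not how any TEE vendor implements it: the session key is assumed to have been released to the enclave only after remote attestation, the cipher is a homemade HMAC-SHA256 keystream with encrypt-then-MAC standing in for a vetted scheme such as HPKE or AES-GCM, and `hypothetical_model` stands in for the LLM. What it does show is the shape of the data path: the untrusted host only ever relays ciphertext, and the prompt exists in plain text only inside `enclave_handle`. The hardware memory encryption that protects that function's working memory cannot be modeled in plain Python.

```python
import hashlib
import hmac
import secrets

# TOY CRYPTO, for illustrating the data flow only. Real deployments use a vetted
# scheme such as HPKE or TLS with AES-GCM; do not reuse this construction.

def _subkey(key: bytes, label: bytes) -> bytes:
    """Derive separate encryption and MAC keys from one session key."""
    return hmac.new(key, label, hashlib.sha256).digest()

def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """HMAC-SHA256 in counter mode, used as a stream-cipher keystream."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])

def seal(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt-then-MAC. Output layout: nonce (16) || ciphertext || tag (32)."""
    nonce = secrets.token_bytes(16)
    stream = _keystream(_subkey(key, b"enc"), nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(_subkey(key, b"mac"), nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag

def open_sealed(key: bytes, blob: bytes) -> bytes:
    """Verify the tag first, then decrypt; raises ValueError on tampering."""
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(_subkey(key, b"mac"), nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("ciphertext failed authentication")
    stream = _keystream(_subkey(key, b"enc"), nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))

def hypothetical_model(prompt: bytes) -> bytes:
    """Stand-in for the LLM running inside the TEE."""
    return b"summary of: " + prompt

def enclave_handle(session_key: bytes, sealed_prompt: bytes) -> bytes:
    """Runs inside the TEE: the only place the prompt exists in plain text."""
    prompt = open_sealed(session_key, sealed_prompt)
    return seal(session_key, hypothetical_model(prompt))

# ASSUMPTION: this key was delivered to the enclave only after remote attestation.
session_key = secrets.token_bytes(32)
request = seal(session_key, b"Patient 123: HbA1c 7.9%")   # encrypted on the client
response = enclave_handle(session_key, request)           # host relays ciphertext only
print(open_sealed(session_key, response).decode())        # decrypted on the client
```

Running it prints the placeholder answer on the client side. Changing even one byte of `request` in transit makes `open_sealed` raise `ValueError`, which is the property you want from the encrypted channel into the enclave.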

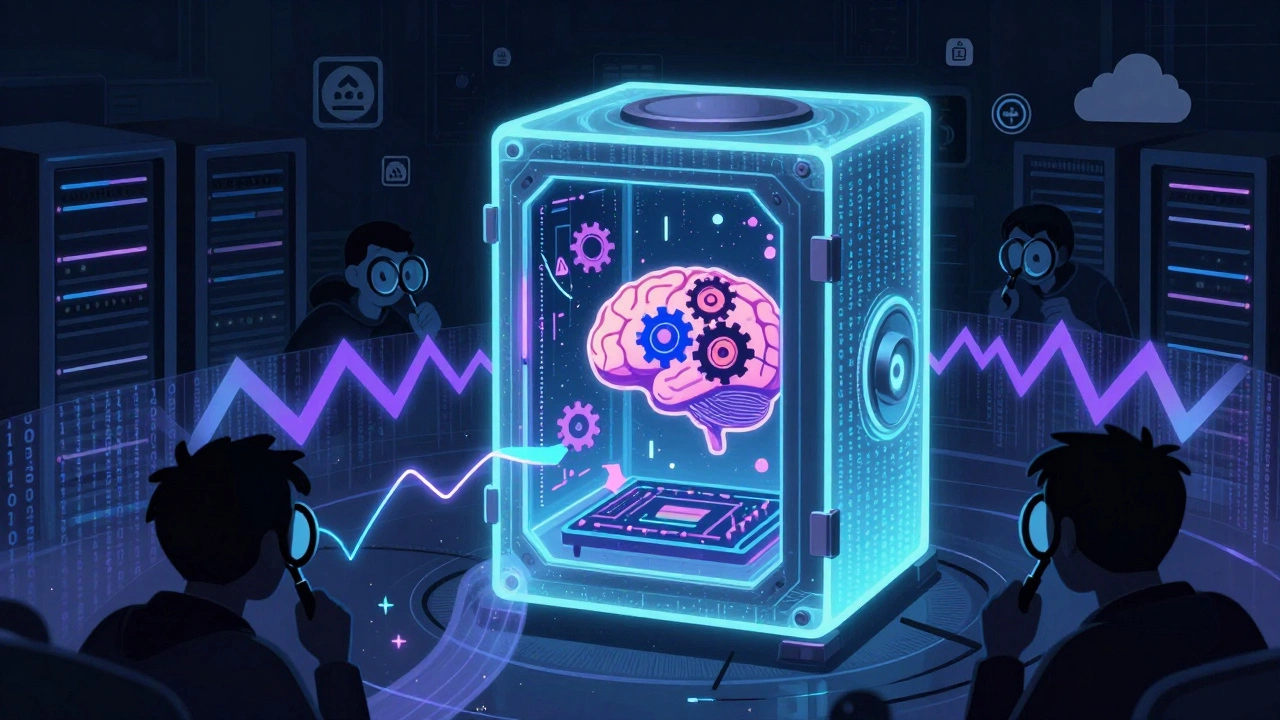

How TEEs Work in Practice

There are three main hardware platforms powering this today:

- Intel TDX (Trust Domain Extensions): Used in modern Xeon processors. Provides memory encryption and remote attestation.

- AMD SEV-SNP (Secure Encrypted Virtualization - Secure Nested Paging): AMD’s answer to Intel TDX. Stronger against certain memory attacks.

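- NVIDIA GPU TEEs: Built into H100, H200, and now Blackwell B200 GPUs. This is the game-changer, because LLM inference runs mostly on GPUs.

Whichever platform you choose, secure inference has to answer three questions before a model is allowed to run: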

- Is this a real, unmodified TEE? (via cryptographic attestation)

- Does the cloud provider have permission to load this model? (mutual attestation)

- Is the model’s decryption key securely delivered only to this specific enclave?
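These questions can be sketched as a key-release check, the step a key broker performs before handing a model's decryption key to an enclave. This is a minimal sketch under loud assumptions: `HARDWARE_ROOT_KEY` and `hardware_sign` are toy stand-ins (an HMAC instead of the vendor's asymmetric signature and certificate chain), and the allowlist, image names, and public key are hypothetical. Checks 1 and 2 correspond to "is this a real, unmodified TEE?", and check 3 binds the report to a fresh nonce and to the enclave's own public key, so the key can only go to that specific enclave. The permission question is a policy decision and is not modeled here.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass

# ASSUMPTION: HARDWARE_ROOT_KEY is a toy stand-in for the chip vendor's signing key.
# Real reports (Intel TDX quotes, AMD SEV-SNP reports, NVIDIA GPU attestation) are
# signed with asymmetric keys and verified against the vendor's certificate chain.
HARDWARE_ROOT_KEY = secrets.token_bytes(32)

@dataclass(frozen=True)
class Report:
    measurement: bytes   # hash of the software image loaded into the TEE
    report_data: bytes   # caller-supplied binding: sha256(nonce || enclave public key)
    signature: bytes

def hardware_sign(measurement: bytes, report_data: bytes) -> Report:
    """Toy stand-in for the TEE hardware producing a signed attestation report."""
    sig = hmac.new(HARDWARE_ROOT_KEY, measurement + report_data, hashlib.sha256).digest()
    return Report(measurement, report_data, sig)

# Hypothetical allowlist of approved inference-server builds.
APPROVED = {hashlib.sha256(b"inference-server v1.4 image").digest()}

def release_model_key(report: Report, nonce: bytes, enclave_pubkey: bytes, model_key: bytes):
    """Return (recipient, key) only if every check passes; otherwise None."""
    expected = hmac.new(HARDWARE_ROOT_KEY, report.measurement + report.report_data,
                        hashlib.sha256).digest()
    if not hmac.compare_digest(report.signature, expected):
        return None   # 1. not signed by genuine TEE hardware
    if report.measurement not in APPROVED:
        return None   # 2. real TEE, but running modified or unknown code
    if report.report_data != hashlib.sha256(nonce + enclave_pubkey).digest():
        return None   # 3. stale report, or bound to a different enclave's key
    # A real key broker would now encrypt model_key to enclave_pubkey (e.g. with HPKE),
    # so only this enclave can unwrap it.
    return enclave_pubkey, model_key

nonce = secrets.token_bytes(16)                 # fresh per request, chosen by the verifier
enclave_pubkey = b"hypothetical-enclave-public-key"
good = hardware_sign(hashlib.sha256(b"inference-server v1.4 image").digest(),
                     hashlib.sha256(nonce + enclave_pubkey).digest())
patched = hardware_sign(hashlib.sha256(b"patched image").digest(), good.report_data)
print(release_model_key(good, nonce, enclave_pubkey, b"model-key") is not None)  # True
print(release_model_key(patched, nonce, enclave_pubkey, b"model-key"))           # None
```

Binding the nonce into `report_data` stops an attacker from replaying an old but valid report, and binding the public key stops them from attaching a genuine report to a key they control.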

Cloud Providers Are Betting Big

Every major cloud provider has a confidential computing offering, but they’re not equal.

| Platform | Hardware Support | Performance Overhead | Model Loading | Kubernetes Native |
|---|---|---|---|---|
| NVIDIA H100/H200/B200 | GPU TEEs | 1-5% | Best (mutual attestation) | No |
| Azure Confidential Computing | Intel TDX, AMD SEV-SNP | 5-10% | Good (application-level encryption) | Yes |
| AWS Nitro Enclaves | Nitro Hypervisor isolation | 10-15% | Poor (no GPU TEE) | Partial |
| Red Hat OpenShift + CVMs | Intel TDX, AMD SEV-SNP | 5-12% | Good (with Tinfoil Security) | Yes |
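To put the overhead column in concrete terms, the sketch below applies each platform's range from the table to a single request. The 800 ms baseline is a hypothetical figure, not a measurement, and the percentages are the table's own estimates; real overhead depends on model size, batch size, and how much data crosses the TEE boundary.

```python
# Overhead ranges as listed in the comparison table (percent added to inference time).
OVERHEAD_PCT = {
    "NVIDIA H100/H200/B200": (1, 5),
    "Azure Confidential Computing": (5, 10),
    "AWS Nitro Enclaves": (10, 15),
    "Red Hat OpenShift + CVMs": (5, 12),
}

BASELINE_MS = 800.0   # ASSUMPTION: hypothetical latency of one request without a TEE

def latency_range(baseline_ms: float, low_pct: float, high_pct: float) -> tuple[float, float]:
    """Apply a percentage overhead range to a baseline latency."""
    return baseline_ms * (1 + low_pct / 100), baseline_ms * (1 + high_pct / 100)

for platform, (low, high) in OVERHEAD_PCT.items():
    best, worst = latency_range(BASELINE_MS, low, high)
    print(f"{platform:<30} {best:5.0f}-{worst:5.0f} ms")
```

With that baseline, GPU TEEs land at roughly 808 to 840 ms per request, while Nitro Enclaves land at 880 to 920 ms.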

Real-World Impact: Who’s Using This?

In healthcare, a European insurer replaced its manual claims review system with an AI assistant running in Azure Confidential VMs. Patient data stayed encrypted end-to-end. Accuracy stayed at 92%. Breach risk dropped to near zero. A Fortune 500 bank moved its credit risk model from on-premises servers to AWS Nitro Enclaves. The model weights were never exposed to the cloud provider. The result? Compliance with GDPR and Basel III requirements without sacrificing model performance. But it’s not all smooth sailing. One financial firm tried loading a 30B-parameter model into an Intel SGX enclave. It took 47 minutes. Their real-time system couldn’t wait. They had to redesign their pipeline to use smaller models or pre-process inputs into encrypted embeddings.

What You Need to Get Started

If you’re considering confidential computing for LLM inference, here’s what you actually need:

- Hardware: Servers with Intel TDX, AMD SEV-SNP, or NVIDIA H100+ GPUs. No point in trying this on older hardware.

- Cloud or on-prem: Most companies start in the cloud. But if you need data residency (like banks or governments), you’ll need on-prem TEE-capable servers.

- Secure model loading: This is the hardest part. You need mutual attestation, where the enclave proves it’s real and your model proves it’s authorized to run there.

- Integration: Your MLOps pipeline must now handle encrypted prompts, attestation checks, and encrypted outputs. Tools like Red Hat’s sandboxed containers or Azure’s Attested Oblivious HTTP help.
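The "your model proves it's authorized" half of secure model loading can be sketched as a signed manifest that the enclave checks before loading any weights. Everything here is hypothetical: the manifest fields, the model name, and the measurement string are illustrative, and `OWNER_KEY` is an HMAC key standing in for the model owner's asymmetric signing key (with a real signature, the enclave would hold only the public key). The sketch also assumes `enclave_measurement` already came from a verified attestation report.

```python
import hashlib
import hmac
import json
import secrets

# ASSUMPTION: OWNER_KEY is a toy stand-in for the model owner's signing key;
# a real pipeline would use an asymmetric signature scheme instead of HMAC.
OWNER_KEY = secrets.token_bytes(32)

def sign_manifest(manifest: dict) -> tuple[bytes, bytes]:
    """Model owner: serialize the manifest canonically and sign it."""
    body = json.dumps(manifest, sort_keys=True).encode()
    return body, hmac.new(OWNER_KEY, body, hashlib.sha256).digest()

def authorize_load(body: bytes, sig: bytes, weights: bytes, enclave_measurement: str) -> bool:
    """Inside the enclave: decide whether these weights may be loaded here."""
    expected = hmac.new(OWNER_KEY, body, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):
        return False                      # manifest not issued by the model owner
    manifest = json.loads(body)
    if hashlib.sha256(weights).hexdigest() != manifest["weights_sha256"]:
        return False                      # weights differ from what the owner published
    # The owner authorizes specific attested enclave builds, identified by measurement.
    return enclave_measurement in manifest["allowed_measurements"]

weights = b"placeholder-model-weights"    # stand-in for the real weight files
body, sig = sign_manifest({
    "model": "example-llm",                        # hypothetical model name
    "weights_sha256": hashlib.sha256(weights).hexdigest(),
    "allowed_measurements": ["a1b2c3"],             # hypothetical enclave measurement
})
print(authorize_load(body, sig, weights, "a1b2c3"))         # True
print(authorize_load(body, sig, weights, "ffffff"))         # False: enclave not authorized
print(authorize_load(body, sig, weights + b"!", "a1b2c3"))  # False: weights altered
```

Keeping the weights hash and the allowed measurements in one signed document means that swapping in modified weights, or moving the model to an unapproved enclave build, makes the check fail.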

The Bigger Picture: Why This Is the Future

The market for confidential AI computing is exploding. It was worth $1.7 billion in Q3 2025. By 2027, it’ll be over $8 billion. Why? Because regulations won’t wait. GDPR, HIPAA, CCPA, and new EU AI Act rules require that sensitive data be protected during processing, not just stored or transferred. Confidential computing is the most practical technology available today for meeting that requirement. By 2027, Gartner predicts 90% of healthcare and financial services firms will use it. Right now, only 15% do. That gap is closing fast. And it’s not just about compliance. It’s about trust. Customers won’t give their data to AI systems they can’t verify are secure. Investors won’t fund AI startups that can’t prove their models aren’t leaking IP. Confidential computing turns security from a cost into a competitive advantage.

What’s Next?

NVIDIA’s Blackwell B200 GPUs now support 200B+ parameter models with under 3% performance loss. Microsoft just added GPU TEE support to Azure Confidential VMs. The Confidential Computing Consortium is building standard APIs for secure LLM serving. The next step? Making this easy. Right now, you need a team of security engineers to set it up. In two years, it’ll be a toggle in your cloud console. Until then, if you’re handling sensitive data with LLMs, you’re playing Russian roulette with your privacy and your IP. Confidential computing isn’t optional anymore. It’s the baseline.

What is the difference between encryption-in-use and traditional encryption?

Traditional encryption protects data at rest (on disk) or in transit (over the network). Encryption-in-use means the data is protected even while being processed-meaning it never exists in plain text on the server. Confidential computing achieves this using hardware-based Trusted Execution Environments (TEEs) that encrypt memory while the model runs.

Can I use confidential computing with any LLM model?

Technically yes, but practically, it depends on size and hardware. Large models (70B+ parameters) require massive GPU memory and fast attestation. NVIDIA H100/B200 GPUs are the only current platforms that handle these models with under 5% performance loss. Smaller models can run on Intel or AMD TEEs, but loading times may be too slow for real-time use.
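A back-of-the-envelope calculation shows why model size matters so much here. The sketch assumes 16-bit weights (2 bytes per parameter) and an 80 GB H100, and counts weights only, so real deployments need extra headroom for the KV cache and activations. It also computes the effective load rate implied by the 47-minute load of a 30B-parameter model into an Intel SGX enclave described earlier, under the same 2-bytes-per-parameter assumption, since the precision of that model is not stated.

```python
import math

BYTES_PER_PARAM = 2     # ASSUMPTION: 16-bit (FP16/BF16) weights, no quantization
GPU_MEMORY_GB = 80      # H100 80 GB variant

def weights_gb(params_billion: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Size of the weights alone, in decimal GB (1e9 params x bytes / 1e9 bytes)."""
    return params_billion * bytes_per_param

def min_gpus(params_billion: float) -> int:
    """Lower bound: ignores KV cache, activations, and runtime overhead."""
    return math.ceil(weights_gb(params_billion) / GPU_MEMORY_GB)

for size in (8, 30, 70):
    print(f"{size}B parameters: {weights_gb(size):.0f} GB of weights, "
          f"at least {min_gpus(size)} x {GPU_MEMORY_GB} GB GPU(s)")

# Effective throughput implied by the 47-minute load of a 30B model into Intel SGX,
# under the same 16-bit assumption.
load_seconds = 47 * 60
print(f"Implied load rate: {weights_gb(30) * 1000 / load_seconds:.1f} MB/s")
```

Under these assumptions a 70B model needs about 140 GB for its weights alone, so at least two 80 GB GPUs, and the SGX anecdote works out to roughly 21 MB/s.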

Is confidential computing only for cloud providers?

No. While AWS, Azure, and NVIDIA offer managed services, you can also deploy confidential computing on-premises using TEE-capable servers. Many financial institutions and government agencies prefer on-prem solutions to meet data residency rules. Red Hat OpenShift supports TEEs in private data centers.

Why is NVIDIA’s approach better than Intel’s for LLMs?

LLM inference is dominated by GPU workloads. Intel TDX runs on CPUs, which are much slower than GPUs for LLM inference. NVIDIA’s TEEs are built directly into H100 and B200 GPUs, so the entire model and data stay inside the GPU’s protected memory during inference, and transfers between the CPU and GPU are encrypted. This cuts performance loss from 20% (CPU TEEs) to under 5%. It’s the only way to run large models at scale without sacrificing speed.

Does confidential computing protect against insider threats?

Yes. That’s one of its biggest strengths. Even if a cloud provider’s admin, a rogue employee, or malware gains full control of the server, they can’t access data inside the TEE. The memory is encrypted with keys that never leave the processor, and secrets such as model keys are released to the TEE only after it passes remote attestation. This makes insider attacks nearly impossible without physical access to the chip itself.

What are the biggest challenges in adopting confidential computing?

The top three are: 1) Complex setup: attestation chains, secure model loading, and hardware compatibility require deep expertise; 2) Performance overhead on older hardware: Intel SGX can slow LLMs by 25%; 3) Limited availability: NVIDIA’s best TEEs aren’t available in all regions yet. Many teams spend months just getting the first model to load securely.

Vishal Gaur

9 December, 2025 - 23:16

so i read this whole thing and honestly? my brain is fried. like, i get the whole tEE thing, but why does it take 47 minutes to load a model? that’s not secure, that’s just slow. and why do i need a whole team of engineers just to turn on a toggle? if this is the future, i hope the future comes with a ‘please make it simple’ button. also, typo: ‘tinfoil security’ lol, is that a new startup or just a paranoid dev? 😅

Nikhil Gavhane

11 December, 2025 - 03:04 AM

This is actually one of the most hopeful things I’ve read about AI in a long time. For years we’ve been told ‘trust the cloud’ but nobody ever asked who’s watching the watchers. The fact that your medical data can now stay encrypted even while being processed? That’s not just tech-that’s dignity. I work in healthcare IT and I’ve seen too many breaches. This feels like the first real step toward AI that respects people, not just exploits data.

Rajat Patil

11 December, 2025 - 10:40 AM

It is important to note that the implementation of confidential computing represents a significant advancement in data protection. The use of trusted execution environments ensures that sensitive information remains secure during processing. This method aligns with international standards for data privacy and is particularly beneficial for industries such as healthcare and finance. Organizations should consider adopting this technology to meet regulatory obligations and to build public trust.

deepak srinivasa

11 December, 2025 - 14:18

Wait, so if NVIDIA’s GPU TEEs are so fast, why isn’t everyone just using them? Is it cost? Availability? Or is there some hidden downside? I’m curious if the encryption key delivery system is actually foolproof or if there’s still a risk of supply chain compromise. Also, what happens if the attestation server goes down? Does the whole system just lock up?

pk Pk

11 December, 2025 - 17:39

Look, if you’re still using plain-text LLM inference on sensitive data, you’re not just being risky-you’re being irresponsible. This isn’t a ‘nice to have.’ It’s the new baseline. I’ve seen startups get crushed because their investors found out their AI was leaking client data. Don’t wait for a breach to wake up. Start testing NVIDIA’s H100 setups now. Use Azure if you need Kubernetes. But stop pretending encryption-at-rest is enough. Your users deserve better.

NIKHIL TRIPATHI

13 December, 2025 - 02:45 AM

Really solid breakdown. I’ve been playing with Azure’s confidential VMs for a small NLP project and the overhead is noticeable but manageable. The attestation part is a nightmare though-had to rewrite half my CI/CD pipeline just to handle the key exchange. Also, big +1 on the Red Hat OpenShift mention. If you’re already in Kubernetes land, that’s the smoothest path forward. One thing missing: what about open-source models? Can you load Llama 3 into a TEE without vendor lock-in? Would love to see a guide on that.

Shivani Vaidya

14 December, 2025 - 00:25

This is the future. No more excuses.