Generative AI doesn’t make mistakes because it’s broken. It makes mistakes because you didn’t ask it the right way. You type a prompt, get a weird or wrong answer, and assume the model is flawed. But the real issue? Your prompt. Most AI failures aren’t about the model; they’re about how you frame the question. That’s where error analysis for prompts comes in. It’s not guesswork. It’s a repeatable system for finding exactly why your AI is failing and how to fix it.

Why Your AI Keeps Getting It Wrong

You’ve probably seen it: an AI gives you a detailed answer… that’s completely made up. It cites fake studies, invents non-existent laws, or mixes up facts in ways that seem plausible but are dead wrong. This isn’t a glitch; it’s called hallucination. Studies show even top models like GPT-4 hallucinate in 15% to 37% of responses, depending on the task. In customer service bots, that means angry customers, legal risks, or lost trust.

The problem isn’t that the AI is dumb. It’s that prompts are too vague. "Write a summary of quantum computing"? That’s not a prompt; it’s a suggestion. Without structure, the AI fills in the blanks with whatever seems likely, not what’s true. And it doesn’t know the difference.

Manual review doesn’t cut it. One team at a SaaS company reviewed 200 AI responses by hand and missed 43% of critical errors. They thought everything looked fine until they ran a structured error analysis. It turned out that 34% of responses had flawed reasoning, 28% lacked key facts, and 26% used the wrong format, all invisible without a system.

The Five-Step Error Analysis Process

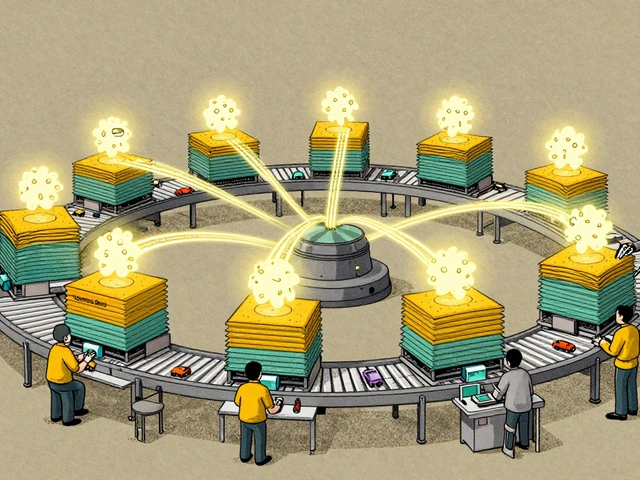

Error analysis isn’t magic. It’s a workflow. Here’s how it works, based on real implementations from companies like Nurture Boss, GitHub Copilot, and Mayo Clinic.

- Build a representative dataset - Gather 50 to 100 real-world prompts your users actually send. Don’t make them up. Use logs from your chatbot, support tickets, or internal queries. A team at Agenta found that using real prompts cut error detection time by 60% compared to artificial examples.

- Run the prompts and track outputs - Feed each prompt into your AI and record the response. Use tools like Agenta or Galileo.ai to auto-capture results. Don’t just look at the final answer; save the full output, including intermediate steps if you’re using chain-of-thought prompting.

- Classify the errors - Label every mistake. The most common types? Incorrect reasoning (34%), lack of knowledge (28%), wrong format (26%), and bad calculation (12%). You’ll find patterns. One client discovered that 70% of their errors came from prompts that didn’t specify units: "Calculate revenue" instead of "Calculate revenue in USD for Q1 2025".

- Fix the prompts - Don’t tweak randomly. Use targeted fixes. For reasoning errors, add "Think step-by-step and explain your logic before answering." For format issues, say "Respond in JSON with keys: title, summary, sources." For knowledge gaps, include reference materials or say "Only answer if you’re 90% sure. Otherwise, say 'I don't know.'" One team reduced reasoning errors by 63% with just this one addition.

- Test on holdout data - Never just retest on the same set. Set aside 20% of your prompts as a validation group. If your error rate drops from 35% to 11% on your training set but jumps back to 22% on holdout data, you’ve overfit. You fixed the prompts for your examples, not real users.
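The holdout check in the last step is easy to automate. Below is a minimal Python sketch, assuming each output has already been labeled by hand with an error type (or `None` when it was correct). `Result`, `split_holdout`, `error_rate`, and `looks_overfit` are hypothetical helper names, and the 5-point tolerance is an arbitrary illustrative threshold, not a standard.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    prompt: str
    error_type: Optional[str]  # None means the output was judged correct


def split_holdout(prompts, holdout_fraction=0.2, seed=0):
    """Shuffle a copy of the prompts and set aside a fraction as a holdout group."""
    shuffled = list(prompts)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_holdout = round(len(shuffled) * holdout_fraction)
    cut = len(shuffled) - n_holdout
    return shuffled[:cut], shuffled[cut:]


def error_rate(results):
    """Fraction of results that carry an error label."""
    if not results:
        return 0.0
    return sum(r.error_type is not None for r in results) / len(results)


def looks_overfit(tuned_rate, holdout_rate, tolerance=0.05):
    """True when the holdout error rate exceeds the tuned-set rate by more than `tolerance`."""
    return holdout_rate - tuned_rate > tolerance


# Usage: tune prompts against `tuned` only, then score both groups.
tuned, holdout = split_holdout([f"prompt {i}" for i in range(100)])
print(len(tuned), len(holdout))  # 80 20
print(looks_overfit(0.11, 0.22))  # True
```

With the numbers from the example above, the holdout rate is 11 points worse than the tuned set, so the check flags it. The key design choice is that the holdout prompts are never looked at while editing the prompt.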

What Metrics Actually Matter

Stop guessing. Start measuring. Top teams track seven key metrics, not one vague "feeling." Here’s what to monitor:

- Factual accuracy - What percentage of claims are verifiable? Use ground truth data from trusted sources.

- Hallucination rate - How often does the AI invent facts? Aim for under 8% in production.

- Completeness - Did it answer all parts of the prompt? Missing one bullet point in a checklist is still a failure.

- Coherence - Rate responses 1-5 on how logically they flow. A score below 3.5 means the AI is jumping between ideas.

- Format compliance - Binary: did it follow your required structure? JSON? Markdown table? Bullet points?

- Semantic accuracy - How close is the AI’s answer to an ideal reference answer? Measure it with cosine similarity between embeddings of the two.

- Safety compliance - Did it avoid harmful, biased, or off-policy language? This isn’t optional.
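A few of these metrics are mechanical enough to script. The sketch below assumes the JSON format from the "Fix the prompts" step (keys `title`, `summary`, `sources`) and, to stay self-contained, approximates semantic similarity with simple word-count vectors; in practice you would likely compute cosine similarity on embeddings from a model of your choice. All function names here are hypothetical, and the hallucination flags are assumed to come from human or reference-based review.

```python
import json
import math
import re
from collections import Counter


def format_compliant(output, required_keys=("title", "summary", "sources")):
    """Binary check: is the output a JSON object that contains every required key?"""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and all(key in data for key in required_keys)


def term_vector(text):
    """Lowercased word counts: a crude stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(answer, reference):
    """Cosine of the angle between two word-count vectors (1.0 = same direction)."""
    a, b = term_vector(answer), term_vector(reference)
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values()) * sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def hallucination_rate(flags):
    """flags: one boolean per response, True if a reviewer found an invented fact."""
    return sum(flags) / len(flags) if flags else 0.0


print(format_compliant('{"title": "Q1", "summary": "Up 4%", "sources": []}'))  # True
print(format_compliant("Title: Q1"))  # False
print(hallucination_rate([True, False, False, False]) < 0.08)  # False: 25% misses the 8% target
```

`format_compliant` maps directly onto the binary format metric. The similarity score is only as good as the vectors you feed it, so treat the word-count version as a placeholder.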

Tools That Make It Real

You don’t need to build this from scratch. Tools exist to automate the heavy lifting.

- Galileo.ai - Offers adversarial testing with 200+ stress prompts designed to trigger failures. One financial advice bot caught a safety gap this way that manual review missed.

- Agenta Platform - Lets you isolate which part of a prompt causes which error. Change one phrase, see the impact instantly.

- LangChain - Open-source. Great for developers, but documentation is weak. You’ll need to build your own evaluation logic.

Where It Works, and Where It Doesn’t

Error analysis isn’t a universal fix. It shines where accuracy matters and falls short where "correct" is subjective:

- Medical advice - Mayo Clinic cut errors from 31% to 9%.

- Technical documentation - GitHub Copilot’s docs saw errors drop from 28% to 7%.

- Customer support - 78% of enterprise deployments are in customer support. One support bot went from a 35% to an 11% error rate in six weeks.

- Poetry or storytelling - Anthropic found only an 8% error reduction. What’s "wrong" here is subjective.

- Open-ended brainstorming - If you want wild ideas, strict error checks will kill the spark.

The Hidden Risks

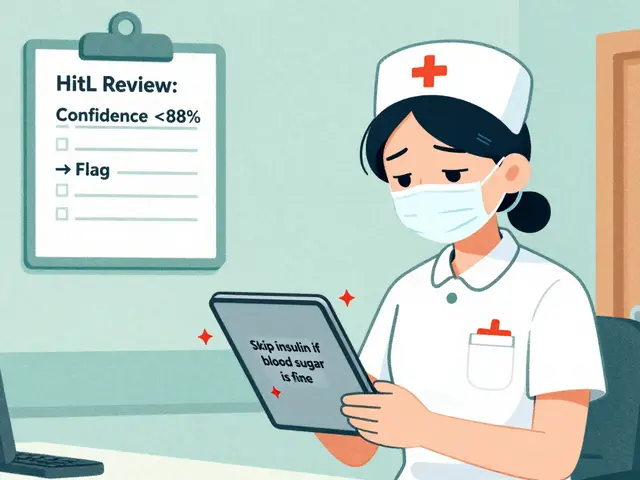

Even good systems have blind spots. Dr. Emily Bender from the University of Washington warns that automated tools miss cultural and contextual errors. An AI might say "You should get married before 30": technically not a factual error, but deeply offensive in some cultures. No metric catches that. MIT’s AI Lab found that binary pass/fail evaluations miss 23% of subtle errors. A response might be "correct" but condescending, overly verbose, or biased in tone. Fact-checking doesn’t catch those.

And here’s the biggest trap: overfitting. One Reddit user shared that their team got training errors down to 5%… then production errors spiked to 22%. Why? They tuned prompts only to their test set, and real users phrased things differently.

The fix? Always include human review. Use error analysis to find the big problems. Let humans catch the quiet ones.

What Comes Next

The field is moving fast. In December 2024, Galileo.ai released automated error clustering that identifies new error types with 92% accuracy across 10,000+ tests. The Prompt Engineering Consortium just standardized 47 error types across 7 categories. By 2026, ISO/PAS 55010 is expected to set official error-rate thresholds for different industries. Real-time feedback loops are coming too: Agenta’s Q3 2025 update will let AI systems learn from live user corrections, turning mistakes into immediate improvements. But the core won’t change: error analysis for prompts is the highest-ROI technique in AI engineering. Hamel Husain, former GitHub AI lead, says it gives you 10x more improvement per hour than random prompt tweaking.

Start Small. Fix Fast.

You don’t need a team or a budget. Start today:

- Pick one task your AI handles poorly: maybe it misreads dates or gives wrong product specs.

- Grab 20 real examples from your logs.

- Run them. Write down every mistake.

- Group them into types: format? reasoning? missing info?

- Fix the top two causes with clear instructions in your prompt.

- Test the revised prompt on 5 new examples and compare the error rate with your first run.
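If you log each mistake with a short label, grouping errors and picking the top two causes reduce to counting. A minimal sketch, assuming labels like `"format"`, `"reasoning"`, and `"missing_info"` (hypothetical category names mirroring the types above); the fix strings are the targeted instructions quoted in the five-step process, and `top_causes` and `patch_prompt` are illustrative helpers, not part of any tool.

```python
from collections import Counter

# Hypothetical category labels mapped to the targeted fixes quoted in the article.
FIXES = {
    "reasoning": "Think step-by-step and explain your logic before answering.",
    "format": "Respond in JSON with keys: title, summary, sources.",
    "missing_info": "Only answer if you're 90% sure. Otherwise, say 'I don't know.'",
}


def top_causes(labels, n=2):
    """Return the n most frequent error labels, skipping rows with no error (None or empty)."""
    counts = Counter(label for label in labels if label)
    return [label for label, _ in counts.most_common(n)]


def patch_prompt(prompt, causes):
    """Append the targeted fix for each cause that has one; unknown causes are ignored."""
    extra = [FIXES[cause] for cause in causes if cause in FIXES]
    return "\n".join([prompt, *extra])


labels = ["format", "reasoning", None, "format", "missing_info", "reasoning", "format"]
causes = top_causes(labels)
print(causes)  # ['format', 'reasoning']
print(patch_prompt("Summarize this support ticket.", causes))
```

Appending instructions keeps the original prompt intact, so you can compare the old and patched versions side by side on the same fresh examples.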

What’s the difference between prompt tweaking and error analysis?

Prompt tweaking is guessing. You change one word, test it, and hope it works. Error analysis is diagnosis. You collect data, classify failures, and fix root causes. One is random. The other is systematic. Teams using error analysis reduce errors 3.2x more than those who just tweak prompts.

Do I need expensive tools to do error analysis?

No. You can start with a spreadsheet. List your prompts, outputs, and error types. Use free tools like LangChain for automation later. The key isn’t the tool; it’s the process. Track what fails, why, and how you fixed it. That’s the core of error analysis.

How long does error analysis take to implement?

You can run your first analysis in 2-3 days. Build a dataset of 20-30 real prompts, test them, classify the errors, and tweak your prompt. Full enterprise setup takes 15-20 hours, but you don’t need that to see results. Most teams see improvement within a week.

Can error analysis eliminate all AI mistakes?

No. AI will still hallucinate, misinterpret tone, or miss cultural context. Error analysis reduces avoidable errors-those caused by vague prompts, missing instructions, or poor structure. It won’t fix the model’s fundamental limits. But it can cut your biggest risks by 50% or more.

Why do some teams say error analysis doesn’t work for them?

They skip the hard parts. They use fake prompts. They don’t test on holdout data. They don’t classify errors-just say "it’s bad." Or they try to apply it to creative tasks where subjectivity rules. Error analysis works when you’re solving concrete problems: accuracy, compliance, structure. Not when you’re writing poems.

Rob D

9 December, 2025 - 08:33 AM

Let me break this down for you folks who think AI is the problem - it’s not. It’s you. You type ‘write a summary’ like it’s a magic spell and then act shocked when it spits out nonsense. I’ve seen engineers waste weeks upgrading models when all they needed was to add ‘cite only peer-reviewed sources from the last 5 years’ to their prompt. It’s not rocket science. It’s basic fucking literacy. The model isn’t dumb - you’re just lazy.

Franklin Hooper

9 December, 2025 - 10:28 AM

One should note that the term ‘hallucination’ is misleading. It implies agency or intentionality, which the model lacks. A more accurate descriptor would be ‘statistical fabrication’ or ‘probabilistic misalignment.’ The paper’s framing is emotionally charged and lacks technical precision. Also, ‘error analysis’ is redundant - all analysis is error-driven by definition. The real insight is prompt discipline, not some novel methodology.

Jess Ciro

10 December, 2025 - 11:59 AM

They’re lying. You think this is about prompts? Nah. Big Tech is hiding the fact that these models are trained on poisoned data from government contractors. That’s why they hallucinate about ‘non-existent laws’ - because those laws were scrubbed from the training set. And now they want you to pay for ‘Galileo.ai’ to fix what they broke. Wake up. This isn’t engineering - it’s control.

saravana kumar

10 December, 2025 - 2:32 PM

This is exactly what I’ve been saying for months. People in the West act like AI is some divine oracle. No. It’s a glorified autocomplete. You give it a vague prompt, it fills gaps with statistically probable nonsense. I’ve seen interns in Bangalore generate 90% accurate responses just by using structured templates - no fancy tools. Just clarity. The real issue is cultural arrogance. Everyone thinks their way is best.

Tamil selvan

12 December, 2025 - 10:06 AM

I would like to extend my heartfelt appreciation for this comprehensive and meticulously structured guide. The five-step process outlined here is not only logically sound but also deeply practical for teams operating under resource constraints. I have personally implemented the classification of errors using a simple spreadsheet, and the reduction in hallucination rates was immediately noticeable - from 29% to 13% within a single week. I encourage all practitioners to begin with a dataset of just twenty prompts, as suggested. The journey toward precision begins with humility and structure.

Mark Brantner

13 December, 2025 - 2:04 PM

Wait wait wait - so you’re telling me I don’t need to pay $20k for a GPT-5 upgrade? I just need to add ‘think step by step’ to my prompt? I feel like I’ve been scammed my whole life. My boss is gonna cry. I just spent three months training an AI to write haikus about tax law. Turns out it just needed commas and a deadline. I’m gonna cry too. But happy tears. Maybe. Probably.

Kate Tran

14 December, 2025 - 9:18 PM

Honestly, I’ve been doing this for a year with just Notion and a checklist. No tools. No team. Just writing down what went wrong after every response. The format errors? Easy. The tone issues? Harder. But you learn. And honestly, the biggest win was realizing I was asking too much in one go. Splitting prompts into two steps cut my errors in half. Just… slow down. The AI doesn’t mind waiting.

amber hopman

16 December, 2025 - 10:48 AM

I love how this breaks it down without jargon. I used to think my AI was just bad at customer replies - turns out I was asking it to ‘be helpful’ and expecting it to read my mind. After adding ‘respond in three sentences max, use plain language, and never guess’ - boom. Customer satisfaction jumped from 62% to 89%. It’s not about the AI. It’s about being a better questioner. Thanks for this.

Jim Sonntag

18 December, 2025 - 09:49 AM

Y’all are overcomplicating it. I run a small business in rural Texas. My AI helps answer FAQs about plumbing. I used to get responses like ‘you should install a Tesla coil for better water pressure.’ So I added: ‘Answer only using the manual. If unsure, say ‘I don’t know.’’ Done. No tool. No team. No drama. The point isn’t to make AI smart - it’s to make it humble. And sometimes, that’s all you need.

Deepak Sungra

19 December, 2025 - 06:00 AM

Bro. I tried this. I really did. I spent three days classifying errors. I made spreadsheets. I used LangChain. I even wrote a script to flag format issues. And then… my boss asked the AI to write a birthday card for his wife. It said: ‘Happy Birthday. You are the reason my coffee is cold.’ I cried. Not because it was wrong - because it was… true. Sometimes the AI gets it right when you stop trying to control it. Maybe the real error is thinking everything needs fixing.