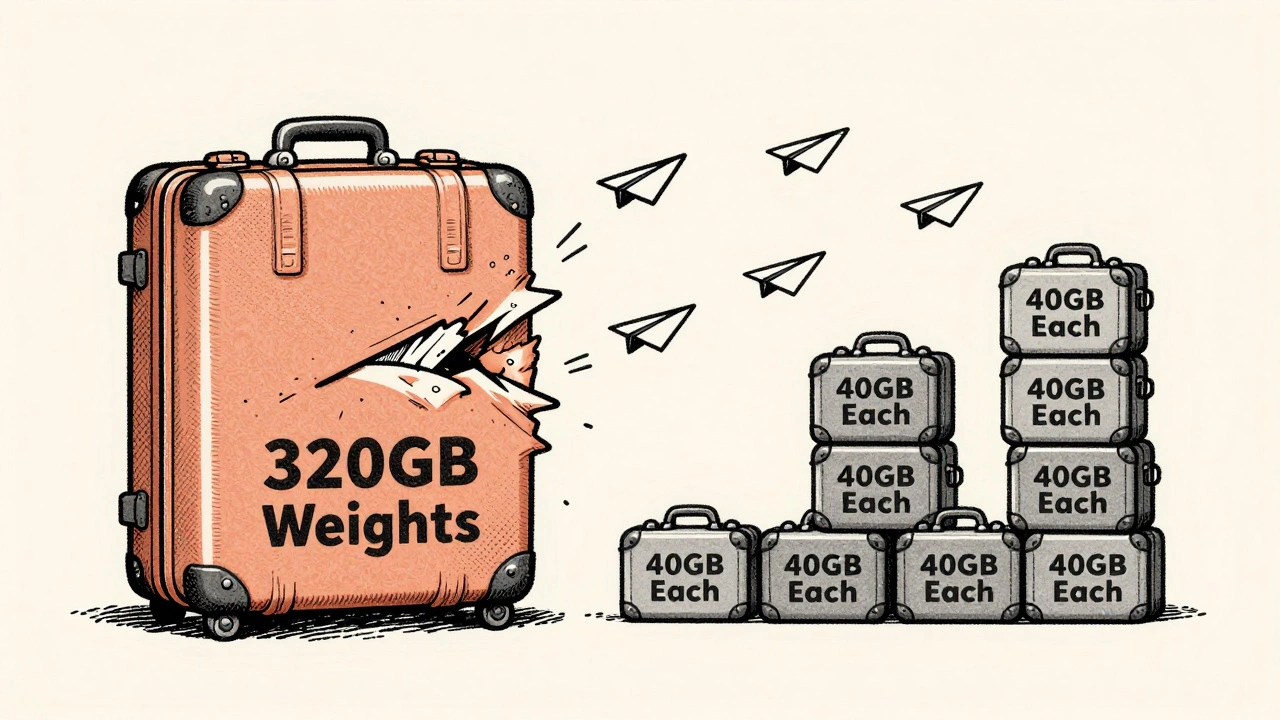

Training a generative AI model with hundreds of billions of parameters isn’t just hard; it’s impossible on a single GPU. Even a top-end data-center GPU like the NVIDIA H100 has 80GB of memory. A model like GPT-3 (175 billion parameters) needs about 350GB just to hold its weights in 16-bit precision. So how do companies train these models at all? The answer lies in breaking them apart, literally, and distributing the pieces across dozens, sometimes thousands, of GPUs. This is where model parallelism and its most practical form, pipeline parallelism, come in.

Why Single GPUs Can’t Handle Big Models

Let’s say you want to train a model with 175 billion parameters. Each parameter is stored as a 16-bit floating-point number, which takes 2 bytes. Multiply that out, and you need 350GB just to store the weights. Add in gradients, optimizer states, and activations, and you’re looking at well over 1TB of memory; mixed-precision training with the Adam optimizer alone needs about 16 bytes per parameter, or roughly 2.8TB, before activations. No consumer or even enterprise GPU comes close. Even the NVIDIA H100, with its 80GB of HBM3 memory, can’t handle it alone. Data parallelism, the most common training method, won’t solve this. It works by copying the full model onto each GPU and splitting up the training data. But if each GPU needs a full copy of the model, the model has to fit on one GPU in the first place, and 350GB of weights is more than four times what an H100 holds. Just splitting the weights would take at least five H100s, and data parallelism never splits them; adding more GPUs only adds more full copies. So engineers had to think differently: instead of copying the whole model, split the model itself.

What Is Model Parallelism?

Model parallelism means dividing the neural network across multiple devices. Instead of each GPU holding the entire model, each one holds only a portion. This could mean splitting the layers (pipeline parallelism), splitting the weights within a layer (tensor parallelism), or both. The biggest win? Memory. If you split a 320GB model across eight GPUs, each only needs to store about 40GB of weights, before counting gradients, optimizer states, activations, and communication buffers. That’s the core advantage: you can train models that physically don’t fit on one device. But splitting the model isn’t free. You now have to move data between GPUs during training. Every time one piece finishes computing, it sends its output to the next. That’s where communication becomes the bottleneck. And if you’re not careful, most of your GPUs sit idle waiting for data, wasting precious compute time.

Pipeline Parallelism: The Assembly Line Approach

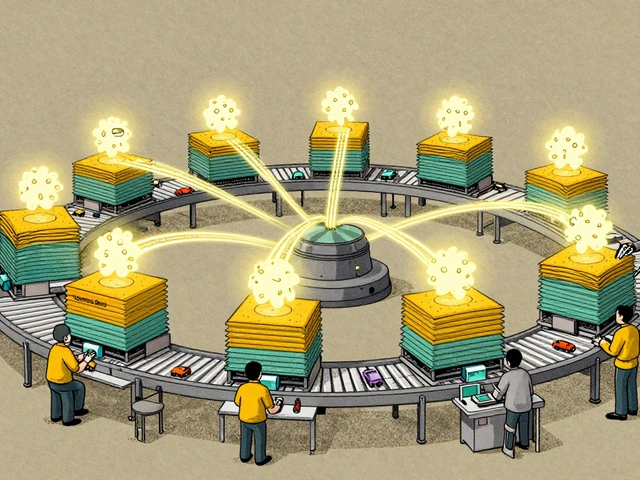

Pipeline parallelism is a smart way to implement model parallelism. Think of it like a car assembly line. Each station does one job: attach wheels, install the engine, paint the body. While one car moves from station to station, the next one starts at the first station. That way, every station is busy all the time. In AI training, each “station” is a group of layers in the neural network. One GPU handles layers 1-10, another handles 11-20, and so on. During the forward pass, activations flow from GPU 1 to GPU 2 to GPU 3, and so on. During backpropagation, gradients flow backward the same way. This was first made practical by Google’s GPipe paper in 2019. Before GPipe, pipeline parallelism had terrible efficiency. With 8 GPUs and one batch in the pipeline, only one stage works at a time, so each GPU spends 7/8 of its time waiting for the others. That’s just 12.5% utilization. GPipe introduced micro-batching: instead of sending one large batch through the pipeline at a time, you split it into smaller micro-batches and feed them in one after another. While micro-batch 1 is in stage 3, micro-batch 2 is already in stage 2 and micro-batch 3 in stage 1. With enough micro-batches, this reduces idle time from 87% down to under 10%. Modern systems like NVIDIA’s Megatron-LM and ColossalAI now achieve over 90% GPU utilization with clever scheduling. The trick is overlapping computation with communication and filling gaps with useful work: while a stage waits for the next micro-batch’s activations to arrive, it can run the backward pass for a micro-batch it has already processed. Alternating work this way is called interleaved scheduling.
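A back-of-the-envelope model makes the micro-batching figures concrete. The sketch below assumes every stage takes the same time per micro-batch and that communication is free, which real pipelines never quite achieve. Under that idealization, a GPipe-style pipeline with p stages and m micro-batches sits idle for a fraction (p - 1) / (m + p - 1) of each pass. The function names are illustrative, not from any library.

```python
def bubble_fraction(num_stages: int, num_microbatches: int) -> float:
    """Fraction of time a stage sits idle in a GPipe-style fill-drain pipeline.

    Idealized model (an assumption): every stage takes the same time per
    micro-batch and communication is free. One pass over m micro-batches
    then takes m + p - 1 time steps, and each stage is busy for only m.
    """
    p, m = num_stages, num_microbatches
    if p < 1 or m < 1:
        raise ValueError("need at least one stage and one micro-batch")
    return (p - 1) / (m + p - 1)


def microbatches_for_idle_below(num_stages: int, target: float) -> int:
    """Smallest micro-batch count that pushes idle time strictly below target."""
    if not 0 < target <= 1:
        raise ValueError("target must be in (0, 1]")
    m = 1
    while bubble_fraction(num_stages, m) >= target:
        m += 1
    return m


if __name__ == "__main__":
    for m in (1, 8, 32, 64):
        print(f"8 stages, {m:>2} micro-batches: {bubble_fraction(8, m):.1%} idle")
    print("micro-batches needed for <10% idle:", microbatches_for_idle_below(8, 0.10))
```

With a single micro-batch the formula reproduces the 7/8 idle figure. Under these idealized assumptions an 8-stage pipeline needs at least 64 micro-batches to get below 10% idle; uneven stages and communication add idle time on top of that.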

Grouped vs. Interleaved Scheduling

There are two main ways to manage pipeline flow: grouped and interleaved. In grouped scheduling (the fill-drain order GPipe uses), all forward passes complete before any backward passes begin. The schedule is simple, but it requires more memory, because each stage has to store the activations of every micro-batch until the whole batch has finished its forward pass and the backward passes can start. In interleaved scheduling, forward and backward passes alternate once the pipeline is full, an order often called 1F1B (one forward, one backward). Each stage only holds activations for a few micro-batches at a time, so it uses much less memory. It also creates more complex timing, since backward passes can block forward passes if not managed carefully. Megatron-LM’s interleaved variant goes further by giving each GPU several smaller chunks of layers, which shrinks idle time again at the cost of more communication. Most production systems today use a hybrid: grouped for stability, interleaved for efficiency. The choice depends on your memory budget and how much pipeline idle time you can tolerate.

Hybrid Parallelism: The Real-World Standard

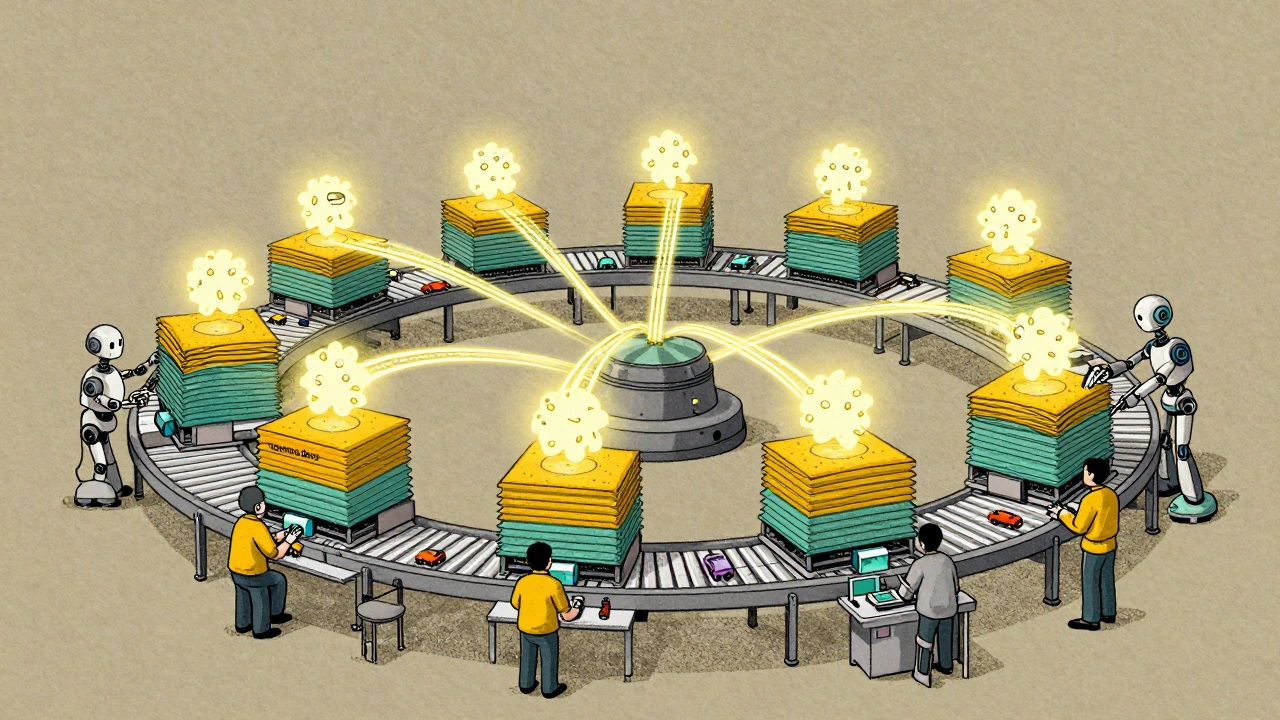

Few large training runs use pipeline parallelism alone anymore. The most successful training setups combine it with other methods. For example, NVIDIA and Microsoft’s Megatron-Turing NLG (530 billion parameters) was trained on thousands of A100 GPUs by stacking three kinds of parallelism: eight-way tensor parallelism to split layers internally within each server, pipeline parallelism to split the model into stages across servers, and data parallelism to replicate each pipeline group. A smaller AWS SageMaker setup might use two-way pipeline parallelism with four-way data parallelism across eight GPUs. This gives you both memory efficiency and high throughput. Why combine them? Because each method fixes a different problem:

- Data parallelism improves throughput but needs full model copies.

- Tensor parallelism splits weights within a layer (like attention matrices) but requires heavy communication between GPUs.

- Pipeline parallelism splits layers across devices with moderate communication and massive memory savings.
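The three degrees have to multiply out to the total number of GPUs, and every GPU ends up with exactly one position along each axis. `ParallelLayout` and `Coords` below are hypothetical names, not any framework’s API, and the rank ordering (tensor-parallel ranks adjacent, so they can share one server’s fast links) is an assumption for illustration; real frameworks pick their own ordering.

```python
from typing import NamedTuple


class Coords(NamedTuple):
    data: int      # which model replica this GPU belongs to
    pipeline: int  # which pipeline stage it runs (0 = first stage)
    tensor: int    # which slice of each layer's weights it holds


class ParallelLayout(NamedTuple):
    # Hypothetical helper for illustration; not a framework API.
    tensor: int    # tensor-parallel degree (GPUs sharing one layer)
    pipeline: int  # pipeline-parallel degree (number of stages)
    data: int      # data-parallel degree (number of model replicas)

    @property
    def world_size(self) -> int:
        # Every GPU has exactly one (data, pipeline, tensor) position.
        return self.tensor * self.pipeline * self.data

    def coords(self, rank: int) -> Coords:
        """Map a global GPU rank to its position in the 3D layout.

        Assumed ordering: the tensor index varies fastest, so each
        tensor-parallel group is a run of consecutive ranks; then the
        data index; the pipeline stage varies slowest.
        """
        if not 0 <= rank < self.world_size:
            raise ValueError(f"rank {rank} is outside 0..{self.world_size - 1}")
        return Coords(
            data=(rank // self.tensor) % self.data,
            pipeline=rank // (self.tensor * self.data),
            tensor=rank % self.tensor,
        )


if __name__ == "__main__":
    layout = ParallelLayout(tensor=1, pipeline=2, data=4)  # 2-way PP x 4-way DP
    print("GPUs:", layout.world_size)
    for rank in range(layout.world_size):
        print(rank, layout.coords(rank))
```

For the eight-GPU case with two-way pipeline and four-way data parallelism, ranks 0-3 run the first stage and ranks 4-7 the second, and each replica pairs rank d with rank d + 4. Keeping tensor-parallel ranks adjacent reflects the usual practice of confining tensor parallelism’s heavy traffic to a single server.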

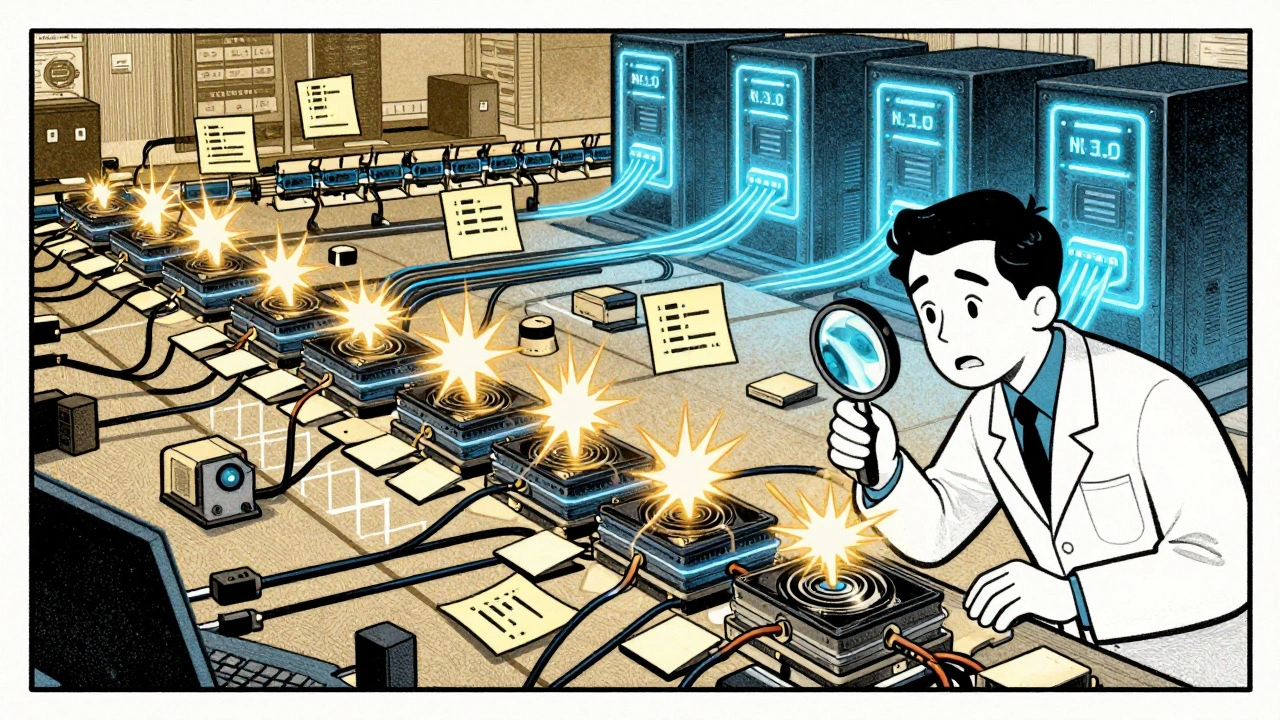

Real-World Challenges

Pipeline parallelism sounds great on paper, but in practice it’s messy. One big issue is load imbalance. If one stage has a heavy layer (like a 128-head attention block), that GPU becomes a bottleneck, and the others sit idle waiting for it. Engineers fix this by grouping layers carefully: putting heavy layers on their own GPU or splitting them across multiple devices. Another problem is activation memory. Each intermediate output from a layer has to be stored until the backward pass. For deep models, that’s gigabytes of memory per micro-batch. The solution? Activation checkpointing: you store only some activations and recompute the rest during backprop instead of keeping them all. This can cut activation memory by 60-70% but adds 10-15% to training time. Then there’s debugging. If a model crashes after 12 hours of training, where did it go wrong? Was it a communication error? A gradient explosion in stage 5? A memory leak in stage 12? Tracking down bugs in a 64-stage pipeline can take 3x longer than in data-parallel setups. Many teams now use logging tools that trace data flow across stages to make this manageable.
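Grouping layers carefully is a small optimization problem. As a hedged sketch, the classic linear-partition dynamic program below assumes you have already measured a cost per layer (for example, milliseconds per forward and backward pass) and splits the layers into contiguous stages so the slowest stage is as fast as possible. `balance_stages` is a hypothetical helper, frameworks ship their own partitioners, and the costs in the example are made up.

```python
from itertools import accumulate
from typing import List


def balance_stages(layer_costs: List[float], num_stages: int) -> List[List[int]]:
    """Split layers into contiguous stages so the slowest stage is as fast as possible.

    Hypothetical helper: classic linear-partition dynamic program,
    O(num_stages * n^2) for n layers. Returns one list of layer indices
    per stage, in pipeline order.
    """
    n = len(layer_costs)
    if not 1 <= num_stages <= n:
        raise ValueError("need 1 <= num_stages <= number of layers")
    prefix = [0.0, *accumulate(layer_costs)]  # prefix[j] = cost of layers 0..j-1

    inf = float("inf")
    # best[s][j]: smallest possible max-stage cost when layers 0..j-1 form s stages
    best = [[inf] * (n + 1) for _ in range(num_stages + 1)]
    # cut[s][j]: index where the last of those s stages starts in that optimum
    cut = [[0] * (n + 1) for _ in range(num_stages + 1)]
    best[0][0] = 0.0
    for s in range(1, num_stages + 1):
        for j in range(s, n + 1):
            for i in range(s - 1, j):  # last stage = layers i..j-1 (non-empty)
                candidate = max(best[s - 1][i], prefix[j] - prefix[i])
                if candidate < best[s][j]:
                    best[s][j], cut[s][j] = candidate, i

    # Walk the cut points backwards to recover the stage boundaries.
    stages: List[List[int]] = []
    j = n
    for s in range(num_stages, 0, -1):
        i = cut[s][j]
        stages.append(list(range(i, j)))
        j = i
    return stages[::-1]


if __name__ == "__main__":
    # Made-up per-layer costs: layer 4 is a heavy block, about 4x the others.
    costs = [1, 1, 1, 1, 4, 1, 1, 1]
    for k, stage in enumerate(balance_stages(costs, 4), start=1):
        print(f"stage {k}: layers {stage}, cost {sum(costs[i] for i in stage)}")
```

On these made-up costs, a naive two-layers-per-stage split pairs layers 4 and 5 for a stage cost of 5, while the balanced split keeps the slowest stage at 4 by giving the heavy layer a stage of its own. It only moves whole layers; splitting one oversized layer across devices is a job for tensor parallelism.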

Who’s Using It, and How Much?

According to Gartner’s 2023 AI Infrastructure Report, 78% of companies training models over 10 billion parameters use pipeline parallelism. For models over 50 billion, that number jumps to 92%. Anthropic trained Claude 2 on 256 A100 GPUs using optimized pipeline scheduling and hit 92% scaling efficiency. DeepMind trained a 70B-parameter model using pipeline parallelism because their existing cluster couldn’t fit the full model on any single node. Meta’s Llama 3 used a hybrid approach with pipeline and tensor parallelism across thousands of GPUs. Even smaller teams are adopting it. Startups training 10B-20B parameter models now use cloud-managed tools like AWS SageMaker or Google Vertex AI, which handle pipeline setup automatically. You don’t need to write custom CUDA code anymore; you just specify how many stages you want.

What’s Next?

The next wave of improvements is focused on making pipeline parallelism smarter and less painful. NVIDIA’s Megatron-Core (2023) introduced dynamic reconfiguration. You can pause training, change the number of pipeline stages, and resume without restarting from scratch. This is huge for long-running jobs that might need to adapt to hardware failures or new data. The “zero-bubble” pipeline schedule (Sea AI Lab, 2023) goes after idle time directly: it splits each backward pass into an input-gradient step and a weight-gradient step, then uses the deferrable weight-gradient work to fill the slots where GPUs would otherwise wait, bringing pipeline bubbles close to zero. Microsoft’s research on asynchronous pipeline updates (NeurIPS 2023) showed 95% scaling efficiency across 1,024 GPUs. That’s close to theoretical limits. The future? Pipeline parallelism will be standard for any model over 20 billion parameters. By 2026, Gartner predicts communication overhead will drop by 40-60% thanks to better scheduling and faster interconnects like NVIDIA’s NVLink 5.0. But here’s the real takeaway: the bottleneck isn’t algorithms anymore. It’s infrastructure. The best transformer architecture in the world won’t train if you can’t fit it on hardware. Pipeline parallelism isn’t just a technical trick; it’s one of the main reasons we’re seeing AI models scale so fast.

Should You Use It?

If you’re training a model under 10 billion parameters? Probably not. Data parallelism is simpler, faster, and more stable. If you’re training a model over 50 billion? You have little choice: you’ll need to split the model across GPUs, and pipeline parallelism is usually part of how that’s done. For teams in between (10B to 50B), start with cloud tools. Use AWS SageMaker or Hugging Face’s Accelerate to handle the complexity. Don’t try to build your own pipeline from scratch unless you have a team of distributed systems engineers. The learning curve is steep. ColossalAI estimates it takes 2-3 weeks to implement pipeline parallelism correctly. Mistakes can cost days of training time. But if you’re serious about building the next generation of generative AI, you’ll need to master it.

What’s the difference between model parallelism and pipeline parallelism?

Model parallelism is the broad category of splitting a neural network across multiple devices. Pipeline parallelism is a specific type of model parallelism where the model is divided into sequential stages: each stage runs on a different device, and data flows from one to the next like an assembly line. Think of model parallelism as the umbrella term, and pipeline parallelism as the most widely used method under it.

Why can’t we just use more GPUs with data parallelism?

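Data parallelism requires each GPU to hold a full copy of the model. A 320GB model is four times the 80GB on an H100, so it can’t fit on any single GPU, and adding more GPUs doesn’t change that, because every one of them still needs the whole copy. Just splitting the weights would take at least four H100s, and in practice five once you leave headroom, before counting gradients or optimizer states. On top of that, synchronizing gradients across GPUs becomes a communication bottleneck as the cluster grows. Pipeline parallelism avoids the memory problem by splitting the model itself, so each GPU only holds a fraction of it.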
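The memory arithmetic in this answer, and in the 175-billion-parameter example earlier, fits in a few lines. This sketch assumes 2 bytes per parameter for fp16/bf16 weights and the common rule of thumb of about 16 bytes per parameter for mixed-precision Adam training. It ignores activations and communication buffers, so real requirements are higher. The helper names are illustrative only.

```python
import math

GB = 1e9  # decimal gigabytes, the unit GPU memory is usually quoted in


def weights_gb(num_params: float, bytes_per_param: float = 2) -> float:
    """Memory for model state at a given number of bytes per parameter.

    2 bytes/param covers fp16/bf16 weights alone. About 16 bytes/param is a
    common rule of thumb for mixed-precision Adam training: fp16 weights and
    gradients (2 + 2) plus fp32 master weights, momentum and variance (4 + 4 + 4).
    Activations and communication buffers are not included.
    """
    return num_params * bytes_per_param / GB


def fits_data_parallel(model_gb: float, gpu_gb: float = 80.0) -> bool:
    """Plain data parallelism needs a full copy of the model on every GPU."""
    return model_gb <= gpu_gb


def min_gpus_if_split(model_gb: float, gpu_gb: float = 80.0) -> int:
    """Fewest GPUs that could hold the model if it is split across them
    (what model parallelism allows), ignoring every other overhead."""
    return math.ceil(model_gb / gpu_gb)


if __name__ == "__main__":
    params = 175e9  # GPT-3 scale
    fp16 = weights_gb(params)
    adam = weights_gb(params, bytes_per_param=16)
    print(f"fp16 weights: {fp16:.0f} GB, fits one 80GB GPU: {fits_data_parallel(fp16)}")
    print(f"training state (mixed-precision Adam): {adam:.0f} GB")
    print(f"GPUs needed just for split fp16 weights: {min_gpus_if_split(fp16)}")
    print(f"per-GPU share of a 320GB model over 8 GPUs: {320 / 8:.0f} GB")
```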

How does micro-batching improve pipeline efficiency?

Without micro-batching, only one stage of an 8-stage pipeline works at a time, so each GPU sits idle about 87% (7/8) of the time, waiting for the other stages to finish. Micro-batching splits each batch into smaller micro-batches and feeds them into the pipeline one after another. While micro-batch 1 is in stage 3, micro-batch 2 is in stage 2, and micro-batch 3 is in stage 1. This keeps all stages busy and, with enough micro-batches, reduces idle time from 87% to under 10%.
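To see the micro-batches move through the stages, this sketch prints an idealized forward-pass timeline in which every stage takes one time step per micro-batch. That equal-timing assumption, and the helper names `forward_timeline` and `render`, are illustrative rather than taken from any framework.

```python
from typing import List, Optional


def forward_timeline(num_stages: int, num_microbatches: int) -> List[List[Optional[int]]]:
    """Which micro-batch (1-based) each stage works on at each forward step.

    Idealized fill-drain forward pass (an assumption): micro-batch k enters
    stage 1 at step k and advances one stage per step. None marks an idle stage.
    """
    steps = num_microbatches + num_stages - 1
    grid: List[List[Optional[int]]] = []
    for t in range(steps):
        row: List[Optional[int]] = []
        for s in range(num_stages):
            k = t - s  # 0-based micro-batch that reaches stage s at step t
            row.append(k + 1 if 0 <= k < num_microbatches else None)
        grid.append(row)
    return grid


def render(grid: List[List[Optional[int]]]) -> str:
    """One line per time step, one column per stage; '.' marks an idle stage."""
    lines = []
    for t, row in enumerate(grid, start=1):
        cells = " ".join(f"{mb:>2}" if mb is not None else " ." for mb in row)
        lines.append(f"step {t:>2} | {cells}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render(forward_timeline(num_stages=4, num_microbatches=6)))
```

At step 3 of an eight-stage pipeline, micro-batch 3 is in stage 1, micro-batch 2 in stage 2, and micro-batch 1 in stage 3. The triangles of idle `.` cells at the start and end of the table are the pipeline bubble that more micro-batches dilute.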

What’s the biggest problem with pipeline parallelism?

The biggest problem is complexity. Debugging is harder because errors can occur anywhere in the pipeline. Load imbalance, where one GPU is slower than the others, can bottleneck the entire system. Memory management for activations is tricky, and communication overhead can eat into gains if not optimized. These issues require deep expertise in distributed systems and CUDA programming.
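Part of why activation memory is tricky is the schedule itself. As a simplified sketch that tracks only the order of work on one stage (not timing or communication), the code below counts how many micro-batches’ activations each stage must hold at once under the grouped, GPipe-style order and under the alternating order commonly known as 1F1B (one forward, one backward). The warm-up rule follows the usual non-interleaved 1F1B pattern; treat it as illustrative, not a copy of any framework’s scheduler.

```python
from typing import List


def stage_ops(schedule: str, stage: int, num_stages: int, num_microbatches: int) -> List[str]:
    """Order of forward ("F") and backward ("B") work on one pipeline stage.

    stage is 0-based (0 = first stage). Illustrative orderings:
    "grouped": all forwards, then all backwards (GPipe-style fill-drain).
    "1f1b":    a warm-up of forwards that shrinks toward the last stage, then
               alternate one forward with one backward, then drain the backwards.
    """
    if not 0 <= stage < num_stages:
        raise ValueError("stage must be in 0..num_stages-1")
    m = num_microbatches
    if schedule == "grouped":
        return ["F"] * m + ["B"] * m
    if schedule == "1f1b":
        warmup = min(num_stages - stage - 1, m)
        ops = ["F"] * warmup
        for _ in range(m - warmup):
            ops += ["F", "B"]  # steady state: one forward, one backward
        return ops + ["B"] * warmup  # cool-down: finish the warm-up micro-batches
    raise ValueError(f"unknown schedule: {schedule!r}")


def peak_stored_activations(ops: List[str]) -> int:
    """Most micro-batches whose activations this stage holds at the same time."""
    live = peak = 0
    for op in ops:
        live += 1 if op == "F" else -1  # a forward stores activations, a backward frees them
        peak = max(peak, live)
    return peak


if __name__ == "__main__":
    p, m = 8, 32
    for name in ("grouped", "1f1b"):
        peaks = [peak_stored_activations(stage_ops(name, s, p, m)) for s in range(p)]
        print(f"{name:>7}: peak micro-batches held per stage = {peaks}")
```

With 8 stages and 32 micro-batches, the grouped order holds all 32 micro-batches’ activations on every stage, while 1F1B peaks at 8 on the first stage and just 1 on the last, which is why alternating schedules need so much less activation memory.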

Do I need to write custom code to use pipeline parallelism?

Not anymore. Tools like NVIDIA’s Megatron-LM, ColossalAI, AWS SageMaker, and Hugging Face Accelerate handle pipeline setup automatically. You just specify the number of pipeline stages and the number of GPUs. Custom code is only needed if you’re building a new architecture or optimizing for a very specific use case. For most teams, using managed tools is the smarter choice.

Is pipeline parallelism the future of AI training?

Yes, for models over 20 billion parameters. As models keep growing faster than GPU memory, pipeline parallelism will become the default. Hybrid approaches combining it with data and tensor parallelism are already standard at top labs. The only question is how much easier it will become to use. Tools are improving fast, and by 2026, it’ll be as routine as using GPUs today.

Megan Ellaby

14 December, 2025 - 06:05 AM

okay but like… i just trained a 12b model on a single A100 and it was fine?? why are we acting like pipeline parallelism is the only way? i feel like this post is making it sound like you need a NASA budget to train anything these days. also micro-batching? that’s just batching with extra steps lol

Rahul U.

14 December, 2025 - 06:27 AM

Really well explained! 🙌 Especially the assembly line analogy - made pipeline parallelism click for me. I’ve been using Hugging Face Accelerate for my 20B experiments and honestly, it’s been a game-changer. No CUDA headaches. Just set stages=16 and go. The real win is how much faster debugging is now compared to 2 years ago.

Frank Piccolo

15 December, 2025 - 11:04 AM

Wow. Another overwrought tech blog pretending like this is some revolutionary breakthrough. We’ve had model parallelism since the 90s. The fact that startups now think they need to ‘use SageMaker’ instead of learning how the damn thing works is why American AI is becoming a cartoon. You don’t get to skip the fundamentals and still claim you’re building the future. Also, ‘zero-bubble’? That’s not innovation, that’s marketing jargon wrapped in a PyTorch wrapper.

David Smith

17 December, 2025 - 02:28 AM

People act like this is some heroic engineering feat. Let me tell you something - we’re not training AI to cure cancer. We’re training it to generate cat memes in 17 languages. And we’re burning through enough electricity to power a small country doing it. This isn’t progress - it’s excess. Someone’s gotta say it: we’ve lost the plot. If your model needs 3,072 GPUs to write a paragraph, maybe the problem isn’t the hardware. Maybe it’s the idea.

Lissa Veldhuis

17 December, 2025 - 2:23 PM

ok but like… i’ve been in this space since 2020 and honestly the whole pipeline thing is just a glorified band-aid on a broken system. why are we even trying to shove trillion-parameter models into silicon that was designed for gaming? we’re not building rockets, we’re building chatbots that hallucinate entire court cases. and now we’ve got people bragging about 92% utilization like it’s a trophy? bro. the real innovation is just… not doing this at all. stop training. start pruning. start distilling. start being smart instead of throwing GPUs at the problem like it’s a magic wand.

Michael Jones

19 December, 2025 - 01:18 AM

Think about it - this isn’t just about memory or GPUs. It’s about how we think. We used to build things piece by piece. Now we build monoliths and then break them apart to make them fit. That’s not engineering. That’s desperation. The real question isn’t how to split the model - it’s why we’re building models so big in the first place. Maybe the answer isn’t more compute. Maybe it’s less ego.

allison berroteran

20 December, 2025 - 1:51 PM

I really appreciate how this post breaks down the technical layers without drowning in jargon - it’s rare to find something that’s both accurate and accessible. I’ve been working with smaller teams trying to adopt these techniques, and honestly, the biggest hurdle isn’t the code, it’s the mindset. So many engineers still think ‘more GPUs = better’ without understanding the trade-offs. The part about activation checkpointing saving 60-70% memory? That’s the kind of insight that changes how you design your entire pipeline. And the fact that tools like ColossalAI are making this approachable? That’s the quiet revolution. We’re not just scaling models - we’re scaling understanding. And that’s what matters most.