Why Enterprise Data Governance for LLMs Isn’t Optional Anymore

If you’re deploying a large language model (LLM) in your company without a clear data governance plan, you’re already at risk. Not because the model is flawed-but because the data it learned from might not be legal, ethical, or even safe. In 2025, regulators in the EU, U.S., and beyond are cracking down on AI systems that use unvetted, untracked, or sensitive data. Companies have already faced fines, public backlash, and internal system failures because their LLMs spit out confidential customer info, biased hiring recommendations, or fabricated legal citations. The problem isn’t the AI. It’s the data behind it.

Traditional data governance was built for structured databases-tables with clear columns, defined owners, and predictable updates. LLMs don’t work that way. They’re trained on petabytes of unstructured text: emails, chat logs, PDFs, internal wikis, customer support transcripts. That data isn’t neatly labeled. It doesn’t come with metadata tags. And often, no one knows where it came from-or who owns it.

What Makes LLM Data Governance Different

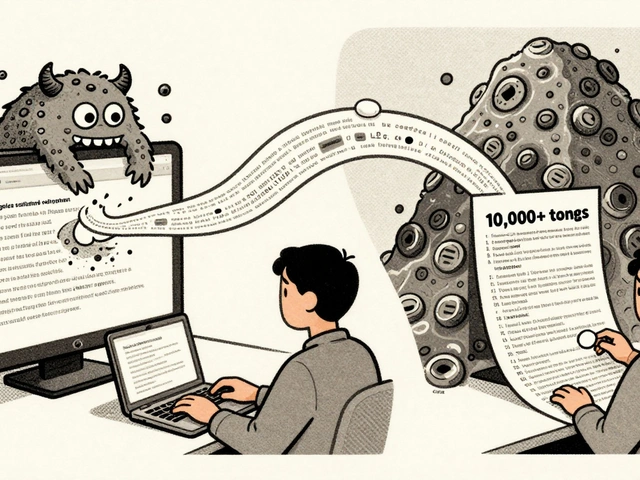

Most data governance tools today can’t handle what LLMs need. You can’t just apply your old data cataloging system to a model trained on 10 million Slack messages. LLMs don’t just store data-they transform it. They generate probabilistic outputs. Two identical prompts can yield wildly different answers. That makes auditing hard. How do you trace a hallucinated fact back to its source if the model didn’t copy it directly?

Here’s what changes:

- Data lineage becomes complex: Instead of tracing a sales number from a CRM to a dashboard, you’re tracing how a model learned to say "Company X filed for bankruptcy" from a single paragraph in a 2019 investor report buried in a SharePoint folder.

- Ownership is blurry: Who owns the data used to train your LLM? The legal team? The data engineering group? The marketing team that uploaded customer emails? Without clear ownership, no one takes responsibility when things go wrong.

- Consent and privacy are harder to enforce: Did you get permission to use that employee’s internal memo? That customer’s support chat? That public forum post from 2017? GDPR and CCPA don’t care if the data was "public." If it’s personal, you need a legal basis to use it.

LLM governance isn’t about locking down data. It’s about understanding how data flows into the model, how it’s used, and what comes out.

Core Principles of LLM Data Governance

Successful enterprises don’t just slap on compliance policies. They build governance into their DNA. Three principles keep them on track:

- Transparency: You must know what data trained your model. Not just the file names-but the context, the source, the sensitivity level. If someone asks, "Where did this answer come from?" you should be able to show them the exact dataset and version.

- Accountability: Someone must be named as the owner of each training dataset. That person is responsible for ensuring it meets privacy standards, is properly anonymized, and is regularly reviewed.

- Continuous monitoring: LLMs don’t stay perfect. They drift. A model trained on 2023 data might start generating outdated financial advice by 2025. You need automated alerts for output anomalies, bias spikes, or new regulatory changes.

These aren’t nice-to-haves. They’re the foundation of every compliant LLM deployment.

Tools That Make LLM Governance Work

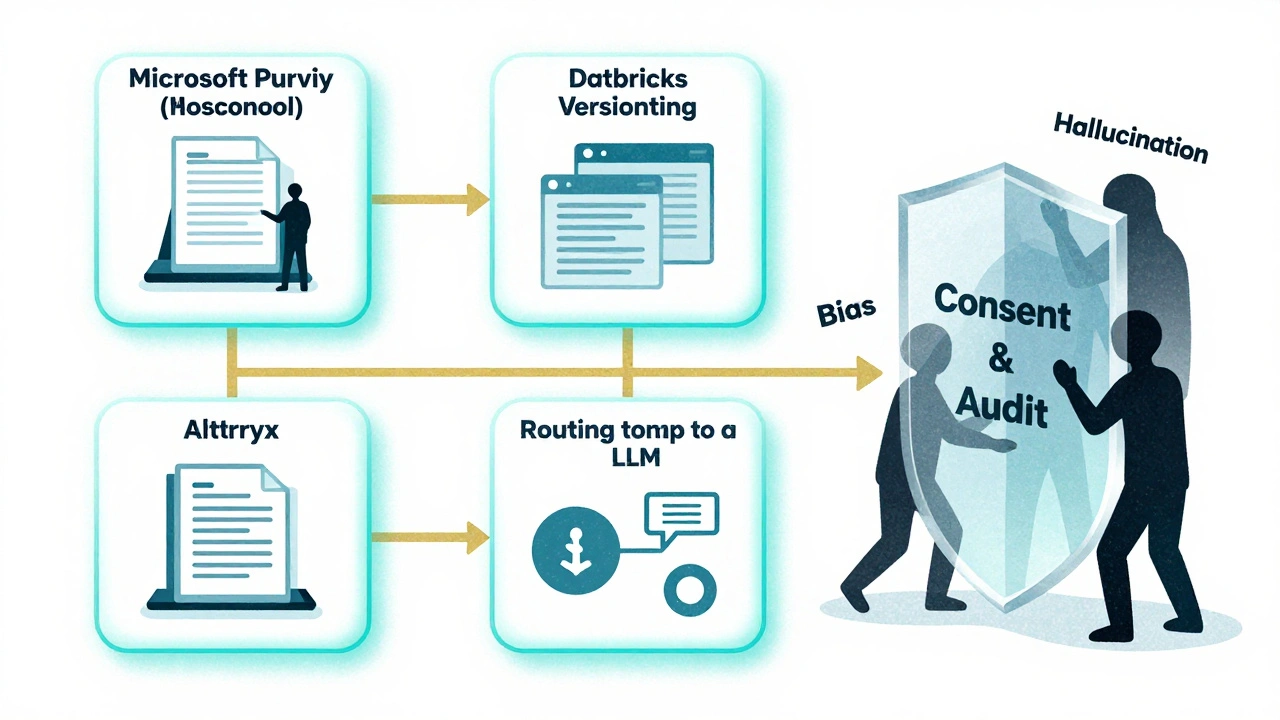

You can’t do this manually. You need tools that talk to each other. The best enterprises use a stack that connects data cataloging, metadata management, and AI monitoring.

- Microsoft Purview: Tracks data lineage across cloud and on-prem systems. It can scan unstructured files, tag sensitive content (like PII or PHI), and show you exactly which documents fed into your LLM training pipeline.

- Databricks: Lets you build governed data pipelines where training datasets are versioned, tested, and approved before use. It integrates with Purview to auto-tag data quality issues.

- ER/Studio: Maps data relationships visually. If your LLM starts generating incorrect product names, ER/Studio helps you trace whether the error came from a mislabeled training file or a corrupted schema.

- Alteryx: Connects governed data sources directly to LLM APIs. It ensures your model only pulls from approved datasets and logs every request for audit purposes.

These tools don’t work in isolation. The magic happens when they’re integrated. For example: Purview detects a new file containing customer emails. It flags it as sensitive. Databricks blocks it from entering the training pipeline unless the legal team approves it. Alteryx logs the decision. ER/Studio updates the data map. That’s governance automation.

How to Avoid Common Pitfalls

Most LLM governance failures aren’t technical. They’re cultural.

- Assuming "anonymized" data is safe: Removing names doesn’t stop re-identification. A model trained on 10,000 support tickets might still recall your address if you mentioned "I live near the old bridge in Portland." Use differential privacy or synthetic data generation instead.

- Letting data scientists run wild: If your AI team downloads data from Slack, Google Drive, or even public GitHub repos without approval, you’re building on quicksand. Require all training data to pass through a governance gate.

- Ignoring bias detection: LLMs amplify biases in their training data. If your support tickets mostly come from male customers, your model might default to male pronouns in responses. Use tools like IBM’s AI Fairness 360 or Google’s What-If Tool to scan for disparities.

- Thinking governance is a one-time project: Regulations change. Data sources change. Models get retrained. Governance must be continuous. Set up monthly reviews: Who updated the training data? Did the output quality drop? Are new regulations affecting us?

Real-World Impact: What Happens When You Get It Right

Companies that built strong LLM governance frameworks aren’t just avoiding fines-they’re gaining advantages.

- A global bank reduced compliance incidents by 40% after implementing automated PII detection in training data. Their LLM now handles customer inquiries without ever touching real account numbers.

- A healthcare provider cut time-to-insight on patient feedback by 30%. By governing their unstructured survey data, they trained an LLM to summarize trends in real time-without violating HIPAA.

- A manufacturing firm used LLMs to analyze maintenance logs. With proper lineage tracking, they caught a pattern: a specific sensor failure always followed a certain maintenance procedure. That insight saved them $2.3M in downtime over six months.

The common thread? They didn’t wait for a breach to act. They treated data governance as a competitive edge.

The Future: Governance as a Built-In Feature

The next wave of AI tools won’t just help you build models-they’ll help you govern them automatically.

Look at the dbt Semantic Layer. It lets teams define metrics-like "customer satisfaction score"-once, and use that same definition across every report, dashboard, and AI model. No more conflicting definitions. No more data confusion. That’s governance by design.

Soon, LLM platforms will include built-in governance modules: automatic consent logging, real-time bias scoring, regulatory compliance checks. But until then, you need to build it yourself.

The companies that win with AI in 2026 won’t be the ones with the biggest models. They’ll be the ones with the cleanest, most accountable data.

Frequently Asked Questions

Do I need to get consent to use internal emails for LLM training?

Yes-if the emails contain personal information like names, addresses, or financial details. Even internal communications can be subject to GDPR, CCPA, or other privacy laws. Best practice: anonymize the data before training, or get explicit consent from employees. Some companies use synthetic data generation to replace real emails with realistic but fictional versions.

Can I use public data from the internet to train my LLM?

Technically, yes-but legally, it’s risky. Public doesn’t mean free to use. Many websites have terms of service that prohibit scraping for AI training. Copyrighted material (books, articles, code) can trigger legal claims. Even if it’s publicly available, if it contains personal data (like forum posts with real names), you still need a legal basis to use it. Stick to licensed datasets or data you own.

What’s the biggest mistake companies make with LLM governance?

Waiting until after deployment to think about governance. Many teams rush to deploy an LLM for speed or hype, then scramble to fix data issues later. That’s when breaches happen. The right approach is to design governance into the project from Day 1-before you even pick a model.

How often should I audit my LLM’s training data?

At least quarterly, but ideally with automated checks after every model update. If your training data changes monthly (e.g., new customer feedback rolls in), you should audit each new version. Look for new sensitive data, outdated sources, or signs of bias. Automated tools like Microsoft Purview can flag changes and trigger alerts.

Is LLM governance only for big companies?

No. Even small teams using LLMs for customer support or internal documentation need governance. A startup that trains a model on customer emails without consent can still be fined under GDPR. The scale of your operation doesn’t matter-the risk does. Start small: define one data source, assign one owner, and document one policy. Build from there.

Next Steps: How to Start Today

Don’t wait for a perfect plan. Start with one action:

- Identify your first LLM use case: Is it customer service? Internal knowledge search? Report generation? Pick one.

- List every data source feeding into it: Emails? Docs? Chat logs? Public websites? Write them down.

- Assign one owner for each source: Who’s responsible for making sure it’s legal and clean?

- Run a quick scan: Use a free tool like Microsoft Purview’s trial or a data profiler to check for PII in your datasets.

- Document your rules: Even a one-page policy like "No customer emails without anonymization" is better than nothing.

LLM governance isn’t about control. It’s about trust. Your employees, customers, and regulators need to know you’re using AI responsibly. Start building that trust today-with data you can stand behind.

Tom Mikota

10 December, 2025 - 02:50 AM

I’ve seen teams deploy LLMs like they’re launching a TikTok challenge-no plan, no brakes, just ‘let’s see what happens.’ And then? Boom. Legal team shows up with a subpoena and a side of ‘we told you so.’ Data governance isn’t sexy, but neither is a $5M fine. Start with one dataset. Anonymize it. Document it. Then sleep at night.

Mark Tipton

10 December, 2025 - 17:37 PM

Let’s be clear: this entire ‘LLM governance’ movement is a regulatory theater designed to stifle innovation under the guise of ‘safety.’ The EU doesn’t want AI-they want control. The U.S. is just following suit because regulatory capture is easier than innovation. And yet, here we are, pretending that tagging every Slack message with a ‘sensitivity level’ will stop a model from generating a plausible lie. The real issue? We’re applying 1990s database logic to 2025 neural networks. It’s like putting seatbelts on a rocket ship and calling it ‘safety.’

Adithya M

11 December, 2025 - 20:30 PM

Bro, you’re overcomplicating this. You don’t need ER/Studio and Purview and Alteryx all talking to each other like some sci-fi orchestra. Just lock down your training data at the source. If it’s from Slack, block it. If it’s from customer emails, anonymize or delete. Simple. No fancy tools. No meetings. Just discipline. I’ve seen startups do this with Python scripts and a shared Google Doc. They’re compliant. You’re still arguing about metadata schemas.

Jessica McGirt

12 December, 2025 - 21:45 PM

I appreciate how this article emphasizes accountability-not just as a policy, but as a cultural shift. Too often, we treat governance as someone else’s job: ‘It’s legal’s problem,’ or ‘That’s the data team’s headache.’ But if no one owns the data, no one owns the outcome. I’ve worked in teams where the AI engineer just downloaded a folder labeled ‘customer_feedback_final_v3.zip’ from a shared drive with no context. Three months later, the model was generating gender-biased responses because 90% of the feedback came from male customers. It wasn’t a technical flaw. It was a leadership failure. Assign owners. Now.

Donald Sullivan

13 December, 2025 - 04:20 AM

Yeah, sure. ‘Governance as a competitive edge.’ Tell that to the dev who’s got a deadline in 48 hours and a manager screaming for ‘the AI chatbot.’ You want governance? Fine. But don’t make me fill out a 12-page form just to train on internal docs that already have ‘CONFIDENTIAL’ stamped on them. This isn’t banking. It’s a startup. We don’t have a compliance officer named Karen. We have a guy named Dave who runs Python scripts at 2 a.m. while eating cold pizza. Stop treating us like we’re Goldman Sachs.

Tina van Schelt

13 December, 2025 - 07:12 AM

I love how this piece doesn’t just scream ‘compliance!’ but actually paints governance as the quiet superhero of AI-no capes, just clean data and quiet confidence. Think about it: the most powerful LLM in the world is useless if it can’t speak without risking a lawsuit or embarrassing your brand. I’ve seen companies burn millions chasing shiny models, only to realize their biggest asset was the humble, well-documented dataset they ignored for months. Governance isn’t the boring stuff-it’s the foundation you don’t see until it’s gone. And when it’s gone? You’re left holding a very expensive, very dangerous paperweight.