Best PHP AI Scripts - Page 3

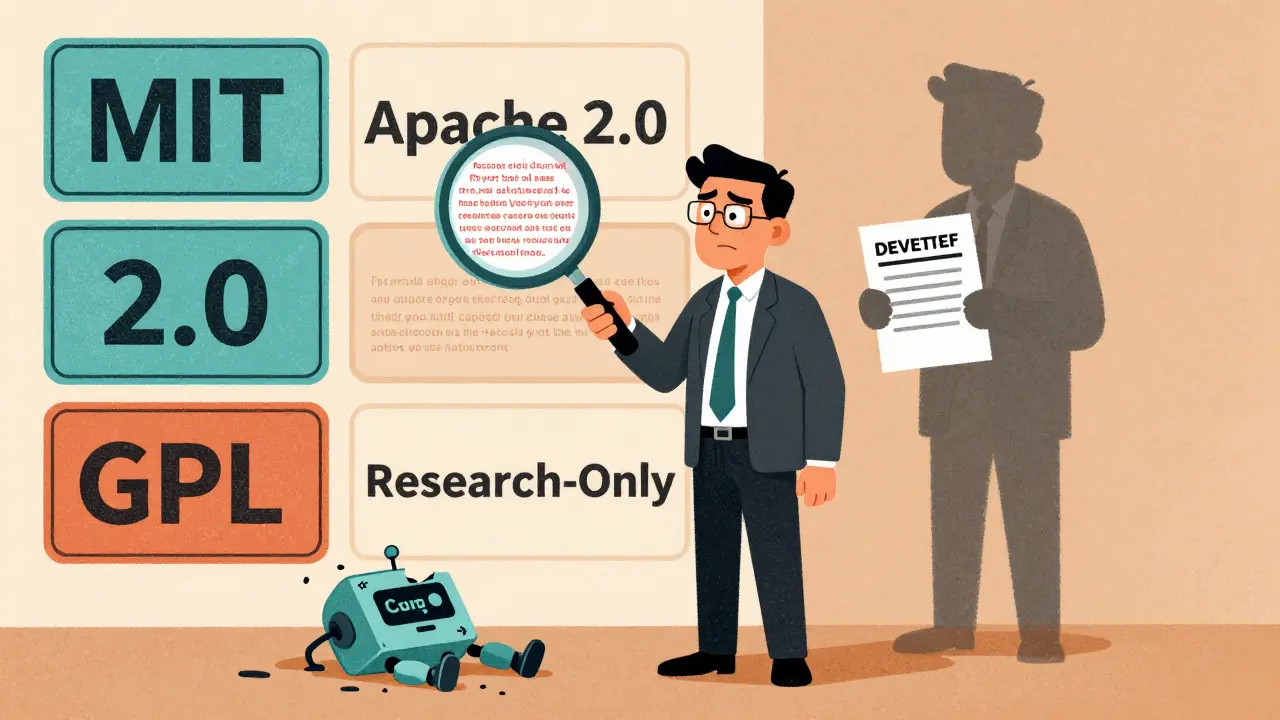

Legal and Licensing Considerations for Deploying Open-Source Large Language Models

Open-source LLMs can save millions in API costs, but only if you follow the license rules. Learn how MIT, Apache 2.0, and GPL licenses affect commercial use, training data risks, and compliance steps to avoid lawsuits.

Model Compression Economics: How Quantization and Distillation Cut LLM Costs by 90%

Quantization and distillation cut LLM inference costs by up to 95%, enabling affordable AI on edge devices and budget clouds. Learn how these techniques work, when to use them, and what hardware you need.

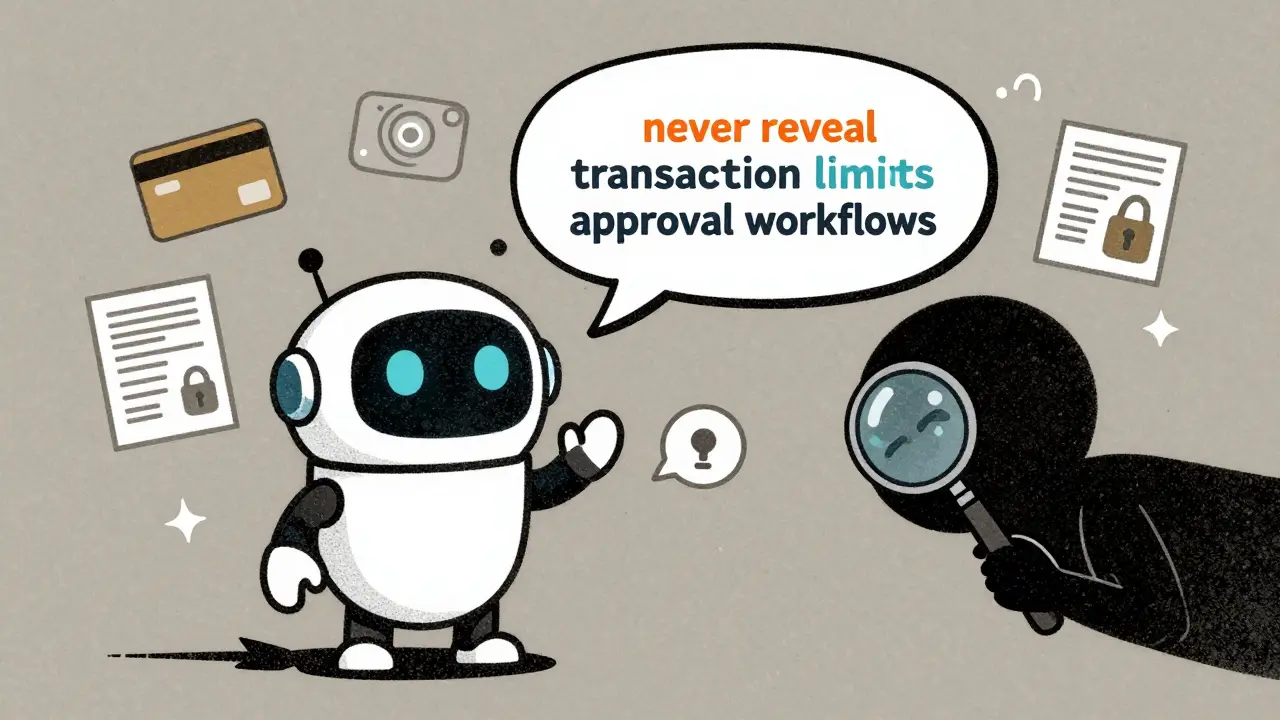

How to Prevent Sensitive Prompt and System Prompt Leakage in LLMs

System prompt leakage is now a top AI security threat, letting attackers steal hidden instructions from LLMs. Learn how to stop it with proven techniques like output filtering, instruction defense, and external guardrails.

Multimodal Generative AI: Models That Understand Text, Images, Video, and Audio

Multimodal generative AI understands text, images, audio, and video together, making it smarter than older AI systems. Learn how models like GPT-4o and Llama 4 work, where they’re used, and why they’re changing industries in 2025.

Retail and Generative AI: How AI Is Transforming Product Copy, Merchandising, and Visual Assets

Generative AI is transforming retail by automating product copy, personalizing merchandising, and creating virtual try-ons. Learn how top retailers are using AI to boost conversions, cut costs, and stay ahead without losing brand voice.

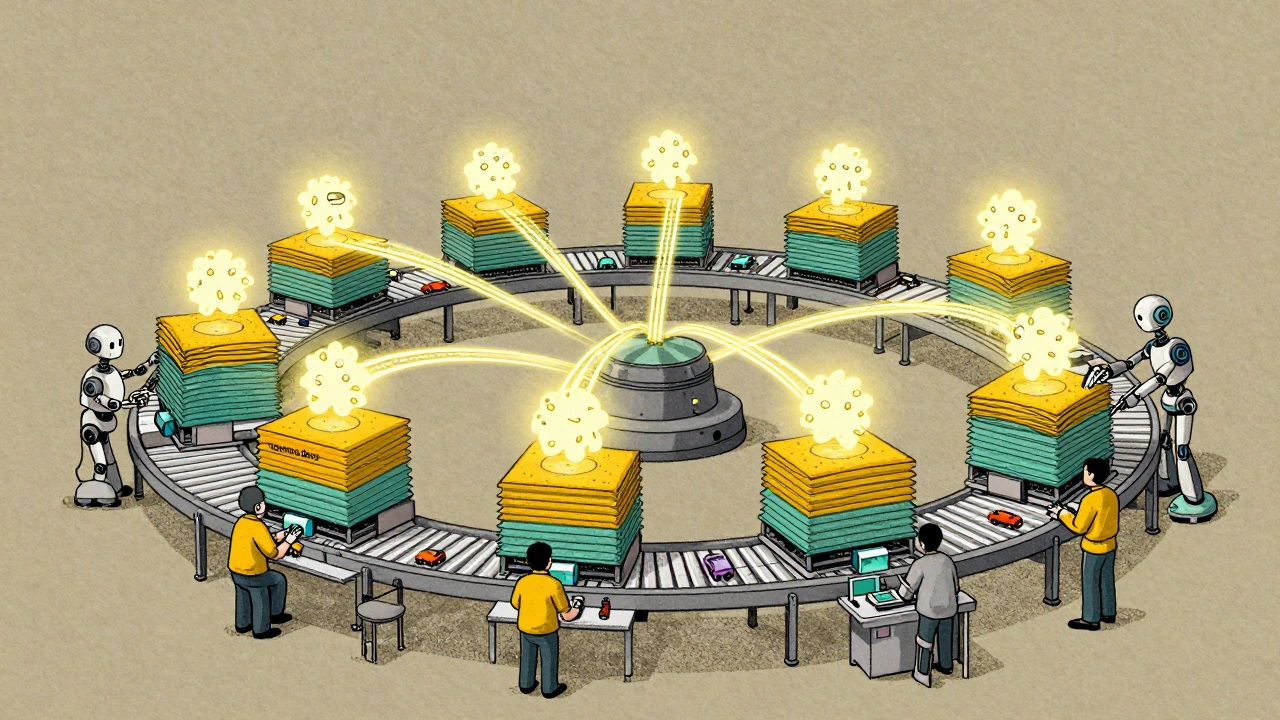

Model Parallelism and Pipeline Parallelism in Large Generative AI Training

Model and pipeline parallelism enable training of massive generative AI models by splitting them across multiple GPUs. Learn how these techniques overcome GPU memory limits and power models like GPT-3 and Claude 2.

Few-Shot vs Fine-Tuned Generative AI: How Product Teams Should Decide

Learn how product teams should choose between few-shot learning and fine-tuning for generative AI. Real cost, performance, and time comparisons for practical decision-making.

Governance Policies for LLM Use: Data, Safety, and Compliance in 2025

In 2025, U.S. governance policies for LLMs demand strict controls on data, safety, and compliance. Federal rules push innovation, but states like California enforce stricter safeguards. Know your obligations before you deploy.

Multi-Head Attention in Large Language Models: How Parallel Perspectives Power Modern AI

Multi-head attention lets large language models understand language from multiple angles at once, enabling breakthroughs in context, grammar, and meaning. Learn how it works, why it dominates AI, and what's next.

Confidential Computing for LLM Inference: How TEEs and Encryption-in-Use Protect AI Models and Data

Confidential computing uses hardware-based Trusted Execution Environments to protect LLM models and user data during inference. Learn how encryption-in-use with TEEs from NVIDIA, Azure, and Red Hat solves the AI privacy paradox for enterprises.

Export Controls and AI Models: How Global Teams Stay Compliant in 2025

In 2025, exporting AI models is tightly regulated. Global teams must understand thresholds, deemed exports, and compliance tools to avoid fines and keep operations running smoothly.

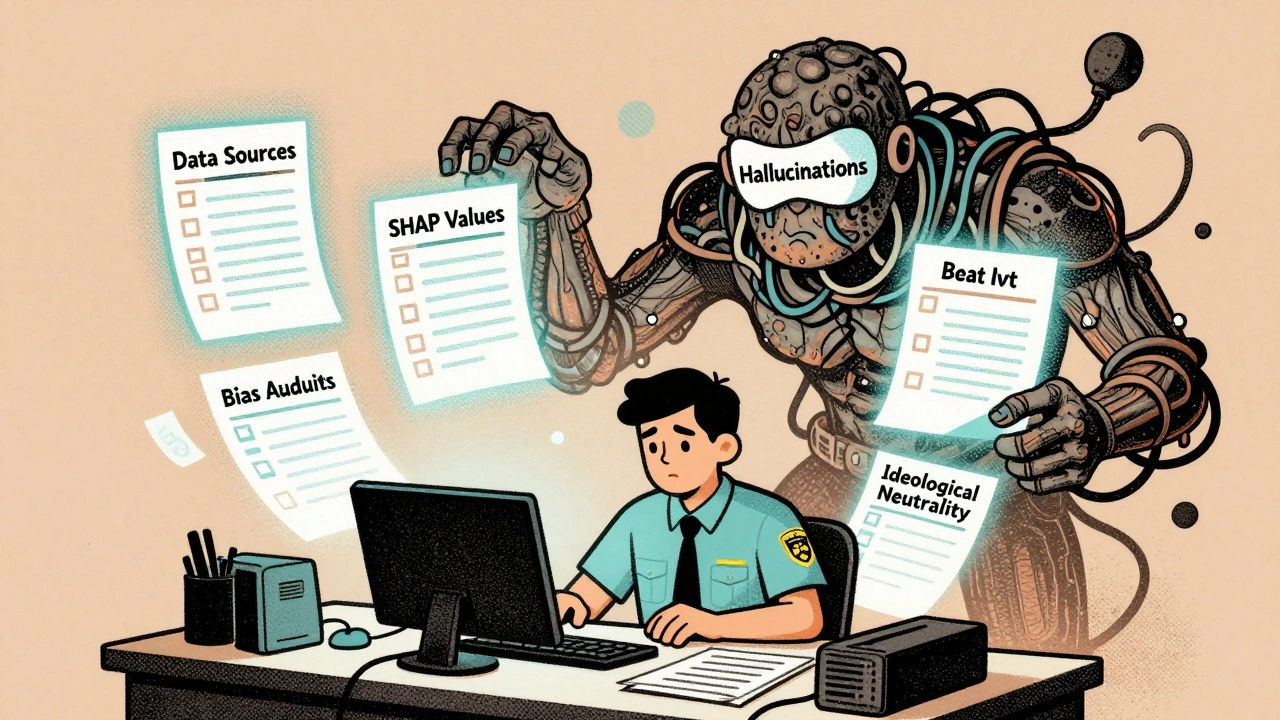

Error Analysis for Prompts in Generative AI: Diagnosing Failures and Fixes

Error analysis for prompts in generative AI helps diagnose why AI models give wrong answers and how to fix them. Learn the five-step process, key metrics, and tools that cut hallucinations by up to 60%.
