Large Language Models: How to Deploy, Govern, and Optimize Them in Production

A large language model (LLM) is an AI system trained on massive text datasets to generate human-like responses. LLMs power chatbots, content tools, and internal automation, but only if you manage them like a real product, not a demo. Most teams start with a cool proof of concept and hit a wall when they try to ship it. Only 14% of LLM projects make it to production, not because the tech is broken, but because nobody planned for the hidden costs, legal risks, and scaling headaches.

That’s why you need to think about LLM deployment: the full lifecycle of running a language model in a real app, from infrastructure to monitoring. It’s not just about picking a model like GPT-4 or Claude. It’s about how you handle data residency, model weights, and inference servers. And if you’re serving users across borders, you can’t ignore LLM governance: the policies, tools, and audits that ensure your model doesn’t break laws or spread harmful content. California’s AI laws, GDPR, and export controls aren’t suggestions; they’re fines waiting to happen if you skip this.

Then there’s the money. LLM cost optimization, the practice of reducing cloud spend without losing performance through autoscaling, spot instances, and smarter prompting, isn’t optional. Your bill depends less on how many users you have than on how many tokens they consume, how often they hit peak times, and whether you’re running a 70B model when a 7B one would do. And if you’re using multiple providers, you risk vendor lock-in unless you’ve built in LLM interoperability: the ability to switch models or providers without rewriting your whole app, using tools like LiteLLM or LangChain.
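Because billing is per token, a first-pass cost estimate is simple arithmetic. The sketch below is a hypothetical calculator: the `ModelPricing` class, the model names, and the per-million-token prices are placeholder assumptions for illustration, not any provider’s actual rates.

```python
from dataclasses import dataclass


@dataclass
class ModelPricing:
    """Per-million-token prices in USD. Values used below are hypothetical placeholders."""
    name: str
    input_per_million: float
    output_per_million: float


def monthly_cost(pricing: ModelPricing, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate monthly spend from token volume (user count never appears here)."""
    total_in = requests_per_day * input_tokens * days
    total_out = requests_per_day * output_tokens * days
    return (total_in * pricing.input_per_million
            + total_out * pricing.output_per_million) / 1_000_000


# Hypothetical price points for a large and a small model.
large = ModelPricing("large-70b (hypothetical)", input_per_million=3.00, output_per_million=15.00)
small = ModelPricing("small-7b (hypothetical)", input_per_million=0.20, output_per_million=0.60)

for model in (large, small):
    cost = monthly_cost(model, requests_per_day=10_000, input_tokens=800, output_tokens=300)
    print(f"{model.name}: ${cost:,.2f}/month")
```

Under these made-up prices the same traffic costs roughly 20x more on the larger model, which is why right-sizing the model usually matters more than headcount.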

This collection isn’t about theory. It’s about what works when the clock is ticking and the server bills are piling up. You’ll find real guides on trimming LLM costs by 60%, enforcing data privacy with confidential computing, measuring truthfulness with TruthfulQA, and avoiding compliance disasters with state-level AI laws. You’ll learn how to use RAG instead of fine-tuning, how to audit your supply chain for poisoned model weights, and how to keep your AI-generated UI consistent across teams. This isn’t blog fluff; these are battle-tested tactics from teams who’ve already been burned.

Whether you’re a founder trying to ship fast, a dev managing cloud bills, or a compliance officer trying to sleep at night, the answers here are practical, specific, and built for the real world, not the demo room.

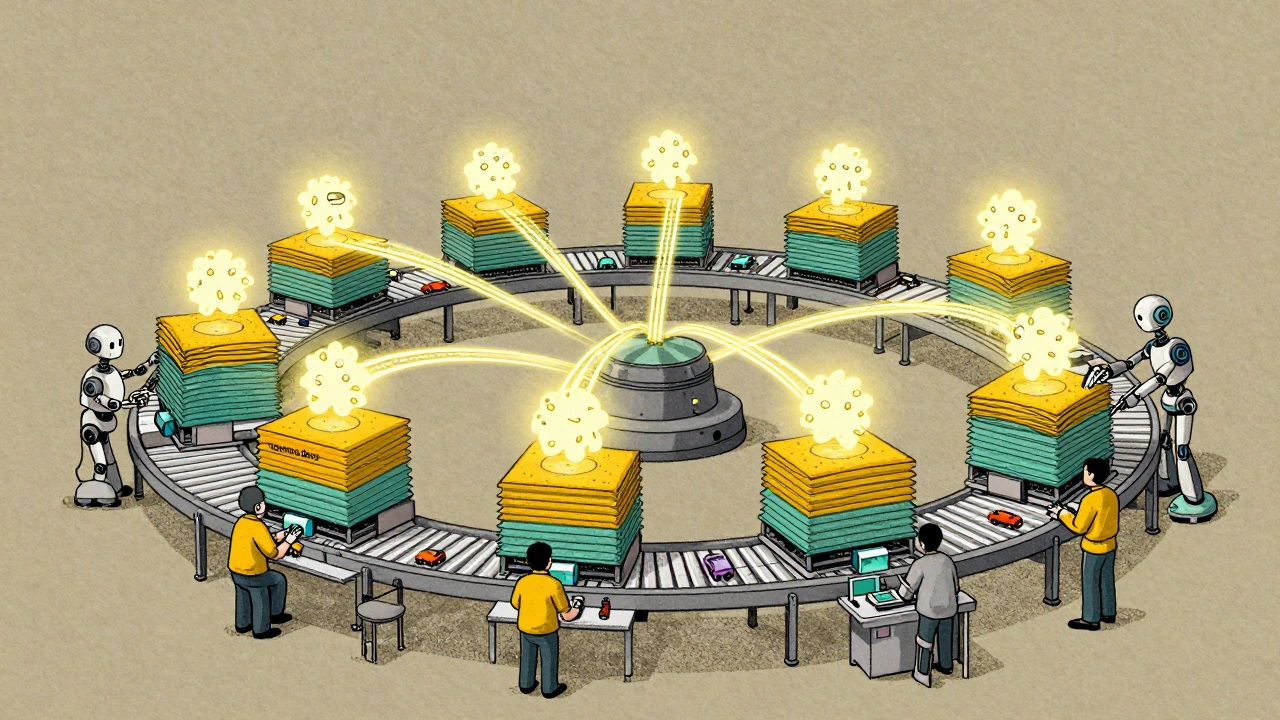

Model Parallelism and Pipeline Parallelism in Large Generative AI Training

Model and pipeline parallelism enable training of massive generative AI models by splitting them across multiple GPUs. Learn how these techniques overcome GPU memory limits and power models like GPT-3 and Claude 2.
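To make pipeline parallelism concrete, here is a minimal sketch that assigns contiguous blocks of layers to GPUs (one pipeline stage per GPU) and computes the idle "bubble" of a GPipe-style schedule, (p − 1) / (m + p − 1) for p stages and m microbatches. It assumes every layer costs the same and every microbatch takes the same time per stage; the function names are hypothetical, not taken from any training framework.

```python
def partition_layers(num_layers: int, num_stages: int) -> list[range]:
    """Split layer indices into contiguous, near-equal stages (one stage per GPU)."""
    base, extra = divmod(num_layers, num_stages)
    stages, start = [], 0
    for s in range(num_stages):
        size = base + (1 if s < extra else 0)  # spread any remainder over the first stages
        stages.append(range(start, start + size))
        start += size
    return stages


def bubble_fraction(num_stages: int, num_microbatches: int) -> float:
    """Idle share of a GPipe-style pass: each stage is busy m of the (m + p - 1) time slots."""
    p, m = num_stages, num_microbatches
    return (p - 1) / (m + p - 1)


for gpu, stage in enumerate(partition_layers(48, 4)):
    print(f"GPU {gpu}: layers {stage.start}-{stage.stop - 1}")
print(f"bubble with 1 microbatch:   {bubble_fraction(4, 1):.1%}")
print(f"bubble with 16 microbatches: {bubble_fraction(4, 16):.1%}")
```

Splitting each batch into more microbatches keeps more stages busy at once, which is the main lever for shrinking the pipeline bubble.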

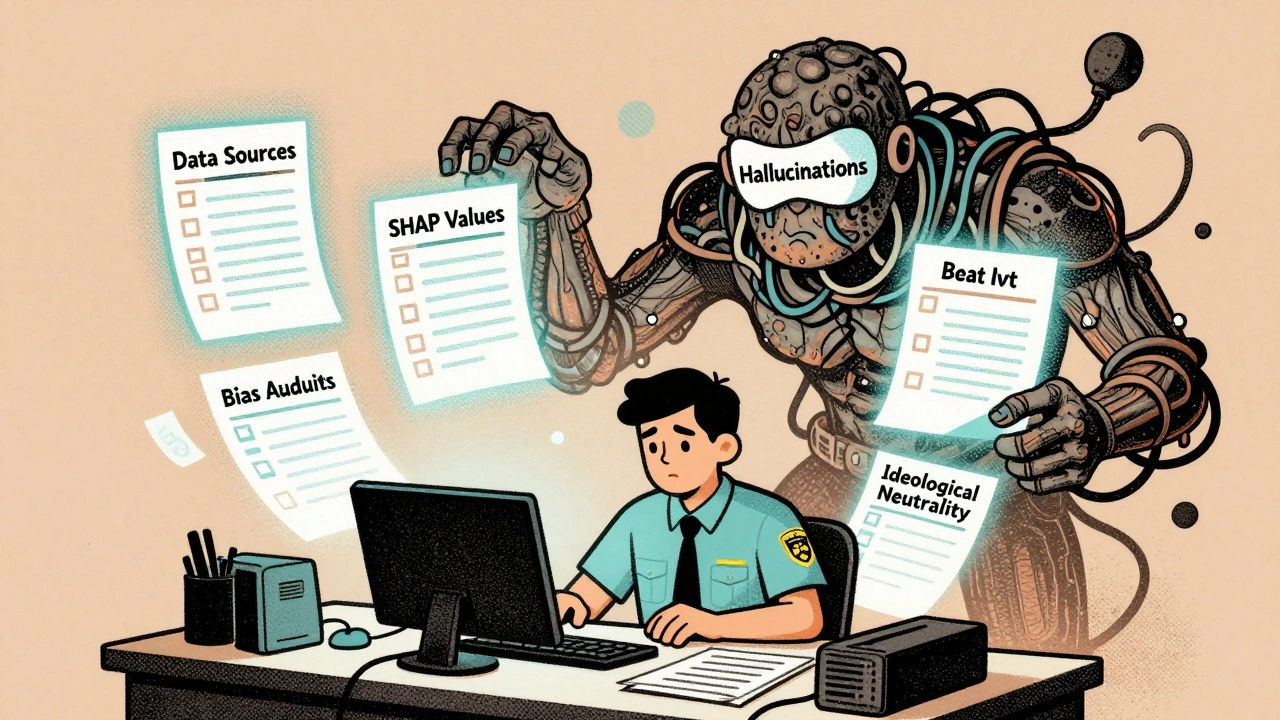

Governance Policies for LLM Use: Data, Safety, and Compliance in 2025

In 2025, U.S. governance policies for LLMs demand strict controls on data, safety, and compliance. Federal rules push innovation, but states like California enforce stricter safeguards. Know your obligations before you deploy.

Multi-Head Attention in Large Language Models: How Parallel Perspectives Power Modern AI

Multi-head attention lets large language models understand language from multiple angles at once, enabling breakthroughs in context, grammar, and meaning. Learn how it works, why it dominates AI, and what's next.
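The core mechanism fits in a few dozen lines. This is a minimal pure-Python sketch (no batching, masking, or biases, and no particular library’s API) of standard multi-head attention: project the input into queries, keys, and values, split the model dimension into heads, run scaled dot-product attention softmax(QKᵀ/√d_k)V in each head independently, then concatenate and apply an output projection. The weight matrices are assumed to be square d_model × d_model lists supplied by the caller.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x k) and a (k x m) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention for one head: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(q[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                         w_o: Matrix, num_heads: int) -> Matrix:
    """Project, split into heads, attend per head, concatenate, and project back."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)

    head_outputs = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)  # this head's slice of the features
        head_outputs.append(attention([r[cols] for r in q],
                                      [r[cols] for r in k],
                                      [r[cols] for r in v]))

    # Concatenate every head's output for each token along the feature dimension.
    concat = [[val for head in head_outputs for val in head[i]] for i in range(len(x))]
    return matmul(concat, w_o)
```

Each head sees only its own slice of the projected features, so different heads can learn to weight different token relationships before their outputs are merged.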

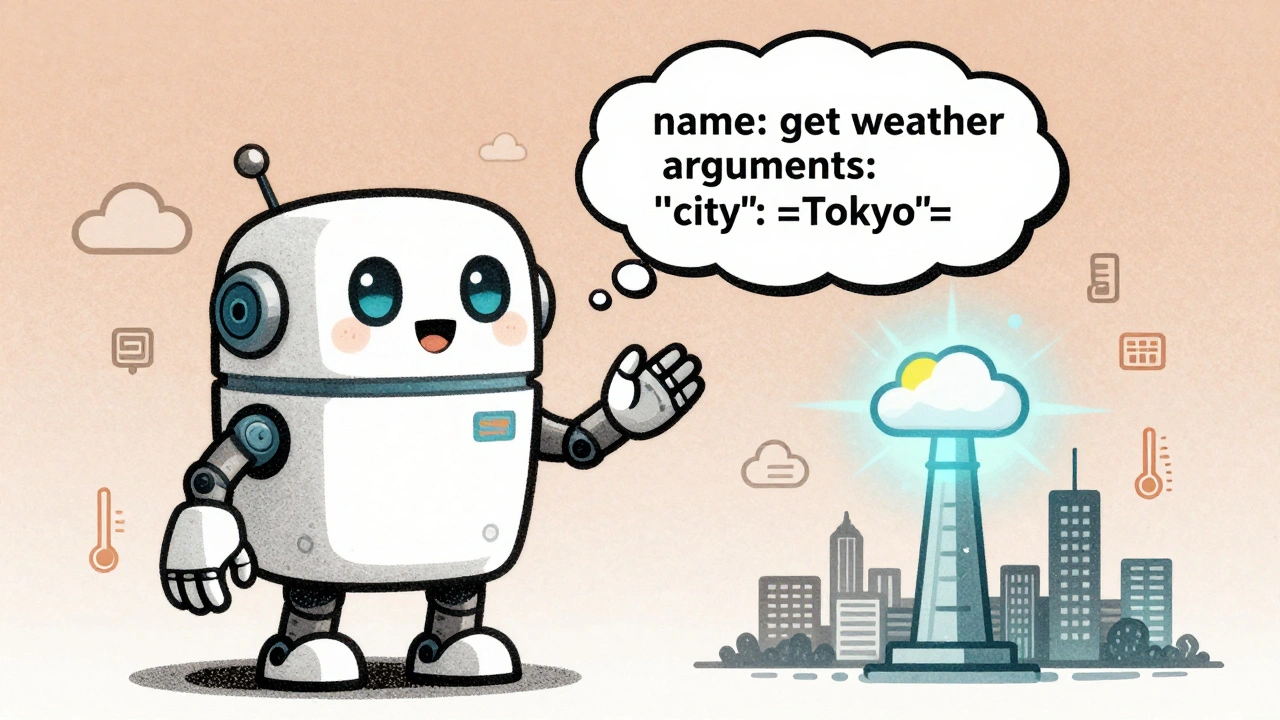

Tool Use with Large Language Models: Function Calling and External APIs Explained

Function calling lets large language models interact with real tools and APIs to access live data, reducing hallucinations and improving accuracy. Learn how it works, how major models compare, and how to build it safely.
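On the application side, function calling comes down to parsing the model’s requested call, checking it against an allow-list, running it, and returning the result to the model. The sketch below assumes a simplified call format, `{"name": ..., "arguments": {...}}`, loosely modeled on what providers emit but not any vendor’s exact schema; `get_weather` is a hypothetical stub standing in for a live API.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> dict[str, Any]:
    """Hypothetical tool stub; a real app would call a live weather API here."""
    return {"city": city, "temp_c": 21}


# Allow-list of tools the model may invoke; anything else is refused.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_tool_call(raw_call: str) -> str:
    """Parse a model-emitted tool call, run the allowed function, return JSON for the model."""
    call = json.loads(raw_call)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        result = TOOLS[name](**args)
    except TypeError as exc:  # e.g. wrong or missing arguments supplied by the model
        return json.dumps({"error": str(exc)})
    return json.dumps({"result": result})


print(dispatch_tool_call('{"name": "get_weather", "arguments": {"city": "Paris"}}'))
print(dispatch_tool_call('{"name": "delete_db", "arguments": {}}'))
```

Returning errors as data, rather than raising, lets the model see what went wrong and retry with corrected arguments, while the allow-list keeps it from reaching anything you did not expose.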

How to Build a Domain-Aware LLM: The Right Pretraining Corpus Composition

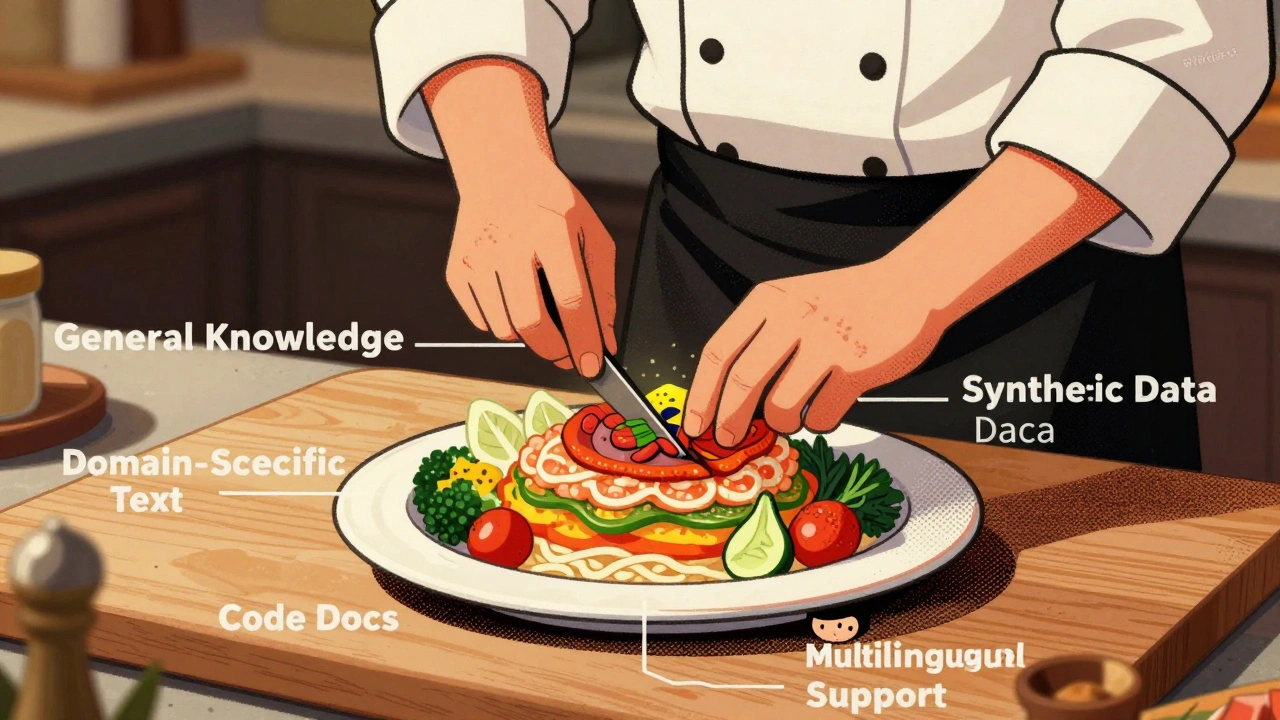

Learn how to build domain-aware LLMs by strategically composing pretraining corpora with the right mix of data types, ratios, and preprocessing techniques to boost accuracy while reducing costs.
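One common way to enforce a corpus mix is to sample each training document’s source in proportion to a target weight. This is a minimal sketch under stated assumptions: the source names and the 50/30/15/5 split in `MIXTURE` are hypothetical, and it mixes by document count, whereas many real pipelines balance by token count instead.

```python
import random

# Hypothetical mixture for a legal-domain model; the ratios are illustrative only.
MIXTURE = {"general_web": 0.50, "legal_filings": 0.30, "case_law": 0.15, "code": 0.05}


def sample_sources(mixture: dict[str, float], n: int, seed: int = 0) -> dict[str, int]:
    """Draw n documents, choosing each one's source in proportion to its mixture weight."""
    total = sum(mixture.values())  # normalize in case the weights don't sum to 1
    names = list(mixture)
    weights = [mixture[name] / total for name in names]
    rng = random.Random(seed)  # seeded so the mix is reproducible across runs
    counts = {name: 0 for name in names}
    for source in rng.choices(names, weights=weights, k=n):
        counts[source] += 1
    return counts


print(sample_sources(MIXTURE, n=10_000, seed=42))
```

Keeping the ratios in one explicit table makes it easy to run ablations, for example raising the domain share and measuring accuracy against cost.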

Distributed Training at Scale: How Thousands of GPUs Power Large Language Models

Distributed training at scale lets companies train massive LLMs using thousands of GPUs. Learn how hybrid parallelism, hardware limits, and communication overhead shape real-world AI training today.
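The basic arithmetic of hybrid parallelism can be sketched in a few functions. The total GPU count is the product of the data-, tensor-, and pipeline-parallel degrees. Tensor and pipeline parallelism shard the weights, while data parallelism replicates them and synchronizes gradients; with a ring all-reduce, each GPU sends about 2(N − 1)/N times the gradient payload per step. The 175B-parameter configuration below is a hypothetical example, and the sketch ignores optimizer state, activations, and uneven layer splits.

```python
def world_size(dp: int, tp: int, pp: int) -> int:
    """Total GPUs = data-parallel x tensor-parallel x pipeline-parallel degree."""
    return dp * tp * pp


def params_per_gpu(total_params: float, tp: int, pp: int) -> float:
    """Tensor and pipeline parallelism shard the weights; data parallelism replicates them."""
    return total_params / (tp * pp)


def ring_allreduce_bytes_per_gpu(gradient_bytes: float, dp: int) -> float:
    """Bytes each GPU sends in a ring all-reduce over dp replicas: 2 * (N - 1) / N * payload."""
    return 2 * (dp - 1) / dp * gradient_bytes


# Hypothetical configuration: 175B parameters, bf16 (2 bytes per value).
dp, tp, pp = 8, 8, 16
shard = params_per_gpu(175e9, tp=tp, pp=pp)
print(f"GPUs: {world_size(dp, tp, pp)}")
print(f"weights per GPU: {shard * 2 / 1e9:.2f} GB")
print(f"gradient traffic per GPU per step: {ring_allreduce_bytes_per_gpu(shard * 2, dp) / 1e9:.2f} GB")
```

The last line shows why communication overhead grows with scale: every optimizer step moves gigabytes per GPU, so interconnect bandwidth becomes as important as raw compute.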