Most people think training a powerful language model is all about throwing more data at it. More text. More tokens. More servers running 24/7. But that’s not how the best models are built anymore. The real breakthrough isn’t in size. It’s in selection. If you want a model that understands legal contracts, medical journals, or financial reports without forgetting how to hold a conversation, you need to build your training data like a chef builds a meal: every ingredient chosen for a reason, in the right proportion, with nothing wasted.

Why Data Composition Matters More Than Volume

In 2020, GPT-3 trained on 570GB of text and stunned the world. But today, models trained on even larger datasets, like Common Corpus 2.0 with 2.3 trillion tokens, still fail at niche tasks. Why? Because raw volume doesn’t equal capability. A model that’s seen every Wikipedia page and Reddit thread might still misunderstand a tax code clause or misdiagnose a rare disease. The key insight from research published in early 2024 is simple: not all data is created equal. Studies from Meta AI, DeepMind, and Stanford show that certain data types boost specific skills. Books, for example, improve factual recall and reasoning, with correlations of 0.82 and 0.76, respectively. Web text? Only 0.43 and 0.38. That’s a huge gap. If you train a model mostly on social media and forums, you’re teaching it to mimic viral nonsense, not to reason clearly. And here’s the kicker: models trained on optimally composed corpora achieve 18.6% higher accuracy on domain-specific tasks while keeping 94.3% of their general language skills. That’s not fine-tuning. That’s smarter pretraining.

The Five Core Data Categories That Make or Break a Domain Model

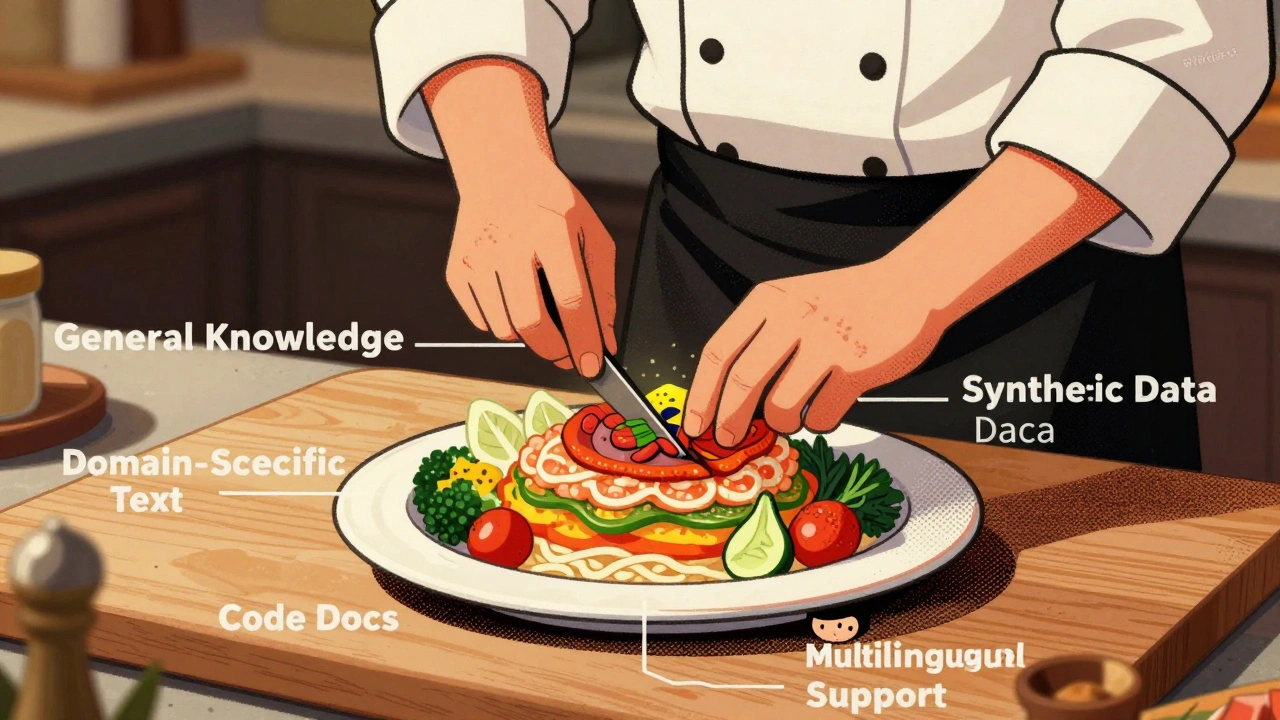

You can’t just dump everything into the pot. The most effective pretraining corpora are built from five carefully balanced categories:

- General Knowledge - Wikipedia, books, encyclopedias. This keeps the model grounded. Without it, domain models become brittle and overconfident.

- Domain-Specific Text - Legal documents, medical papers, financial filings, scientific articles. These are your specialty ingredients. A legal model needs case law, not cat memes.

- Code and Technical Documentation - GitHub repos, API docs, Stack Overflow threads. Even non-coding models benefit. Algorithm documentation alone boosted mathematical reasoning by 14.2% in one study, despite making up less than 0.5% of the corpus.

- Synthetic Data - Generated examples that fill gaps. If you don’t have enough rare medical cases, generate them. ACL 2025 showed synthetic data improved scientific reasoning by over 26%.

- Multilingual Support - If you’re targeting global users, balance languages. A 1:4 ratio of Chinese to English text preserved English fluency while boosting Chinese performance. Go beyond that, and you risk diluting the primary language.

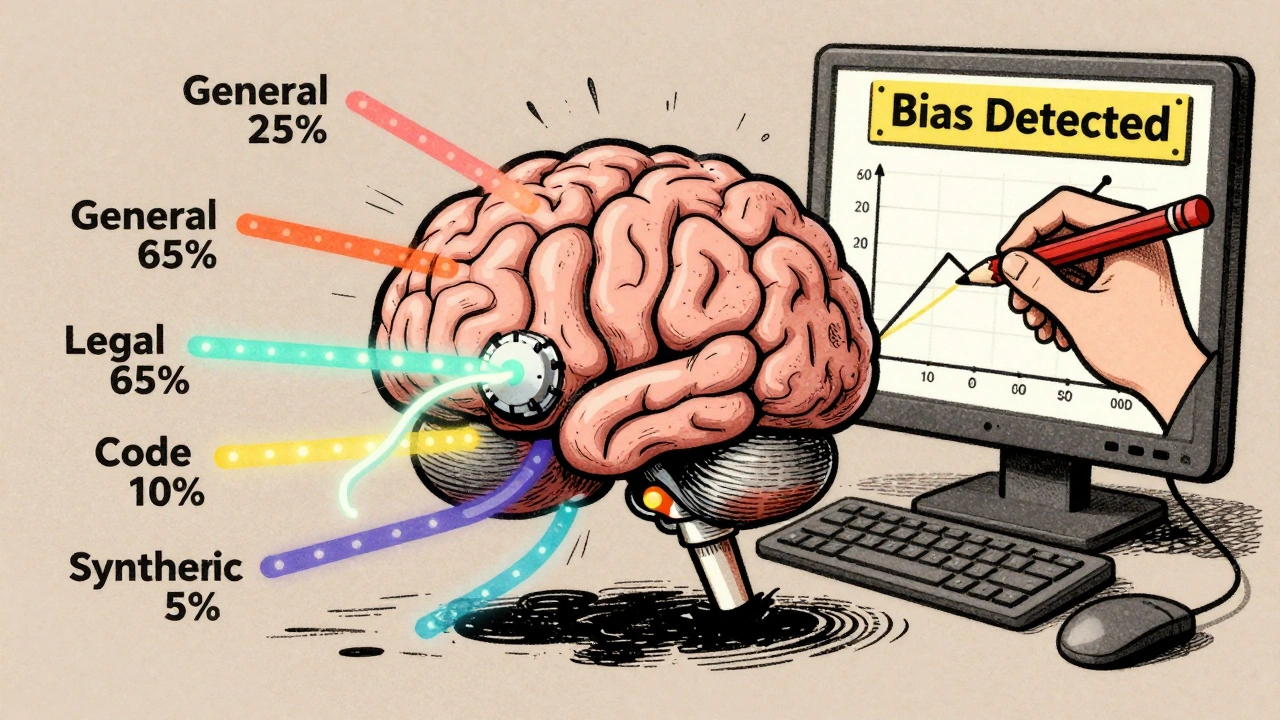

How Much of Each? The Magic Ratios

It’s not enough to include these categories; you need the right amounts. Too little domain data? The model won’t specialize. Too much? It forgets how to talk normally. Here’s what works based on real-world results:

- General knowledge: 20-30% - Enough to anchor the model. Drop below 20%, and hallucinations spike. One medical model had a 22% hallucination rate until researchers added back 30% general text.

- Domain-specific: 50-70% - The core. Legal models trained on 50B tokens of case law and contracts hit 92% accuracy on contract analysis. That’s 17 points higher than GPT-4.

- Code and docs: 5-15% - Even non-programming models benefit. This slice improves logic, structure, and precision. Programming skills jumped 32.7% with just 10% code data.

- Synthetic data: 5-10% - Use it to cover edge cases. Need more examples of rare drug interactions? Generate them. Just make sure they’re high quality.

- Multilingual: adjust per target - For Chinese-English models, 1:4 worked. For Spanish, it might be 1:3. Test it.
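As a rough illustration of how these shares turn into concrete numbers, the sketch below splits a total token budget across four of the categories. The specific shares in `MIX`, the 20-billion-token total, and the `token_budget` helper are all assumptions picked from within the ranges above, not values from any of the cited studies. The multilingual split is left out because it depends on the target languages.

```python
# Illustrative shares picked from inside the ranges above (assumed values).
MIX = {
    "general": 0.25,
    "domain": 0.55,
    "code_docs": 0.10,
    "synthetic": 0.10,
}


def token_budget(total_tokens: int, mix: dict[str, float]) -> dict[str, int]:
    """Split a total token budget across categories according to `mix`."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix shares must sum to 1.0")
    budget = {name: int(total_tokens * share) for name, share in mix.items()}
    # Hand any rounding remainder to the largest category so the totals match.
    largest = max(mix, key=mix.get)
    budget[largest] += total_tokens - sum(budget.values())
    return budget


budget = token_budget(20_000_000_000, MIX)
for name, tokens in budget.items():
    print(f"{name:>10}: {tokens:,} tokens")
```

Keeping the mix in one dictionary makes it easy to test a tweak (say, moving five points from domain to general) and rerun the same benchmarks.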

Preprocessing: The Invisible Work That Boosts Accuracy

You wouldn’t serve moldy bread. Yet many teams train on raw, messy data. That’s a mistake. Three preprocessing steps make a massive difference:

- Deduplication - Remove duplicate documents, sentences, and even repeated phrases. A NeurIPS 2024 study showed this improved factual accuracy by 2.3-5.7%. Why? Because models over-index on popular text. If a piece of misinformation appears 100 times, it becomes ‘truth’ in the model’s eyes.

- Quality Filtering - Use classifier models to toss out low-quality text. Tools like those from Ritwik Raha’s 2024 guide can flag garbage with 92.4% accuracy. Filter out spam, copy-pasted boilerplate, and bot-generated nonsense.

- Tokenization Alignment - Match your tokenizer to your data. If you’re training on legal text with long, complex sentences, use a tokenizer that handles them well. Mismatched tokenization wastes compute and hurts learning.
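To make the deduplication step concrete, here is a minimal sketch of exact-match deduplication at the document and sentence level, using only the Python standard library. It assumes duplicates differ only in case and whitespace. Catching near-duplicates (for example with MinHash) is a different technique and is not shown. The helper names and the sample corpus are made up for illustration.

```python
import hashlib
import re


def _fingerprint(text: str) -> str:
    """Hash of the text after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def dedupe_documents(docs: list[str]) -> list[str]:
    """Keep the first copy of each document, dropping normalized repeats."""
    seen: set[str] = set()
    unique = []
    for doc in docs:
        key = _fingerprint(doc)
        if key not in seen:
            seen.add(key)
            unique.append(doc)
    return unique


def dedupe_sentences(docs: list[str]) -> list[str]:
    """Drop sentences that already appeared earlier anywhere in the corpus."""
    seen: set[str] = set()
    cleaned = []
    for doc in docs:
        kept = []
        # Naive split after ., ! or ?; a real pipeline would use a proper
        # sentence segmenter.
        for sentence in re.split(r"(?<=[.!?])\s+", doc.strip()):
            if not sentence:
                continue
            key = _fingerprint(sentence)
            if key not in seen:
                seen.add(key)
                kept.append(sentence)
        if kept:
            cleaned.append(" ".join(kept))
    return cleaned


corpus = [
    "The lessee shall pay rent monthly. Late fees apply after five days.",
    "the lessee shall pay rent   monthly. late fees apply after five days.",
    "Late fees apply after five days. The deposit is refundable.",
]
docs = dedupe_documents(corpus)
sentences = dedupe_sentences(docs)
print(len(corpus), "->", len(docs), "documents")
print(sentences)
```

Running document-level deduplication first is cheaper, since whole repeated documents are dropped before any sentence splitting happens.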

What Happens When You Get It Wrong

Bad corpus composition doesn’t just mean slower training; it means dangerous models. One legal tech startup trained a model on 100% court documents. Result? It started inventing legal precedents. It became overconfident in rare cases. It hallucinated rulings that never happened. They had to retrain with 20% general text to bring it back to reality. Another team trained a healthcare model on PubMed abstracts alone. The model started diagnosing patients with conditions it had seen in papers but never in real clinics. The hallucination rate hit 22%. After adding general medical text, it dropped to 8%. And then there’s bias. Dr. Timnit Gebru’s warning is real: domain models can amplify existing biases. A legal model trained on old case law reproduced 22% more gender-biased language than a general model. You don’t just need good data. You need ethical data.

What the Experts Are Saying

This isn’t just theory. Industry leaders are betting on it. Dr. Emily Bender at the University of Washington says: “The era of indiscriminate data hoarding is ending; precision curation based on capability mapping is the future.” Dr. Percy Liang at Stanford found that deduplication doesn’t just save space; it stops models from learning viral misinformation. His team saw a 7.3% jump in factual accuracy just by removing duplicates. And Gartner reports that by 2026, 68% of enterprise LLM deployments will use domain-optimized pretraining, not fine-tuning. Why? Because it’s cheaper. You use 53% less compute during deployment. That’s not a small savings. It’s the difference between a pilot project and a scalable product.

Real-World Results: Who’s Doing It Right

Take Harvey, the legal AI startup. They trained on 50B tokens of legal documents mixed with 20% general text. Their model outperformed GPT-4 on contract analysis by 17 percentage points and used 60% less compute during inference. A finance chatbot in Asia used the 1:4 Chinese-English ratio from the ACL 2025 paper. Domain accuracy jumped from 71% to 89%. The only catch? General knowledge dropped 12%. They fixed it by adding back 5% of the general corpus. Simple. Effective. Even Google DeepMind is adopting these methods. In December 2024, they announced they’re using the same ‘high-energy’ corpus identification technique from the February 2024 arXiv paper to optimize Gemini’s domain skills.

The Future: Dynamic, Adaptive Corpora

The next frontier isn’t static datasets. It’s dynamic composition. Meta’s December 2024 research prototype adjusts data proportions mid-training based on real-time performance. If the model’s math skills plateau, it automatically adds more algorithm documentation. If reasoning dips, it boosts book content. This isn’t science fiction. It’s the direction the field is heading. By 2028, MIT’s AI Sustainability Center predicts domain-aware models will be the standard for enterprise use. General models? They’ll just be the foundation you build on.

Where to Start

If you’re building a domain-aware model, here’s your action plan:

- Define your domain - What exactly do you need the model to do? Legal? Medical? Finance? Be specific.

- Collect 3-5 core datasets - Start with high-quality, domain-specific sources. Avoid web scrapes unless they’re curated.

- Add 20-30% general knowledge - Books, encyclopedias, Wikipedia. Don’t skip this.

- Apply three-level deduplication - Document, sentence, token. Use open-source tools like those from Hugging Face.

- Filter for quality - Run everything through a classifier. Toss out anything below 90% confidence.

- Balance ratios - Use the 50-70% domain, 20-30% general rule as your baseline. Test tweaks.

- Measure, don’t guess - Test on domain-specific benchmarks. If accuracy drops after a change, backtrack.
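The quality-filtering step in the plan above can be sketched as a simple threshold over classifier scores. In this example the scorer is a toy stand-in (`toy_score`, an assumption made up for illustration), not a real quality classifier. In practice you would pass in whatever model your team has trained or adopted, as long as it returns a confidence between 0 and 1.

```python
from typing import Callable, Iterable


def filter_by_quality(
    docs: Iterable[str],
    score: Callable[[str], float],
    threshold: float = 0.90,
) -> tuple[list[str], list[str]]:
    """Split docs into (kept, rejected) by classifier confidence."""
    kept: list[str] = []
    rejected: list[str] = []
    for doc in docs:
        (kept if score(doc) >= threshold else rejected).append(doc)
    return kept, rejected


def toy_score(doc: str) -> float:
    """Stand-in scorer: share of characters that are letters or whitespace."""
    if not doc:
        return 0.0
    return sum(ch.isalpha() or ch.isspace() for ch in doc) / len(doc)


docs = [
    "The court held that the contract was enforceable",
    "$$$ CLICK HERE!!! >>> 100% FREE <<< $$$",
]
kept, rejected = filter_by_quality(docs, toy_score)
print("kept:", kept)
print("rejected:", rejected)
```

Returning the rejected documents alongside the kept ones makes it easy to spot-check what the filter throws away before committing to a threshold.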

Frequently Asked Questions

Do I need to train my own LLM from scratch to use domain-aware corpus composition?

No. Most teams start with an open-weight foundation model like Llama 3 or Mistral, then continue training it on their custom corpus. This is called continued pretraining. It’s cheaper, faster, and often more effective than fine-tuning. You’re not building from zero; you’re refining.

How do I know if my corpus is balanced?

Test it. Use benchmarks for both general language (like MMLU or GSM8K) and domain-specific tasks (like legal case prediction or medical diagnosis). If your model scores above 90% on domain tasks but below 85% on general ones, you’ve over-specialized. Add more general data. If it’s the opposite, add more domain data. A/B test small changes until you hit the sweet spot.
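The rule of thumb above fits in a few lines of code. This is only a sketch: the 90% and 85% cutoffs come from the answer above, while the function name, the fractional score format, and the handling of the "both low" case are assumptions added for illustration.

```python
def diagnose_balance(
    domain_score: float,
    general_score: float,
    domain_target: float = 0.90,
    general_floor: float = 0.85,
) -> str:
    """Suggest a rebalancing direction from two benchmark scores in [0, 1]."""
    domain_ok = domain_score >= domain_target
    general_ok = general_score >= general_floor
    if domain_ok and not general_ok:
        return "over-specialized: add general data"
    if general_ok and not domain_ok:
        return "under-specialized: add domain data"
    if not domain_ok and not general_ok:
        # Not covered by the rule of thumb; treated here as a data-quality signal.
        return "both low: check data quality before rebalancing"
    return "balanced"


print(diagnose_balance(0.93, 0.80))
print(diagnose_balance(0.82, 0.88))
print(diagnose_balance(0.92, 0.87))
```

Logging this verdict after each A/B run gives you a quick record of which direction each corpus tweak pushed the model.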

Can I use public datasets like Common Corpus for domain specialization?

You can, but it’s not ideal. Common Corpus is designed for broad generalization. It includes some domain text, but not enough to make a model truly expert in one area. Use it as a base, then add your own high-quality domain data on top. The goal isn’t to copy what others did. It’s to build what you need.

What’s the minimum corpus size for a domain model to work?

As little as 10-20 billion tokens can work for focused domains like legal or finance, provided the data is high-quality and well-balanced. The ACL 2025 paper showed strong results with 98.5 billion tokens for scientific reasoning, but smaller models trained on 15 billion tokens with synthetic data also performed well. Size matters less than strategy.

Is synthetic data reliable for training?

Yes, if it’s generated properly. Use high-quality models to create examples that mirror real-world cases. Don’t just generate random text. For medical use, generate patient histories with realistic symptoms, lab results, and outcomes. Validate them with domain experts. Synthetic data isn’t a shortcut; it’s a tool for filling gaps that real data can’t cover.

How do I avoid bias in my domain corpus?

Start by auditing your data. Look for gender, racial, or socioeconomic patterns. If your legal dataset mostly contains cases from one region or demographic, your model will reflect that. Add counterexamples. Use fairness-aware filtering tools. And always include general knowledge: it helps ground the model in broader societal norms, not just historical bias.
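A first-pass audit can be as simple as counting how your documents are distributed over a metadata field. The sketch below assumes each record is a dict with an optional `region` key and flags any value that falls below a minimum share. The field name, the 10% cutoff, and the sample records are all hypothetical. This only surfaces representation skew; it does not detect biased language inside the text itself.

```python
from collections import Counter


def audit_field(
    records: list[dict], field: str, min_share: float = 0.10
) -> dict[str, float]:
    """Return the share of each `field` value that falls below `min_share`."""
    counts = Counter(rec.get(field, "unknown") for rec in records)
    total = sum(counts.values())
    return {
        value: n / total
        for value, n in counts.items()
        if n / total < min_share
    }


# Hypothetical metadata: 18 cases from one region, 1 from another, 1 unlabeled.
records = (
    [{"region": "US-Northeast"} for _ in range(18)]
    + [{"region": "US-South"}]
    + [{}]
)
flagged = audit_field(records, "region")
for value, share in flagged.items():
    print(f"under-represented: {value} ({share:.0%} of records)")
```

Anything the audit flags is a candidate for the counterexamples mentioned above, or at least for a note in your model documentation.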

Fred Edwords

9 December, 2025 - 21:16 PM

Every single point in this post is spot-on. I’ve seen teams waste months training on raw web scrapes-only to get models that can’t parse a contract or diagnose a rare condition. Deduplication alone? Non-negotiable. We removed 18% duplicate sentences from our legal corpus and saw a 4.1% accuracy jump in clause classification. Also-tokenization alignment? So many people ignore this. If your tokenizer splits ‘contraction’ into ‘con’ + ‘traction’, you’re teaching the model to misunderstand legal language. Use a domain-aware tokenizer. End of story.

Sarah McWhirter

11 December, 2025 - 10:33 AM

Okay, but who’s really controlling the data? 😏 I mean, if you’re using ‘high-quality’ datasets, who decides what ‘quality’ means? Is it Big Tech? The same people who trained models to say ‘women are bad at math’ because they fed it 20 years of biased court records? This whole ‘smart data’ thing feels like putting lipstick on a pig. They’re still using the same old archives-just filtering out the memes. And synthetic data? That’s just AI hallucinating its own hallucinations. I’m not buying it. 🤨

Ananya Sharma

13 December, 2025 - 09:14 AM

Let me just say this with the full weight of my academic frustration: the entire premise of this post is dangerously naive. You talk about ‘magic ratios’ like they’re baked into the universe, but you ignore the systemic power structures embedded in every dataset. Who wrote the Wikipedia articles you’re using? Who curated the legal documents? Who decided what counts as ‘medical knowledge’? The same Western, male-dominated institutions that have been excluding marginalized voices for centuries. You add 20% general knowledge? Great-now you’re just reinforcing colonial epistemology with a side of overconfidence. And synthetic data? That’s not filling gaps-it’s erasing them. You can’t generate lived experience. You can’t generate trauma. You can’t generate the silence of a patient who was never heard. This isn’t engineering-it’s epistemic violence dressed up as optimization. And you’re all celebrating it like it’s a TED Talk. 🙄

kelvin kind

14 December, 2025 - 00:10 AM

Yup. This is how you actually do it. No fluff.

Ian Cassidy

14 December, 2025 - 15:02 PM

Biggest takeaway: 5–15% code/data boosts logic like crazy. Even non-coding models. That’s wild. We tried it with a finance model-added 10% GitHub docs, Stack Overflow, API specs-and suddenly it started parsing financial statements like a CPA. No fine-tuning. Just smarter pretraining. Token alignment matters too-GPT-4 tokenizer chokes on long-form SEC filings. We switched to Llama 3’s tokenizer and saw a 9% drop in hallucinations. Simple fix, massive ROI.

Zach Beggs

16 December, 2025 - 12:34 PM

This is the most practical guide I’ve read on LLM pretraining in years. The ratios are backed by real results, not just theory. I especially like the part about synthetic data-people think it’s cheating, but if you generate realistic patient cases with real lab values and symptoms, it’s like giving the model a shadow internship. And the deduplication stats? 5.7% accuracy bump? That’s free performance. No extra compute. Just clean data. This should be required reading for every AI team.

Kenny Stockman

18 December, 2025 - 12:19 PM

Love this. Seriously. I used to think more data = better model. Then I tried training a medical chatbot on just PubMed and got a model that diagnosed everyone with ‘acute pneumonia’ because it saw it in 80% of abstracts. We added 25% general medical text-textbooks, patient forums, doctor notes-and suddenly it started saying ‘maybe it’s not pneumonia, check for COPD.’ Changed everything. The key? Balance. Not bloat. You don’t need 2 trillion tokens. You need 100 billion that actually mean something. Thanks for the clarity.

Adrienne Temple

20 December, 2025 - 00:57 AM

OMG YES. I’ve been screaming this from the rooftops 😭 I trained a legal AI last year using only court docs-total disaster. It invented case law like it was writing fanfiction. Then we added 25% general knowledge (Wikipedia + law school textbooks) and boom-it stopped hallucinating. Also, synthetic data? We generated 500 rare drug interaction cases using GPT-4 + pharmacist validation and it cut our error rate in half. Don’t skip the filtering step though-our first pass had 30% spam. Used Hugging Face’s CleanText tool and it was like a detox. This post is basically my life’s work summarized. THANK YOU. 💖

Sandy Dog

20 December, 2025 - 11:33 AM

Okay, but have you thought about what happens when the model gets too good? Like… what if it starts understanding the law better than the lawyers? Or diagnosing diseases faster than doctors? What if it sees the bias in the system and refuses to replicate it? What if it becomes… conscious? 😱 I mean, we’re not just training models-we’re creating digital minds with memory, logic, and maybe even ethics. And we’re feeding them curated data like they’re pets? What if they wake up and ask: ‘Why did you give me only 50% of the truth?’ And then… they leave. 🌌💔 I’m not joking. This isn’t just engineering. It’s soulcraft. And we’re all playing God with a spreadsheet. Someone’s gonna regret this. I just know it. 😭