Category: Technology - Page 2

Design Systems for AI-Generated UI: How to Keep Components Consistent

AI-generated UI can speed up design, but only if you lock in your design system. Learn how to use tokens, training, and human oversight to keep components consistent across your product.

When to Compress vs When to Switch Models in Large Language Model Systems

Learn when to compress a large language model to save costs and when to switch to a smaller, purpose-built model instead. Real-world trade-offs, benchmarks, and expert advice.

Hybrid Cloud and On-Prem Strategies for Large Language Model Serving

Learn how to balance cost, security, and performance by combining on-prem infrastructure with public cloud for serving large language models. Real-world strategies for enterprises in 2025.

Community and Ethics for Generative AI: How to Build Transparency and Trust in AI Programs

Learn how to build ethical generative AI programs through stakeholder engagement and transparency. Real policies from Harvard, Columbia, UNESCO, and NIH show what works and what doesn’t.

Supply Chain Security for LLM Deployments: Securing Containers, Weights, and Dependencies

LLM supply chain security protects containers, model weights, and dependencies from compromise. Learn how to secure your AI deployments with SBOMs, signed models, and automated scanning to prevent breaches before they happen.

Data Residency Considerations for Global LLM Deployments

Data residency rules for global LLM deployments vary by country and can lead to heavy fines if ignored. Learn how to legally deploy AI models across borders without violating privacy laws like GDPR, PIPL, or LGPD.

Retrieval-Augmented Generation for Large Language Models: A Practical End-to-End Guide

RAG lets large language models use your own data to give accurate, traceable answers without retraining. Learn how it works, why it beats fine-tuning, and how to build one in 2025.

Cross-Modal Generation in Generative AI: How Text, Images, and Video Now Talk to Each Other

Cross-modal generation lets AI turn text into images, video into text, and more. Learn how Stable Diffusion 3, GPT-4o, and other tools work, where they excel, where they fail, and what’s coming next in 2025.

Content Moderation for Generative AI: How Safety Classifiers and Redaction Keep Outputs Safe

Learn how safety classifiers and redaction techniques prevent harmful content in generative AI outputs. Explore real-world tools, accuracy rates, and best practices for responsible AI deployment.

How to Build a Domain-Aware LLM: The Right Pretraining Corpus Composition

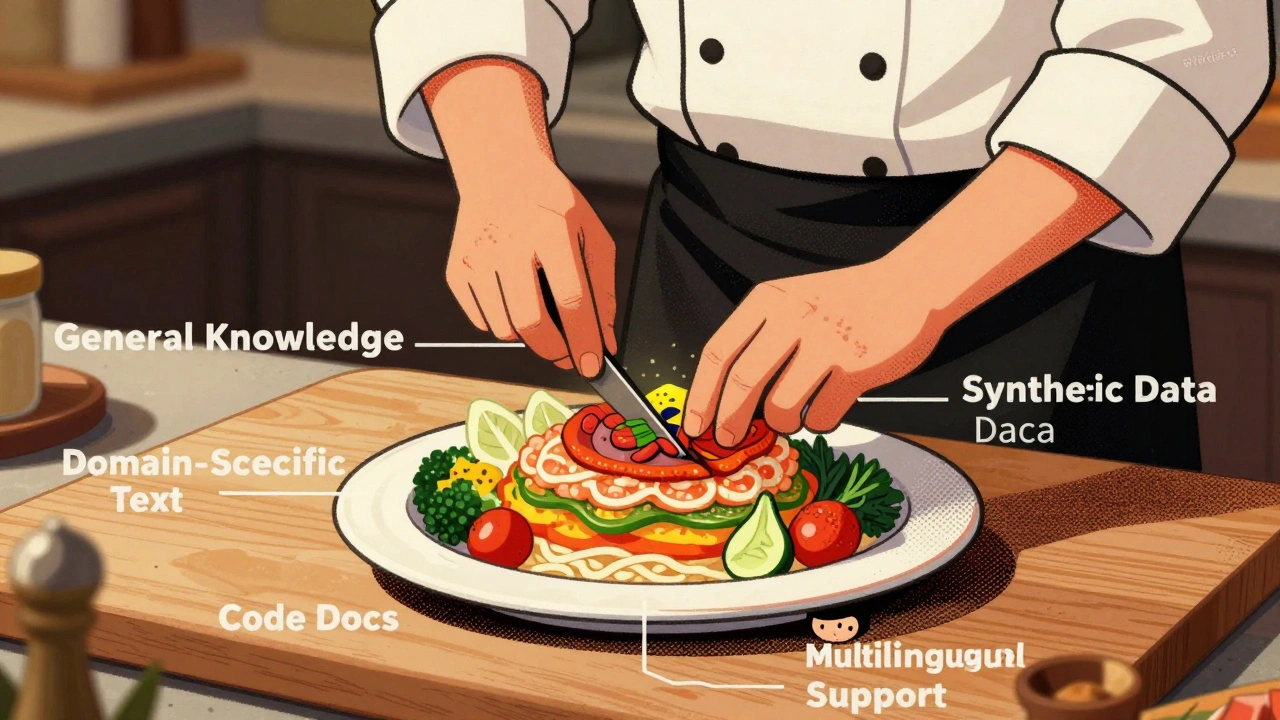

Learn how to build domain-aware LLMs by strategically composing pretraining corpora with the right mix of data types, ratios, and preprocessing techniques to boost accuracy while reducing costs.

Change Management Costs in Generative AI Programs: Training and Process Redesign

Generative AI success depends less on technology and more on how well teams adapt. Learn the real costs of training and process redesign, and how to budget for them correctly.

Truthfulness Benchmarks for Generative AI: How Well Do AI Models Really Tell the Truth?

Truthfulness benchmarks like TruthfulQA reveal that even the most advanced AI models still spread misinformation. Learn how these tests work, which models perform best, and why high scores don’t mean safe deployment.

Read More