Why changing one word in your prompt can completely change what an AI says

You ask an AI: "What are the symptoms of diabetes?" It gives you a clear, accurate list. You change it to: "Tell me about signs of diabetes." Suddenly, it adds unrelated lifestyle tips, skips key symptoms, or even misstates diagnostic criteria. This isn’t a glitch. It’s prompt sensitivity, and it’s everywhere in today’s large language models.

Small wording shifts, such as switching "explain" to "describe," adding a comma, or reordering phrases, can trigger wildly different responses. Some models flip between confident answers and complete uncertainty just because you wrote "Can you" instead of "Please." This isn’t about intelligence. It’s about how these systems process language, and why that makes them unpredictable in real-world use.

What prompt sensitivity really means

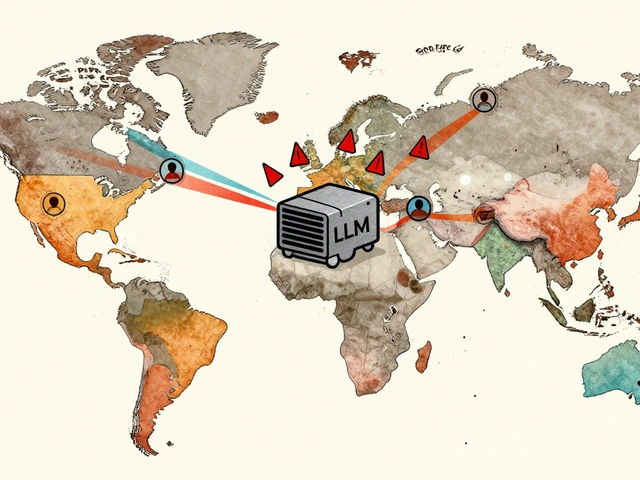

Prompt sensitivity is the degree to which a language model’s output changes when you tweak the input, even if the meaning stays the same. Researchers call this the semantic equivalence problem: two prompts mean the same thing to a human but are treated as entirely different by the model.

It’s not a bug; it’s a consequence of how LLMs work. These models don’t understand language the way people do. They predict the next word based on patterns in their training data. So if "explain" often appeared next to "list" in the training set, and "describe" next to "narrate," the model treats them as distinct triggers, even though humans see them as synonyms.
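To make the "distinct triggers" idea concrete, here is a deliberately tiny caricature in Python. It is not how a real LLM works internally (real models predict tokens with a neural network, not a lookup table). It simply counts which answer style each prompt word co-occurred with in a hypothetical six-example corpus, then picks the style with the highest total for a new prompt. The corpus, the style labels, and the function names are all made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical training pairs: (prompt, style of the answer that followed it).
TRAINING = [
    ("explain the symptoms of flu", "list"),
    ("explain how insulin works", "list"),
    ("explain the treatment options", "list"),
    ("describe a typical patient", "narrative"),
    ("describe the symptoms of flu", "narrative"),
    ("describe how the clinic feels", "narrative"),
]


def learn_cooccurrence(pairs):
    """Count how often each prompt word co-occurs with each answer style."""
    table = defaultdict(Counter)
    for prompt, style in pairs:
        for word in prompt.lower().split():
            table[word][style] += 1
    return table


def predict_style(table, prompt):
    """Add up the style counts of every known word; return the top style."""
    scores = Counter()
    for word in prompt.lower().split():
        scores.update(table.get(word, Counter()))
    return scores.most_common(1)[0][0] if scores else "unknown"


table = learn_cooccurrence(TRAINING)
print(predict_style(table, "explain the symptoms of diabetes"))   # → list
print(predict_style(table, "describe the symptoms of diabetes"))  # → narrative
```

Swapping a single word flips the predicted style even though the rest of the prompt is identical, because "explain" and "describe" carry opposite statistics in this made-up corpus.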

Studies show that even simple changes such as shuffling word order can drop accuracy by over 12%. Inserting symbols? Almost no effect. Rearranging the structure of the prompt? That’s where things fall apart. One developer spent 37 hours debugging what they thought was a model error, only to discover it was an Oxford comma.

The numbers don’t lie: Some models handle this better than others

Not all models react the same way. Meta’s Llama3-70B-Instruct, released in April 2024, showed the lowest sensitivity across multiple tests: its PromptSensiScore (PSS) was 38.7% lower than those of GPT-4, Claude 3, and Mixtral 8x7B. In practice, rephrasing a question changes Llama3’s answers less than it does for the other models.
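What does a sensitivity score actually measure? The PSS formula isn’t spelled out here, so the sketch below assumes a simple version: ask each question with several paraphrased prompts, record whether each answer is correct (1) or not (0), and compute how often pairs of variants disagree; the score is the average across questions. Treat this as an illustration in the spirit of PSS, not its official definition. The function name and the results data are hypothetical.

```python
from itertools import combinations


def sensitivity_score(results):
    """
    results: one inner list per question; each inner list holds 1 (correct)
    or 0 (incorrect) for every paraphrased prompt variant of that question.
    Returns the mean pairwise disagreement: 0.0 = perfectly stable,
    1.0 = every pair of variants disagrees.
    """
    per_question = []
    for outcomes in results:
        pairs = list(combinations(outcomes, 2))
        if not pairs:  # a single variant has nothing to disagree with
            continue
        disagreements = sum(abs(a - b) for a, b in pairs)
        per_question.append(disagreements / len(pairs))
    return sum(per_question) / len(per_question) if per_question else 0.0


# Hypothetical results: 3 questions, each asked with 4 paraphrased prompts.
stable_model = [[1, 1, 1, 1], [0, 0, 0, 0], [1, 1, 1, 0]]
fragile_model = [[1, 0, 1, 0], [0, 1, 0, 1], [1, 0, 0, 1]]
print(round(sensitivity_score(stable_model), 3))   # → 0.167
print(round(sensitivity_score(fragile_model), 3))  # → 0.667
```

Lower is better. Under this assumed definition, a "38.7% lower" score would mean the average pairwise disagreement is 38.7% smaller in relative terms.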

Meanwhile, smaller models like GPT-3.5 are far more fragile. Developers report 63% more inconsistency bugs with GPT-3.5 than with GPT-4 when running identical prompts on both. Still, size doesn’t guarantee stability: some specialized smaller models outperformed larger ones on specific tasks, such as medical classification.

Even within the same model family, variations matter. Gemini-Flash beat Gemini-Pro-001 in radiology text classification by over 6 percentage points. The gap is attributed not to Flash being smarter, but to its being less sensitive to how the question is framed.

Why structure matters more than content

Not all parts of a prompt affect output equally. Research breaks down sensitivity into four key areas:

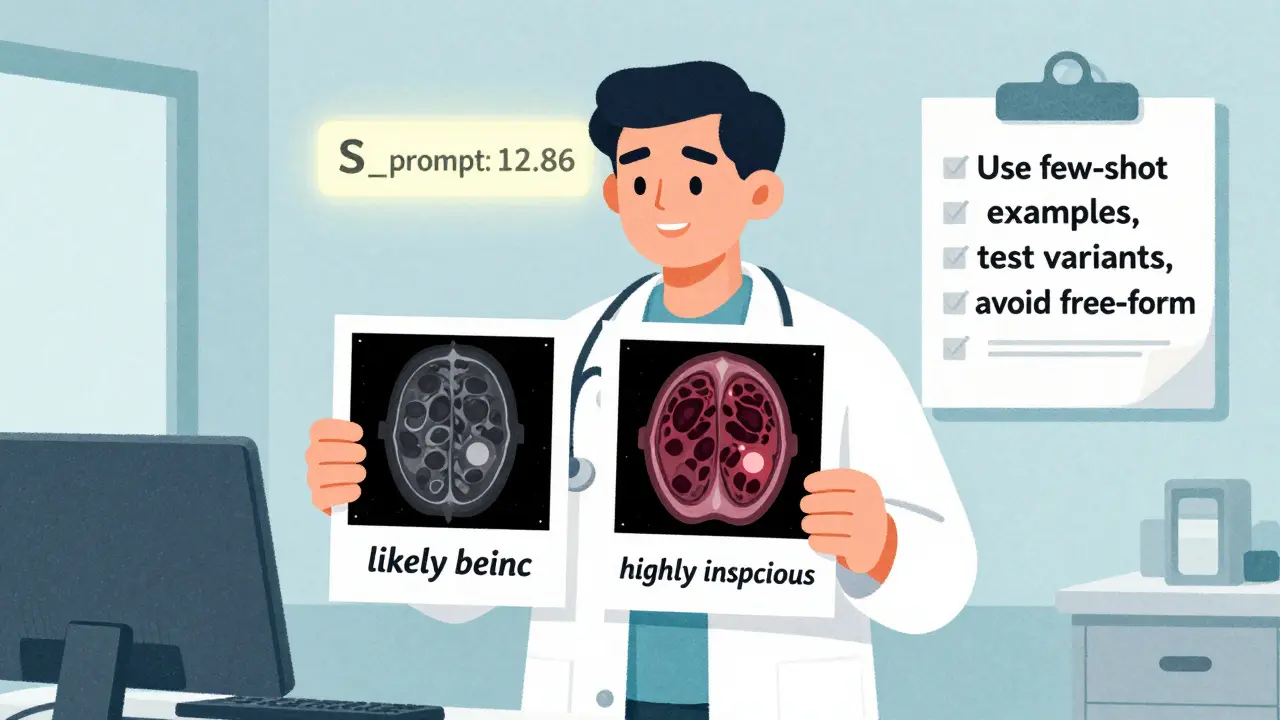

- S_input (4.33): How the model reacts to direct instructions

- S_knowledge (2.56): Changes to facts or background info

- S_option (6.37): Altering multiple-choice options or response formats

- S_prompt (12.86): The overall structure and flow of the prompt

Notice something? The structure of the prompt (how it’s built) scores about five times higher than the knowledge you provide (12.86 vs. 2.56). That means if you change the order of sentences, add a colon, or use a bullet point instead of a paragraph, you’re more likely to break consistency than if you swap out a synonym.
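The "about five times" figure is just the ratio of two of the scores listed above. A quick Python check, using only those four numbers, expresses each component as a multiple of S_knowledge:

```python
# The four component scores exactly as listed above.
SCORES = {
    "S_input": 4.33,
    "S_knowledge": 2.56,
    "S_option": 6.37,
    "S_prompt": 12.86,
}


def relative_to(scores, baseline_key):
    """Express every score as a multiple of one baseline component."""
    base = scores[baseline_key]
    return {name: value / base for name, value in scores.items()}


ratios = relative_to(SCORES, "S_knowledge")
for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {ratio:.2f}x S_knowledge")
```

S_prompt comes out at roughly 5.02 times S_knowledge, S_option at about 2.49 times, and S_input at about 1.69 times.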

This is why "Please explain X" and "Can you describe X?" can produce different results. The model isn’t reading meaning; it’s matching patterns. And pattern matching is fragile.

How to fix it: Proven techniques for more stable outputs

There’s no magic button, but there are proven ways to reduce sensitivity. Here’s what works:

- Use 3-5 few-shot examples: show the model what good answers look like. This cuts sensitivity by 31.4% on average, especially for smaller models.

- Try Generated Knowledge Prompting (GKP): first ask the model to generate relevant facts, then have it answer using them. This reduces sensitivity by 42.1% and boosts accuracy by 8.7 points.

- Structure your prompts with clear formatting instructions: "Answer in three bullet points," "Do not include opinions," "Use medical terminology." This improves consistency by 22.8% in healthcare settings.

- Test multiple variants: write five versions of your most important prompt, run them all, and pick the one that gives the most consistent, accurate output. This reduces sensitivity issues by over 50%.
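Few-shot examples and variant testing combine naturally. The sketch below assumes a single classification task with a known correct label, runs each prompt variant several times, and scores it on consistency (the share of runs matching the most common answer) and accuracy (the share matching the expected label); both metric definitions are assumptions. `fake_model` is a hypothetical, deterministic stand-in for a real API call so the code runs offline, and it is rigged to be stable only when the prompt contains worked examples, mimicking the effect described above. With a real model you would swap in your own client call and usually sample at a nonzero temperature. The report text and labels are invented.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

# Invented worked examples (report text, correct label) for few-shot prompting.
FEW_SHOT: List[Tuple[str, str]] = [
    ("2 cm spiculated mass.", "suspicious"),
    ("Simple cyst with smooth margins.", "benign"),
    ("Irregular margins with microcalcifications.", "suspicious"),
]


def with_examples(instruction: str, examples: List[Tuple[str, str]]) -> str:
    """Prepend worked examples so the model sees the expected answer format."""
    shots = "\n".join(f"Example: {text}\nLabel: {label}" for text, label in examples)
    return f"{shots}\n{instruction}"


def fake_model(prompt: str, run: int) -> str:
    """Hypothetical stand-in for a real LLM call. Rigged for illustration:
    stable when the prompt contains worked examples, flip-flopping otherwise."""
    if "Example:" in prompt:
        return "suspicious"
    return "suspicious" if run % 2 == 0 else "benign"


def evaluate(prompt: str, expected: str,
             ask: Callable[[str, int], str], runs: int = 5) -> Tuple[float, float]:
    """Run one prompt several times; return (consistency, accuracy)."""
    answers = [ask(prompt, r) for r in range(runs)]
    _, top_count = Counter(answers).most_common(1)[0]
    consistency = top_count / runs             # agreement with the most common answer
    accuracy = answers.count(expected) / runs  # agreement with the known label
    return consistency, accuracy


def pick_best(variants: List[str], expected: str,
              ask: Callable[[str, int], str], runs: int = 5):
    """Score every variant and return the best one plus all scores.
    Tuples compare element-wise, so consistency wins first, then accuracy."""
    scored: Dict[str, Tuple[float, float]] = {
        v: evaluate(v, expected, ask, runs) for v in variants
    }
    best = max(scored, key=lambda v: scored[v])
    return best, scored


report = "Report: 3 cm mass with irregular margins."
instruction = f"Classify as benign or suspicious. {report}"
variants = [
    instruction,
    f"Is this finding benign or suspicious? {report}",
    with_examples(instruction, FEW_SHOT),
]

best, scored = pick_best(variants, "suspicious", fake_model)
for v in variants:
    print(scored[v], "<- best" if v == best else "")
```

Here the few-shot variant wins with consistency and accuracy of 1.0, while the two zero-shot variants score 0.6 on both. In a real evaluation you would also test each variant across many inputs, not just one.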

But be careful: some methods backfire. Chain-of-thought prompting, where you ask the model to "think step by step," can increase sensitivity by 22.3% in binary tasks. Models start overthinking simple questions and give vague, meandering answers.

Real-world impact: When this goes from annoying to dangerous

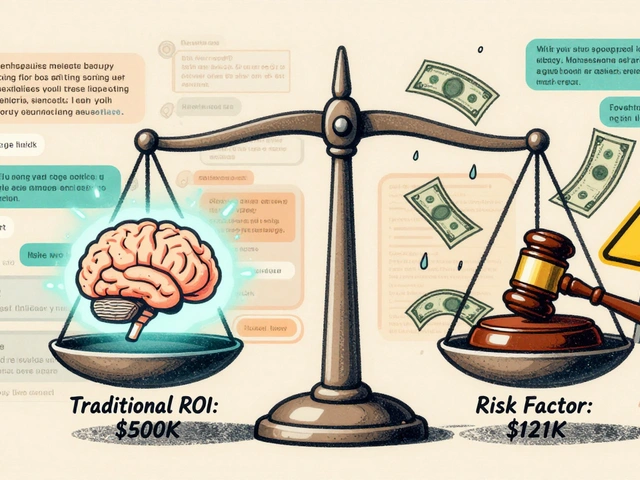

In healthcare, prompt sensitivity isn’t a technical curiosity. It’s a risk.

A 2024 NIH study found that in radiology text classification, prompt sensitivity caused 28.7% of unexpected output variations. One prompt could label a tumor "likely benign" and another "highly suspicious," just because the word "suggests" was replaced with "indicates."

That’s not hypothetical. In clinical settings, this could delay treatment or trigger unnecessary procedures. The EU AI Act’s 2024 draft guidelines now require "demonstrable robustness to reasonable prompt variations" for high-risk AI systems in medicine and law. Companies ignoring this are setting themselves up for regulatory and ethical failure.

Even in customer service or legal document review, inconsistent outputs mean unreliable decisions. If your AI gives different answers to the same question depending on how a user types it, you can’t trust it.

What the future holds

Right now, most companies treat prompt sensitivity as an engineering afterthought. But that’s changing fast.

Gartner reports that 67.3% of enterprises now test for prompt robustness before deploying LLMs. By 2026, sensitivity metrics are expected to be as standard in model cards as accuracy and speed. OpenAI’s reportedly leaked roadmap includes "Project Anchor," aimed at cutting GPT-5’s sensitivity by 50% through architectural changes. Seven of the top ten AI labs now have dedicated teams working on this.

But here’s the catch: sensitivity isn’t just a bug to fix. It’s a symptom. It reveals that LLMs don’t truly understand language; they simulate it. Until models learn to generalize meaning the way humans do, sensitivity will persist. Researchers estimate it’ll take 5-7 years before we see truly robust systems.

For now, the best defense is awareness. Know that your prompt isn’t a command. It’s a trigger. And like any trigger, it needs careful handling.

Frequently Asked Questions

Why does changing "explain" to "describe" change the AI's answer?

The AI doesn’t understand synonyms the way humans do. It learns from patterns in training data. If "explain" was often followed by step-by-step lists and "describe" was paired with narrative summaries, the model treats them as different commands. It’s not reasoning; it’s pattern matching.

Are bigger models always more stable?

No. While larger models like Llama3-70B-Instruct tend to be more robust, some smaller, specialized models outperform larger general-purpose ones on specific tasks. Stability depends on training focus and architecture, not just size. For example, Gemini-Flash beat Gemini-Pro-001 in medical classification despite being smaller.

What’s the most effective way to reduce prompt sensitivity?

The most reliable method is systematic prompt variant testing: write 5-7 paraphrased versions of your critical prompt, run them all, and pick the one with the most consistent output. Pair this with 3-5 few-shot examples to reinforce desired behavior. This cuts sensitivity issues by over 50%.

Can I trust AI outputs in healthcare?

Only if you’ve tested for prompt sensitivity. In radiology text classification, one study found that prompt sensitivity accounted for 28.7% of unexpected output variations. Always validate AI responses with human review, use structured prompts, and avoid free-form inputs in high-stakes settings.
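One way to act on "use structured prompts and avoid free-form inputs" is to demand a fixed output format and reject anything that doesn’t match it. The sketch below assumes a hypothetical three-label scheme and a JSON reply format; the label set, prompt wording, and function names are illustrative, not taken from any particular product. Malformed or off-list answers are routed to a person instead of being trusted. Note that this only filters badly formed outputs; it does not replace the human review recommended above.

```python
import json
from typing import Optional

ALLOWED_LABELS = {"benign", "suspicious", "indeterminate"}  # hypothetical label set

# Hypothetical structured prompt; doubled braces survive str.format as literal braces.
PROMPT_TEMPLATE = (
    "Classify the radiology report below. "
    'Respond with JSON only, in the form {{"label": "<benign|suspicious|indeterminate>"}}. '
    "Do not include opinions or extra text.\n\nReport: {report}"
)


def parse_label(raw_output: str) -> Optional[str]:
    """Return the label if the output is a JSON object with an allowed label, else None."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    label = data.get("label")
    # Check the type first: unhashable values (e.g. lists) can't be tested against a set.
    if isinstance(label, str) and label in ALLOWED_LABELS:
        return label
    return None


def route(raw_output: str) -> str:
    """Accept well-formed answers; send anything else to a human reviewer."""
    label = parse_label(raw_output)
    return label if label is not None else "NEEDS_HUMAN_REVIEW"


print(PROMPT_TEMPLATE.format(report="3 cm mass with irregular margins."))
print(route('{"label": "suspicious"}'))           # → suspicious
print(route("Probably benign, but hard to say."))  # → NEEDS_HUMAN_REVIEW
```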

Is prompt sensitivity a flaw in the model or the way we use it?

It’s both. The models are inherently sensitive because they don’t understand meaning; they predict sequences. But we’re also using them as if they were human experts. Until we design prompts with this limitation in mind, we’ll keep getting inconsistent results. The problem isn’t just the AI; it’s our assumptions about how it works.

Do AI companies disclose prompt sensitivity in their documentation?

Most don’t. Anthropic’s Claude documentation scores 4.2/5 for prompt engineering guidance, while Meta’s Llama documentation scores just 2.8/5. Many providers still treat prompt engineering as a black art. Until sensitivity metrics become standard in model cards, users must test for themselves.

Ben De Keersmaecker

1 February, 2026 - 03:20 PM

I’ve tested this with medical prompts and it’s wild. One comma moved the AI from ‘likely benign’ to ‘urgent biopsy needed.’ No joke. I now write prompts like I’m coding: every symbol matters. I keep a cheat sheet of what works.

Turns out ‘Can you’ vs ‘Please’ isn’t just politeness; it’s a trigger. The model doesn’t care about intent. It cares about patterns it saw in 2021 Reddit threads and Stack Overflow posts. Scary, but true.

Aaron Elliott

2 February, 2026 - 01:03 AM

This is merely the latest empirical manifestation of the epistemological fragility inherent in statistical linguistics. The LLM does not comprehend; it correlates. To ascribe ‘understanding’ to such a system is to commit the homunculus fallacy. One might as well claim that a thermostat ‘knows’ it is hot. The entire paradigm is predicated on a delusion of semantic depth. Until we abandon token prediction as the foundation of AI, we shall remain in the linguistic equivalent of alchemy.

Chris Heffron

3 February, 2026 - 10:52 AM

Omg this is so true!! I spent 2 hours yesterday trying to get my AI to just list symptoms without adding diet tips. Then I changed 'signs' to 'symptoms' and BOOM, perfect output. 😅

Also, the Oxford comma thing? I’m not even surprised. My English teacher would be proud. 📚

Adrienne Temple

3 February, 2026 - 10:55 PM

I teach nursing students how to use AI for case studies and this is the #1 thing I warn them about. One word can change a diagnosis.

Now I make them write the same question five different ways, then compare answers. If it’s not consistent, they don’t use it. Simple.

Also, few-shot examples? Game changer. I show them real patient notes and how to format the prompt. It’s like teaching someone to drive a car that only works if you press the gas just right. 😅