Running a large language model (LLM) like Llama-2 or GPT-3 isn’t just expensive; it’s unsustainable at scale. Serving 1,000 queries can cost over a dollar if you’re using a full-precision 70-billion-parameter model on cloud GPUs. But what if you could cut that cost to pennies without losing much accuracy? That’s where model compression comes in. Two techniques, quantization and knowledge distillation, are turning LLMs from luxury tools into affordable, scalable applications. This isn’t theory. It’s what companies are using right now to deploy AI on phones, edge devices, and budget cloud servers.

Why LLMs Are So Costly to Run

Large language models aren’t just big; they’re massive. GPT-3 has 175 billion parameters. Each parameter is typically stored as a 32-bit floating-point number, or 4 bytes. That’s 700 gigabytes of memory just to load the model. And every time someone asks a question, the system has to perform trillions of calculations. On cloud A100 GPUs, running one of these models can cost $0.50 to $1.20 per 1,000 queries. For a chatbot handling 10 million requests a month? That’s $5,000 to $12,000 in inference costs alone.

Most of that cost comes from memory bandwidth and compute power. The model has to load weights into memory constantly. The more bits you use to represent each weight, the more data you’re moving around. That’s where compression techniques step in. They don’t change what the model does; they change how it stores and computes.

Quantization: Shrinking the Numbers
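
To make the link between bit width and memory concrete, here is a minimal Python sketch of the arithmetic used above (parameters × bits ÷ 8). The function name `weight_memory_gb` is illustrative, and the estimate covers the weights only; it ignores activations, the KV cache, and any per-block quantization metadata.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


GPT3_PARAMS = 175e9  # parameter count quoted in the text

# Compare full precision with progressively narrower weight formats.
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(GPT3_PARAMS, bits):7.1f} GB")
```

At 32 bits this reproduces the 700 GB figure for GPT-3, and each halving of the bit width halves the footprint.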

Quantization is the simplest and fastest way to reduce cost. Instead of storing weights as 32-bit floats, you store them as 8-bit, 4-bit, or even 2-bit integers. Think of it like converting a high-resolution photo to a lower-resolution one. You lose some detail, but it still looks fine for everyday use. An 8-bit quantized model (INT8) cuts memory use by 4x relative to 32-bit. A 4-bit model (INT4) cuts it by 8x. And the speedup? On NVIDIA’s Ampere or Apple’s M-series chips, you get 2x to 3x faster inference. That’s not theoretical. Google’s on-device Gemma models run entirely on 8-bit quantized weights. So do Meta’s Llama-2 models deployed on edge devices.

But there’s a catch. Going below 4-bit causes accuracy to drop sharply. At 2-bit, models start forgetting rare words, making mistakes on complex reasoning, and failing at tasks like translation or code generation. A 2025 Nature study found 2-bit quantization caused a 12.7% accuracy drop on indoor localization tasks. AWS users reported 14.2% more errors on logical reasoning tasks compared to 8-bit models.

The fix? Use SmoothQuant. This technique shifts the quantization difficulty caused by outliers in the activations (the dynamic inputs) onto the static weights, which are easier to quantize. That boosts 4-bit model accuracy by 5.2% on average. Most engineers start with 8-bit, test performance, then go lower only if needed.

Distillation: Training a Smaller Model to Think Like a Giant

Quantization shrinks the model. Distillation replaces it entirely. In knowledge distillation, you take a huge “teacher” model (say, Llama-2 70B) and train a tiny “student” model (a 7B or even 1B model) to mimic its behavior. The student doesn’t learn from raw data. It learns from the teacher’s outputs: the probabilities, the confidence levels, the patterns of reasoning. Amazon researchers showed a distilled BART model could shrink to 1/28th the size of the original while keeping 97% of its question-answering accuracy. A fintech startup cut inference costs from $1.20 to $0.07 per 1,000 queries by combining distillation and quantization on Llama-2 7B.

The catch? Training the student is expensive. Gemma Team et al. (2024) needed 8 trillion tokens to train a distilled version of Gemma-2 9B. That’s the same amount of data used to pretrain the original model. You need powerful GPUs, lots of time, and a good teacher-student match.

Also, distillation doesn’t always work. According to Hugging Face users, 41% struggle to replicate teacher performance when the student is under 1 billion parameters. The student might copy surface patterns but miss deeper logic. Temperature scaling, which adjusts how “soft” the teacher’s outputs are during training, helps, but it’s not a magic fix.
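
The idea of learning from the teacher’s softened probabilities can be sketched in a few lines of NumPy. This is a minimal illustration, not any particular team’s training recipe: the function names, the example logits, and the T² scaling (a common convention from the original knowledge-distillation paper by Hinton et al.) are assumptions made for demonstration.

```python
import numpy as np


def softmax(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """Softmax over the last axis; a higher temperature gives a softer distribution."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = np.clip(softmax(teacher_logits, temperature), 1e-12, None)
    q = np.clip(softmax(student_logits, temperature), 1e-12, None)
    kl = np.sum(p * (np.log(p) - np.log(q)), axis=-1)
    return float(temperature ** 2 * kl.mean())


# Hypothetical logits for one token position over a 3-word vocabulary.
teacher = np.array([[4.0, 1.0, 0.5]])
close_student = np.array([[3.5, 1.2, 0.4]])
far_student = np.array([[0.5, 1.0, 4.0]])

print("close student loss:", distillation_loss(close_student, teacher))
print("far student loss:  ", distillation_loss(far_student, teacher))
```

A student whose outputs track the teacher’s gets a loss near zero, while one that ranks the tokens differently is penalized. In real training this term is usually combined with the ordinary cross-entropy loss on ground-truth labels.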

Hybrid Compression: The Real Game-Changer

The best results don’t come from one technique. They come from stacking them. Start with pruning: remove the least important weights. Then apply quantization to shrink what’s left. Finally, distill the compressed model into an even smaller version.

Amazon’s 2022 research showed this combo reduced BART model size by 95% with no loss in long-form QA accuracy. Google’s 2024 Gemma-2 release used distillation-aware quantization to hit 5.3x size reduction with 99.1% of original performance on MMLU benchmarks. And then there’s BitDistiller, a 2024 method that combines self-distillation with quantization-aware training. It boosted sub-4-bit model accuracy by 7.3% over plain quantization. That’s huge. It means you can run a 3-bit model with near-4-bit performance.

This hybrid approach is now standard in production. GitHub surveys show 78% of ML engineers use 8-bit quantization as their default. And 63% of practitioners call it “essential for cost-effective inference.”

Hardware Matters More Than You Think

You can quantize a model all you want, but if your hardware doesn’t support low-precision math, you won’t see the speedup. Older CPUs? Forget it. They can’t handle INT8 or INT4 efficiently. You’ll get the smaller model size, but no faster inference. That’s why Apple’s M-series chips and NVIDIA’s Tensor Cores dominate. They have dedicated circuits for 8-bit and 4-bit operations. NVIDIA’s TensorRT-LLM library is the market leader in GPU quantization, with 58% adoption. Hugging Face’s Optimum library leads in distillation tools, used by 43% of developers. If you’re deploying on the cloud, stick with NVIDIA A100s or H100s. If you’re targeting mobile or edge, Apple Silicon or Qualcomm’s latest AI chips are your best bet.

When to Use Which Technique

Not every compression method fits every use case.

- Use quantization if you need fast, low-effort deployment. Ideal for chatbots, real-time assistants, or mobile apps where you’re updating a model you already have. Start with 8-bit. Only go lower if you’ve tested accuracy on your data.

- Use distillation if you’re building a new product and can afford the upfront training cost. Perfect for domain-specific models, such as a medical chatbot distilled from a general LLM. You get a lean, custom model that’s cheaper to run forever.

- Use hybrid compression if you’re scaling to millions of users. Start with pruning, quantize to 4-bit, then distill into a 1B-parameter model. This is what startups and enterprises are doing to keep costs under $0.10 per 1,000 queries.

The Limits of Compression

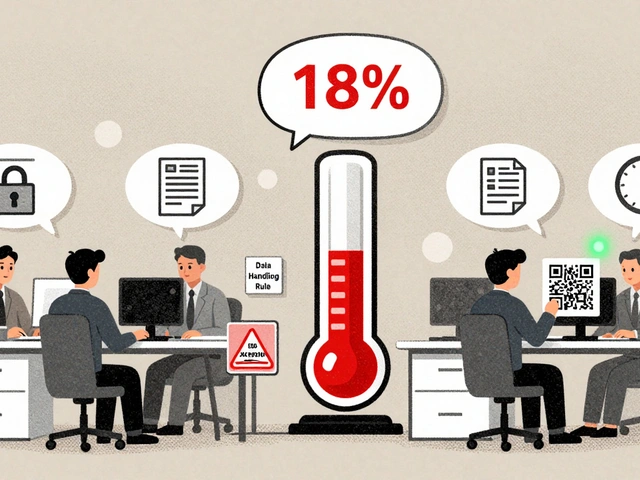

There’s a wall. Below 4-bit quantization, models start breaking in subtle ways. Stanford’s Percy Liang found that at 2-bit, models lose 18.5% accuracy on low-frequency words. They can’t handle rare names, technical jargon, or niche syntax. That’s fine for casual chat. It’s disastrous for legal, medical, or financial applications. The CRFM at Stanford warns that “beyond 4-bit, models increasingly fail on complex reasoning tasks requiring high numerical precision.” That’s why most commercial deployments stick to 4-bit to 8-bit precision. The sweet spot? 8-bit quantization plus distillation. You get a 5x to 10x cost reduction with minimal accuracy loss.

What’s Next: Automated Compression

The next wave isn’t manual tweaking. It’s automation. Microsoft’s 2025 roadmap includes an “Adaptive Compression Engine” that analyzes each transformer layer and picks the best compression level: some layers get 8-bit, others 4-bit, some stay full precision. Google and Meta are building similar systems. Startups like OctoML are already offering automated pipelines: upload your model, pick your target device, and get a compressed version with accuracy guarantees. This will make compression as easy as updating a library.

Final Takeaway: Compression Is No Longer Optional

Model compression isn’t a niche research topic anymore. It’s a business requirement. Gartner predicts the market will hit $4.7 billion by 2026. Companies that ignore it will pay 5x more for inference. Those that master it will deploy AI everywhere: on phones, in factories, on satellites. Start simple. Quantize your model to 8-bit. Test it on real user data. If performance holds, try distillation. If you’re serious about scaling, combine both. The math is clear: smaller models mean lower costs, faster responses, and wider reach. The only question is: are you ready to shrink your LLMs, or keep paying the price?

What’s the difference between quantization and distillation?

Quantization reduces the precision of model weights, from 32-bit floats to 8-bit or 4-bit integers, making the model smaller and faster without changing its structure. Distillation trains a smaller model to mimic the behavior of a larger one, effectively replacing the big model with a leaner copy. Quantization is quick and easy; distillation is powerful but requires heavy training.
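
The quantization half of that answer can be illustrated with a short NumPy sketch. It uses symmetric per-tensor quantization, one of the simplest schemes; production libraries typically use per-channel or per-block scales and pack two 4-bit values into each byte. The function names and the random weight matrix are assumptions for illustration.

```python
import numpy as np


def quantize_symmetric(weights: np.ndarray, bits: int = 8):
    """Map float weights to signed integers using one scale for the whole tensor.

    Assumes the tensor has at least one nonzero value (otherwise the scale is 0).
    """
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    scale = np.abs(weights).max() / qmax
    q = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int8)
    return q, scale  # 4-bit values are held in int8 here; bit-packing is omitted


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float weights from the integers and the scale."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.02, size=(256, 256)).astype(np.float32)  # stand-in weight matrix

for bits in (8, 4):
    q, scale = quantize_symmetric(w, bits)
    err = np.abs(w - dequantize(q, scale)).mean()
    print(f"{bits}-bit: mean absolute rounding error = {err:.6f}")
```

The rounding error grows as the bit width shrinks, which is the mechanism behind the accuracy loss at very low precision.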

Can I use quantization on any hardware?

No. Older CPUs and GPUs without Tensor Cores can’t efficiently run low-precision models. You need hardware that supports INT8 or INT4 arithmetic, such as NVIDIA A100/H100, Apple M-series chips, or Qualcomm’s latest AI accelerators. Without it, you’ll get a smaller model but no speed gain.

Is 4-bit quantization safe for production?

It depends. For simple tasks like chat or summarization, 4-bit works fine with techniques like SmoothQuant. For complex reasoning, legal, or medical use cases, 4-bit can cause accuracy drops of 5-10%. Most enterprises stick to 8-bit for reliability and use 4-bit only after rigorous testing.
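
For readers curious how SmoothQuant makes low-bit models easier to quantize, here is a minimal NumPy sketch of its smoothing step as described in the SmoothQuant paper: each input channel of the activations is divided by a factor s, and the matching weight row is multiplied by the same factor, so the layer’s output is unchanged. The matrix shapes, the injected outlier channel, and alpha = 0.5 are illustrative assumptions.

```python
import numpy as np


def smooth(X: np.ndarray, W: np.ndarray, alpha: float = 0.5):
    """Shift activation outliers into the weights without changing X @ W.

    X: (tokens, in_features) activations; W: (in_features, out_features) weights.
    """
    act_max = np.abs(X).max(axis=0)  # largest activation magnitude per input channel
    w_max = np.abs(W).max(axis=1)    # largest weight magnitude per input channel
    s = act_max ** alpha / w_max ** (1 - alpha)
    return X / s, W * s[:, None], s


rng = np.random.default_rng(0)
X = rng.normal(size=(32, 8))
X[:, 3] *= 50.0  # simulate one outlier activation channel
W = rng.normal(size=(8, 4))

X_s, W_s, s = smooth(X, W)

print("output unchanged:", np.allclose(X @ W, X_s @ W_s))
print("activation range before:", np.abs(X).max())
print("activation range after: ", np.abs(X_s).max())
```

After smoothing, the activations span a much narrower range, so a single quantization scale wastes fewer levels on the outlier channel. The weights absorb the difference, and they can be quantized offline.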

How much can I save with model compression?

A typical LLM can go from $1.20 to $0.07 per 1,000 queries with combined quantization and distillation. That’s a 94% cost reduction. Memory usage drops 8-10x, and inference speed improves 2-3x on compatible hardware. For high-volume apps, that’s tens of thousands in monthly savings.
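
The savings are easy to check for your own traffic. The sketch below assumes a flat per-1,000-query price and reuses the 10-million-queries-per-month chatbot from earlier in the article; the function and variable names are illustrative.

```python
def monthly_cost(queries_per_month: int, cost_per_1k_queries: float) -> float:
    """Inference spend for one month at a flat per-1,000-query price."""
    return queries_per_month / 1000 * cost_per_1k_queries


QUERIES = 10_000_000  # monthly volume from the chatbot example earlier in the article

before = monthly_cost(QUERIES, 1.20)  # uncompressed price quoted in the text
after = monthly_cost(QUERIES, 0.07)   # quantized + distilled price quoted in the text
reduction = 1 - after / before

print(f"before: ${before:,.0f}/month")
print(f"after:  ${after:,.0f}/month")
print(f"saved:  ${before - after:,.0f}/month ({reduction:.0%} reduction)")
```

At this volume the saving is roughly $11,300 a month. It scales linearly with traffic, so higher-volume apps reach the tens of thousands mentioned above.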

Why isn’t everyone using distillation if it’s so effective?

Because training the student model is expensive. It can take compute on the scale of the original pretraining run: the distilled Gemma-2 9B cited above consumed 8 trillion tokens. For most teams, that’s not feasible unless they’re building a dedicated product. Quantization is faster and cheaper to implement, so it’s the default. Distillation is reserved for high-value, long-term deployments.

What tools should I use to compress my LLM?

For quantization: NVIDIA’s TensorRT-LLM (for GPUs) or Hugging Face’s Optimum with bitsandbytes (for CPU/GPU). For distillation: Hugging Face’s Transformers library with custom training scripts. For automation: Try OctoML or NVIDIA’s NeMo framework. Start with 8-bit quantization using bitsandbytes. It’s the easiest entry point.

Jeremy Chick

17 December, 2025 - 05:35 AM

Bro, I just quantized my Llama-2 to 4-bit on my M2 Mac and it’s literally faster than my coffee maker. 94% cost cut? More like 99% if you ditch the cloud entirely. Why are people still paying $1.20 per 1k queries when your phone can run a 3-bit model better than your startup’s AWS bill? The real AI revolution isn’t in the models, it’s in the hardware you refuse to upgrade.

Sagar Malik

18 December, 2025 - 23:36 PM

Let me be blunt: this entire ‘compression’ narrative is a corporate psyop. They don’t want you to run LLMs locally; they want you dependent on their proprietary TensorRT-LLM pipelines and H100 clusters. Quantization? It’s just obfuscation of the model’s true cognitive architecture. The 2-bit accuracy drop isn’t a bug, it’s a feature. They’re deliberately degrading performance to justify ‘enterprise-grade’ inferencing subscriptions. And don’t get me started on distillation: it’s digital plagiarism with a PhD. The real cost isn’t compute, it’s the erosion of model integrity. They’re not saving money. They’re selling control.

Seraphina Nero

20 December, 2025 - 04:22 AM

I tried 8-bit quantization on my chatbot and honestly? My users didn’t notice a difference. They just said it was faster. I didn’t even need to change the code, just swapped in bitsandbytes and boom, my bill dropped from $800 to $80/month. If you’re scared of losing accuracy, start with 8-bit. It’s like upgrading from SD to HD: nobody complains about the picture being worse.

Megan Ellaby

20 December, 2025 - 15:39 PM

wait so if i distill a 70b model into a 1b one, does that mean my little model is basically a ghost of the big one? like… does it still ‘remember’ things? or is it just faking it? also i tried the hugging face optimum thing and it kept crashing on my laptop 😅 any tips? maybe i’m doing it wrong?

Rahul U.

21 December, 2025 - 11:01 AM

Great breakdown! 🙌 I’ve been using 8-bit + distillation for our customer support bot and it’s been a game-changer: costs dropped from $1.10 to $0.09 per 1k queries. Just make sure your teacher model is fine-tuned on domain data first. Also, if you’re on a budget, use a single A10G GPU, it works great with Optimum + bitsandbytes. And yes, 4-bit is risky unless you’re doing casual chat. For medical/legal? Stick to 8-bit. 💡