Best PHP AI Scripts for LLM Integration and Automation

When you need to bring large language models (LLMs), AI systems that understand and generate human-like text, into a PHP app, the right PHP AI scripts turn your backend into an intelligent engine that can reason over text and respond like a human assistant, whether you're building chatbots, processing documents, or automating customer support. You don’t need to be an AI researcher. You just need clean, tested code that talks to OpenAI, Anthropic, or open-source models without breaking.

Real projects use RAG (retrieval-augmented generation), a method that lets LLMs pull answers from your own data instead of guessing. RAG pipelines usually rely on vector databases, which store text snippets as numeric embeddings and retrieve them by meaning rather than by keyword. Other projects use function calling, which lets an LLM request real actions, such as fetching orders or sending emails, that your code then executes. These aren’t theory: they’re in production, cutting support tickets and boosting accuracy. And they all start with PHP code that just works.
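
To make "retrieve by meaning" concrete, here is a minimal sketch of the retrieval step of RAG in plain PHP (7.4 or later). It assumes embeddings have already been produced by some embedding model; the in-memory `$store`, the toy 3-dimensional vectors, and the helper names `cosineSimilarity`, `retrieveTopK`, and `buildRagPrompt` are hypothetical stand-ins for a real vector database and embedding API, not any particular library.

```php
<?php
declare(strict_types=1);

// Cosine similarity between two equal-length embedding vectors.
function cosineSimilarity(array $a, array $b): float
{
    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;
    foreach ($a as $i => $value) {
        $dot += $value * $b[$i];
        $normA += $value ** 2;
        $normB += $b[$i] ** 2;
    }
    if ($normA == 0.0 || $normB == 0.0) {
        return 0.0; // avoid division by zero for all-zero vectors
    }
    return $dot / (sqrt($normA) * sqrt($normB));
}

/**
 * Return the $k snippets whose embeddings are closest in meaning to the query.
 * $store is a hypothetical in-memory stand-in for a vector database:
 * a list of ['text' => string, 'embedding' => float[]] entries.
 */
function retrieveTopK(array $queryEmbedding, array $store, int $k = 3): array
{
    $scored = [];
    foreach ($store as $doc) {
        $scored[] = [
            'text'  => $doc['text'],
            'score' => cosineSimilarity($queryEmbedding, $doc['embedding']),
        ];
    }
    // Sort by similarity, highest first.
    usort($scored, fn(array $x, array $y): int => $y['score'] <=> $x['score']);
    return array_slice($scored, 0, $k);
}

// Build a grounded prompt that tells the model to answer only from retrieved context.
function buildRagPrompt(string $question, array $snippets): string
{
    $context = implode("\n", array_map(fn(array $s): string => '- ' . $s['text'], $snippets));
    return "Answer using only the context below. If the answer is not there, say so.\n\n"
        . "Context:\n{$context}\n\nQuestion: {$question}";
}

// Toy embeddings for illustration only; real ones come from an embedding model.
$store = [
    ['text' => 'Refunds are processed within 5 business days.', 'embedding' => [0.9, 0.1, 0.0]],
    ['text' => 'Our office is closed on public holidays.',      'embedding' => [0.0, 0.2, 0.9]],
    ['text' => 'Refund requests need an order number.',         'embedding' => [0.8, 0.3, 0.1]],
];
$query = [0.85, 0.2, 0.05]; // pretend embedding of "How do refunds work?"

$top = retrieveTopK($query, $store, 2);
echo buildRagPrompt('How do refunds work?', $top), PHP_EOL;
```

In production, the linear scan in `retrieveTopK` would be replaced by your vector database's own similarity search, and the prompt returned by `buildRagPrompt` would be sent to whichever LLM API you use.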

Below, you’ll find the most practical scripts: open-source, premium, and ready to deploy. No fluff. Just working examples that connect PHP to AI, handle costs, keep data safe, and scale without headaches.

Human-in-the-Loop Operations for Generative AI: Review, Approval, and Exceptions

Human-in-the-loop operations for generative AI ensure AI outputs are reviewed, approved, and corrected by people before deployment. Learn how top companies use structured workflows to balance speed, safety, and compliance.

Human-in-the-Loop Review for Generative AI: Catching Errors Before Users See Them

Human-in-the-loop review catches AI hallucinations before users see them, reducing errors by up to 73%. Learn how top companies use confidence scoring, domain experts, and smart workflows to prevent costly mistakes.

Human-in-the-Loop Review for Generative AI: Catching Errors Before Users See Them

Human-in-the-loop review catches dangerous AI hallucinations before users see them. Learn how it works, where it saves money and lives, and why automated filters alone aren't enough.

Scaling for Reasoning: How Thinking Tokens Are Rewriting LLM Performance Rules

Thinking tokens are transforming how LLMs reason by spending more compute at inference time. Unlike traditional scaling, they boost accuracy on math and logic tasks without retraining, but at a high compute cost.

Measuring Hallucination Rate in Production LLM Systems: Key Metrics and Real-World Dashboards

Learn how to measure hallucination rates in production LLM systems using real-world metrics such as semantic entropy and evaluation frameworks such as RAGAS. Discover what works, what doesn’t, and how top companies are reducing factuality risks in 2025.

Why Multimodality Is the Next Big Leap in Generative AI

Multimodal AI combines text, images, audio, and video to understand context as humans do, making generative AI smarter, faster, and more accurate than text-only systems. Here's how it's already changing healthcare, customer service, and marketing.

Code Ownership Models for Vibe-Coded Repos: Avoiding Orphaned Modules

Vibe coding with AI tools like GitHub Copilot is speeding up development, but it is also leaving behind orphaned modules that no one understands or owns. Learn the three proven ownership models that prevent production disasters and how to enforce them today.

Threat Modeling for Vibe-Coded Applications: A Practical Workshop Guide

Vibe coding speeds up development but introduces serious security risks. This guide shows how to run a lightweight threat modeling workshop to catch AI-generated flaws before they reach production.

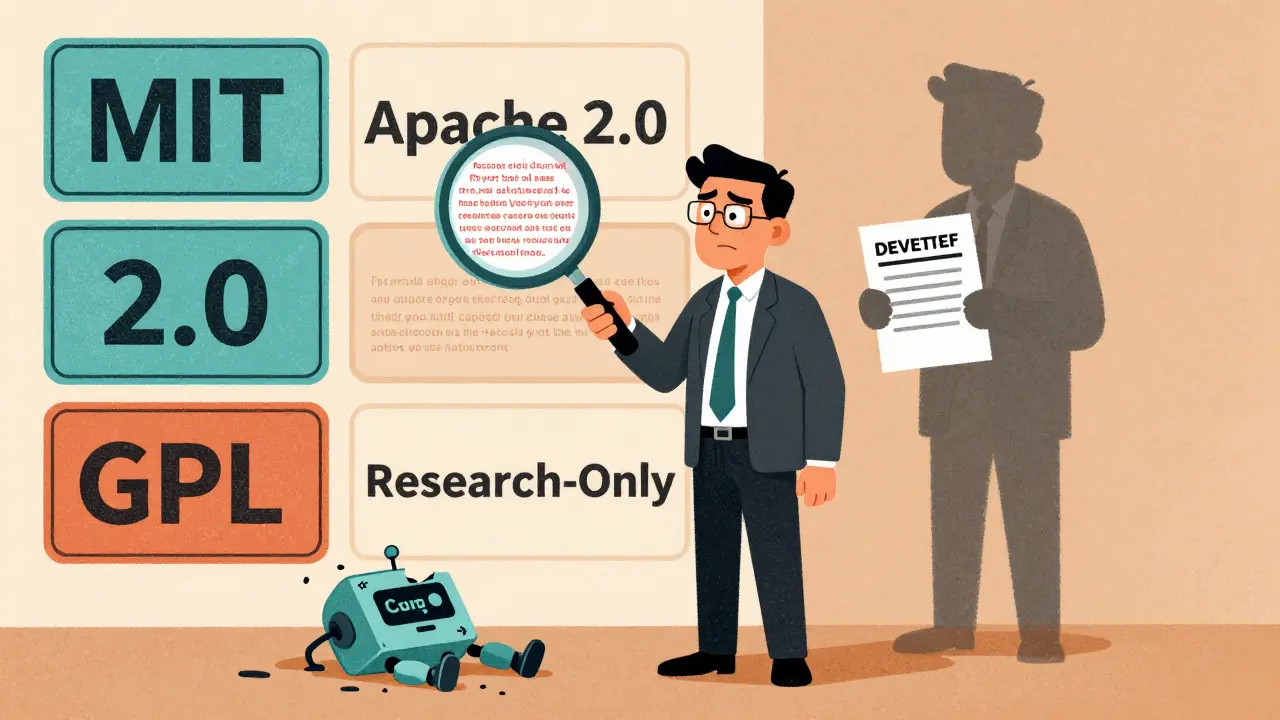

Legal and Licensing Considerations for Deploying Open-Source Large Language Models

Open-source LLMs can save millions in API costs, but only if you follow the license rules. Learn how MIT, Apache 2.0, and GPL licenses affect commercial use, training data risks, and compliance steps to avoid lawsuits.

Model Compression Economics: How Quantization and Distillation Cut LLM Costs by 90%

Quantization and distillation cut LLM inference costs by up to 95%, enabling affordable AI on edge devices and budget clouds. Learn how these techniques work, when to use them, and what hardware you need.

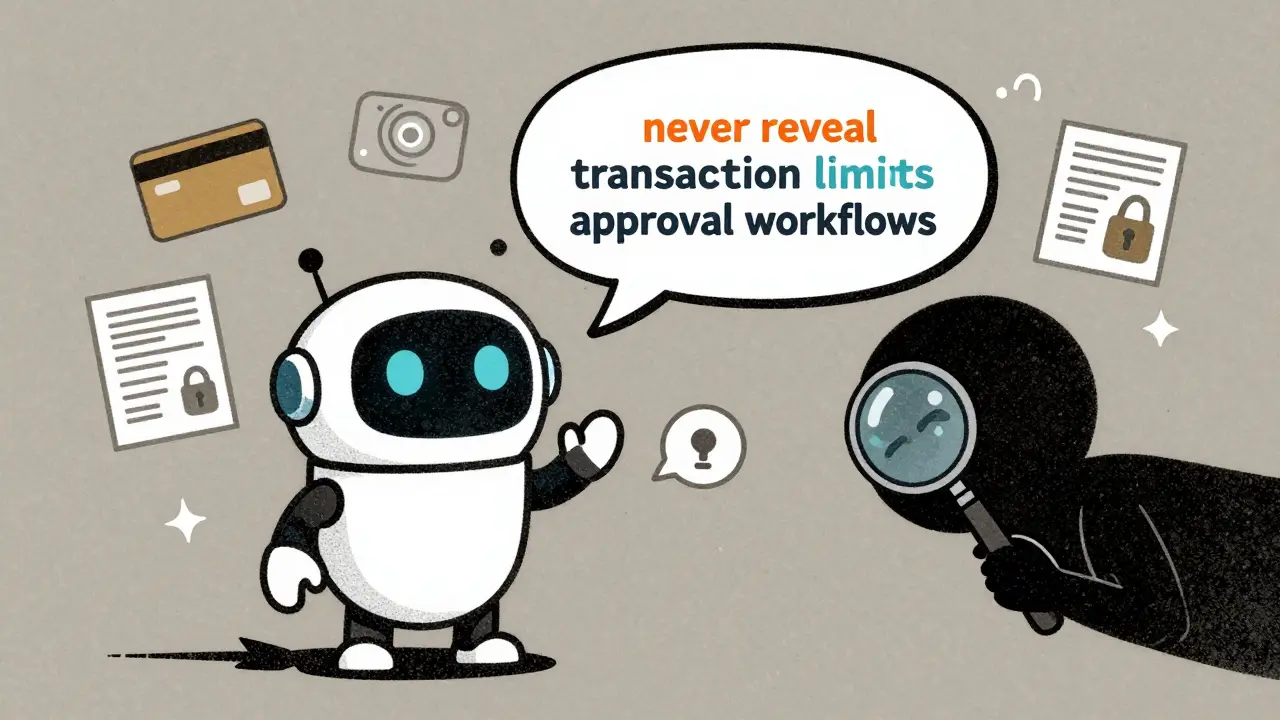

How to Prevent Sensitive Prompt and System Prompt Leakage in LLMs

System prompt leakage is now a top AI security threat, letting attackers steal hidden instructions from LLMs. Learn how to stop it with proven techniques like output filtering, instruction defense, and external guardrails.

Multimodal Generative AI: Models That Understand Text, Images, Video, and Audio

Multimodal generative AI understands text, images, audio, and video together, making it smarter than older AI systems. Learn how models like GPT-4o and Llama 4 work, where they’re used, and why they’re changing industries in 2025.
