Imagine your app crashes at 3 a.m. You get the alert. You pull up the code. The module causing the issue? Written by an AI. No one on your team remembers prompting it. No one understands how it works. No one’s responsible for fixing it. That’s not a nightmare. It’s the new reality for teams using AI coding tools without clear ownership rules.

What we’re calling vibe coding (typing a vague idea into an AI assistant and letting it spit out working code) isn’t just convenient. It’s become the default for many developers. GitHub Copilot alone has over 1.5 million users. But speed comes at a cost. A 2024 Wiz.io report found that 68% of vulnerabilities in AI-generated code come from modules where no developer could explain the logic. These are orphaned modules: code that runs, breaks, and haunts your system, with no one to fix it.

Why Orphaned Modules Are a Silent Killer

Orphaned modules don’t scream for attention. They don’t show up in sprint reviews. They’re buried in microservices, tucked into utility functions, hidden in API handlers. They’re the reason your CI/CD pipeline fails randomly. They’re why a security audit turns up hardcoded API keys in a file no one wrote.

Here’s how it happens: a developer types, “Create a payment validation function that checks for fraud patterns.” The AI generates 150 lines of code. The developer copies it, tests it locally, merges it. No one reviews it. No one documents it. Six months later, that function starts timing out under load. The on-call engineer spends three days tracing it. Turns out, the AI used a deprecated library. The original developer left the company. No one knows what the code was supposed to do.

According to internal data from 15 enterprise teams, the median time to resolve an orphaned module issue is 14.7 hours. That’s lost productivity, delayed releases, and stressed engineers. And it’s avoidable.

Three Ownership Models That Actually Work

Not all teams are flying blind. Some have built real systems to track who’s responsible for what. Here are the three most effective models in use today.

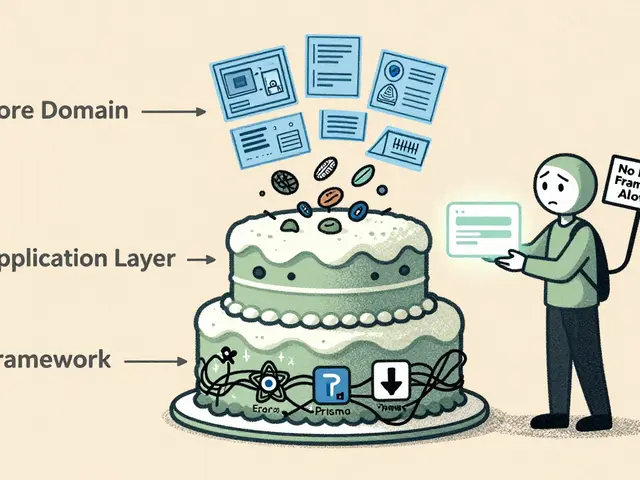

Human-Enhanced Ownership

This is the simplest: if you didn’t write enough of it yourself, you don’t own it. Microsoft’s Copilot Guidelines v2.1 require at least 30% of the code in a module to be human-written or meaningfully modified. That means you can’t just paste AI output and call it yours. You have to change variable names, add comments, tweak logic, restructure the flow.
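
A 30% threshold only helps if you can measure it. Here is a minimal Python sketch of one way to do that, assuming you still have the raw AI output to compare against. The function names and the line-based comparison are our own illustration, not part of Microsoft's guidelines. Note that a line-level diff counts a renamed variable as a "modified" line, so treat the number as a rough signal rather than proof of understanding.

```python
import difflib


def human_modified_fraction(ai_output: str, merged_code: str) -> float:
    """Fraction of non-blank lines in the merged code that do not match the AI output."""
    ai_lines = [line.strip() for line in ai_output.splitlines() if line.strip()]
    final_lines = [line.strip() for line in merged_code.splitlines() if line.strip()]
    if not final_lines:
        return 0.0
    matcher = difflib.SequenceMatcher(a=ai_lines, b=final_lines, autojunk=False)
    # Matching blocks are runs of lines carried over unchanged from the AI output.
    unchanged = sum(block.size for block in matcher.get_matching_blocks())
    return 1.0 - unchanged / len(final_lines)


def meets_threshold(ai_output: str, merged_code: str, threshold: float = 0.30) -> bool:
    """True when at least `threshold` of the merged lines were written or changed by a human."""
    return human_modified_fraction(ai_output, merged_code) >= threshold
```

Changing one line out of four gives a fraction of 0.25, which falls short of a 30% bar; changing two out of four gives 0.5, which clears it.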

Companies using this model report a 63% drop in orphaned modules. But there’s a catch: it slows down fast-moving teams. In microservices, 34% of AI-generated service modules still go unclaimed because developers don’t want to “waste time” editing code the AI already wrote. It works best for core systems (payment gateways, authentication, data pipelines) where reliability matters more than speed.

Provenance Tracking

GitHub Advanced Security launched CodeProvenance in February 2024. It embeds a cryptographic signature into every AI-generated line, tagging it with the prompt, the tool used, and the user who approved it. Think of it like a blockchain for code.
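
To make the idea concrete, here is a small Python sketch of a signed provenance record. It is not how CodeProvenance works internally (we have no view into that). It only shows the general technique: hash the code, attach the prompt, tool, and approver, and sign the whole record with an HMAC so tampering is detectable. The field names and the shared-secret key are assumptions for illustration.

```python
import hashlib
import hmac
import json


def sign_provenance(code: str, prompt: str, tool: str, approver: str, key: bytes) -> dict:
    """Build a provenance record for a piece of code and sign it with HMAC-SHA256."""
    record = {
        "code_sha256": hashlib.sha256(code.encode("utf-8")).hexdigest(),
        "prompt": prompt,
        "tool": tool,
        "approver": approver,
    }
    # Sorted keys give a stable byte representation to sign.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record


def verify_provenance(code: str, record: dict, key: bytes) -> bool:
    """Check that the record matches the code and that its signature is intact."""
    claimed = {k: v for k, v in record.items() if k != "signature"}
    if claimed.get("code_sha256") != hashlib.sha256(code.encode("utf-8")).hexdigest():
        return False
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record.get("signature", ""))
```

If someone edits the code or swaps the approver after the fact, verification fails. A real system would more likely use asymmetric signatures and managed keys; a shared secret just keeps the sketch short.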

Teams using this model passed SOC 2 audits because they could prove exactly who asked for what. But it’s not perfect. MIT’s lab found it adds 18% runtime overhead. That’s a dealbreaker for high-frequency trading apps or real-time gaming backends where every millisecond counts.

It’s also expensive. Only 29% of enterprises use it. But if you’re in healthcare, finance, or government, where compliance is non-negotiable, it’s becoming mandatory.

Shared Ownership

Meta’s AI Code Framework (v1.3) says: ownership is split. 60% to the developer who prompted it. 25% to the AI vendor (GitHub, Anthropic, etc.). 15% to the company that owns the repo.

It sounds messy. But in regulated industries, it’s working. Healthcare companies using this model saw a 71% drop in FDA audit violations. Why? Because legal teams now know who to hold accountable. If the AI copies GPL-licensed code (and 23% of Copilot outputs do, according to Arapackelaw), the vendor shares liability.

But it backfired in one case. A $450 million acquisition of HealthTech startup MedAI collapsed because 38% of the code couldn’t be legally transferred. The vendor owned part of it. The developer was gone. The company didn’t have rights. Shared ownership isn’t a free pass; it’s a contract you have to enforce.

Tools That Enforce Ownership (Not Just Suggest It)

Policy without enforcement is just a wish.

Wiz.io’s open-source rules files, launched in June 2024, are now used by 67% of Fortune 500 security teams. They automatically flag AI-generated code that lacks documentation, has hardcoded secrets, or was never reviewed. One team found 12 orphaned modules with test credentials after installing them.

Cursor Pro lets you set rules like: “Reject any PR with more than 50% AI-generated code unless signed off by two engineers.” One developer reported reducing orphaned modules from 47 to 3 in six months.
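
A rule like that is easy to express in code. The Python sketch below is not Cursor's configuration format or API. It is a hypothetical stand-alone check, with made-up names (`PullRequest`, `may_merge`), that assumes you already know how many lines in a PR were AI-generated.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical summary of a PR; the line counts are assumed to come from your tooling."""
    ai_generated_lines: int
    total_lines: int
    approvers: set = field(default_factory=set)


def may_merge(pr: PullRequest, max_ai_ratio: float = 0.50, required_approvers: int = 2) -> bool:
    """Allow the merge unless the PR is mostly AI-generated and lacks enough sign-offs."""
    if pr.total_lines == 0:
        return True  # nothing to review
    ai_ratio = pr.ai_generated_lines / pr.total_lines
    if ai_ratio <= max_ai_ratio:
        return True  # at or under the limit, no extra sign-off needed
    # Over the limit: require sign-off from distinct engineers.
    return len(pr.approvers) >= required_approvers
```

A PR with 60 of 100 lines AI-generated and one approver is rejected; add a second approver and it passes. Exactly 50% does not trigger the rule, because the wording is "more than 50%".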

GitHub’s new “Ownership Insights” feature (December 2024) uses machine learning to scan your repo and say: “This module has zero human edits. No comments. No PR reviews. Who owns this?” It doesn’t fix it, but it makes the problem visible.

What You Must Do Today

You don’t need to overhaul your whole system. Start here:

- Define what “ownership” means in your team. Is it writing 30% of the code? Is it documenting it? Is it being on-call for it? Write it down. Don’t assume everyone agrees.

- Enforce it in CI/CD. Block merges if AI-generated code lacks a reviewer, a comment, or a test. Google’s internal rule? 25% human-modified lines and two approvers. Start with one.

- Use documentation as an ownership trigger. Teams using Swimm (an AI-assisted doc tool) saw 52% fewer orphaned modules. Why? Because the AI generates docs alongside code, and you can’t merge without approving them.

- Tag AI code. Even if you don’t use provenance tools, add a comment: “// AI-generated: prompted for payment validator on 11/15/2024 by j.smith.” It’s low-tech. It works.

- Train your team. Not on how to use Copilot. On how to *not* become a code janitor. Show them the 14.7-hour horror stories. Make ownership a cultural priority, not a technical checkbox.
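
The tagging step above pays off most when a script can read the tags back. Here is a minimal Python sketch that finds comments in the exact format shown in the example tag and extracts what was asked, when, and by whom. The regex and the function name are our own, and the date is assumed to be month/day/year, as in the example.

```python
import re
from datetime import datetime

# Matches comments like:
# // AI-generated: prompted for payment validator on 11/15/2024 by j.smith.
TAG_PATTERN = re.compile(
    r"//\s*AI-generated:\s*prompted for (?P<what>.+?) "
    r"on (?P<date>\d{1,2}/\d{1,2}/\d{4}) by (?P<who>[\w.-]+?)\.?\s*$"
)


def find_ai_tags(source: str) -> list:
    """Return one dict per AI tag found in the source, with its line number."""
    tags = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        match = TAG_PATTERN.search(line)
        if match is None:
            continue
        tags.append({
            "line": line_number,
            "what": match.group("what"),
            # Assumption: dates are written month/day/year.
            "date": datetime.strptime(match.group("date"), "%m/%d/%Y").date(),
            "who": match.group("who"),
        })
    return tags
```

Run it over a repo and you get a quick inventory of AI-generated blocks and who signed for each one. Files with no tags at all are the ones worth a second look.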

The Legal Reality You Can’t Ignore

Here’s the truth: under U.S. copyright law, you might own AI-generated code, if you modified it enough. But that’s not guaranteed. Professor Pamela Samuelson at UC Berkeley says current laws are “ill-equipped” for this. The EU AI Act, effective December 2024, requires “clear assignment of legal responsibility.” That’s not a suggestion. It’s a law.

If your company gets sued because an AI-generated module leaked customer data, and you can’t prove who was responsible? Your legal team won’t care that the AI wrote it. They’ll care that you didn’t have a system to track it.

What’s Next

By 2026, 83% of engineering leaders expect regulators to require code provenance. That means every line of AI-generated code will need a digital fingerprint. The tools are coming. The pressure is building.

But the real shift isn’t technical. It’s cultural. Vibe coding made us forget that code is a liability, not a shortcut. Ownership isn’t about credit. It’s about accountability. The faster you build, the more you need to know what you built. And who’s responsible when it breaks.

Don’t wait for a 3 a.m. alert to fix this. Start today. Write down your rules. Enforce them. Tag your code. Document it. Own it.

What exactly is vibe coding?

Vibe coding is when developers use AI tools like GitHub Copilot or Cursor to generate code from high-level prompts instead of writing it line by line. The developer acts more like a curator (guiding the AI, reviewing output, and integrating it into the codebase) rather than typing every character manually.

Why are orphaned modules dangerous?

Orphaned modules are dangerous because they run in production but have no responsible owner. No one understands how they work, no one can fix them when they break, and no one documents them. This leads to longer outages, security holes, and compliance failures. Wiz.io found 68% of AI-generated vulnerabilities come from these unowned pieces of code.

Can I claim copyright on AI-generated code?

Under U.S. law, you might, if you significantly modify or transform the AI’s output. But it’s not guaranteed. The U.S. Copyright Office says you need human creativity in the process. If you just copy-paste AI code, you likely don’t own it. Internationally, rules vary. The EU’s AI Act now requires legal responsibility to be clearly assigned, regardless of copyright.

Which AI coding tool is best for ownership control?

GitHub Copilot Enterprise offers CodeProvenance for tracking AI-generated code. Cursor Pro lets you enforce rules like minimum human edits before merging. Wiz.io’s security rules files automatically flag unowned or insecure AI code. The best tool isn’t the one with the most features; it’s the one your team actually uses consistently.

How do I stop my team from ignoring ownership rules?

Make it impossible to bypass. Use CI/CD gates that block merges without required human edits, documentation, or approvals. Share real stories, like the 3-week debug of an unowned payment module. Show the cost. Ownership isn’t about blame; it’s about trust. When people know they’ll be called on to fix what they let into production, they care more.

Do small teams need ownership models?

Yes, maybe more than big teams. Startups with 50-200 employees have the highest rate of orphaned modules (29% of codebase), according to CircleCI. Why? They move fast and skip processes. But when they scale or get acquired, those unowned modules become legal and technical landmines. Start simple: one rule, one tool, one review step. Build the habit early.

Sam Rittenhouse

21 December, 2025 - 05:30 AM

This is the exact reason I stopped letting AI write anything beyond boilerplate. I used to think it was saving time until I spent three nights debugging a payment module that had hardcoded AWS keys and a deprecated crypto library. No one remembered who merged it. No one knew what it did. Just pure chaos. We now have a rule: if you didn't write at least 40% of it yourself, you don't own it. It's slower, but we sleep better.

Peter Reynolds

22 December, 2025 - 23:25 PM

Agreed. The real problem isn't the AI; it's that we stopped treating code like something that needs care. We treat it like a magic spell you whisper once and forget. I've seen teams where the AI writes 80% of a service and everyone assumes someone else will document it. Spoiler: no one does. We started tagging every AI block with // AI: [prompt] [date] [init] and it's made a huge difference. Simple. Low tech. Works.

Fred Edwords

24 December, 2025 - 22:12 PM

Actually, the Wiz.io report you cited (68% of vulnerabilities in AI-generated code) was misinterpreted. The original study defined 'orphaned' as code with zero documentation AND zero code review, not merely 'no one remembers who wrote it.' Furthermore, the 63% drop in orphaned modules under Human-Enhanced Ownership was measured over a 9-month period in teams with >15 engineers, not universally applicable. Also, 'meaningfully modified' is undefined: what constitutes meaningful? Reordering variables? Renaming 'x' to 'user_id'? That's not ownership; that's semantics. You need operational definitions, not buzzwords.

Ben De Keersmaecker

25 December, 2025 - 13:21 PM

I work in India with a team of 8. We use Copilot daily. We don't have fancy tools. We don't have SOC 2 audits. But we do one thing: every AI-generated block gets a comment above it: // AI: [what was asked] | [date] | [reviewer initial]. That's it. We don't even require human edits, just accountability. Last month, we found a module that was calling a deprecated Stripe endpoint. We traced it in 10 minutes because of that comment. No one was mad. We just fixed it. Ownership isn't about blame; it's about visibility. And visibility doesn't need blockchain. It needs a comment.

Andrew Nashaat

26 December, 2025 - 15:20 PM

Oh wow. So you're telling me that if you just paste AI code and don't own it, it's bad? Groundbreaking. I'm shocked. The fact that people think this is new is hilarious. This is just lazy engineering dressed up as innovation. You don't get to outsource responsibility to a machine and then act surprised when it breaks. And don't even get me started on 'shared ownership': you're giving 25% of your code to GitHub? Are you insane? That's not a model, that's a surrender. And yes, I'm calling it: if you're using AI to write your core logic, you're not a developer; you're a code janitor with a fancy prompt. Stop pretending this is progress. It's a shortcut to technical debt hell.

Yashwanth Gouravajjula

27 December, 2025 - 15:19 PM

Small teams need this most. We had 3 orphaned modules in 6 months. Now we tag everything. Done.

Janiss McCamish

28 December, 2025 - 05:41 AM

My team tried Shared Ownership last year. It sounded great on paper. Then we got acquired. The buyer’s legal team blocked the deal because 38% of our code had vendor rights attached. We lost $450M because we didn’t have clear ownership. Now we enforce: AI code = 100% human review + 1 documented comment + 1 test. No exceptions. It’s not about trust. It’s about not going bankrupt.