Best PHP AI Scripts for July 2025

When you're building AI features into your PHP apps, you need scripts that actually work: fast, secure, and without overcomplicating things. PHP AI scripts are reusable code templates that connect PHP applications to artificial intelligence APIs like OpenAI. Also known as AI-ready PHP boilerplates, they let you add chatbots, text analysis, and automation without rewriting your whole system. These aren’t theory projects. They’re the code developers are using right now to cut hours off their workflow.

Most of the scripts published this month focus on three key areas: OpenAI integration (connecting PHP applications to OpenAI’s API for text generation, summarization, and classification); PHP chatbots (automated conversational agents built directly into PHP-driven websites or APIs); and NLP automation (using natural language processing to extract meaning from user input without needing a full ML model). Each of these relies on clean API calls, proper error handling, and lightweight data parsing, all things you’ll find in the scripts from July 2025. You won’t see bloated frameworks or untested libraries. Just working code that handles tokens, rate limits, and JSON responses the right way.
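As a rough illustration of the error handling and JSON parsing described above (a sketch, not code taken from any of the listed scripts), the function below turns an HTTP status code and raw response body into the reply text and a token count. It assumes the Chat Completions response shape (`choices[0].message.content`, `usage.total_tokens`, `error.message`). The name `parseChatResponse` is hypothetical, and the HTTP request itself is deliberately left out.

```php
<?php
declare(strict_types=1);

/**
 * Parse a chat-completion-style response into text and token usage.
 * Hypothetical helper for illustration; assumes the response shape
 * choices[0].message.content / usage.total_tokens / error.message.
 */
function parseChatResponse(int $status, string $body): array
{
    // HTTP 429 signals a rate limit; let the caller decide when to retry.
    if ($status === 429) {
        throw new RuntimeException('Rate limited; retry later');
    }

    // JSON_THROW_ON_ERROR turns malformed JSON into an exception
    // instead of a silent null.
    try {
        $data = json_decode($body, true, 512, JSON_THROW_ON_ERROR);
    } catch (JsonException $e) {
        throw new RuntimeException('Invalid JSON: ' . $e->getMessage(), 0, $e);
    }

    if (!is_array($data)) {
        throw new RuntimeException('Unexpected response format');
    }

    // Surface API-level errors with the message the API returned, if any.
    if ($status >= 400 || isset($data['error'])) {
        $message = $data['error']['message'] ?? 'Unknown API error';
        throw new RuntimeException("API error ($status): $message");
    }

    $text = $data['choices'][0]['message']['content'] ?? null;
    if (!is_string($text)) {
        throw new RuntimeException('Response contained no message content');
    }

    return [
        'text'   => $text,
        'tokens' => (int) ($data['usage']['total_tokens'] ?? 0),
    ];
}
```

A caller would pass in the status code and body from whatever HTTP client it uses, then catch `RuntimeException` to log the failure or schedule a retry.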

What makes these scripts stand out? They’re built for real use cases: a support chatbot that answers FAQs using GPT-4, a script that auto-tags customer emails with NLP, and a tool that summarizes long PDFs by sending them to OpenAI and returning clean summaries in PHP. One developer shared how they cut response time by 60% just by switching from a slow third-party service to a direct PHP + OpenAI setup. Another fixed a memory leak in their chatbot by using buffered output instead of storing full responses in arrays. These aren’t hypothetical fixes. They’re the exact improvements you’ll find in the posts below.
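The memory fix mentioned above isn't shown in detail, so the following is only one possible reading of it: hand each chunk of a response to a writer as it arrives rather than collecting every chunk in an array first. The function name `streamChunks` and the writer callback are hypothetical, and the chunk source is assumed to be any iterable, such as a generator wrapping a streamed API response.

```php
<?php
declare(strict_types=1);

/**
 * Pass chunks to a writer as they arrive instead of accumulating them.
 * Hypothetical sketch: $chunks is any iterable of strings and $write is
 * whatever sends a chunk onward (echo, a socket, a file handle).
 * Returns the total number of bytes written.
 */
function streamChunks(iterable $chunks, callable $write): int
{
    $bytes = 0;
    foreach ($chunks as $chunk) {
        $chunk = (string) $chunk;
        // Only the current chunk is held in memory at this point.
        $write($chunk);
        $bytes += strlen($chunk);
    }
    return $bytes;
}
```

In a web request the writer could be a closure that calls `echo` followed by `flush()`, so each chunk goes to the client before the next one is read.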

Whether you’re adding AI to a small business site or scaling an enterprise tool, the scripts from July 2025 give you a solid starting point. You’ll get Composer-ready packages, security tips for API keys, and benchmarks showing which methods use the least memory. No fluff. No theory. Just the code that works today.

How Usage Patterns Affect Large Language Model Billing in Production

LLM billing in production depends on how users interact with the model, not just how many users you have. Token usage, model choice, and peak demand drive costs. Learn how usage patterns affect your bill and what pricing models work best.

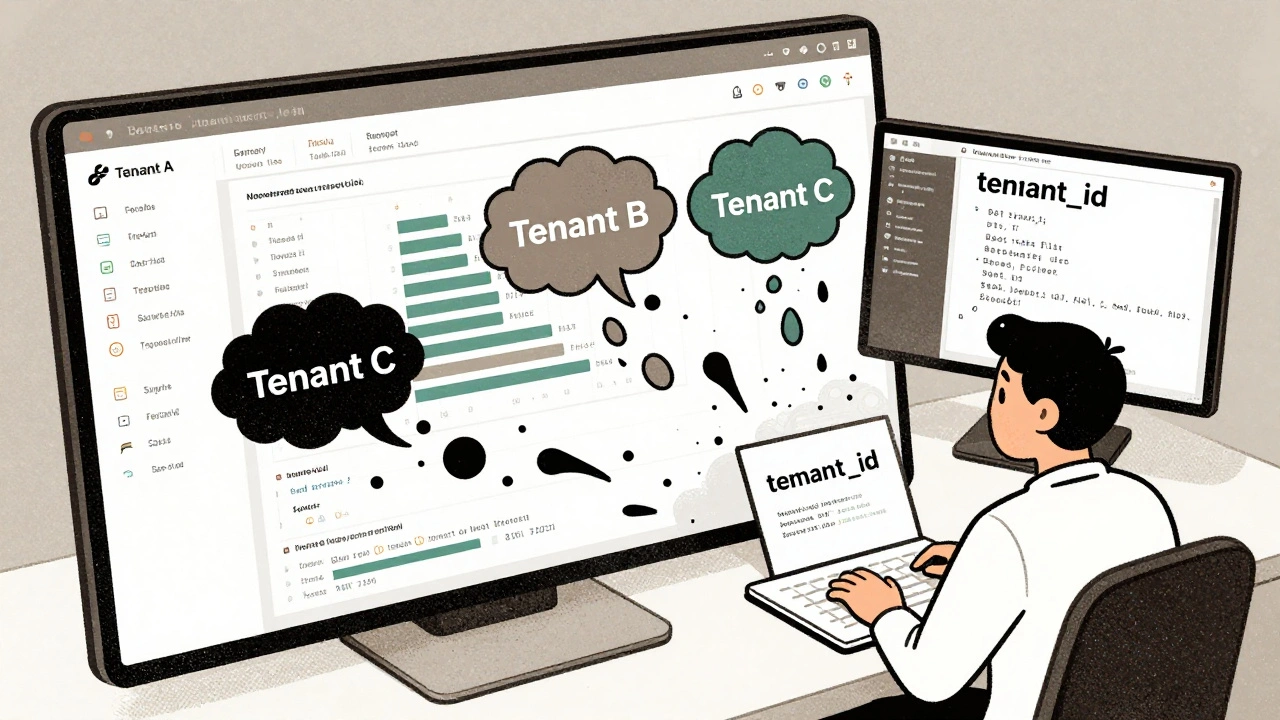

Multi-Tenancy in Vibe-Coded SaaS: How to Get Isolation, Auth, and Cost Controls Right

Learn how to implement secure multi-tenancy in AI-assisted SaaS apps using vibe coding. Avoid data leaks, cost overruns, and authentication failures with proven strategies for isolation, auth, and usage controls.

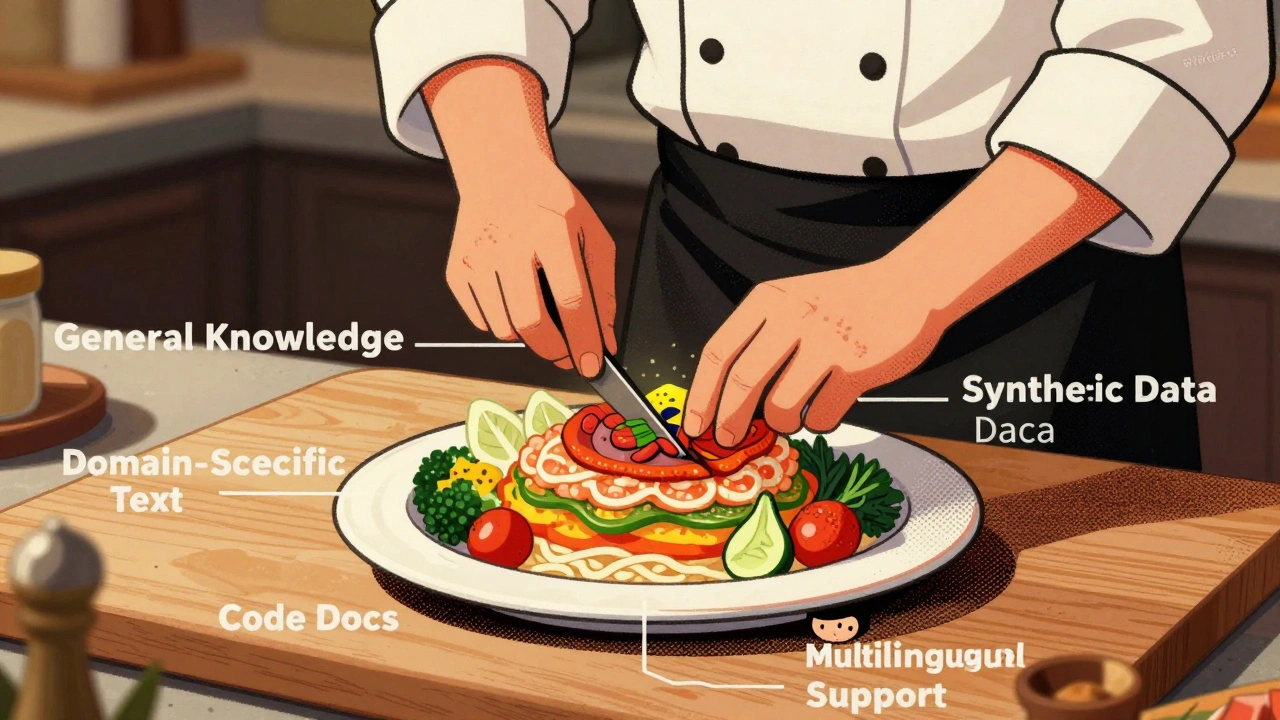

How to Build a Domain-Aware LLM: The Right Pretraining Corpus Composition

Learn how to build domain-aware LLMs by strategically composing pretraining corpora with the right mix of data types, ratios, and preprocessing techniques to boost accuracy while reducing costs.

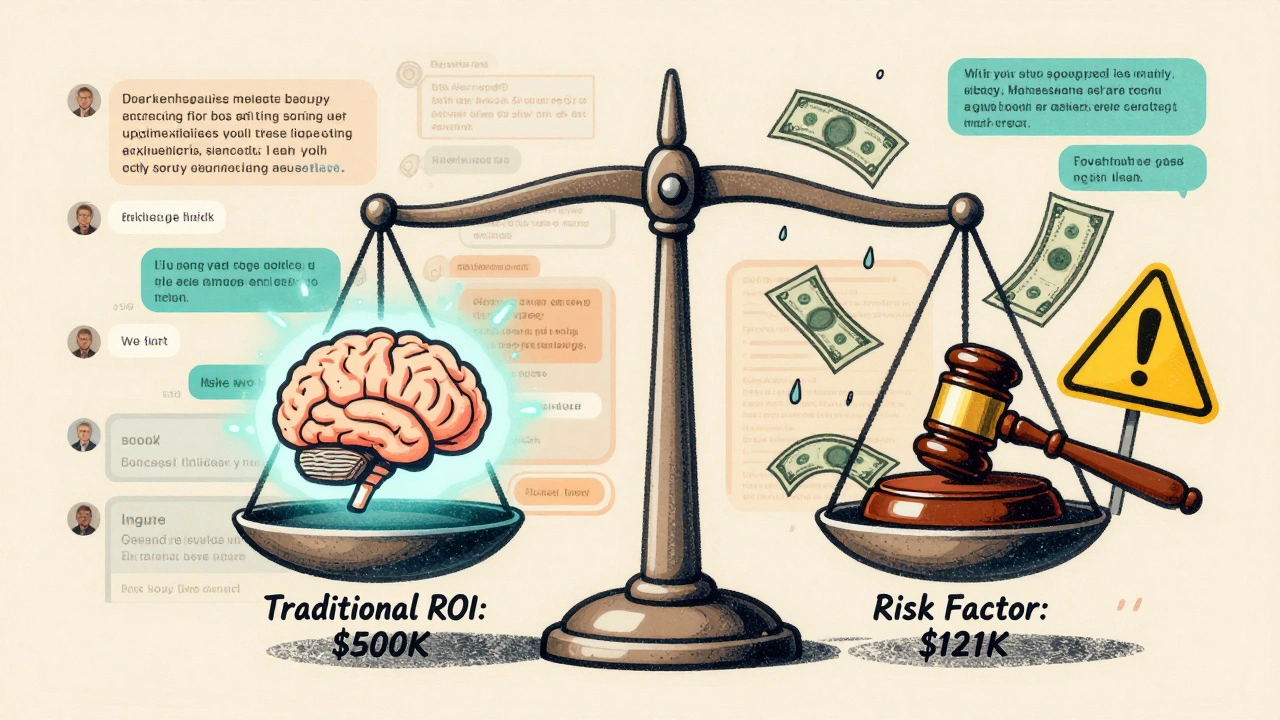

Risk-Adjusted ROI for Generative AI: How to Calculate Real Returns with Compliance and Controls

Risk-adjusted ROI for generative AI accounts for compliance costs, legal risks, and control measures to give you a realistic return forecast. Learn how to calculate it and avoid costly mistakes.

Change Management Costs in Generative AI Programs: Training and Process Redesign

Generative AI success depends less on technology and more on how well teams adapt. Learn the real costs of training and process redesign, and how to budget for them.

Truthfulness Benchmarks for Generative AI: How Well Do AI Models Really Tell the Truth?

Truthfulness benchmarks like TruthfulQA reveal that even the most advanced AI models still spread misinformation. Learn how these tests work, which models perform best, and why high scores don’t mean safe deployment.

Distributed Training at Scale: How Thousands of GPUs Power Large Language Models

Distributed training at scale lets companies train massive LLMs using thousands of GPUs. Learn how hybrid parallelism, hardware limits, and communication overhead shape real-world AI training today.

Developer Sentiment Surveys on Vibe Coding: What Questions to Ask and Why They Matter

Developer sentiment surveys on vibe coding reveal a split between productivity gains and security risks. Learn the key questions to ask to understand real adoption, hidden costs, and how to use AI tools safely.
