Responsible AI: Ethical, Secure, and Compliant AI Systems for Developers

Responsible AI is a framework for developing artificial intelligence that prioritizes safety, fairness, and accountability. Also known as ethical AI, it means building systems that not only work but also don’t harm, mislead, or violate trust. Too many teams rush to deploy large language models without asking: Who owns the data? Who’s liable if it lies? How do we stop bias from slipping into production? Responsible AI answers those questions before the first user logs in.

It’s not just about rules; it’s about systems. AI governance, the set of policies, tools, and processes that ensure AI systems follow legal and ethical standards, turns vague ideals into checklists, audits, and automated controls. You can’t rely on good intentions when your model is making loan decisions or writing medical summaries. That’s why teams using enterprise data governance (structured methods to track, classify, and secure training data across teams and cloud environments) see 70% fewer compliance violations. Tools like Microsoft Purview and Databricks aren’t optional; they’re the backbone of any production LLM.
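One way to picture "checklists turned into automated controls" is a small release gate that refuses to deploy until each governance question has an answer. The sketch below is illustrative only: `ReleaseCandidate`, its fields, and the four checks are hypothetical names chosen for this example, not part of Microsoft Purview, Databricks, or any other tool mentioned here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ReleaseCandidate:
    """Hypothetical metadata a team might record for each model release."""
    name: str
    data_owner: Optional[str] = None          # who owns the training data
    liability_contact: Optional[str] = None   # who answers if the model is wrong
    bias_eval_passed: bool = False            # result of a fairness evaluation
    audit_log_enabled: bool = False           # whether decisions are logged for audit


def governance_violations(rc: ReleaseCandidate) -> List[str]:
    """Return the checklist items this release candidate fails."""
    checks = [
        ("training data has a named owner", bool(rc.data_owner)),
        ("a liability contact is assigned", bool(rc.liability_contact)),
        ("bias evaluation passed", rc.bias_eval_passed),
        ("audit logging is enabled", rc.audit_log_enabled),
    ]
    return [label for label, ok in checks if not ok]


def can_deploy(rc: ReleaseCandidate) -> bool:
    """Block deployment unless every checklist item is satisfied."""
    return not governance_violations(rc)


if __name__ == "__main__":
    # Assumed example values; a real pipeline would load these from its own records.
    candidate = ReleaseCandidate(name="loan-scoring-v2", data_owner="risk-data-team")
    for item in governance_violations(candidate):
        print(f"BLOCKED: {item}")
```

A check like this could run as a CI step, so the questions about data ownership and liability are answered before release rather than after an incident.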

And then there’s the cost of ignoring responsibility. A single hallucination in a customer support bot can cost thousands in lost trust. A data leak from an unsecured model weight file can trigger fines under GDPR or state laws like California’s AI transparency rules. That’s why AI compliance, the ongoing process of aligning AI systems with legal requirements across regions and industries, isn’t a legal team’s problem; it’s a developer’s daily task. From export controls on model weights to confidential computing with trusted execution environments (TEEs), every line of code has legal weight.

Responsible AI isn’t about slowing down. It’s about building faster—without crashing. Teams that bake in truthfulness benchmarks, multi-tenancy isolation, and supply chain security from day one ship more reliably. They avoid the 86% of AI projects that die in production because they weren’t built to last. You don’t need a compliance officer to start. You just need to ask the right questions before you push to production.

Below, you’ll find real-world guides from developers who’ve walked this path—how to measure policy adherence, reduce hallucinations with RAG, control cloud costs without cutting corners, and keep AI systems honest under pressure. No theory. No fluff. Just what works when the stakes are high.

Community and Ethics for Generative AI: How to Build Transparency and Trust in AI Programs

Learn how to build ethical generative AI programs through stakeholder engagement and transparency. Real policies from Harvard, Columbia, UNESCO, and NIH show what works and what doesn’t.

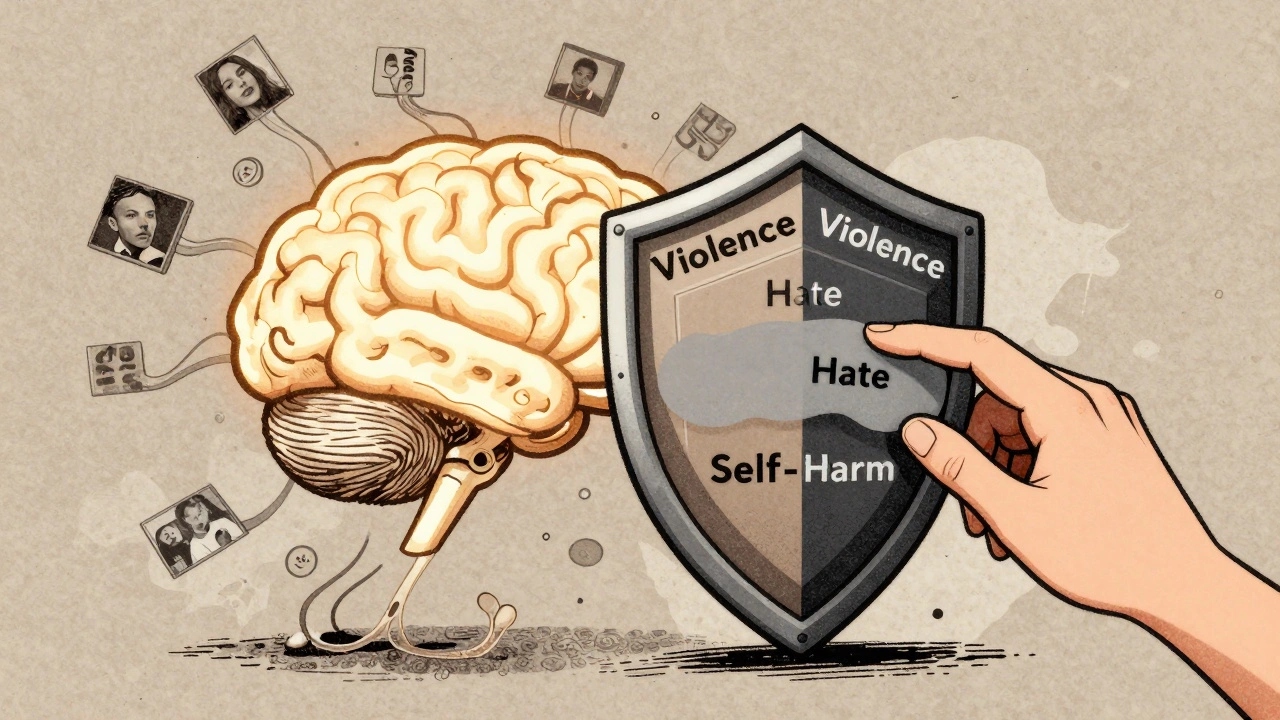

Read More

Content Moderation for Generative AI: How Safety Classifiers and Redaction Keep Outputs Safe

Learn how safety classifiers and redaction techniques prevent harmful content in generative AI outputs. Explore real-world tools, accuracy rates, and best practices for responsible AI deployment.

Read More