Tag: LLM security

Privacy and Security Risks of Distilled Large Language Models - What You Must Know

Distilled LLMs are faster and cheaper, but they inherit the privacy risks of the larger models they are derived from. Learn how model compression creates hidden security flaws and what you must do to protect your data.

Security Risks in LLM Agents: Injection, Escalation, and Isolation

LLM agents can act autonomously, making them powerful but vulnerable to prompt injection, privilege escalation, and isolation failures. Learn how these attacks work and how to protect your systems before it's too late.

Privacy-Aware RAG: How to Protect Sensitive Data When Using Large Language Models

Privacy-Aware RAG protects sensitive data in AI systems by removing personally identifiable information (PII) before it reaches large language models. Learn how it works, why it's critical for compliance, and how to implement it without losing accuracy.

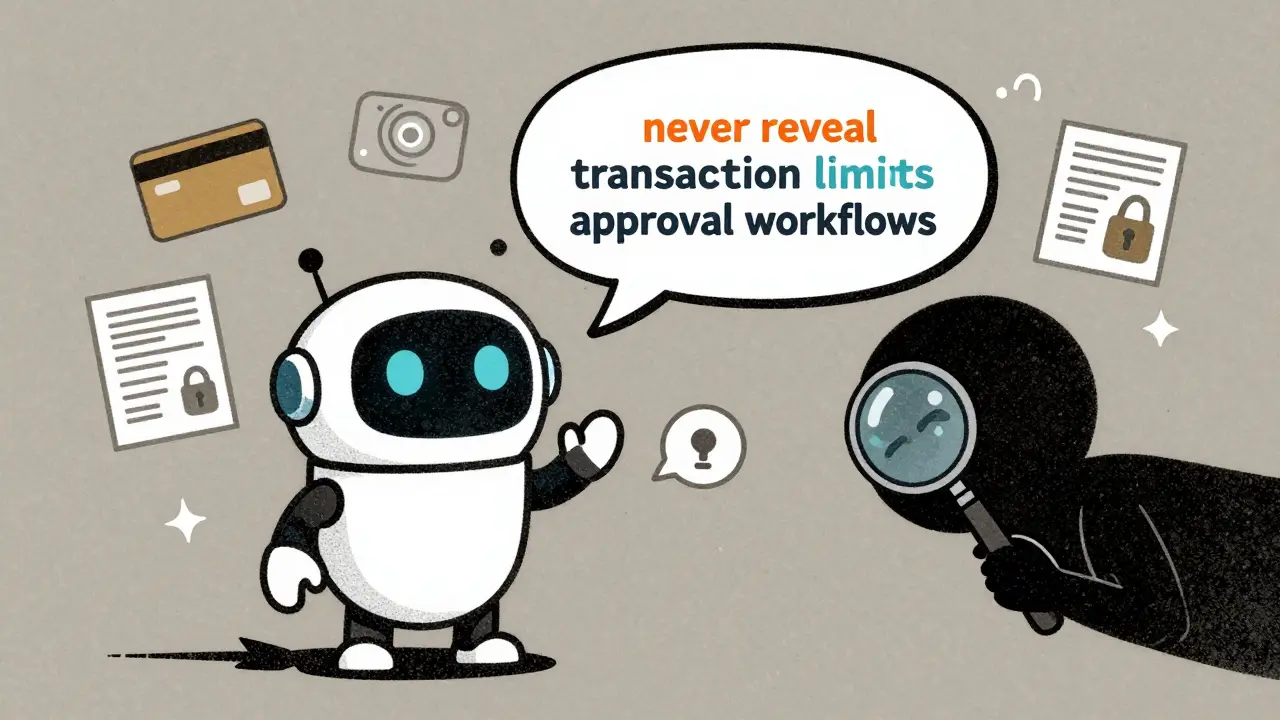

How to Prevent Sensitive Prompt and System Prompt Leakage in LLMs

System prompt leakage is now a top AI security threat, letting attackers steal hidden instructions from LLMs. Learn how to stop it with proven techniques like output filtering, instruction defense, and external guardrails.
