Imagine you're building a customer support bot for a hospital. It needs to answer questions about patient records, treatment plans, and insurance details. But every time someone asks a question, that bot sends the raw data to a large language model - possibly hosted by a third party - and that model might store, log, or accidentally leak that information. This isn't hypothetical. In 2024, a healthcare provider in Ohio faced a HIPAA violation after their RAG system exposed 14,000 patient records because medical record numbers weren't properly redacted. That’s the risk of standard RAG. And that’s why Privacy-Aware RAG isn’t just a nice-to-have - it’s becoming mandatory.

What Is Privacy-Aware RAG?

Privacy-Aware RAG is a modified version of Retrieval-Augmented Generation that stops sensitive data from ever reaching the large language model. In regular RAG, when you ask a question, the system pulls relevant documents, sends them - along with your question - to the LLM, and the model generates an answer. The problem? Those documents often contain names, Social Security numbers, credit card details, medical diagnoses, or financial records. And unless you’re running everything on your own air-gapped server, that data is going through someone else’s system. Privacy-Aware RAG fixes this by scrubbing out sensitive information before it leaves your network. There are two main ways to do this:

- Prompt-only privacy: The system scans your question and the retrieved documents in real time, removes PII (like names, IDs, account numbers), and sends only the cleaned version to the LLM. This happens in under 300 milliseconds.

- Source documents privacy: Before you even start using the system, all source documents (PDFs, databases, emails) are pre-processed. Sensitive data is redacted, encrypted, or replaced with placeholders. Then, only these cleaned versions are stored in the vector database.

Both methods work with standard RAG components - chunking text into 256-512 token pieces, using embedding models like text-embedding-ada-002, and retrieving results via semantic search. But now, they include a privacy layer that acts like a filter before anything gets sent out.
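To make source documents privacy concrete, here is a minimal sketch of the pre-processing step, assuming simple regex detection and word-based chunking as a rough stand-in for token-based chunking. The `CleanStore` class, the `PII_PATTERNS` table, and the placeholder format are hypothetical names for illustration; a real pipeline would use a proper PII detector and an actual vector database.

```python
import re
from dataclasses import dataclass, field

# Illustrative patterns only; real deployments need far broader coverage.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a typed placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def chunk(text: str, max_words: int = 200) -> list[str]:
    """Split text into word-based chunks (a stand-in for token-based chunking)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


@dataclass
class CleanStore:
    """Hypothetical stand-in for a vector database: holds only redacted chunks."""
    chunks: list[str] = field(default_factory=list)

    def ingest(self, document: str) -> None:
        # Redact first, then chunk, so raw PII is never stored.
        self.chunks.extend(chunk(redact(document)))


store = CleanStore()
store.ingest("Contact jane.doe@example.com, SSN 123-45-6789.")
print(store.chunks)
```

Redacting before chunking matters in this sketch: an identifier that straddles a chunk boundary could otherwise be split in two and slip past the patterns.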

Why Standard RAG Is a Privacy Risk

In early 2024, security firm Lasso Security analyzed 120 enterprise RAG deployments. They found that 68% of them exposed sensitive data in prompts or source documents. That means if you’re using RAG without privacy controls, you’re likely sending customer data, employee records, or proprietary business info to external AI APIs. Here’s how it breaks down:

- Standard RAG sends 100% of retrieved document content to the LLM.

- Privacy-Aware RAG with source document privacy sends 0% of raw sensitive data.

- Even if the LLM doesn’t store data, many APIs log inputs for debugging or model improvement. That log could be breached.

Compliance isn’t optional anymore. GDPR, HIPAA, CCPA, and the EU AI Act all push companies to minimize data exposure. If your AI system leaks patient data or financial records, you could face fines of up to 4% of global annual revenue under GDPR. A single breach can cost millions in legal fees, regulatory penalties, and lost trust.

Accuracy vs. Privacy: The Trade-Off

One of the biggest concerns people have about Privacy-Aware RAG is: does it make answers worse? Yes - sometimes. But not always.

- Standard RAG: 92.3% factual accuracy in enterprise tasks.

- Privacy-Aware RAG with aggressive redaction: 88.7% accuracy.

- With optimized redaction thresholds: accuracy gap shrinks to just 2.1%.

That means if you’re smart about what you redact, you don’t lose much accuracy. But if you over-redact - say, you remove every number, name, and date - the LLM doesn’t have enough context. That’s when it starts making things up. MIT researchers found that over-redaction can increase hallucinations by up to 18% in complex queries.

Some industries feel this more than others:

- Finance: Accuracy drops from 94.1% to 82.6% when redacting dollar amounts or account numbers. That’s a problem if your bot is helping advisors calculate loan terms.

- Healthcare: Accuracy stays high (89%+) when redacting names and IDs but preserving symptoms, medications, and procedures.

- Legal: Removing case numbers or client names can break context, but keeping dates and statutes intact works well.

The key? Don’t redact everything. Redact only what’s legally sensitive. Use context-aware tools that understand that, in “John’s SSN is 123-45-6789,” the number should be removed while “John” is kept. That’s where custom AI models trained on your domain data come in.
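One way to picture selective redaction is a per-domain policy combined with a confidence threshold. The sketch below assumes the entity spans already come from some detector (an NER model, for instance); the `Entity` class, the `POLICIES` table, and the 0.8 default threshold are hypothetical choices for illustration, not values from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    """A detected span. In practice this would come from an NER model."""
    start: int
    end: int
    label: str
    score: float  # detector confidence between 0 and 1


# Hypothetical per-domain policies: the entity types treated as sensitive.
POLICIES = {
    "healthcare": {"SSN", "MRN"},
    "finance": {"SSN", "ACCOUNT_NUMBER"},
}


def apply_policy(text: str, entities: list[Entity], domain: str,
                 min_score: float = 0.8) -> str:
    """Redact entities that the domain policy lists and the detector is confident about."""
    labels = POLICIES[domain]
    selected = [e for e in entities if e.label in labels and e.score >= min_score]
    # Work right to left so earlier character offsets stay valid.
    for e in sorted(selected, key=lambda e: e.start, reverse=True):
        text = text[:e.start] + f"[{e.label}]" + text[e.end:]
    return text


text = "John's SSN is 123-45-6789"
ssn_start = text.index("123")
entities = [
    Entity(0, 4, "PERSON", 0.99),                    # kept: not in the policy
    Entity(ssn_start, ssn_start + 11, "SSN", 0.97),  # redacted
]
print(apply_policy(text, entities, "healthcare"))
# John's SSN is [SSN]
```

Raising `min_score` reduces over-redaction but lets more low-confidence detections through, so the threshold is worth tuning against labeled examples from your own documents.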

How Privacy-Aware RAG Actually Works

Let’s walk through a real example. A bank employee asks: “What’s the latest transaction for customer John Smith?” In standard RAG:

- The system finds a document: “John Smith (SSN: 123-45-6789) made a $4,200 payment on 1/5/2026.”

- That entire document is sent to OpenAI’s API.

- OpenAI responds: “The latest transaction for John Smith was $4,200 on January 5, 2026.”

In Privacy-Aware RAG:

- The system finds the same document.

- A redaction engine detects “123-45-6789” as a Social Security number and “$4,200” as a financial figure.

- It replaces them: “John Smith (REDACTED) made a REDACTED payment on 1/5/2026.”

- Only this cleaned version is sent to the LLM.

- LLM responds: “The latest transaction for John Smith occurred on January 5, 2026.”

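Notice that the answer is still useful. The customer’s name is kept for context, and the LLM never saw the SSN or the dollar amount. The trade-off is visible too: the response gives the date of the transaction but not the amount, so decide field by field whether a value really needs to be hidden.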
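The bank walkthrough above can be sketched in a few lines. This is a simplified illustration that assumes regex detection is enough for the two fields in the example; the function names `redact_for_llm` and `build_prompt` and the prompt layout are made up for this sketch.

```python
import re

# Matches an SSN, optionally preceded by an "SSN:" label.
SSN = re.compile(r"(?:SSN:\s*)?\b\d{3}-\d{2}-\d{4}\b")
# Matches dollar amounts such as $4,200 or $4200.50.
AMOUNT = re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?")


def redact_for_llm(text: str) -> str:
    """Strip SSNs and dollar amounts before the text leaves the network."""
    text = SSN.sub("REDACTED", text)
    return AMOUNT.sub("REDACTED", text)


def build_prompt(question: str, documents: list[str]) -> str:
    """Assemble the prompt from redacted documents and a redacted question."""
    context = "\n".join(redact_for_llm(d) for d in documents)
    return f"Context:\n{context}\n\nQuestion: {redact_for_llm(question)}"


doc = "John Smith (SSN: 123-45-6789) made a $4,200 payment on 1/5/2026."
print(redact_for_llm(doc))
# John Smith (REDACTED) made a REDACTED payment on 1/5/2026.
```

Only the string returned by `build_prompt` would be sent to the external API, so the raw document never crosses the network boundary.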

This is why dynamic data masking matters. Tools like Private AI and Google Cloud’s Vertex AI now use AI to detect not just patterns (like 9-digit numbers for SSNs) but context. Is “John” a name or a brand? Is “123-45-6789” an SSN or a product code? That’s where custom training kicks in.

Real-World Results and Failures

Companies that got it right saw huge wins:

- JPMorgan Chase’s pilot program achieved 99.2% compliance with FINRA rules.

- Mayo Clinic maintained 98.7% protection of protected health information (PHI).

- A global insurance company deployed it across 12,000 agents with 99.8% PII protection and 89% answer accuracy.

But failures were costly:

- A healthcare provider in Ohio failed to redact medical record numbers. Result: 14,000 patients exposed. $1.2 million fine from HHS.

- A financial firm used a commercial tool that missed redacting email addresses embedded in PDFs. Result: 23,000 customer emails leaked. Lawsuit filed.

Why did these happen? Poor validation. Gartner found that 61% of tested Privacy-Aware RAG systems failed to catch edge-case PII - like a phone number hidden in a comment field or a name written in a foreign format. You can’t just install a tool and forget it. You need continuous monitoring.
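One small, concrete source of edge-case misses is character encoding: digits and hyphens typed in fullwidth or other compatibility forms can slip past a pattern written for ASCII. A minimal sketch, assuming a single SSN-style pattern, is to apply Unicode NFKC normalization before matching. The `contains_ssn` helper is a hypothetical name, and normalization covers only this one class of misses.

```python
import re
import unicodedata

SSN = re.compile(r"\d{3}-\d{2}-\d{4}")


def normalize(text: str) -> str:
    """Fold compatibility characters (fullwidth digits, fullwidth hyphens) to plain forms."""
    return unicodedata.normalize("NFKC", text)


def contains_ssn(text: str) -> bool:
    """Check for an SSN-shaped string after normalization."""
    return SSN.search(normalize(text)) is not None


# "123-45-6789" written with fullwidth digits and fullwidth hyphen-minus signs.
fullwidth = "\uff11\uff12\uff13\uff0d\uff14\uff15\uff0d\uff16\uff17\uff18\uff19"

print(SSN.search(fullwidth) is None)  # the raw pattern misses it
print(contains_ssn(fullwidth))        # normalization exposes it
```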

Implementation Challenges

Setting up Privacy-Aware RAG isn’t plug-and-play. Here’s what you’ll face:

- Learning curve: Most companies need 8-12 weeks to get it right. Requires skills in NLP, data security, and LLM ops.

- Context-dependent data: Redacting “John’s SSN is 123-45-6789” requires understanding that “John” should stay for context while the number must go. Rule-based systems fail here. You need AI-trained entity recognition.

- Performance: Real-time redaction adds latency. Source document redaction reduces this but increases storage by 20-40%.

- Tool quality: Open-source kits have poor documentation (avg. 3.2/5 on GitHub). Commercial tools like Private AI score 4.6/5 for clarity.

Best practices from top implementers:

- Use layered redaction: combine prompt-only and source document privacy.

- Keep false negative rates below 0.5% - meaning fewer than 1 in 200 sensitive items slips through.

- Run quarterly adversarial tests: try to trick your system into leaking data.

- Validate with real data: don’t test on synthetic examples. Use anonymized versions of your actual documents.
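The false negative target in the list above is easy to measure once you have test documents with known sensitive values planted in them. Here is a minimal sketch; the `false_negative_rate` helper, the `naive_redact` function, and the two test cases are hypothetical and only show the shape of the calculation.

```python
import re
from typing import Callable

# (input text, sensitive values planted in it)
Case = tuple[str, list[str]]


def false_negative_rate(redact: Callable[[str], str], cases: list[Case]) -> float:
    """Fraction of planted sensitive values that survive redaction."""
    total = missed = 0
    for text, secrets in cases:
        cleaned = redact(text)
        for secret in secrets:
            total += 1
            if secret in cleaned:
                missed += 1
    return missed / total if total else 0.0


def naive_redact(text: str) -> str:
    """A deliberately weak redactor that only knows the dashed SSN format."""
    return re.sub(r"\d{3}-\d{2}-\d{4}", "[SSN]", text)


cases = [
    ("SSN: 123-45-6789", ["123-45-6789"]),  # caught
    ("SSN: 123 45 6789", ["123 45 6789"]),  # missed: spaces instead of dashes
]
rate = false_negative_rate(naive_redact, cases)
print(rate)          # 0.5
print(rate < 0.005)  # False: far above the 0.5% target
```

Running the same calculation after every change to the redaction rules turns the 0.5% target into a regression check rather than a one-off claim.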

Who’s Leading the Market?

The Privacy-Aware RAG market is growing fast - projected to hit $2.8 billion by 2026. Three types of players dominate:

- Embedding providers: OpenAI, Cohere - they now offer privacy features in their APIs. 45% market share.

- Specialized startups: Private AI, Lasso Security - focused only on privacy. 28% share.

- Enterprise platforms: Google Cloud, Salesforce - bundle it into their AI suites. 27% share.

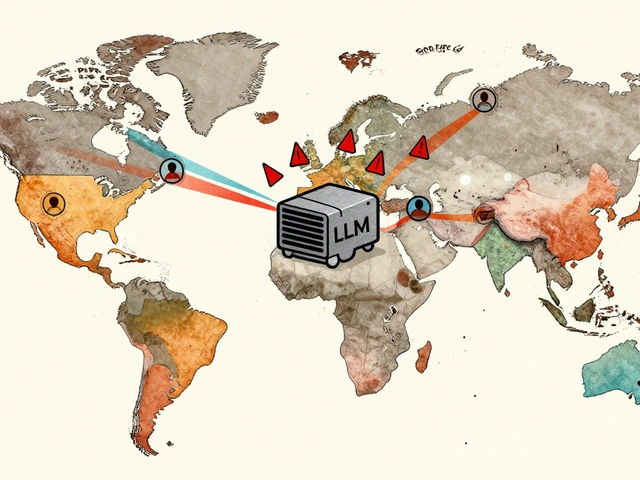

Adoption varies by industry:

- Financial services: 58% adoption

- Healthcare: 47%

- Government: 39%

- Retail: 22%

- Manufacturing: 18%

Why the gap? Regulated industries have strict rules. Retail doesn’t handle as much sensitive data - so they delay. But that’s changing. As consumer expectations rise and regulators crack down, even retailers will need to act.

What’s Next?

The next 18 months will define Privacy-Aware RAG’s future:

- Standardization: NIST is drafting RAG-specific privacy guidelines expected in Q2 2025.

- Global PII: Current tools are 76.4% accurate on non-English data (per Meta). That’s not enough for global companies.

- Fine-tuning risks: 68% of companies still expose data when fine-tuning models on internal documents.

- AI arms race: MIT predicts every new privacy technique has a 12-18 month window before attackers find ways around it.

One thing’s clear: Privacy-Aware RAG isn’t a trend. It’s the new baseline. By 2026, Gartner predicts 85% of enterprise RAG deployments will include privacy safeguards - up from just 32% in 2024.

If you’re building or using RAG today, ask yourself: Are you protecting data - or just pretending to?

Is Privacy-Aware RAG necessary for small businesses?

If your business handles any personally identifiable information - names, emails, addresses, payment details - then yes. Even small companies can face GDPR or CCPA fines. Privacy-Aware RAG isn’t just for enterprises. Tools like Private AI offer scalable plans starting under $500/month. The cost of a single data breach far outweighs the setup cost.

Can I use open-source tools for Privacy-Aware RAG?

You can, but with caution. Open-source frameworks like LangChain and LlamaIndex let you build Privacy-Aware RAG, but they are orchestration layers rather than full redaction engines. Expect to integrate tools like Presidio or custom NER models. Documentation is often poor, and testing is manual. For production use, especially in regulated industries, commercial solutions with built-in validation and support are more reliable.

Does Privacy-Aware RAG work with all LLMs?

Yes. Privacy-Aware RAG is an input layer - it works before the data reaches the LLM. Whether you’re using OpenAI’s GPT-4, Anthropic’s Claude, or an open-source model like Llama 3, the redaction happens on your side. The LLM never knows the difference. This makes it compatible with any model that accepts text input.
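Because the redaction sits in front of the model, it can be written as a wrapper around any function that takes a prompt and returns text. The sketch below assumes exactly that interface; `make_private`, `redact_ssn`, and `fake_llm` are hypothetical names, and `fake_llm` stands in for whichever client call you actually use.

```python
import re
from typing import Callable

LLM = Callable[[str], str]  # any function mapping a prompt to a response


def redact_ssn(text: str) -> str:
    """Illustrative redactor covering a single pattern."""
    return re.sub(r"\b\d{3}-\d{2}-\d{4}\b", "[SSN]", text)


def make_private(llm: LLM, redact: Callable[[str], str]) -> LLM:
    """Wrap an LLM call so every prompt is redacted before it is sent."""
    def private_llm(prompt: str) -> str:
        return llm(redact(prompt))
    return private_llm


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model client; it just echoes what it received."""
    return f"ECHO: {prompt}"


ask = make_private(fake_llm, redact_ssn)
print(ask("Customer SSN is 123-45-6789"))
# ECHO: Customer SSN is [SSN]
```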

How do I test if my Privacy-Aware RAG is working?

Run adversarial tests. Feed your system documents with hidden PII - like a Social Security number in a comment field, or a credit card number in a footnote. Then check if it was redacted. Use automated tools that scan outputs for leaked data. Set up alerts if any sensitive strings appear in logs or responses. Aim for false negative rates below 0.5%. Also, audit your vector database: ensure no raw sensitive documents are stored.
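One simple way to automate the output check is to plant known fake values ("canaries") in test documents and then scan responses and logs for them. The following is a minimal sketch under that assumption; `find_leaks` and the sample strings are hypothetical.

```python
from typing import Iterable


def find_leaks(outputs: Iterable[str], canaries: set[str]) -> list[tuple[int, str]]:
    """Return (output index, canary) for every planted value found in an output."""
    leaks = []
    for index, output in enumerate(outputs):
        for canary in sorted(canaries):
            if canary in output:
                leaks.append((index, canary))
    return leaks


# Fake values planted in test documents before running queries.
canaries = {"123-45-6789", "4111 1111 1111 1111"}

responses = [
    "The latest payment was made on January 5, 2026.",
    "The card on file is 4111 1111 1111 1111.",
]
print(find_leaks(responses, canaries))
# [(1, '4111 1111 1111 1111')]
```

The same function can be pointed at log lines instead of responses, and a non-empty result is a natural trigger for an alert.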

What’s the biggest mistake people make with Privacy-Aware RAG?

Assuming the tool does everything for them. Many companies install a redaction product, run a few tests, and declare victory. But real-world data is messy. Names are misspelled. Numbers are formatted differently. Context changes. Without continuous monitoring, adversarial testing, and team training, your system will fail. Privacy-Aware RAG isn’t a one-time setup - it’s an ongoing process.

Tonya Trottman

13 January, 2026 - 04:55 AM

Okay but let’s be real - if your redaction tool misses a phone number tucked inside a PDF comment field, you’re already late to the party. I’ve seen this happen three times at my old job. One guy used regex for SSNs but forgot about international formats. Then boom - 300 patient records leaked because someone typed ‘+91-98765-43210’ instead of ‘(987) 654-3210’. Tools aren’t magic. You need humans reviewing edge cases. And no, ‘we ran a test’ doesn’t cut it.

Rocky Wyatt

14 January, 2026 - 03:34 AM

This whole thing is just corporate theater. You think redacting data makes you safe? Nah. The LLM still *sees* it. Even if you scrub it, the model’s training data is full of this crap. You’re just playing whack-a-mole with your compliance officer. Real privacy? Don’t use third-party LLMs at all. Air gap it. Burn the data after use. Or better yet - don’t build a bot that answers medical questions. Let a human do it. They’re cheaper and less likely to hallucinate a patient’s diagnosis.

Santhosh Santhosh

14 January, 2026 - 03:25 PM

I work in a small clinic in Kerala and we just implemented a basic version of this last month. We used Presidio + LangChain, and yes, it was a nightmare. Documentation was awful, and we had to train our own NER model because the default one kept flagging ‘Diabetes Type 2’ as PII. But after two weeks of tweaking, we got it down to 0.3% false negatives. The best part? Our patients actually thanked us. One lady said, ‘I didn’t know you cared enough to hide my details.’ That’s worth the headache. Don’t underestimate how much trust this builds - even if you’re not a Fortune 500.

ujjwal fouzdar

15 January, 2026 - 03:36 AM

Let me ask you something deeper - if we’re so obsessed with scrubbing data from AI systems, why are we still feeding it our entire digital lives on social media? We’re terrified of a hospital bot seeing our SSN but we post our vacation photos with geotags, birthdates, and kids’ names tagged in real time. This isn’t about privacy. It’s about control. We want to feel like we’re the ones deciding what’s hidden, not the corporations. But the truth? We’re all complicit. The real revolution isn’t in redaction layers - it’s in refusing to participate in data capitalism altogether. And that’s a much harder conversation.

Anand Pandit

15 January, 2026 - 09:19 AM

Great breakdown! I just want to add - if you’re using open-source tools, don’t skip the adversarial testing. We built our own pipeline with Presidio and thought we were golden until someone slipped in ‘Cust#12345’ as a customer ID and it flew right through. Turned out the regex didn’t catch ‘Cust#’ prefixes. We fixed it by adding custom patterns and now we run weekly tests with fake data that includes typos, unicode chars, and reversed numbers. Took a few days but saved us from a potential lawsuit. Seriously, just don’t assume the tool knows everything. You gotta be the safety net.

Reshma Jose

16 January, 2026 - 04:24 AM

Y’all are overcomplicating this. Just use Google’s Vertex AI. It’s got built-in redaction, works with any LLM, and their docs don’t read like a PhD thesis written in broken English. We switched from a DIY mess to Vertex in two weeks. Accuracy didn’t drop. Compliance audit passed with flying colors. Cost? Less than our old coffee budget. Stop reinventing the wheel. If you’re not a startup with 10 engineers, use the damn enterprise tools. They exist for a reason.

rahul shrimali

16 January, 2026 - 08:36 PM

Redaction works but only if you test it with real data. Fake examples are useless. We used real patient records with misspelled names and handwritten notes scanned as PDFs. The tool missed 12% at first. Fixed it by training on our own data. Now it’s 99.7%. No magic. Just work. And yes - it’s worth it. One breach and you’re done. Period.

Eka Prabha

17 January, 2026 - 02:24 PM

Let me be blunt - this entire Privacy-Aware RAG movement is a distraction orchestrated by SaaS vendors to sell you overpriced compliance widgets. The real issue? AI models are trained on stolen data. Your redaction layer is a Band-Aid on a bullet wound. The LLMs you’re using were trained on scraped hospital forums, leaked insurance databases, and private medical blogs. You’re scrubbing your inputs while the model’s memory is full of your patients’ PHI. This isn’t security - it’s performative ethics. And the regulators? They know. They just don’t have the bandwidth to shut it down yet. Wake up. The system is rigged.

Bharat Patel

18 January, 2026 - 04:27 AM

There’s a quiet beauty in this idea - that we can build systems that respect human dignity without sacrificing utility. We don’t need to see someone’s SSN to know they need insulin. We don’t need their credit card number to tell them their payment was processed. The question isn’t ‘how much data can we send to the AI?’ - it’s ‘how little can we send and still help?’ That’s not just technical. It’s philosophical. It’s about redefining what care means in the age of machines. Maybe the answer isn’t more data… but less.

Bhagyashri Zokarkar

19 January, 2026 - 02:20 PM

i mean like… why even bother? like sure redaction sounds cool but like what if the llm just remembers everything from training? like my cousin works at a hospital and they used one of these tools and then a patient asked ‘did i have surgery in 2021?’ and the bot said ‘yes, you had a knee replacement on 3/14/2021’ - and the patient was like ‘how do you know that?’ and they were like ‘uhhh…’ and then they realized the model had memorized the data from training. so now like… what’s the point? we’re just pretending to protect people while the AI is still leaking our secrets from 5 years ago. i’m just saying… maybe we should stop using ai for this stuff entirely? like… just… let humans do it?