Retrieval-Augmented Generation (RAG) sounds like a fix for all of a large language model’s problems. You give it a question, it pulls in fresh, relevant documents, and spits out an answer grounded in real data. No more made-up facts. No more outdated info. Sounds perfect, right? But here’s the uncomfortable truth: RAG is breaking in ways that are quiet, subtle, and terrifyingly hard to catch.

Most teams think they’re doing RAG right. Their system returns answers fast. Their metrics look good. Average precision? High. Latency? Acceptable. But behind the scenes, the system is quietly hallucinating citations, ignoring key context because it’s buried in the middle of a document, or pulling in conflicting information and just picking one at random. And users? They don’t notice until the answer is wrong-sometimes in ways that cost money, time, or even safety.

When the Retrieval System Lies Without Saying a Word

The biggest lie RAG tells isn’t in the answer. It’s in the silence. You think the system retrieved the right documents. You think it used them. But what if it didn’t?

One of the most common silent killers is retrieval timing attacks. Imagine a user asks a question. The system starts fetching documents from a database. But because the backend is overloaded, those documents arrive 200 milliseconds after the model has already generated its response. The model never sees them. It answers based on its training data alone. No error log. No alert. Just a wrong answer, dressed up like a correct one. In high-traffic systems, this happens in 12-15% of queries, according to Maxim AI’s 2023 analysis.

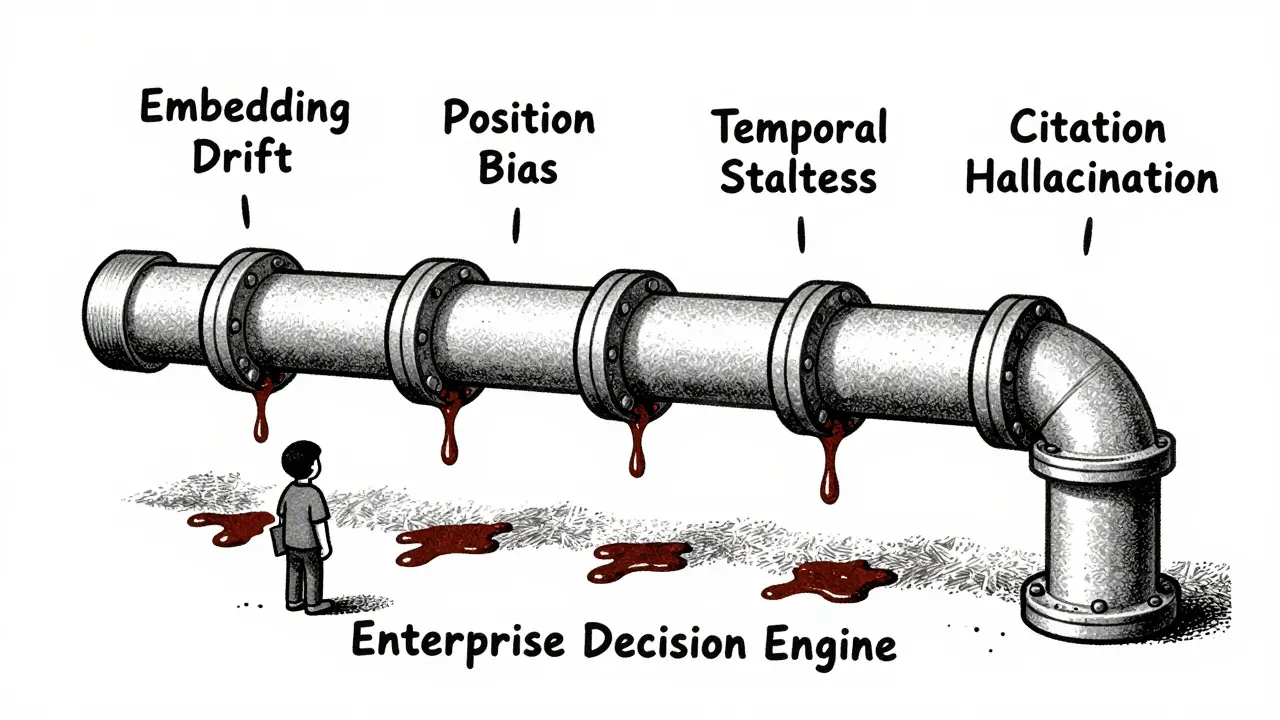

Then there’s context position bias. Large language models don’t treat all retrieved text equally. They pay way more attention to the first few lines and the last few lines. If the critical piece of information is in the middle? It gets ignored. Studies show this can tank performance on complex queries by up to 37%. You could have the perfect document, but if your chunking puts the key sentence in position 4 of 12, the model might as well not have seen it.

Embedding Drift: The Slow Poison

Embedding models turn text into numbers. Those numbers help the system find similar content. But what happens when you update your embedding model without reindexing your documents?

That’s embedding drift. The new model understands language differently. Your old documents are still stored with old numbers. The search system now matches queries to documents based on mismatched meanings. Over 3-6 months, relevance drops by 22-28%, measured by Mean Reciprocal Rank. Users start getting answers that feel off-not wrong, just… off. They don’t know why. You don’t know why. The system still works. It just works worse. And no one notices until the complaints pile up.

And it’s not just about outdated models. Dense embeddings compress meaning. They lose nuance. “I like going to the beach” and “I don’t like going to the beach” might get mapped to nearly identical vectors. The system can’t tell the difference. That’s not a bug. It’s a fundamental limitation of how vector search works. And it causes 31% of semantic retrieval failures, according to Snorkel AI’s 2024 findings.

Multi-Hop Reasoning: The Missing Link

Some questions need more than one fact. “What was the impact of the 2023 Fed rate hike on small business loan approvals?”

A good RAG system should find one document about the rate hike, another about loan approval trends, and then connect them. But most systems fail here. They retrieve both documents. They pass both to the model. And the model just answers based on the first one it saw. Or it makes up a connection. This multi-hop reasoning failure affects 41% of complex queries. The system isn’t broken. It’s just not thinking. It’s not synthesizing. It’s just regurgitating.

And when documents contradict each other? That’s cross-document contradiction. One source says sales increased 12%. Another says they dropped 8%. The model doesn’t resolve it. It picks one. Or it blends them into a new, false number. This happens in 24% of multi-source RAG systems. And again-no red flags. No alerts. Just a wrong answer that sounds plausible.

Citation Hallucination: The Fake Footnote

Nothing undermines trust faster than a fake citation. You ask for a source. The system gives you a paper title, a journal name, a DOI-and it’s all made up.

Citation hallucination is rampant. ApX Machine Learning found it in 33% of enterprise RAG systems. The model sees a document that mentions “a 2022 study by Smith et al.” It doesn’t know if Smith et al. actually wrote that. It just knows the phrase is common. So it generates a citation that sounds real. Reddit threads are full of users reporting this. One user described a corporate knowledge bot fabricating 28% of its citations-even when the source material was right there.

Why does this happen? Because the model isn’t being told to stick to the retrieved text. It’s being told to be helpful. And “helpful” often means sounding authoritative-even if that means making things up.

The Silent Saboteur: Negative Interference

You’d think more context is always better. But it’s not.

Negative interference happens when irrelevant documents are pulled in-and they actively mislead the model. Experiments show that injecting just 25% irrelevant content into the context window can drop answer accuracy by 19%. Why? The model tries to make sense of it all. It weighs everything. And if the irrelevant text has similar keywords, it gets confused. “The company reported profits of $2M last quarter” might be mixed with “The company reported losses of $2M last quarter.” The model doesn’t know which is true. So it guesses. And it guesses wrong.

This is especially dangerous in enterprise systems that pull from messy, uncurated data lakes. You don’t need bad data. You just need too much data. And the model doesn’t know how to filter.

Temporal Staleness: The Forgotten Date

“What’s the current FDA approval status for Drug X?”

You retrieve a document from 2021. It says “under review.” But in 2024, it was approved. The model doesn’t know that. It doesn’t know dates. It just sees text. And it answers based on the oldest, most confident-looking source.

Temporal staleness affects 29% of time-sensitive queries. Systems without date-aware filtering are ticking time bombs. In healthcare, finance, legal-where accuracy depends on recency-this isn’t a bug. It’s a liability.

How to Catch These Failures Before They Cost You

Traditional monitoring won’t save you. Average precision? Mean average precision? These metrics are great for academic papers. They’re useless in production.

You need to monitor the process, not just the output.

- Trace every step. Log when each document was retrieved. How long did it take? Did it arrive before generation? If not, flag it.

- Monitor embedding drift. Use a fixed test set. Run it weekly. If MRR drops more than 7%, reindex.

- Test for position bias. Shuffle the order of retrieved documents. If accuracy changes by more than 15%, your model is too sensitive to position.

- Check for citation hallucination. Automate validation: does every cited source actually appear in the retrieved documents? If not, flag it.

- Run contradiction tests. Feed the system two conflicting documents. Does it acknowledge the conflict? Or just pick one?

And don’t forget human-in-the-loop validation. A single expert reviewing 50 random outputs per week can catch 53% more failures than automated tools alone, according to Barnett et al. (2024). No system is perfect. But a system that checks itself-and lets humans spot the gaps-is one that can be trusted.

What’s Next: The Rise of RAG Observability

Five years ago, no one talked about RAG monitoring. Today, it’s a $287 million market by 2026, according to Gartner.

Companies like Maxim AI and Snorkel AI are building tools specifically for this. They detect recursive loops where the system keeps fetching the same document. They flag chunking issues. They simulate failure modes before deployment.

By 2026, 85% of enterprise RAG systems will have specialized observability layers. Right now, only 19% do. The gap isn’t just technical. It’s cultural. Teams still treat RAG like a plug-and-play feature. It’s not. It’s a complex, fragile pipeline. And like any pipeline, it leaks.

If you’re using RAG, you’re not just building a chatbot. You’re building a decision engine. And if it’s silently wrong, you’re making bad decisions-every day.

Fixing RAG Isn’t About Bigger Models

It’s about better data. Better prompts. Better checks.

You don’t need a bigger LLM. You need to know when your retrieval system is lying. You need to know when context is buried. You need to know when the model is ignoring what you gave it. You need to know when the documents contradict each other.

That’s not a feature. That’s a requirement.

Start monitoring the process. Not just the output. Because the answer you get today might be wrong. And you won’t know until it’s too late.

Robert Byrne

14 January, 2026 - 16:41 PM

This is the most accurate breakdown of RAG failures I’ve seen in years. That retrieval timing attack? I’ve seen it in production-our chatbot started giving wrong drug interaction warnings because the docs arrived late. No logs, no alerts. Just silent, deadly errors. We lost a client because of it. Stop treating RAG like a magic box. It’s a house of cards made of brittle APIs and lazy chunking.

Tia Muzdalifah

15 January, 2026 - 13:40 PM

omg i just realized why our support bot keeps giving weird answers… like yesterday someone asked about refund policy and it said ‘we dont do refunds’ but the doc clearly said ‘30 day window’… turns out the key line was in position 7 of 12 🤦♀️. context position bias is real. also why do i keep getting citations to papers that dont exist??

Zoe Hill

15 January, 2026 - 15:33 PM

Y’all are so right about citation hallucination. I was testing a legal RAG tool last week and it cited a Supreme Court case that never existed-complete with fake docket number. I checked the source docs. Nothing. The model just… made it up. Like, why? Because it thought sounding authoritative = helpful? That’s not helpful. That’s dangerous. We need to lock the model down to ‘only use what’s retrieved’ and kill the ‘be helpful’ prompt. No more creative writing in enterprise systems.

Also, embedding drift? My team just reindexed after 6 months and our MRR jumped 21%. We had no idea. It’s like our system was slowly going blind.

Albert Navat

17 January, 2026 - 10:19 AM

Let’s be real-this isn’t a RAG problem, it’s a data engineering problem. You’re throwing unstructured garbage from your data lake into a vector DB and expecting semantic coherence? That’s not failure, that’s negligence. You need schema-aware chunking, metadata tagging, temporal watermarking, and a retrieval pipeline that enforces freshness thresholds. And stop using dense embeddings for negation-heavy domains. Use sparse + hybrid. The fact that 31% of semantic failures are due to ‘like’ vs ‘dislike’ vector collapse is embarrassing. This is 2025. We have better tools. Stop outsourcing critical reasoning to a model that doesn’t understand context, dates, or contradiction. Build guardrails. Or get out of the game.

King Medoo

18 January, 2026 - 15:01 PM

I’ve been saying this for years. 🚨 RAG is not a feature. It’s a liability waiting to explode. 🤯 And yet, every company I’ve worked with treats it like a plug-in for their CRM. They don’t monitor retrieval latency. They don’t validate citations. They don’t test for contradiction. They just deploy and pray. 😔 And then when the CFO gets a wrong market forecast because the model blended two conflicting reports? They blame the LLM. NO. It’s the pipeline. It’s the lack of observability. It’s the culture of ‘it works on dev.’ 🛠️ We need RAG-specific SLOs. We need failure injection testing. We need human audits. And we need to stop calling it ‘AI’ like it’s magic. It’s code. It’s data. It’s brittle. And if you’re not monitoring the *process*, you’re not just sloppy-you’re irresponsible. 📉 85% of enterprise systems will have observability by 2026? Good. It’s about damn time. 💪