Data Privacy in AI: Protect User Data in PHP Apps with Secure LLM Integration

When you build AI features into your PHP app, data privacy (the practice of protecting user information from misuse, unauthorized access, or exposure, also known as information privacy) is no longer optional. It’s the line between legal compliance and costly lawsuits. Every time your app sends user data to an LLM like GPT or Claude, you’re handling sensitive material, which makes you responsible for how it’s stored, transmitted, and used. And regulators aren’t waiting: California’s AI laws, the EU’s GDPR, and even Illinois’ deepfake rules now treat AI data handling as strictly as financial data.

It’s not just about hiding data. It’s about controlling it. Confidential computing, also known as encryption-in-use, keeps data encrypted and isolated while it’s being processed, even on cloud servers, by running the workload inside a hardware-based trusted execution environment (TEE). It lets you run LLMs on user data without exposing the raw input to the cloud provider. Tools like NVIDIA’s GPU TEEs and Azure Confidential Computing make this possible, and they’re increasingly common in enterprise PHP apps that handle healthcare, finance, or personal messages.

Then there’s enterprise data governance, also called AI data compliance: the framework that tracks where training data came from, who accessed it, and how it’s being used. It’s what keeps your LLM deployment from accidentally leaking customer emails or credit card numbers. Without it, even the smartest model becomes a liability.
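To make the governance idea concrete, here is a minimal PHP sketch of an append-only audit trail for LLM calls. It is an illustration under stated assumptions, not how Microsoft Purview or any specific governance product works: the functions `recordLlmAccess()` and `verifyAuditChain()` and the record fields are hypothetical. Each record notes who sent data, for what purpose, and to which provider, but stores only a SHA-256 digest of the payload, and it embeds the previous entry’s hash so that later edits to old records are detectable.

```php
<?php
// Hypothetical helper: append an audit record for a payload sent to an LLM.
// Only a SHA-256 digest of the payload is stored, never the raw text.
// $timestamp is a parameter for testability; pass time() in real code.
function recordLlmAccess(array $log, string $userId, string $purpose, string $provider, string $payload, int $timestamp): array
{
    // The first entry points at an all-zero "genesis" hash.
    $prevHash = $log === [] ? str_repeat('0', 64) : $log[count($log) - 1]['entry_hash'];
    $entry = [
        'user_id'      => $userId,
        'purpose'      => $purpose,
        'provider'     => $provider,
        'payload_hash' => hash('sha256', $payload),
        'timestamp'    => $timestamp,
        'prev_hash'    => $prevHash,
    ];
    // Hash the whole record (including prev_hash) to chain it to its predecessor.
    $entry['entry_hash'] = hash('sha256', json_encode($entry, JSON_THROW_ON_ERROR));
    $log[] = $entry;
    return $log;
}

// Recompute every hash and confirm each entry points at the one before it.
function verifyAuditChain(array $log): bool
{
    $expectedPrev = str_repeat('0', 64);
    foreach ($log as $entry) {
        $storedHash = $entry['entry_hash'];
        unset($entry['entry_hash']); // hash was computed without this field
        if ($entry['prev_hash'] !== $expectedPrev) {
            return false;
        }
        if (hash('sha256', json_encode($entry, JSON_THROW_ON_ERROR)) !== $storedHash) {
            return false;
        }
        $expectedPrev = $storedHash;
    }
    return true;
}

// Usage: two calls logged, then an edit to an old record is detected.
$log = recordLlmAccess([], 'user-42', 'support-summary', 'example-llm-provider', 'Customer email text', 1700000000);
$log = recordLlmAccess($log, 'user-7', 'ticket-triage', 'example-llm-provider', 'Another message', 1700000060);
var_dump(verifyAuditChain($log)); // bool(true)
$log[1]['purpose'] = 'marketing'; // simulate tampering
var_dump(verifyAuditChain($log)); // bool(false)
```

Hash chaining only detects tampering; it does not prevent it. In practice you would also write such a log to storage that the application itself cannot rewrite.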

You can’t just slap on a privacy policy and call it done. Real data privacy means asking: Are you using RAG to keep data inside your own system? Are your containers signed and scanned for malware? Are you tracking MTTR when a data breach is suspected? These aren’t buzzwords; they’re the daily checks that separate safe apps from risky ones. The posts here go beyond theory. They show you how to lock down LLMs in production, how to measure policy adherence, how to use tools like Microsoft Purview and Databricks, and how to avoid fines that can wipe out a startup.
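MTTR is defined in several ways (mean time to respond, recover, or repair). The sketch below assumes "respond" means the time from detecting a suspected data exposure to containing it, and that incidents are plain arrays with hypothetical `detected_at` and `contained_at` ISO 8601 fields; `meanTimeToRespondMinutes()` is an illustrative helper, not part of any tool named above. Incidents that are still open are skipped, which is one choice among several; you may prefer to report them separately so they are not hidden.

```php
<?php
// Mean time to respond, in minutes, over incidents that have been contained.
// Field names 'detected_at' and 'contained_at' are illustrative assumptions.
function meanTimeToRespondMinutes(array $incidents): ?float
{
    $durations = [];
    foreach ($incidents as $incident) {
        if (!isset($incident['detected_at'], $incident['contained_at'])) {
            continue; // still open: excluded from the mean
        }
        $detected  = new DateTimeImmutable($incident['detected_at']);
        $contained = new DateTimeImmutable($incident['contained_at']);
        $durations[] = ($contained->getTimestamp() - $detected->getTimestamp()) / 60;
    }
    // No closed incidents means there is nothing to average.
    return $durations === [] ? null : array_sum($durations) / count($durations);
}

$incidents = [
    ['detected_at' => '2024-03-01T09:00:00Z', 'contained_at' => '2024-03-01T10:30:00Z'], // 90 min
    ['detected_at' => '2024-03-05T14:00:00Z', 'contained_at' => '2024-03-05T14:30:00Z'], // 30 min
    ['detected_at' => '2024-03-09T08:00:00Z'],                                           // still open
];
echo meanTimeToRespondMinutes($incidents), PHP_EOL; // 60
```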

Whether you’re a solo dev or running a SaaS with thousands of users, the rules are the same: protect data like it’s cash. And if you’re building AI into PHP, you’re already in the crosshairs. The good news? You don’t need to be a lawyer or a security expert to get it right. The posts below cover real, working strategies, from code-level controls to compliance checklists, that developers are using today to stay safe, legal, and trusted.

Privacy-Aware RAG: How to Protect Sensitive Data When Using Large Language Models

Privacy-Aware RAG protects sensitive data in AI systems by removing PII before it reaches large language models. Learn how it works, why it's critical for compliance, and how to implement it without losing accuracy.
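The core move in privacy-aware RAG is stripping PII before text reaches the model. Here is a minimal PHP sketch, assuming regex detection is good enough for illustration; `redactPii()` and `restorePii()` are hypothetical helpers, not part of any library. Each match is swapped for a numbered placeholder and the mapping stays on your server, so the model’s answer can be re-personalized locally. The two patterns only catch email addresses and phone-like digit runs; they will miss names, addresses, and IDs and can misfire on things like dates, which is why production systems usually add dedicated PII detectors.

```php
<?php
// Replace emails and phone-like numbers with placeholders such as [EMAIL_1].
// Returns the redacted text plus a placeholder => original map kept server-side.
function redactPii(string $text): array
{
    $patterns = [
        'EMAIL' => '/[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/',
        'PHONE' => '/\+?\d[\d\s().-]{7,}\d/', // crude: 9+ digits/separators
    ];
    $map = [];
    $counter = 0; // shared across patterns so every placeholder is unique
    foreach ($patterns as $label => $regex) {
        $text = preg_replace_callback($regex, function (array $m) use ($label, &$map, &$counter): string {
            $placeholder = sprintf('[%s_%d]', $label, ++$counter);
            $map[$placeholder] = $m[0];
            return $placeholder;
        }, $text);
    }
    return ['text' => $text, 'map' => $map];
}

// Put the original values back into the model's response.
function restorePii(string $text, array $map): string
{
    return strtr($text, $map);
}

$r = redactPii('Contact jane.doe@example.com or +1 555 123 4567 about the refund.');
echo $r['text'], PHP_EOL;
// Contact [EMAIL_1] or [PHONE_2] about the refund.

$answer = 'I have emailed [EMAIL_1] with the refund details.'; // stand-in for an LLM reply
echo restorePii($answer, $r['map']), PHP_EOL;
// I have emailed jane.doe@example.com with the refund details.
```

Keeping placeholders typed and numbered (rather than deleting PII outright) preserves sentence structure, which helps the model answer as if the data were present.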

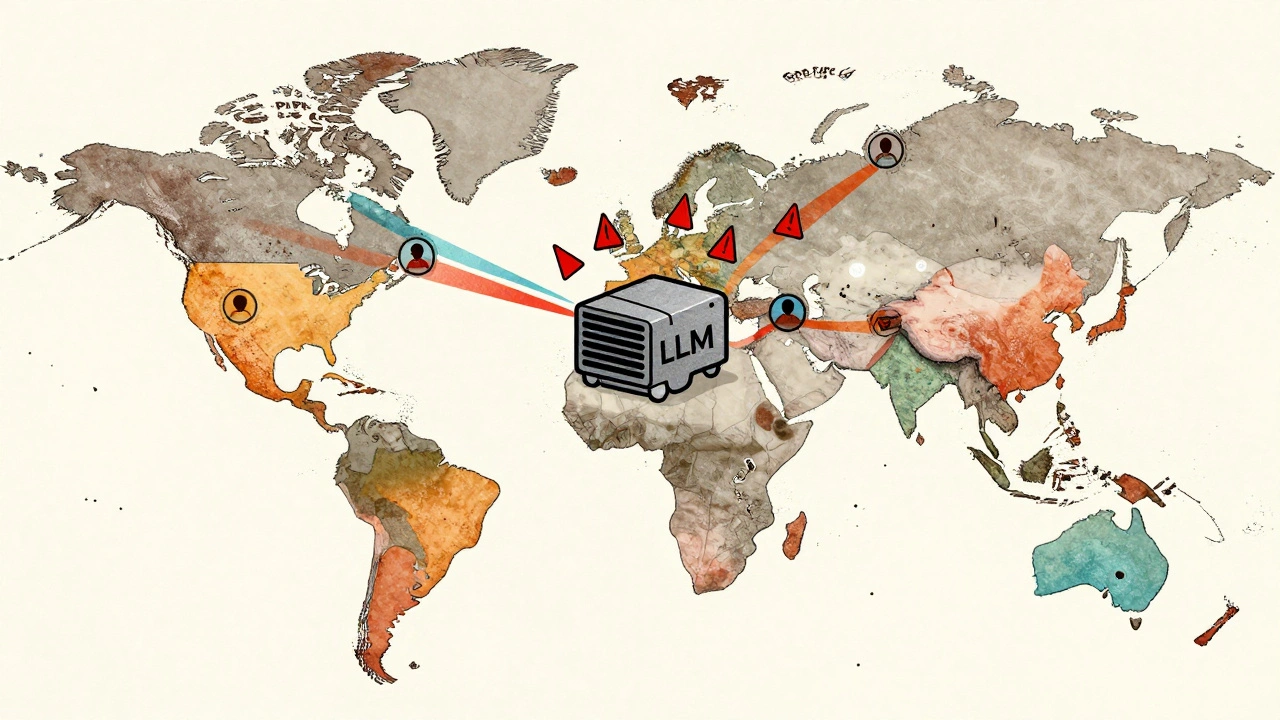

Data Residency Considerations for Global LLM Deployments

Data residency rules for global LLM deployments vary by country and can lead to heavy fines if ignored. Learn how to legally deploy AI models across borders without violating privacy laws like GDPR, PIPL, or LGPD.
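One common way to respect residency rules is to route each request to an LLM endpoint in an approved region and fail closed when none exists. The sketch below assumes a hand-maintained policy table; the country-to-region mappings and the `example.com` endpoints are invented for illustration and are not legal guidance on GDPR, PIPL, or LGPD. `selectLlmEndpoint()` is a hypothetical helper.

```php
<?php
// Pick an LLM endpoint whose region is allowed for the user's country.
// Throws instead of falling back, so a gap in policy never becomes a
// silent cross-border transfer.
function selectLlmEndpoint(string $countryCode, array $policy, array $endpoints): string
{
    if (!array_key_exists($countryCode, $policy)) {
        throw new RuntimeException("No residency policy defined for {$countryCode}.");
    }
    // Regions are listed in order of preference.
    foreach ($policy[$countryCode] as $region) {
        if (array_key_exists($region, $endpoints)) {
            return $endpoints[$region];
        }
    }
    throw new RuntimeException("No deployed endpoint satisfies residency rules for {$countryCode}.");
}

// Illustrative policy: country code => regions allowed to process its data.
$policy = [
    'DE' => ['eu'],
    'FR' => ['eu'],
    'US' => ['us'],
    'CN' => ['cn'],
];
// Illustrative deployments: note there is no 'cn' endpoint.
$endpoints = [
    'eu' => 'https://eu.llm.example.com/v1',
    'us' => 'https://us.llm.example.com/v1',
];

echo selectLlmEndpoint('DE', $policy, $endpoints), PHP_EOL; // https://eu.llm.example.com/v1
try {
    selectLlmEndpoint('CN', $policy, $endpoints);
} catch (RuntimeException $e) {
    echo $e->getMessage(), PHP_EOL; // No deployed endpoint satisfies residency rules for CN.
}
```

Failing closed is the key design choice here: a missing policy entry or an undeployed region surfaces as an error you can see, rather than a request quietly sent to a default region.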
