AI Compliance: Laws, Governance, and Responsible Deployment for PHP Developers

When you deploy an AI model in a PHP app, you're not just writing code. You're taking on AI compliance: the legal, ethical, and operational rules that govern how artificial intelligence is used in real-world systems. Closely related to responsible AI, it's what keeps your chatbot from leaking private data, your content filter from censoring harmless speech, and your company from paying fines that, under GDPR alone, can reach €20 million or 4% of global annual turnover. This isn't theoretical. California's AI transparency rules require disclosure when users interact with AI-generated content. The EU's AI Act requires chatbots to tell users they are talking to an AI, and it treats systems used in sensitive areas such as hiring or credit scoring as high-risk. And if you're selling to enterprises, they'll demand proof that you follow enterprise data governance, the structured process of managing how training data is sourced, tracked, and secured during LLM deployment. Without that proof, they won't sign the contract.

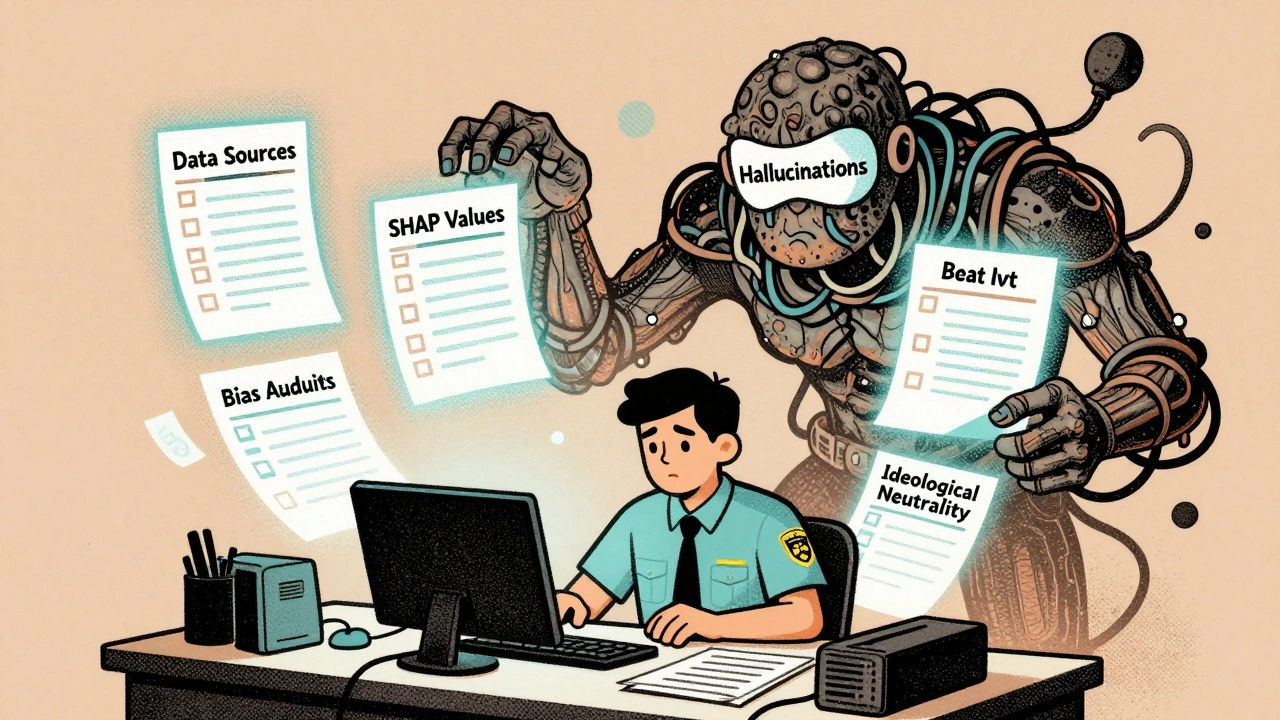

Most developers think compliance means adding a privacy policy and calling it done. But real compliance starts before you even call the OpenAI API. It's about knowing where your training data came from, whether your model can be exported under U.S. export-control rules, and whether your users' prompts are being logged in a way that violates GDPR. It's about content safety: automated systems that detect and block harmful, biased, or illegal outputs from generative AI before they reach a customer. And it's about policy adherence: measuring how consistently your team follows internal rules on AI use, from prompt engineering to data retention, in every sprint. These aren't nice-to-haves. They're the difference between scaling smoothly and getting shut down by regulators.

What you'll find here isn't a list of legal jargon. It's a practical collection of guides written by engineers who've lived through audits, compliance failures, and last-minute policy changes. You'll learn how to build RAG systems that keep your data private, how to autoscale without blowing your cloud budget under new usage-based pricing, and how to use confidential computing to protect both your model and your users' inputs. You'll see real examples from companies that got fined, and from those that turned compliance into a competitive edge. This is for developers who need to ship AI features without becoming legal experts. No fluff. No theory. Just what works when the clock is ticking and the lawyers are asking questions.

Governance Policies for LLM Use: Data, Safety, and Compliance in 2025

In 2025, U.S. governance policies for LLMs demand strict controls on data, safety, and compliance. Federal policy prioritizes innovation, while states such as California enforce stricter safeguards. Know your obligations before you deploy.

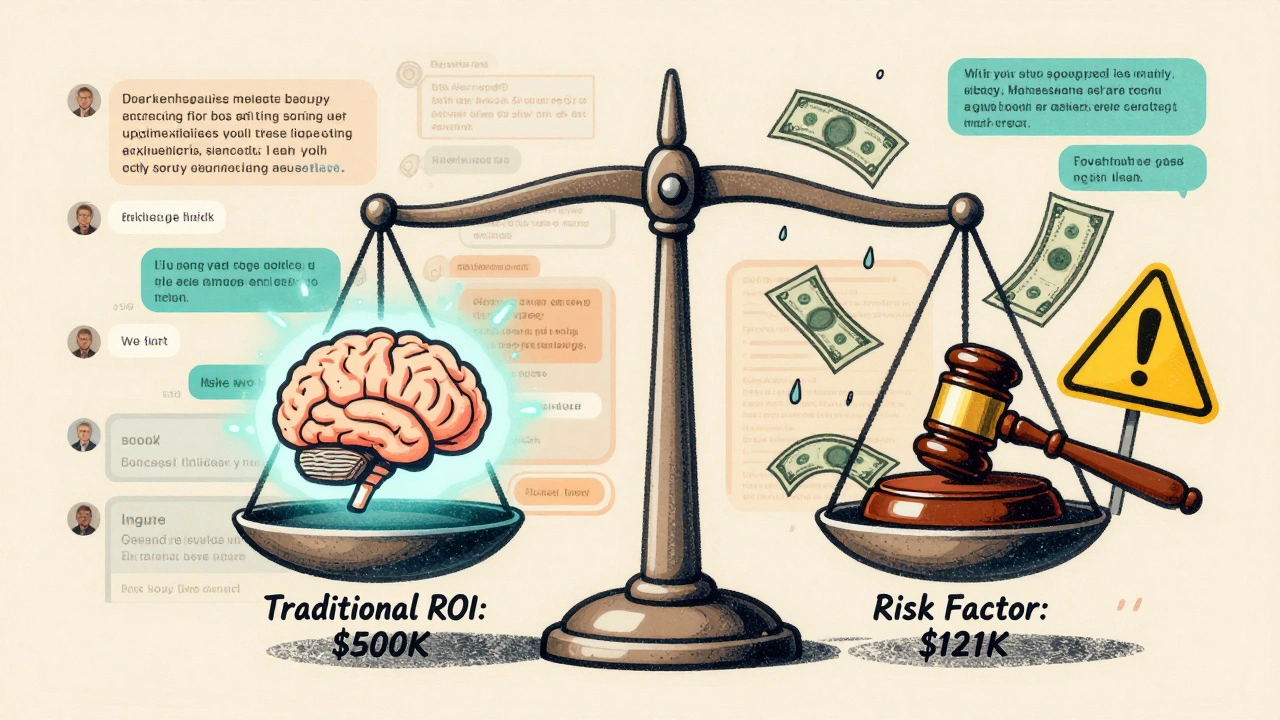

Risk-Adjusted ROI for Generative AI: How to Calculate Real Returns with Compliance and Controls

Risk-adjusted ROI for generative AI accounts for compliance costs, legal risks, and control measures to give you a realistic return forecast. Learn how to calculate it and avoid costly mistakes.
