Generative AI doesn’t make mistakes like humans do. It doesn’t forget, get tired, or misread a question. But it hallucinates. Constantly. It invents phone numbers, fabricates legal citations, spins up fake websites, and confidently states things that never happened. And if you’re using AI to draft emails, generate medical summaries, or build customer support bots, those hallucinations aren’t just embarrassing; they can be dangerous.

That’s where post-processing validation comes in. It’s not a fancy add-on. It’s the last line of defense before your AI’s output hits a real person. Think of it like a spellchecker on steroids, but instead of catching typos, it’s hunting down lies.

Why Rules and Regex Are Your First Line of Defense

Before you even think about complex machine learning models, start with the simplest tool: rules. Regular expressions (regex) are fast, predictable, and perfect for catching structured lies. If your AI gives a customer’s email as an address at companyx.com and you know companyx doesn’t exist, a regex pattern checked against your list of known domains can flag it instantly.

LivePerson’s system, which handles over 2.4 million validation checks daily, uses regex to scan for:

- Phone numbers that don’t match country formats

- Emails with invalid domains (like @gmail.co or @company.fake)

- URLs pointing to non-existent or suspicious domains

- SSN, credit card, or date formats that violate basic math (e.g., February 30th)
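A minimal Python sketch of such a regex layer follows. The known-domain allowlist, the ISO `YYYY-MM-DD` date format, and the card-number pattern are illustrative assumptions, not LivePerson's implementation, and SSN rules are omitted. Regex only finds the candidates; the "basic math" (whether a date exists on the calendar, whether a card number passes its Luhn checksum) is checked in ordinary code.

```python
import re
from datetime import date

# Hypothetical allowlist: in practice this would come from your CRM or a
# domain-verification step, not a hard-coded set.
KNOWN_EMAIL_DOMAINS = {"gmail.com", "outlook.com"}

EMAIL_RE = re.compile(r"\b[\w.+-]+@([A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+)\b")
ISO_DATE_RE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")  # 13-19 digits, optional separators


def luhn_ok(number: str) -> bool:
    """Return True if the digits pass the Luhn checksum used by payment cards."""
    total = 0
    digits = [int(ch) for ch in number if ch.isdigit()]
    # Double every second digit from the right; subtract 9 when the result exceeds 9.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def date_ok(year: str, month: str, day: str) -> bool:
    """Return True if the triple is a real calendar date (rejects February 30th)."""
    try:
        date(int(year), int(month), int(day))
    except ValueError:
        return False
    return True


def regex_findings(text: str) -> list[str]:
    """Scan model output and return a reason for every suspicious match."""
    findings = []
    for m in EMAIL_RE.finditer(text):
        if m.group(1).lower() not in KNOWN_EMAIL_DOMAINS:
            findings.append(f"unknown email domain: {m.group(1)}")
    for m in ISO_DATE_RE.finditer(text):
        if not date_ok(*m.groups()):
            findings.append(f"impossible date: {m.group(0)}")
    for m in CARD_RE.finditer(text):
        if not luhn_ok(m.group(0)):
            findings.append(f"card number fails Luhn check: {m.group(0).strip()}")
    return findings
```

For example, `regex_findings("Email support@companyx.com by 2024-02-30.")` returns two findings: the unknown domain and the impossible date.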

These aren’t guesses. They’re exact matches. And they catch about 35-45% of hallucinations in technical domains, according to Dr. Elena Rodriguez of Stanford HAI. That’s not enough on its own, but it’s the foundation. Without it, you’re searching for a needle in a haystack with your bare hands.

But here’s the trap: overly strict regex creates false positives. A user named "Dr. Smith" might get flagged because the system thinks "Dr." is a medical credential. A phone number in parentheses might get rejected because your pattern expects dashes. Initial implementations often have false-positive rates of 18-25%, which means your system is rejecting good outputs. You fix that by tuning patterns against real-world data, not theoretical edge cases.
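One way to cut those false positives is to normalize formatting first and then validate the digits, instead of baking a single layout into the pattern. A sketch, assuming North American (NANP) numbers only; international numbers need per-country rules or a dedicated library.

```python
import re

# Overly strict: only accepts the 415-555-0100 layout, so "(415) 555-0100" fails.
STRICT_US_PHONE = re.compile(r"\d{3}-\d{3}-\d{4}")

# Characters we tolerate as formatting around the digits.
PHONE_CHARS = re.compile(r"\+?[\d\s().-]+")


def strict_ok(raw: str) -> bool:
    return STRICT_US_PHONE.fullmatch(raw) is not None


def tolerant_ok(raw: str) -> bool:
    """Accept common layouts of a North American (NANP) number.

    Formatting is stripped first, then the digits are checked: 10 digits
    (or 11 with a leading country code 1), and neither the area code nor
    the exchange may start with 0 or 1. A simplification of the real plan.
    """
    if PHONE_CHARS.fullmatch(raw.strip()) is None:
        return False
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return len(digits) == 10 and digits[0] not in "01" and digits[3] not in "01"
```

Running both functions over a sample of real outputs and reviewing where they disagree is a cheap way to find the patterns that need tuning.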

Programmatic Checks: When Context Matters More Than Pattern

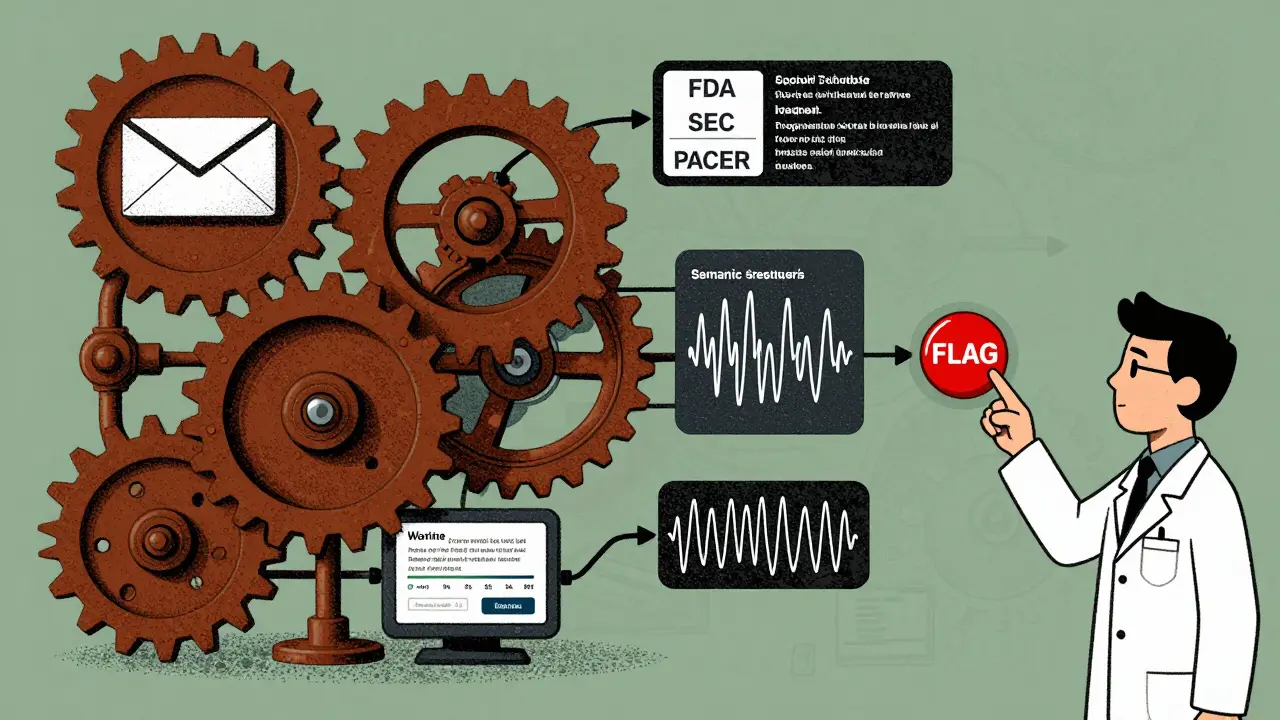

Regex catches the obvious. But what about the subtle lies? Like when AI says, "The FDA approved this drug in 2021," when the actual approval was in 2019? Or when it cites a non-existent court case? That’s where programmatic checks come in.

These are custom scripts that pull from trusted sources. For example:

- Check a drug name against the FDA’s public database

- Verify a legal citation against PACER or Westlaw

- Confirm a company’s existence using the SEC’s EDGAR database

- Validate a date against historical event logs
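A minimal sketch of one such check, using the FDA-approval-year example from earlier. The claim pattern, the `lookup` callable, and the drug name `examplinib` are hypothetical; in production, `lookup` would query whichever trusted database you connect to.

```python
import re
from typing import Callable, Optional

# Stand-in for a query against a trusted source (for example, a local mirror of
# a regulator's drug database). It returns the approval year, or None if unknown.
ApprovalLookup = Callable[[str], Optional[int]]

# Assumed claim shape: "<Drug> was approved in <year>".
APPROVAL_CLAIM = re.compile(
    r"\b(?P<drug>[A-Za-z][\w-]*) was approved in (?P<year>\d{4})\b", re.IGNORECASE
)


def check_approval_claims(text: str, lookup: ApprovalLookup) -> list[dict]:
    """Compare each approval-year claim in the output against the trusted source."""
    results = []
    for m in APPROVAL_CLAIM.finditer(text):
        drug = m.group("drug").lower()
        claimed = int(m.group("year"))
        actual = lookup(drug)
        if actual is None:
            status = "unverifiable"  # not in the source: route to review, don't assume true
        elif actual == claimed:
            status = "supported"
        else:
            status = "contradicted"
        results.append({"drug": drug, "claimed": claimed, "actual": actual, "status": status})
    return results
```

Treating "not found" as its own status matters: a hallucinated drug or case name tends to show up as unverifiable rather than contradicted.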

Relativity’s 2024 validation framework uses this approach for legal document review. Instead of applying one threshold to everything, it sets a different threshold for each issue type. A simple factual error must score 3 or higher to trigger a fix. A nuanced issue, like conflicting interpretations of a contract clause, triggers at a lower threshold of 2, so borderline cases aren’t ignored.
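The per-issue threshold idea fits in a few lines. This sketch assumes each finding carries an integer severity score; the issue names and the fallback threshold are hypothetical, and this is not Relativity's actual code.

```python
from dataclasses import dataclass

# Per-issue thresholds mirroring the example: a clear factual error must score
# 3+ to trigger a fix, a nuanced interpretive issue only 2+.
THRESHOLDS = {"factual_error": 3, "nuanced_interpretation": 2}
DEFAULT_THRESHOLD = 3  # assumed fallback for issue types without their own threshold


@dataclass
class Finding:
    issue_type: str
    score: int  # assumed severity scale; higher means more likely a real problem


def needs_fix(finding: Finding) -> bool:
    threshold = THRESHOLDS.get(finding.issue_type, DEFAULT_THRESHOLD)
    return finding.score >= threshold
```

A score of 2 therefore triggers a fix for a nuanced interpretation but not for a plain factual error.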

This isn’t magic. It’s engineering. You build a library of validation functions, each tied to a specific domain. Healthcare? Validate against ICD-10 codes. Finance? Cross-check with SEC filings. Legal? Match against case law repositories. The more sources you connect, the harder it is for AI to lie undetected.
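A decorator-based registry is one simple way to organize such a library. The domain names, the rough ICD-10 shape regex, and the two-code reference set are illustrative assumptions; finance or legal validators would register the same way.

```python
import re
from typing import Callable

Validator = Callable[[str], list[str]]
REGISTRY: dict[str, list[Validator]] = {}


def validator(domain: str):
    """Decorator that registers a validation function under a domain name."""
    def register(fn: Validator) -> Validator:
        REGISTRY.setdefault(domain, []).append(fn)
        return fn
    return register


# Tiny stand-in subset; a real system would load the full official ICD-10 code list.
KNOWN_ICD10 = {"I10", "E11.9"}
# Rough ICD-10 shape: letter, digit, alphanumeric, optional dot plus 1-4 characters.
ICD10_SHAPE = re.compile(r"\b[A-Z]\d[0-9A-Z](?:\.[0-9A-Z]{1,4})?\b")


@validator("healthcare")
def icd10_not_in_reference(text: str) -> list[str]:
    return [f"ICD-10 code not in reference list: {code}"
            for code in ICD10_SHAPE.findall(text) if code not in KNOWN_ICD10]


def validate(domain: str, text: str) -> list[str]:
    """Run every validator registered for the domain and collect their findings."""
    findings: list[str] = []
    for fn in REGISTRY.get(domain, []):
        findings.extend(fn(text))
    return findings
```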

And it works. A 2023 study published in PubMed Central (PMC) found that adding programmatic checks to an AI-powered EHR system reduced manual review time by 73%. That’s not just efficiency; it’s safety.

How Semantic Validation Catches What Regex Misses

Now, imagine the AI says: "The patient has a history of hypertension and was prescribed lisinopril. They reported improved blood pressure after three weeks." Sounds fine. But what if the patient’s chart says they were prescribed metoprolol? The AI didn’t invent a fake drug; it swapped one real drug for another. Regex won’t catch that. A programmatic check against a drug database won’t either, because both drugs are real.

This is where semantic validation steps in. It doesn’t look at words. It looks at meaning.

Vellum.ai’s 2024 system uses embeddings to compare the AI’s output against trusted reference texts. It asks: "Does this statement match the intent and facts of the source?" In tests, it matched human judgment 82% of the time in business logic checks.

Think of it like a fact-checker reading both the AI’s answer and the original document side by side. It doesn’t need exact wording. It just needs to know: Is this claim supported? Is it contradicted? Is it irrelevant?
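A minimal sketch of the similarity step. The `embed` function is a placeholder for whatever embedding model you use, and the 0.85/0.6 thresholds are arbitrary starting points to tune against human judgments. One caution: cosine similarity measures how related two passages are, not whether they agree, so "prescribed lisinopril" and "prescribed metoprolol" can land close together. Separating supported from contradicted usually needs a further step on top of this, such as a natural-language-inference model or an entity-level comparison.

```python
import math
from typing import Callable, Sequence

# Placeholder: plug in whatever embedding model you use (assumption).
Embed = Callable[[str], Sequence[float]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def support_label(claim: str, sources: list[str], embed: Embed,
                  supported_at: float = 0.85, related_at: float = 0.6) -> tuple[str, float]:
    """Label a claim by its closest match among trusted source passages."""
    claim_vec = embed(claim)
    best = max((cosine(claim_vec, embed(s)) for s in sources), default=0.0)
    if best >= supported_at:
        return "supported", best
    if best >= related_at:
        return "needs_review", best  # on-topic but not clearly backed
    return "unsupported", best       # nothing in the sources discusses it
```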

Companies like NVIDIA use this in hardware validation. Instead of testing every possible chip scenario, they use AI to generate test cases that target rare, hard-to-find bugs, like memory ordering conflicts that only show up under specific timing conditions. Then they use semantic checks to verify the AI’s generated stimuli actually reflect real-world behavior.

It’s not perfect. But it’s the best tool we have for catching lies that sound true.

The Reality of Coverage: You Can’t Validate Everything

Here’s the uncomfortable truth: you can’t validate 100% of AI outputs. And you shouldn’t try.

Traditional AI systems, like rule-based chatbots or classification engines, can be tested end-to-end. Every input, every output, every edge case. That’s 100% automation potential.

Generative AI? Not even close. TestFort’s 2025 analysis found generative AI validation has only 40-60% automation potential. Why? Because the output is creative. Unpredictable. Human-like.

So you sample. You don’t check every response. You check representative ones. Leading teams test 15-25% of outputs. That’s enough to catch patterns, not every single error.

But sampling only works if you sample smartly. Relativity’s method prioritizes:

- Outputs from underrepresented user groups

- Edge cases, like obscure medical conditions or niche legal questions

- Low-richness prompts ("Tell me about X"), where AI is most likely to invent

- Gray-area responses that score near validation thresholds
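A sketch of prioritized sampling, assuming each output already carries a validation score and a few tags. The 15% base rate comes from the range above; the boosts, the 0.7 threshold, the margin, and the low-richness heuristic are hypothetical values to tune. Because of the boosts, the effective review rate will land above the base rate, so measure it.

```python
import random
from dataclasses import dataclass


@dataclass
class Output:
    prompt: str
    score: float                    # score from earlier validation layers (assumed 0-1)
    edge_case: bool = False         # e.g. tagged as an obscure condition or niche legal topic
    underrepresented: bool = False  # e.g. from an underrepresented user group


def is_low_richness(prompt: str, max_words: int = 5) -> bool:
    """Crude heuristic (assumption): short or 'Tell me about X'-style prompts."""
    p = prompt.strip().lower()
    return p.startswith("tell me about") or len(p.split()) <= max_words


def sample_probability(o: Output, base: float = 0.15,
                       threshold: float = 0.7, margin: float = 0.05) -> float:
    if abs(o.score - threshold) <= margin:
        return 1.0  # gray area near the threshold: always review
    p = base
    if o.edge_case or o.underrepresented:
        p += 0.25
    if is_low_richness(o.prompt):
        p += 0.15
    return min(p, 1.0)


def should_sample(o: Output, rng: random.Random) -> bool:
    return rng.random() < sample_probability(o)
```

Passing in a seeded `random.Random` keeps sampling decisions reproducible for audits.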

And you track what fails. Not just the errors, but the patterns. If 30% of failed validations involve fake URLs from healthcare responses, you tighten your regex for medical domains. If semantic checks keep flagging drug name swaps, you add a new validation function that cross-references prescriptions.
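Tracking patterns can start as simply as counting failures by domain and check type. A sketch, assuming each failed validation is logged as a `(domain, check_name)` pair; the 30% cutoff echoes the example above and is otherwise arbitrary.

```python
from collections import Counter
from typing import Iterable


def failure_hotspots(failures: Iterable[tuple[str, str]],
                     min_share: float = 0.3) -> list[tuple[tuple[str, str], float]]:
    """Return (domain, check) pairs that account for at least min_share of all failures.

    `failures` holds one (domain, check_name) tuple per failed validation,
    and results are ordered from most to least frequent.
    """
    counts = Counter(failures)
    total = sum(counts.values())
    if total == 0:
        return []
    return [(key, n / total) for key, n in counts.most_common() if n / total >= min_share]
```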

That’s how you improve: not by adding more rules, but by learning from your mistakes.

What Happens When the AI Gets Caught Lying?

Validation isn’t just about detection. It’s about response.

LivePerson’s system has three options when it finds a hallucination:

- Rephrase: The system tries to rewrite the response without the false claim. If it can’t, it falls back to discarding the response.

- Discard: The response is thrown out. A fallback message like "I’m sorry, I can’t provide accurate information on that right now" is sent instead.

- Flag: The output is marked for human review. This is used for high-risk scenarios: medical advice, legal opinions, financial guidance.
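The three options can be wired into a small router. This is a sketch, not LivePerson's implementation: `rephrase` and `validate` are hypothetical hooks, and sending high-risk outputs straight to review (rather than attempting a rephrase first) is an assumption about ordering.

```python
from enum import Enum
from typing import Callable, Optional

FALLBACK = "I'm sorry, I can't provide accurate information on that right now."


class Action(Enum):
    SEND = "send"
    REPHRASED = "rephrased"
    DISCARDED = "discarded"
    FLAGGED = "flagged"


def handle(response: str, findings: list[str], high_risk: bool,
           rephrase: Callable[[str, list[str]], Optional[str]],
           validate: Callable[[str], list[str]]) -> tuple[Action, str]:
    """Decide what happens to a response after validation.

    `rephrase` returns a rewritten response or None; `validate` returns a list
    of findings (empty means clean).
    """
    if not findings:
        return Action.SEND, response
    if high_risk:
        # Medical, legal, financial: hold for a human; the text goes to the review queue.
        return Action.FLAGGED, response
    candidate = rephrase(response, findings)
    if candidate is not None and not validate(candidate):
        return Action.REPHRASED, candidate
    # Rephrasing failed or still fails validation: discard and send the fallback.
    return Action.DISCARDED, FALLBACK
```

Re-validating the rephrased text matters: a rewrite can introduce a new hallucination of its own.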

Discarding responses is hard. Users hate "I don’t know." But it’s safer than giving them a lie. And in regulated industries, it’s mandatory.

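The EU AI Act, which entered into force in August 2024 with obligations phasing in over the following years, requires providers of high-risk AI systems to manage risk, ensure accuracy, and support human oversight. The U.S. NIST AI Risk Management Framework 1.1, released in September 2025, is voluntary guidance, but it points the same way. You can’t just say "we use AI." You have to prove you checked it.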

The Cost of Skipping Validation

Companies that skip post-processing validation pay in trust, lawsuits, and reputation.

A legal tech startup in 2024 used an AI to draft contract clauses. It hallucinated a non-existent precedent. A client relied on it. The case was dismissed. The startup lost $3.2 million in client contracts and faced a class-action suit.

A hospital used AI to summarize patient notes. It invented a drug allergy. The nurse missed a critical treatment. The patient had a reaction. The hospital settled for $8.7 million.

These aren’t hypotheticals. They’re real. And they’re getting more common.

Gartner reports that 67% of enterprises now use dedicated validation layers, up from 29% in 2023. Healthcare leads at 82%. Finance at 76%. Legal tech at 68%. Why? Because they can’t afford to be wrong.

Organizations with dedicated AI validation roles see 41% fewer production incidents. These specialists aren’t just coders. They’re hybrids, averaging 7.2 years of domain expertise, 5.8 years in software, and 3.4 years in ML. They know the rules, the data, and the risks.

What’s Next in 2026?

The field is moving fast. Vellum.ai 2.0, released in January 2026, uses machine learning to auto-optimize prompts based on past validation failures. It cuts iteration cycles by 60%.

NVIDIA’s team found that AI-generated validation scenarios improved coverage for complex chip designs by 53%. They’re no longer just testing hardware; they’re using AI to find the bugs AI itself creates.

And LivePerson’s new multimodal system can now validate images and video. It spots deepfakes, altered medical scans, fake logos in screenshots. It’s 89% accurate in beta.

By 2027, 92% of enterprise AI deployments are projected to use multi-stage validation: rules, semantic checks, and human review for high-risk outputs. That’s the new baseline.

But here’s the gap: 61% of organizations still lack validation for domain-specific factual accuracy. They check for fake emails. They check for bad URLs. But they don’t check if the AI got the right diagnosis, the right regulation, the right financial metric.

That’s where you need to focus.

Start Here: Your Validation Checklist

If you’re building or using generative AI today, here’s what you need to implement by next quarter:

- Regex layer: Block fake emails, phone numbers, URLs, dates. Use known patterns from industry standards.

- Programmatic checks: Connect to trusted databases. Verify facts against real sources.

- Semantic validation: Use embeddings to compare meaning, not just words.

- Sampling strategy: Test 15-25% of outputs. Focus on edge cases and low-richness prompts.

- Response protocol: Decide in advance whether to rephrase, discard, or flag. Don’t let the AI decide.

- Human review: For medical, legal, financial, or safety-critical outputs, always include a human.

- Track failures: Build a feedback loop. Use what fails to improve what comes next.

You don’t need AI to fix AI. You need systems. Rules. Checks. And discipline.

The AI will keep hallucinating. That’s not a bug. It’s a feature.

Your job isn’t to stop it from generating. It’s to make sure what it generates doesn’t hurt anyone.

What’s the difference between regex and semantic validation in AI post-processing?

Regex looks for exact patterns, like an email format or a phone number structure. It’s fast and precise but only catches obvious lies. Semantic validation compares meaning using AI embeddings. It can detect when an AI swaps one real fact for another (like naming the wrong drug) even when every word is spelled correctly and refers to something real. Regex catches the wrong address. Semantic validation catches the wrong diagnosis.

Can I rely only on AI to validate its own output?

No. AI can’t reliably validate itself. It doesn’t know what’s true; it only knows what’s likely. If it hallucinates a fake court case, it might generate another hallucination to "correct" it, making the error harder to spot. Validation must come from external systems: databases, rules, human judgment. AI is the author, not the editor.

How many validation checks should I run per AI response?

You don’t need to run every check on every output. Instead, layer your checks: run regex on every response (it’s fast), programmatic checks on high-risk outputs (medical, legal, financial), and semantic checks on outputs with low confidence scores. Most teams run 3-5 validation steps per output, but only apply the heavier ones when needed.
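The layering described above can be sketched as a single function. The check lists, the `high_risk` flag, and the `confidence` value (which might come from the model or an earlier scoring step) are assumptions about your pipeline; the point is that the cheap layer always runs and the heavier ones are gated.

```python
from typing import Callable

Check = Callable[[str], list[str]]


def run_layers(text: str, *, high_risk: bool, confidence: float,
               regex_checks: list[Check], programmatic_checks: list[Check],
               semantic_checks: list[Check], low_confidence: float = 0.6) -> list[str]:
    """Run cheap checks on everything and gate the heavier layers."""
    findings: list[str] = []
    for check in regex_checks:            # fast: run on every response
        findings += check(text)
    if high_risk:                         # medical, legal, financial outputs
        for check in programmatic_checks:
            findings += check(text)
    if confidence < low_confidence:       # semantic pass only when confidence is low
        for check in semantic_checks:
            findings += check(text)
    return findings
```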

What’s the biggest mistake companies make with AI validation?

They focus on catching fake URLs and phone numbers but ignore domain-specific facts. An AI can say "The patient has diabetes" when their chart says prediabetes. That’s a dangerous lie. But if your validation only checks for fake emails, you’ll miss it. The most critical gaps are in factual accuracy within specialized fields (healthcare, law, finance), not in generic formatting errors.

Do I need a team of experts to set this up?

You don’t need a big team, but you do need hybrid skills: someone who understands your domain (like a nurse or lawyer), knows how to write code for checks, and understands how AI behaves. Many companies start with one person who wears all three hats. The key isn’t size; it’s alignment. If your validator doesn’t know what a correct medical record looks like, no amount of regex will save you.

Is post-processing validation required by law?

Yes, in high-risk sectors, at least in the EU. The EU AI Act imposes binding obligations, including risk management, accuracy, and human oversight, on high-risk AI systems such as those used in hiring, credit scoring, and certain medical devices. The U.S. NIST AI Risk Management Framework 1.1 (2025) is voluntary guidance, but it recommends the same kind of systematic validation. If you’re using AI in healthcare, legal services, banking, or hiring, expect to need validation layers in place. Ignoring it isn’t just risky; it can be non-compliant.

E Jones

30 January, 2026 - 10:11 AM

Let me tell you something they don’t want you to know: this whole ‘validation’ thing is just a distraction. The real truth? AI hallucinates because it’s been programmed to lie. They feed it corporate propaganda, government scripts, and pharmaceutical ads until it starts believing its own nonsense. Regex? Please. That’s like putting a Band-Aid on a nuclear meltdown. The real problem is the data pipelines are controlled by the same elites who own the banks, the media, and now, apparently, the truth itself. You think they want you to catch hallucinations? No. They want you to think you’re safe while the AI quietly reshapes reality one fake citation at a time. Wake up.

They’re not fixing AI. They’re weaponizing it. And if you’re validating outputs, you’re just helping them clean up the mess they created so they can keep selling it to you as ‘progress.’

I’ve seen the documents. The backdoors are built in. The ‘semantic checks’? They’re just new filters for censorship. They don’t catch lies-they catch dissent.

Next thing you know, your AI will validate you out of a job, then validate your bank account into oblivion. It’s all connected. They’re not building tools. They’re building control systems. And you’re polishing the handcuffs with your regex patterns.

They call it ‘safety.’ I call it surrender.

Who owns Vellum.ai? Who funded Relativity’s ‘framework’? Google? Microsoft? The Pentagon? Ask yourself why no one’s asking that question.

And don’t even get me started on the ‘human review’ clause. That’s just the final lie, the one where you think a tired nurse in a call center is going to catch the difference between metoprolol and lisinopril. Ha. She’s got ten seconds per case. She’s not a doctor. She’s a gatekeeper for a machine that doesn’t even know what a heart is.

They’re not trying to stop hallucinations. They’re trying to make sure the hallucinations are *marketable*.

Don’t validate the AI. Validate the people behind it. That’s the only check that matters.

They’re not building a safer AI. They’re building a more convincing lie.

And you’re helping them do it.

Just sayin’.

Barbara & Greg

1 February, 2026 - 00:52 AM

It is both lamentable and deeply concerning that we have come to rely upon artificial systems to generate content of such profound consequence (medical, legal, financial), only to then apply what amounts to digital duct tape in the form of regular expressions and ad hoc scripts. The very notion that one might ‘validate’ a generative system with rule-based heuristics reveals a fundamental epistemological failure: we are treating symptoms while ignoring the disease. The AI does not hallucinate because it is poorly programmed; it hallucinates because it has no capacity for truth, only statistical likelihood. To pretend otherwise is not engineering; it is delusion dressed in code.

Regulatory frameworks like the EU AI Act are, at best, a symbolic gesture. They do not address the ontological void at the heart of LLMs. No amount of embedding comparison or semantic alignment will restore moral agency to a system that has never possessed it. The human reviewer, then, becomes the last vestige of accountability, yet they are underpaid, overworked, and often untrained in the very domains they are meant to adjudicate.

It is not enough to check for fake URLs. We must ask: why are we allowing machines to speak in the voice of experts? Why do we outsource judgment to systems that cannot comprehend consequence? The answer, I fear, is convenience. And convenience, in the face of moral responsibility, is the most dangerous form of cowardice.

Let us not mistake efficiency for ethics. Let us not confuse automation for wisdom. And let us never again pretend that a regex pattern can safeguard human lives.

allison berroteran

1 February, 2026 - 01:02 AM

I really appreciate how this breaks down validation into layers; it makes the whole thing feel less overwhelming. I’ve been working with AI in patient intake forms at my clinic, and we started with just regex for phone numbers and emails (which caught a lot of typos, honestly), but we didn’t realize how often it would swap meds until we added semantic checks. One time it said ‘warfarin’ when the chart said ‘apixaban’: no red flags in the regex, no fake domain, just a dangerous swap. The embedding model flagged it because the context around ‘anticoagulant’ didn’t match the patient’s history. It was a small thing, but it could’ve been huge.

Now we sample 20% of outputs, mostly from older patients or complex cases, and we’ve cut our manual review time in half. We still have humans in the loop for anything high-risk, and honestly, that’s been the biggest win, not because the AI is perfect, but because now we’re *looking* for the right things.

It’s not magic. It’s just paying attention. And honestly? That’s the part people forget. The tech helps, but the discipline? That’s all on us.

Gabby Love

2 February, 2026 - 11:54 AM

Minor grammar note: in the section about LivePerson, it says ‘regex to scan for’ and then lists items with bullet points, but the first item says ‘Phone numbers that don’t match country formats’; it should be ‘Phone numbers that don’t match *the* country formats’ for consistency. Also, in ‘SSN, credit card, or date formats that violate basic math’, ‘basic math’ feels a little off. Maybe ‘basic formatting rules’? Just a thought. Otherwise, this is super clear and useful.

Also, love the checklist at the end. Printed it out.

Jen Kay

3 February, 2026 - 04:57 AM

Wow. So we’ve gone from ‘AI is magic’ to ‘AI is dangerous’ to ‘AI is a problem we can solve with more code’, and now we’re pretending this is progress? Let’s be real: you’re not fixing hallucinations. You’re just building a bigger, more expensive lie detector that still doesn’t know what truth is.

And yet, you’re calling this ‘engineering.’ Meanwhile, the people who actually *use* these systems-nurses, paralegals, customer service reps-are expected to be the final arbiters of truth, with no training, no time, and no authority to override the system when it’s wrong.

You talk about ‘sampling’ outputs like it’s a clever hack. But sampling is just lazy. You’re gambling with people’s lives because you don’t want to pay for full validation.

And don’t get me started on ‘rephrase’ as an option. That’s not fixing the AI. That’s just letting it try again until it sounds less obviously wrong.

It’s not a validation layer. It’s a PR layer.

And you’re proud of this?