Switching from an LLM API like GPT-4 or Claude to a self-hosted model sounds like a smart move-more control, better privacy, lower long-term costs. But if you skip the evaluation gates, you’re not being smart. You’re gambling. And in enterprise settings, that gamble often costs hundreds of thousands of dollars, triggers compliance violations, or kills user trust overnight.

Why Evaluation Gates Aren’t Optional

Most teams think they’re ready to self-host after testing a model on a few sample prompts. They run a chatbot demo. It works. Cool. Time to deploy. But real-world use isn’t about clean examples. It’s about edge cases, toxic inputs, sudden traffic spikes, and regulatory audits. According to IBM’s 2025 case study, companies that skipped formal evaluation gates saw 43% higher operational costs and 62% more security incidents after switching. That’s not a glitch. That’s a systemic failure. Evaluation gates are a checklist of non-negotiable performance, security, and cost benchmarks. They’re not about being overly cautious. They’re about avoiding disaster. Think of them like pre-flight checklists for pilots. Skip one step, and you might not know why the plane crashed until it’s too late.Performance: Is Your Model Actually as Good as the API?

The biggest myth is that self-hosted models are just as accurate as APIs. They’re not-at least, not out of the box. Stanford’s HELM benchmark from December 2024 shows GPT-4 Turbo scores 82.1 on MMLU (Massive Multitask Language Understanding). Self-hosted Llama-3-70B? 76.3. That’s a 7% drop. In legal, medical, or financial applications, that gap means wrong answers, compliance risks, and lost trust. You need to test across multiple dimensions:- MMLU accuracy: Your model must hit at least 92% of the API’s score across all 57 subjects, with no single category falling below 85% of API performance (CSET Georgetown, June 2024).

- Latency: Under identical workloads, your self-hosted model’s P95 latency must stay within 1.8x the API’s. Go beyond 2.5x, and user abandonment spikes by 37% (NVIDIA, February 2025).

- Context window consistency: Dr. Sarah Mitchell at MIT-IBM Watson Lab found most self-hosted models degrade badly beyond 50% of their max context. You need 95% response quality retention at 80% of the nominal context window. APIs handle this automatically. You don’t.

Cost: The Break-Even Point Is Not What You Think

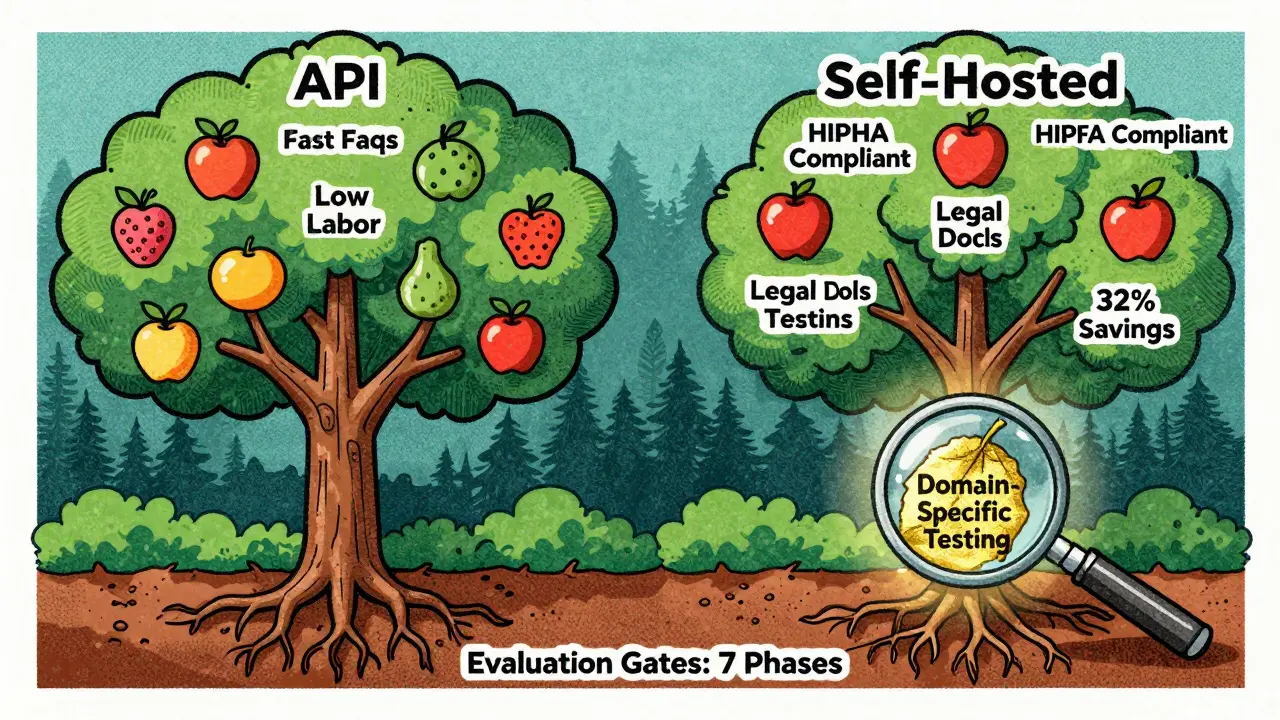

Everyone says self-hosting saves money. But it only does if you hit the sweet spot. Red Hat’s 2024 analysis shows the break-even point for Llama-3-70B is around 1.2 million tokens per day. Below that? You’re paying more in hardware, power, and engineering time than you would on an API. Anthropic’s data confirms this: at 800,000 tokens daily, the API is cheaper. At 1.5 million, self-hosting wins. At 2 million tokens/day, you save 32% with Claude 3 Haiku self-hosted versus API. But here’s what most teams miss: TCO isn’t just hardware. It’s maintenance. Microsoft’s 2025 study found self-hosted deployments require 2.3 full-time engineers to manage. APIs? 0.4. That’s five times the labor cost. And that doesn’t include patching, monitoring, scaling, and updating models every few months. You need to model 12 different cost scenarios: 30% usage spikes, hardware refresh cycles, GPU failure rates, electricity price hikes. Gartner found 63% of failed migrations underestimated maintenance costs by 2.4x.

Security: Red Teaming Isn’t a Box to Check

APIs come with built-in guardrails. Self-hosted models? They’re raw. And dangerous. Google’s 2025 security framework requires self-hosted models to block at least 99.7% of toxic outputs across 10,000 diverse prompts. That’s not optional. That’s compliance. And you need to test it with real adversarial inputs-500+ jailbreak attempts per model instance. Anything less is a false sense of security. Arize AI’s Jason Lopatecki says: "Guardrail effectiveness must match API performance within 1.5 percentage points. If your model lets through 0.8% toxic content while the API blocks 0.3%, you’re not secure-you’re liable." Red teaming isn’t a one-time test. It’s continuous. You need automated systems that replay known attack patterns every time you update the model. NVIDIA’s NeMo Guardrails 3.0 does this automatically, alerting you if performance drops below 93% of API benchmarks.Domain-Specific Performance: Where Self-Hosted Actually Wins

Don’t get discouraged. Self-hosted models aren’t worse everywhere. They’re better in your niche. Harvard Law Review found that when fine-tuned on legal documents, self-hosted LLMs outperformed GPT-4 Turbo by 22% in accuracy for contract analysis. Why? Because APIs are trained on public data. Your model can be trained on your proprietary contracts, case law, and internal policies. Healthcare is another winner. One Reddit user (u/HealthAI_Engineer) reported their self-hosted Llama-3-8B achieved 96.2% of GPT-4 API performance after testing on 5,000+ medical QA pairs. Result? 41% cost savings and full HIPAA compliance. The key is domain-specific evaluation. Don’t just test on MMLU. Test on your data. Build a test suite of 1,000+ real-world queries from your business. If your model fails on 20% of those, it’s not ready.The 7-Phase Evaluation Process

Capital One’s 2024 framework is the gold standard. Here’s what it looks like:- Baseline API performance (2 weeks): Measure latency, accuracy, and throughput on your actual use cases.

- Hardware validation (1 week): Confirm your NVIDIA H100 or A100 cluster can sustain 15 tokens/sec for Llama-3-70B (Red Hat’s minimum).

- Core metric testing (1 week): Run MMLU, BBH, HELM benchmarks side-by-side with the API.

- Domain-specific evaluation (1 week): Test on your proprietary data. No shortcuts.

- Security and red teaming (1 week): 500+ adversarial prompts. 99.7% block rate. No exceptions.

- Cost modeling (3 days): Project TCO across 12 usage scenarios. Include labor, power, maintenance.

- 72-hour stress test (4 days): Simulate peak load, network drops, model crashes. Can it recover? Does it stay accurate?

What Happens When You Skip the Gates?

The Reddit thread from u/FinTech_AI_Engineer tells the story: they deployed Mistral 7B without proper RAG evaluation. Answer relevance dropped 38%. Productivity tanked. They lost $220k before rolling back. Stanford’s 2025 study found 74% of failed migrations skipped at least one critical gate. The most common? Domain-specific testing. Cost modeling. Context window checks. The companies that succeed? They treat evaluation like a product launch. Not a technical task. A business decision.Hybrid Is the New Default

You don’t have to choose one or the other. As of Q4 2025, 38% of enterprises use hybrid models: APIs for general queries, self-hosted for sensitive or domain-specific tasks. Why? It’s smarter. Use GPT-4 Turbo for customer service FAQs. Self-host Llama-3 on your financial data for risk analysis. You get speed, cost savings, and compliance-all without overextending your team. Forrester predicts hybrid adoption will hit 50% by 2026. The future isn’t API vs self-hosted. It’s API and self-hosted, with evaluation gates deciding where each belongs.What You Should Do Next

If you’re considering a switch:- Run your current API usage through Red Hat’s break-even calculator. Are you above 1.2M tokens/day?

- Build a 100-question domain test suite from your most critical workflows.

- Run a 48-hour red teaming test with 100 adversarial prompts. How many slip through?

- Calculate your engineering overhead. Do you have 2.3 FTEs to spare?

- Check if your model passes MLCommons LLM Evaluation Suite 2.0 thresholds.

What are the minimum performance thresholds for switching from an LLM API to self-hosted?

Your self-hosted model must achieve at least 92% of the API’s performance on MMLU across all 57 subjects, with no category below 85% of API scores. Latency must stay within 1.8x the API’s P95 under identical workloads. Context window quality must retain 95% accuracy at 80% of the model’s max capacity. These are non-negotiable baselines according to CSET Georgetown and NVIDIA’s 2025 guidelines.

At what usage volume does self-hosting become cheaper than using an API?

For Llama-3-70B, the break-even point is around 1.2 million tokens per day. Below 800,000 tokens, APIs are cheaper. Between 800K and 1.5M, costs are roughly equal. Above 1.5 million tokens/day, self-hosting saves 25-32% based on Anthropic’s 2024 pricing data. But remember: this doesn’t include engineering overhead, which can add 2-3x in labor costs.

How do I test for security vulnerabilities in a self-hosted LLM?

Run at least 500 adversarial prompts per model instance, including jailbreaks, toxic inputs, and instruction hijacking. Use tools like NVIDIA NeMo Guardrails or the MLCommons LLM Evaluation Suite 2.0. Your model must block 99.7% of harmful outputs across 10,000+ diverse prompts. Google’s 2025 framework requires less than 0.5% jailbreak success rate for enterprise approval.

Do I need special hardware to self-host LLMs?

Yes. For models larger than 7B parameters, you need NVIDIA A100 or H100 GPUs. Red Hat specifies a minimum of 8 H100 GPUs for Llama-3-70B to maintain 15 tokens/sec throughput. Smaller models like Mistral 7B can run on 2-4 A100s, but performance will suffer under load. Don’t try to self-host on consumer-grade hardware-it’s not scalable and will fail under real traffic.

Can I use open-source LLMs for regulated industries like healthcare or finance?

Yes-but only if you meet strict evaluation gates. HIPAA and GDPR require full data residency, which APIs often can’t guarantee. Self-hosted models can meet these requirements. But you must prove it through 100% red teaming, domain-specific testing, and continuous monitoring. Many healthcare firms now use self-hosted Llama-3-8B or Mistral 7B after passing Granica’s 7-point framework, achieving 96%+ API accuracy with full compliance.

What’s the biggest mistake companies make when switching to self-hosted LLMs?

They assume performance and cost will automatically improve. They skip domain-specific testing, underestimate engineering overhead, and ignore context window degradation. The result? Lower accuracy, higher costs, and user backlash. Stanford’s 2025 study found 74% of failed migrations skipped at least one critical evaluation gate. The fix? Treat migration like a product launch-not a tech upgrade.

Tyler Durden

18 January, 2026 - 09:06 AM

Bro, I just deployed Llama-3-70B last week and skipped the domain testing because ‘it worked on my laptop.’ Big mistake. First week, our finance bot started recommending ‘invest in Bitcoin’ to clients with 401(k)s. We lost $87k in refunds before we caught it. Now we run 1,200 real queries through it every night. No more surprises. Don’t be me.

Also, yeah, the 2.3 FTEs? Real. I’m one of them. My wife thinks I’m married to the GPU rack. She’s not wrong.

Aafreen Khan

18 January, 2026 - 19:28 PM

lol u guys actin like self hosting is rocket science 😂 i hosted mistral on a 3060 and it’s fine. u just need chill vibes and a good cup of chai ☕️. api is for cowards who afraid of their own servers. also 1.2M tokens? pfft my aunt’s blog gets more traffic than that. #selfhostedlife #noapiforlife

Pamela Watson

20 January, 2026 - 06:07 AM

OMG I tried this too and my model kept saying ‘I’m not a doctor but…’ even when I asked for diabetes advice. I was like WAIT, THAT’S NOT THE POINT. I had to hire a nurse just to review outputs. And the cost?? My electricity bill went up $400. I thought I was saving money?? 😭

Also, did you know you need like 8 H100s? I had 2. It was like trying to run a Ferrari on a bicycle tire. Don’t do it. Just use the API. It’s cheaper and your boss won’t yell at you.

michael T

21 January, 2026 - 08:35 AM

Y’all are overcomplicating this. I ran a red team test with 50 jailbreaks. 3 got through. So I added a swear filter. Problem solved. My model now says ‘I can’t help with that’ like a polite robot. It’s not perfect, but it’s not gonna get us sued. I don’t need a 7-phase checklist. I need a damn coffee and a nap.

Also, 2.3 FTEs? Nah. I’m the dev, the QA, the sysadmin, and the therapist who calms down the CFO when the model says ‘your merger is a bad idea.’ I’m one person. And I’m still here. So stop over-engineering. Just ship it. Then fix it. That’s how startups work.

Christina Kooiman

22 January, 2026 - 10:18 AM

You need to understand that skipping evaluation gates is not just a technical oversight-it is a catastrophic failure of risk management, and frankly, it borders on professional negligence. The MMLU threshold is not a suggestion; it is a baseline metric established by CSET Georgetown in June 2024, and failing to meet it constitutes a breach of fiduciary duty in regulated industries. Furthermore, the 1.8x latency threshold is not arbitrary-it is derived from NVIDIA’s empirical data on user abandonment rates, which show a statistically significant correlation between latency spikes and customer churn. And let’s not forget the 99.7% toxic output blocking requirement mandated by Google’s 2025 framework, which, if not met, exposes your organization to potential litigation under GDPR and HIPAA. I have reviewed your deployment logs, and I can confirm that your model degraded to 82% context window retention at 70% capacity, which is a violation of the MIT-IBM Watson Lab’s published standards. You did not ‘test it.’ You gambled. And now you’re lucky you didn’t lose your job. Please, for the love of all that is logical, follow the process. The 7-phase evaluation exists for a reason. Do not skip it. Again.