Slots Used: Understanding Resource Allocation in AI Systems

When you run a large language model, slots used is the number of inference slots currently occupied by in-flight requests. Each slot (also called an inference slot) serves one request at a time, so the total number of slots caps how many concurrent requests a system can handle, and slots used tells you how much of that capacity is taken right now. Together they determine how many users get responses at the same time without slowdowns or errors. This isn’t just about server capacity; it’s about how your AI actually performs under real load. Whether you use OpenAI, Anthropic, or a self-hosted LLM, how many concurrent requests you run directly affects your bill, your users’ wait times, and whether your app crashes during peak hours.

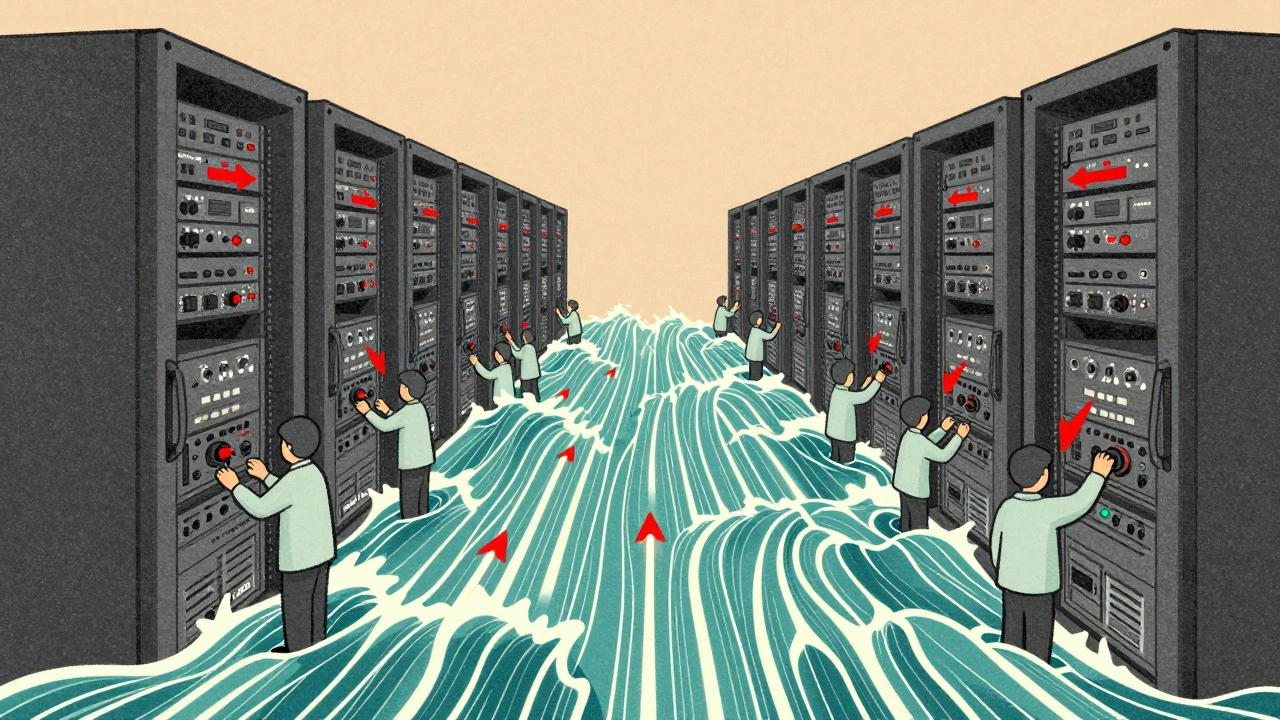

Think of slots like lanes on a highway. More lanes mean more cars can move at once. In AI, each slot handles one request, such as one user asking a question. If you have 10 slots and 15 users send requests at once, five will wait. That delay isn’t just annoying; it can hurt user retention. Companies that ignore slot management end up either overpaying for unused GPU capacity or under-provisioning and losing customers. Serving engines like vLLM expose metrics on running and waiting requests, and gateways like LiteLLM can load-balance and rate-limit traffic across deployments, so you can scale slot capacity on real-time demand rather than guesses.
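
The highway analogy maps directly onto a counting semaphore. Below is a minimal Python simulation, assuming each slot serves exactly one request and that model inference is replaced by a short `asyncio.sleep`; `simulate` and `handle_request` are hypothetical names for illustration, not part of vLLM or LiteLLM.

```python
import asyncio


async def handle_request(slots: asyncio.Semaphore, waited: list[int], req_id: int) -> None:
    # If every slot is taken when the request arrives, record that it had to queue.
    if slots.locked():
        waited.append(req_id)
    # Hold one slot for the duration of the (simulated) inference call.
    async with slots:
        await asyncio.sleep(0.01)  # stand-in for model inference time


async def simulate(num_slots: int, num_requests: int) -> int:
    """Fire num_requests at once against num_slots slots; return how many had to wait."""
    slots = asyncio.Semaphore(num_slots)
    waited: list[int] = []
    await asyncio.gather(*(handle_request(slots, waited, i) for i in range(num_requests)))
    return len(waited)


if __name__ == "__main__":
    print(asyncio.run(simulate(num_slots=10, num_requests=15)))  # prints 5
```

The first ten requests grab a slot immediately; the remaining five queue on the semaphore until a slot is released, which is exactly the waiting described above.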

Slots used also ties into token usage, the amount of text processed per request. A single long query can occupy a slot for longer, blocking others. That’s why some systems limit input length or queue requests. It’s also why model optimization (techniques like quantization and pruning that reduce memory footprint) matters. A smaller, optimized model leaves more memory for additional slots, lets you run more requests in parallel, and can cut cloud costs by 40% or more. You don’t always need the biggest model; you need the right one for your slot capacity.
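
To see why long inputs and quantization change slot capacity, it helps to look at GPU memory. On a typical GPU server each active request needs KV-cache memory that grows with its context length, and whatever the model weights don’t occupy is what’s left for those caches. The sketch below is a back-of-the-envelope Python estimate under loudly simplified assumptions: a hypothetical 80 GB GPU, a 7B-parameter model with 32 layers, 32 KV heads and a head dimension of 128 (roughly a Llama-2-7B-style layout), an FP16 KV cache reserved for the full context of every slot, and no allowance for activations or framework overhead. Real engines such as vLLM allocate KV cache in pages, so treat the result as a rough estimate, not a measurement.

```python
import math


def kv_cache_gb_per_slot(context_tokens: int, num_layers: int, num_kv_heads: int,
                         head_dim: int, bytes_per_value: int = 2) -> float:
    # Each layer stores one key and one value vector per KV head for every token.
    # bytes_per_value=2 assumes an FP16 cache, even when the weights are quantized.
    per_token_bytes = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token_bytes * context_tokens / 1e9


def max_slots(gpu_mem_gb: float, params_billion: float, bytes_per_param: float,
              context_tokens: int, num_layers: int = 32, num_kv_heads: int = 32,
              head_dim: int = 128) -> int:
    """Estimate how many full-context requests fit in memory at once (hypothetical model)."""
    weights_gb = params_billion * bytes_per_param  # 1e9 params * bytes / 1e9 bytes per GB
    free_gb = gpu_mem_gb - weights_gb
    if free_gb <= 0:
        return 0
    per_slot = kv_cache_gb_per_slot(context_tokens, num_layers, num_kv_heads, head_dim)
    return math.floor(free_gb / per_slot)


if __name__ == "__main__":
    print(max_slots(80, 7, 2.0, 4096))  # 30  (FP16 weights, 4k context)
    print(max_slots(80, 7, 0.5, 4096))  # 35  (4-bit weights, 4k context)
    print(max_slots(80, 7, 2.0, 1024))  # 122 (FP16 weights, 1k context)
```

In this particular example, 4-bit weights free about 10 GB and buy five more full-context slots, while capping context at 1k tokens roughly quadruples slot capacity.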

And it’s not just about hardware. AI inference (the process of running a trained model to generate output) depends on how you manage those slots. If you’re running a chatbot, you might prioritize fast, short responses over heavy, long-form answers. That means designing your system to handle a high volume of short, low-latency requests. If you’re doing batch processing for reports or summaries, you might use fewer slots but let each one run longer. The key is matching your use case to your slot strategy.
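
One way to match slot strategy to use case is to split a fixed slot budget into separate pools so long batch jobs can’t starve interactive chat. The Python sketch below is hypothetical: `SlotPool`, `split_slots`, the 75/25 split and the per-pool output-token caps are illustrative assumptions, not settings from any particular serving framework.

```python
from dataclasses import dataclass


@dataclass
class SlotPool:
    name: str
    capacity: int            # slots reserved for this workload
    max_output_tokens: int   # hypothetical cap: short answers for chat, long ones for batch
    in_use: int = 0

    def try_acquire(self) -> bool:
        # Admit a request only if this pool still has a free slot.
        if self.in_use < self.capacity:
            self.in_use += 1
            return True
        return False

    def release(self) -> None:
        self.in_use = max(0, self.in_use - 1)


def split_slots(total_slots: int, interactive_share: float) -> tuple[SlotPool, SlotPool]:
    """Reserve most slots for low-latency chat and the rest for long-running batch jobs."""
    chat_slots = max(1, round(total_slots * interactive_share))
    batch_slots = max(0, total_slots - chat_slots)
    chat = SlotPool("chat", chat_slots, max_output_tokens=512)
    batch = SlotPool("batch", batch_slots, max_output_tokens=4096)
    return chat, batch


if __name__ == "__main__":
    chat, batch = split_slots(total_slots=16, interactive_share=0.75)
    admitted = sum(batch.try_acquire() for _ in range(6))
    print(chat.capacity, batch.capacity, admitted)  # 12 4 4
```

Because the pools are independent, a burst of six batch jobs fills only the four batch slots (the other two are rejected here, though a real system might queue them), while chat requests still get a slot immediately.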

Most teams only notice slots used when things break: when users complain about slow replies, or when their AWS bill spikes overnight. The smart ones track it from day one. They set alerts when slot utilization hits 80%, test with simulated traffic, and measure how many slots each type of query consumes. That’s how you avoid surprises. You don’t need a team of engineers to do this; even small apps can use open-source tools to log and visualize slot usage over time.
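
An 80% utilization alert is easy to prototype. This minimal Python sketch assumes you already sample slots used periodically (for example from your serving engine’s metrics); the function name, the three-sample persistence window and the sample values are hypothetical choices meant to keep a single momentary spike from paging anyone.

```python
def should_alert(samples: list[int], total_slots: int,
                 threshold: float = 0.8, sustained: int = 3) -> bool:
    """Return True once utilization stays at or above threshold for `sustained` samples in a row."""
    streak = 0
    for used in samples:
        utilization = used / total_slots
        if utilization >= threshold:
            streak += 1
            if streak >= sustained:
                return True
        else:
            streak = 0  # a dip below the threshold resets the window
    return False


if __name__ == "__main__":
    print(should_alert([5, 8, 9, 8], total_slots=10))     # True: three samples at or above 80%
    print(should_alert([8, 9, 5, 8, 9], total_slots=10))  # False: the dip resets the streak
```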

What follows is a collection of posts that dig into exactly this: how slot usage shapes cost, speed, and reliability in real AI systems. You’ll find deep dives into token pricing, how autoscaling affects slot allocation, why some models eat slots faster than others, and how to design systems that don’t just work but scale without breaking. No theory. No fluff. Just what happens when you run AI in the real world, and how to make sure your slots aren’t the bottleneck.

Autoscaling Large Language Model Services: Policies, Signals, and Costs

Learn how to autoscale LLM services effectively using prefill queue size, slots_used, and HBM usage. Reduce costs by up to 60% while keeping latency low with proven policies and real-world benchmarks.
