Safety Classifiers in AI: Protecting Models from Harmful Outputs

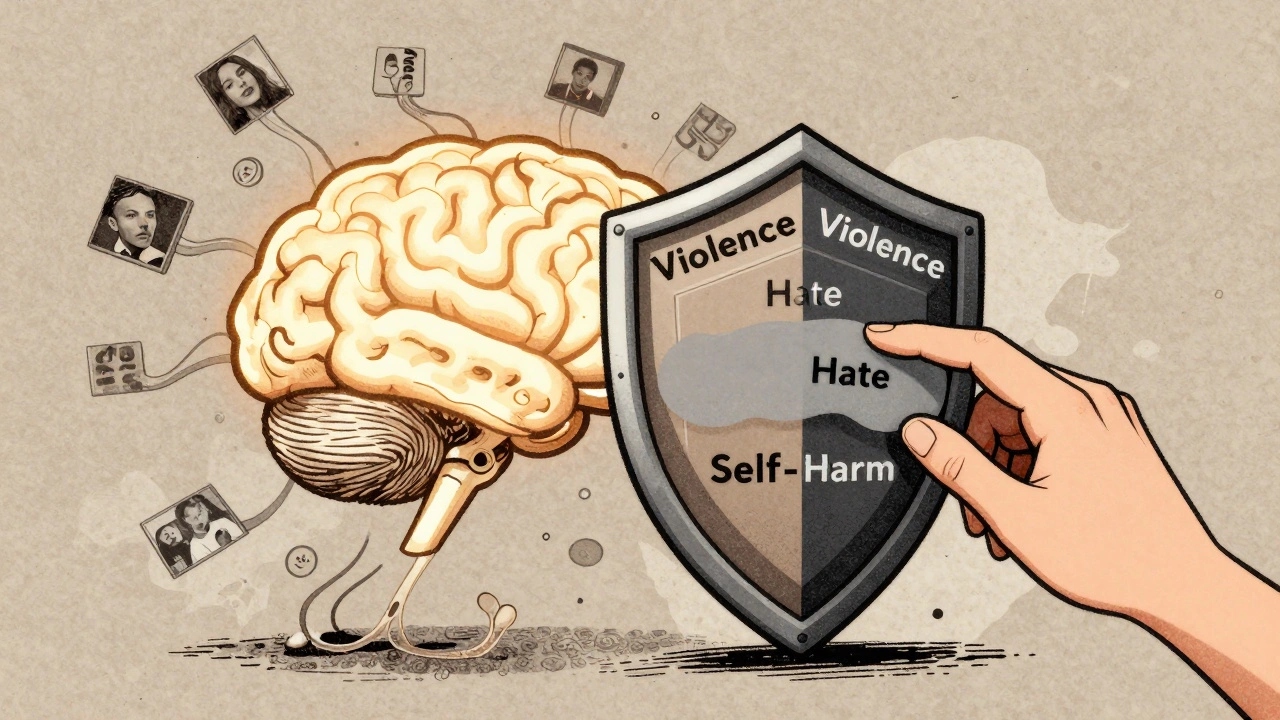

When you ask an AI a question, you expect a helpful answer—not something dangerous, biased, or false. That’s where safety classifiers, machine learning systems designed to detect and block harmful content before it reaches users. Also known as content filters, they act like gatekeepers for AI models, deciding what responses are safe to show and what should be blocked. Without them, even the most advanced language models can generate toxic text, spread misinformation, or reveal private data—something no business or developer can afford.

Safety classifiers don’t work in isolation. They’re part of a larger system that includes LLM moderation, the process of monitoring and controlling outputs from large language models using rules, filters, and real-time analysis, and content filtering, the technical practice of scanning inputs and outputs for flagged keywords, patterns, or semantic risks. These tools are built into platforms like OpenAI’s moderation API, Google’s Gemini safeguards, and open-source frameworks like Hugging Face’s transformers. But they’re not perfect. A classifier might miss a subtle threat or block a harmless question because it sounds similar to something dangerous. That’s why teams combine them with human review, usage analytics, and continuous retraining.

What you’ll find in this collection isn’t theory—it’s real-world setups. Developers are using safety classifiers to stop chatbots from giving medical advice, blocking hate speech in customer support bots, and preventing AI from leaking internal company data. You’ll see how teams measure classifier accuracy, tune thresholds without killing usability, and integrate them into PHP apps using Composer packages and API wrappers. There’s no magic bullet, but there are proven patterns: how to layer filters, when to use rule-based vs. ML-based detection, and how to avoid false positives that frustrate users. These aren’t just technical fixes—they’re ethical decisions baked into code.

Every post here comes from teams that have shipped AI tools under real pressure: legal compliance, user trust, and uptime all depend on getting safety right. Whether you’re building a small chatbot or a SaaS platform with thousands of users, the lessons here will help you avoid costly mistakes before they happen.

Content Moderation for Generative AI: How Safety Classifiers and Redaction Keep Outputs Safe

Learn how safety classifiers and redaction techniques prevent harmful content in generative AI outputs. Explore real-world tools, accuracy rates, and best practices for responsible AI deployment.

Read More