SaaS Architecture: Build Scalable, Secure, and Cost-Effective AI-Powered Apps

SaaS architecture is a software delivery model where applications are hosted centrally and accessed over the internet by multiple customers. Also known as cloud-based software, it’s the backbone of modern AI tools, from chatbots to automated content engines, that need to serve hundreds or thousands of users without crashing or bleeding cash. Unlike old-school software you install on your own server, SaaS runs in the cloud, scales on demand, and updates automatically. But when you add large language models (LLMs) into the mix, things get tricky. You’re not just serving web pages anymore. You’re running AI inference that chews through GPU credits, needs strict data isolation, and can’t afford to hallucinate in front of paying customers.

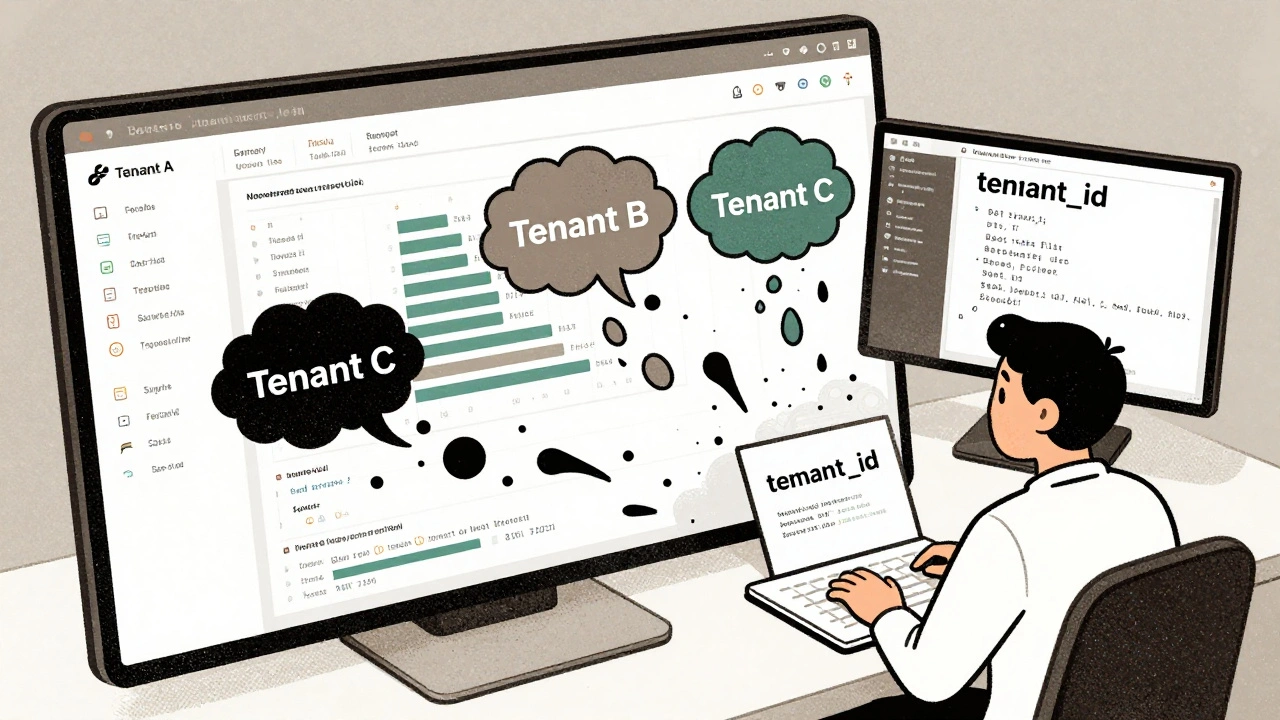

That’s where multi-tenant systems come in. These architectures serve multiple customers from a single instance while keeping each customer’s data and settings separate. You can’t just throw all users into one shared LLM pool; privacy, compliance, and performance demand isolation. Think of it like an apartment building: everyone shares the same pipes and power grid, but each unit has its own lock.

LLM interoperability patterns, which let you switch between AI providers without rewriting your code (tools like LiteLLM or LangChain), help you avoid vendor lock-in while keeping costs under control. And because hosted LLMs are typically billed per token, your architecture must anticipate usage spikes. One user asking 100 questions in a minute shouldn’t crash the system for everyone else. That’s why per-tenant usage limits and autoscaling (automatically adjusting computing resources to real-time demand) are non-negotiable. You don’t run 10 GPUs 24/7 if only 3 are needed at night.
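To make the "one user shouldn’t crash it for everyone" point concrete, here is a minimal sketch, assuming a single-process Python gateway that can estimate the token cost of a request before sending it. Each tenant gets its own token bucket, so one tenant’s burst drains only its own budget, and a simple sizing rule picks how many inference replicas to run for the current load. `TokenBucket`, `TenantLimiter`, `desired_replicas`, and every number here are hypothetical; a real deployment would usually keep the counters in a shared store and hand scaling to something like the Kubernetes Horizontal Pod Autoscaler.

```python
import math
import time
from typing import Dict, Optional


class TokenBucket:
    """Token-bucket budget for one tenant: bursts up to `capacity`, refills steadily."""

    def __init__(self, capacity: float, refill_per_sec: float, now: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.tokens = capacity  # every tenant starts with a full bucket
        self.updated = now

    def try_consume(self, amount: float, now: float) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.updated = now
        if amount <= self.tokens:
            self.tokens -= amount
            return True
        return False  # over budget: reject or queue, without touching other tenants


class TenantLimiter:
    """Hypothetical limiter: one bucket per tenant ID, so a noisy tenant only drains its own budget."""

    def __init__(self, capacity: float, refill_per_sec: float) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, tenant_id: str, estimated_tokens: int,
              now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        bucket = self.buckets.get(tenant_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_sec, now)
            self.buckets[tenant_id] = bucket
        return bucket.try_consume(estimated_tokens, now)


def desired_replicas(total_load: float, load_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Size the inference fleet to current demand, clamped to [min, max]."""
    if load_per_replica <= 0:
        raise ValueError("load_per_replica must be positive")
    needed = math.ceil(total_load / load_per_replica)
    return max(min_replicas, min(max_replicas, needed))


limiter = TenantLimiter(capacity=1000, refill_per_sec=10)
print(limiter.allow("acme", 800, now=0.0))    # True: 200 tokens left
print(limiter.allow("acme", 800, now=1.0))    # False: only 210 tokens after refill
print(limiter.allow("globex", 800, now=1.0))  # True: separate bucket, unaffected
print(desired_replicas(300, 100))             # 3 replicas for light night-time load
print(desired_replicas(2500, 100))            # capped at 10 during a spike
```

The token bucket is one common choice because it allows short bursts while enforcing a sustained rate; fixed-window counters or a hard monthly quota per tenant are simpler alternatives with coarser behavior.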

Security isn’t an add-on; it has to be baked into the design. Enterprise customers increasingly demand confidential computing, which uses hardware-based trusted execution environments to keep data encrypted and isolated even while it’s being processed, so neither other tenants nor the infrastructure operator can read it. And because you’re handling sensitive data, enterprise data governance (policies that track where training data came from and who can access outputs) isn’t optional. You need to log everything: who asked what, which model responded, and whether the output passed safety checks. This isn’t just for compliance; it’s how you earn trust.
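Here is a minimal sketch of what "log everything" can look like in practice: one structured audit record per LLM call, serialized as a JSON line. The `AuditRecord` class, the `audit_line` helper, the field names, and the model name `"example-model"` are all hypothetical. In practice you would append these lines to append-only storage, and teams handling sensitive data may choose to log a redacted copy of the prompt rather than the raw text.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditRecord:
    """One immutable audit entry per LLM call (field names are hypothetical)."""
    timestamp: str       # ISO-8601, UTC
    tenant_id: str       # which customer the request belongs to
    user_id: str         # who asked
    prompt: str          # what they asked (or a redacted copy)
    model: str           # which model responded
    safety_passed: bool  # whether the output passed safety checks


def audit_line(tenant_id: str, user_id: str, prompt: str, model: str,
               safety_passed: bool, now: Optional[datetime] = None) -> str:
    """Serialize one record as a JSON line, ready to append to a log sink."""
    ts = (now or datetime.now(timezone.utc)).isoformat()
    record = AuditRecord(ts, tenant_id, user_id, prompt, model, safety_passed)
    # sort_keys gives a stable field order for diffing and downstream parsing
    return json.dumps(asdict(record), sort_keys=True)


line = audit_line("acme", "u-42", "Summarize Q3 churn", "example-model", True,
                  now=datetime(2024, 1, 1, tzinfo=timezone.utc))
print(line)
```

Making the record a frozen dataclass means an entry can’t be mutated after it is created, which matches the intent of an audit trail; one JSON object per line keeps the log easy to ship to whatever search or SIEM tooling you already use.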

Many SaaS startups stumble at scale because they treat AI like a magic box. It’s not. It’s a resource-heavy system that needs careful tuning. The posts below show how working teams handle this: how they cut cloud costs by 60% using spot instances, how they measure governance with concrete KPIs like MTTR and policy adherence, how they reduce hallucinations with retrieval-augmented generation (RAG), and how they keep AI-generated UIs consistent across products. You’ll see what works when you’re not just testing in a lab but running a live product with real users, real bills, and real consequences. No theory. No fluff. Just the patterns used by teams shipping AI SaaS apps today.

Multi-Tenancy in Vibe-Coded SaaS: How to Get Isolation, Auth, and Cost Controls Right

Learn how to implement secure multi-tenancy in AI-assisted SaaS apps using vibe coding. Avoid data leaks, cost overruns, and authentication failures with proven strategies for isolation, auth, and usage controls.
