Pretraining Corpus: What It Is and Why It Shapes Every AI Model

A pretraining corpus is the massive collection of text used to teach AI models how language works before they ever see a user question. Also known as the training dataset, it’s not just background noise; it’s the raw material that turns a network of randomly initialized weights into something that can track context, tone, and meaning. Without a good pretraining corpus, even the most advanced neural network is just guessing. Think of it like teaching a child to read: you don’t start with advanced literature. You give them books, articles, and conversations (real, messy, varied language), and over time they start to recognize patterns, grammar, and intent. A pretraining corpus does much the same for an AI model.

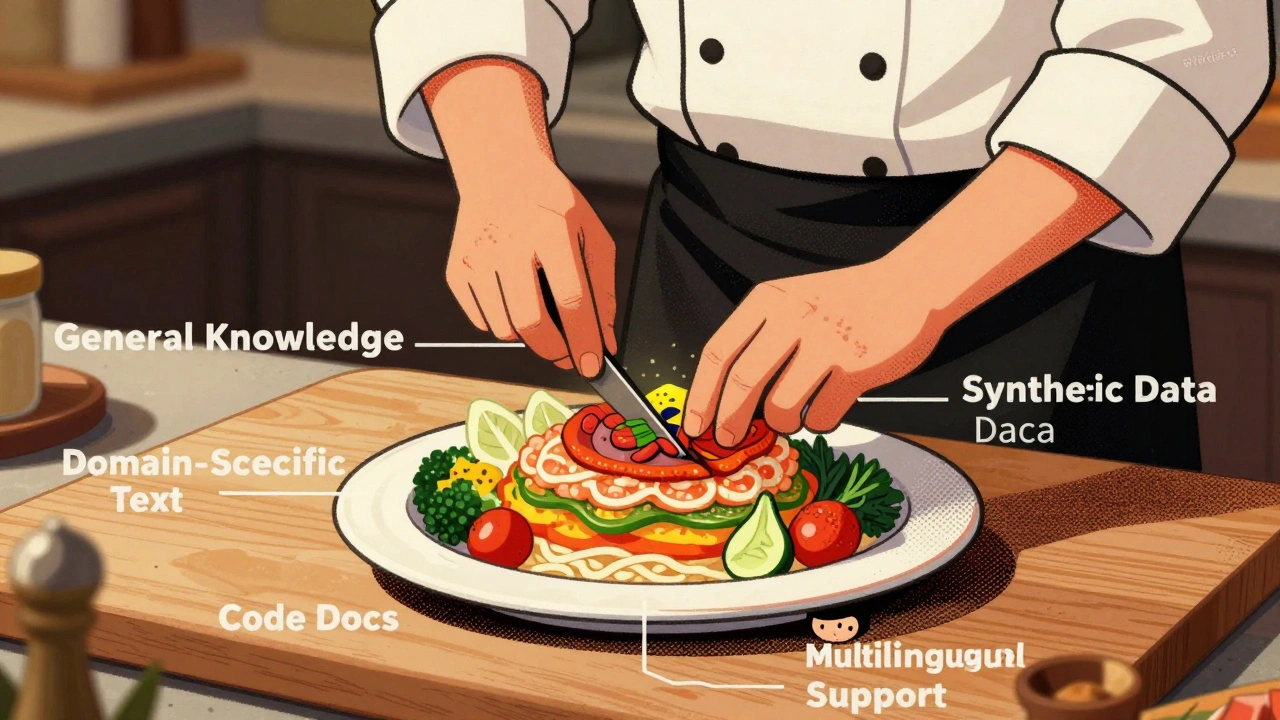

But not all corpora are created equal. A poorly built one can teach an AI to repeat biases, hallucinate facts, or misunderstand sarcasm. The best ones are huge (often hundreds of gigabytes to many terabytes of text) and carefully filtered. They pull from public websites, books, code repositories, forums, and even archived social media. But here’s the catch: large language models (AI systems trained on massive text datasets to generate human-like responses) don’t just need volume. They need diversity. If your corpus is 90% English Wikipedia and 10% Reddit, your model will sound like a textbook that occasionally swears. That’s why many top teams spend more time cleaning and curating data than tweaking model architecture.
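What “carefully filtered” means varies from team to team, but dropping duplicates and discarding low-quality text are common first steps. The Python sketch below shows both in their simplest form: exact deduplication by hashing normalized text, plus a heuristic quality check. The thresholds (`MIN_WORDS`, `MAX_SYMBOL_RATIO`) and the function names are hypothetical choices for illustration only; production pipelines typically go further, for example with fuzzy near-duplicate detection such as MinHash and trained quality or toxicity classifiers.

```python
import hashlib
import re

# Hypothetical thresholds, chosen only for illustration.
MIN_WORDS = 5
MAX_SYMBOL_RATIO = 0.3


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def passes_quality(text: str) -> bool:
    """Reject very short documents and ones dominated by punctuation/symbols."""
    if len(text.split()) < MIN_WORDS:
        return False
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    return symbols / max(len(text), 1) <= MAX_SYMBOL_RATIO


def clean_corpus(docs: list[str]) -> list[str]:
    """Drop exact duplicates (after normalization), then low-quality documents."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # an equivalent document was already seen
        seen.add(digest)
        if passes_quality(doc):
            kept.append(doc)
    return kept


if __name__ == "__main__":
    docs = [
        "The cat sat on the mat near the door.",
        "the cat sat on the mat   near the door.",  # duplicate after normalization
        "lol",  # too short
        "$$$ ### @@@ !!! %%% ^^^ &&& *** buy now",  # symbol-heavy spam
        "Transformers learn patterns from large amounts of varied text.",
    ]
    for doc in clean_corpus(docs):
        print(doc)
```

Running it prints only the first and last documents: the second is a case and whitespace variant of the first, “lol” is too short, and the spam line is mostly symbols.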

And it’s not just about what’s in there; it’s also about what’s left out. A good pretraining corpus aims to exclude copyrighted material, private data, and toxic content. But even then, traces slip through. That’s why companies now apply data governance (the set of practices for tracking, controlling, and auditing the data used to train AI systems), using tools like Microsoft Purview or Databricks to log every source. It’s not just compliance; it’s quality control. If your model starts making up fake medical advice or repeating conspiracy theories, the problem often isn’t the algorithm. It’s the data it was fed.
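Governance platforms handle this at scale, but the core idea fits in a few lines: for every document, record where it came from, its license, a content hash, and whether it was kept. The sketch below is a hypothetical illustration, not how Microsoft Purview or Databricks work internally; `ingest`, `ProvenanceRecord`, `to_jsonl`, and the `ALLOWED_LICENSES` allowlist are invented names, and a real license policy is a legal decision rather than a hard-coded set.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

# Hypothetical allowlist for illustration; real policy comes from legal review.
ALLOWED_LICENSES = {"cc-by", "cc0", "mit", "public-domain"}


@dataclass
class ProvenanceRecord:
    source: str    # where the document came from (URL, dataset name, repo)
    license: str   # normalized license label as reported by the source
    sha256: str    # content hash, so the exact text can be traced later
    included: bool  # whether the document made it into the corpus
    reason: str    # why it was kept or excluded


def ingest(source: str, license_label: str, text: str) -> ProvenanceRecord:
    """Decide whether to include a document and record the decision either way."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    lic = license_label.strip().lower()
    if lic in ALLOWED_LICENSES:
        return ProvenanceRecord(source, lic, digest, True, "license allowed")
    return ProvenanceRecord(source, lic, digest, False, f"license '{lic}' not allowed")


def to_jsonl(records: list[ProvenanceRecord]) -> str:
    """Serialize records as JSON Lines: one auditable entry per document."""
    return "\n".join(json.dumps(asdict(rec)) for rec in records)


if __name__ == "__main__":
    records = [
        ingest("https://example.org/post/1", "CC-BY", "Open article text."),
        ingest("scraped-forum-dump", "unknown", "Forum post text."),
    ]
    print(to_jsonl(records))
```

Logging excluded documents as well as included ones is what makes the manifest useful in an audit: you can show not only what the model saw, but why a given source was left out.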

What you’ll find in this collection are deep dives into how real teams build, audit, and improve their pretraining corpora. You’ll see how companies balance scale with safety, how they detect and remove harmful content, and why some teams spend months just cleaning a single dataset. You’ll also learn how a well-curated corpus can reduce hallucinations, cut cloud costs, and make your AI more reliable, even with a smaller model. This isn’t theory. These are the hidden steps behind every chatbot, summarizer, and code assistant you use.

How to Build a Domain-Aware LLM: The Right Pretraining Corpus Composition

Learn how to build domain-aware LLMs by strategically composing pretraining corpora with the right mix of data types, ratios, and preprocessing techniques to boost accuracy while reducing costs.
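One useful way to think about corpus composition is two numbers per source: its share of the training mix and how much data it actually has. The sketch below, using hypothetical source names, ratios, and token counts, splits a token budget by ratio, reports how many passes (epochs) over each source that implies, and samples a source for each training example. It is one simple way to express a data mixture, not the specific method in the linked article.

```python
import random
from collections import Counter

# Hypothetical mixture for a domain-aware model; names, ratios, and token
# counts are illustrative assumptions, not recommendations.
MIXTURE = {"general_web": 0.6, "domain_docs": 0.3, "code": 0.1}
AVAILABLE_TOKENS = {"general_web": 500e9, "domain_docs": 20e9, "code": 80e9}


def plan_budget(mixture: dict, available: dict, total_tokens: float) -> dict:
    """Split a token budget by ratio and report the implied epochs per source.

    An epoch value above 1 means that source must be repeated to hit its share.
    """
    if abs(sum(mixture.values()) - 1.0) > 1e-9:
        raise ValueError("mixture ratios must sum to 1")
    plan = {}
    for name, ratio in mixture.items():
        tokens = ratio * total_tokens
        plan[name] = {"tokens": tokens, "epochs": tokens / available[name]}
    return plan


def sample_sources(mixture: dict, n: int, seed: int = 0) -> list[str]:
    """Draw the source for each of n training examples according to the ratios."""
    rng = random.Random(seed)  # seeded for reproducible mixtures
    names = list(mixture)
    weights = [mixture[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


if __name__ == "__main__":
    for name, row in plan_budget(MIXTURE, AVAILABLE_TOKENS, 100e9).items():
        print(f"{name}: {row['tokens']:.3g} tokens, {row['epochs']:.2f} epochs")
    print(Counter(sample_sources(MIXTURE, 10_000)))
```

With a 100-billion-token budget, the hypothetical `domain_docs` source would be seen about 1.5 times while only about 12% of `general_web` is used. That is the trade-off mixture ratios control: upweighting a small domain corpus means repeating it.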
