Prefill Queue: How AI Systems Prepare Responses Before You Ask

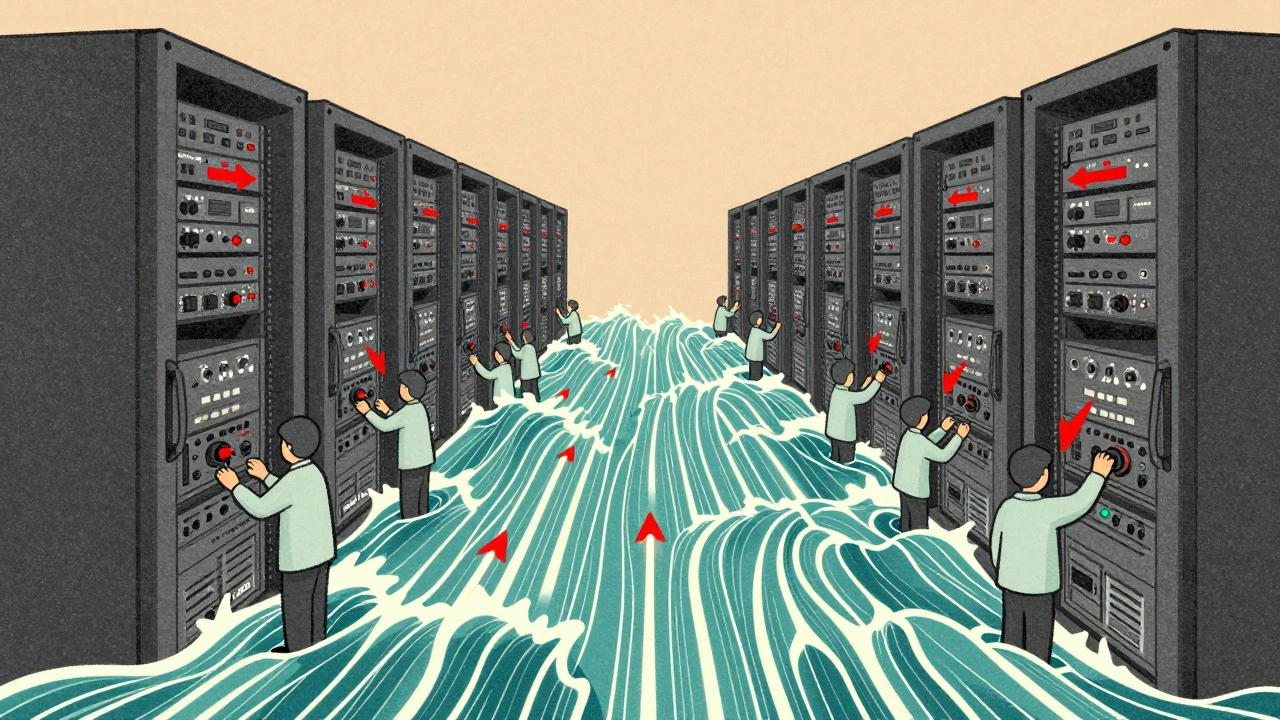

When you type a question to an AI chatbot, it doesn’t wait for you to finish before thinking. Behind the scenes sits a prefill queue, a system that predicts and prepares responses before user input is fully received. Also known as early generation, it’s what makes AI feel instant, even when the model is processing hundreds of tokens. This isn’t magic. It’s smart buffering. The system looks at what you’ve typed so far, guesses what you might say next, and starts generating the reply while you’re still typing. That’s why your chatbot answers before you hit enter.

How does this work? The AI uses token prediction, the process of estimating the most likely next words based on context and past patterns. It’s not guessing randomly—it’s using the same attention mechanisms that power large language models to jump ahead. This is especially critical in LLM inference, the real-time use of trained models to generate output, where delays of even 200 milliseconds break the illusion of conversation. Companies like OpenAI, Anthropic, and Meta use prefill queues to keep response times under 500ms across millions of users. Without it, every chat would feel like a dial-up connection.
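To make the token prediction step concrete, here is a minimal sketch in Python. It assumes the model has already produced a raw score (a logit) for each candidate next token; the scores and the tiny vocabulary below are invented for illustration, and `predict_next_token` is a hypothetical helper, not part of any real library. Production systems work over vocabularies of tens of thousands of tokens and usually sample rather than always taking the top choice.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - highest) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def predict_next_token(logits):
    """Greedy prediction: return the most likely token and its probability."""
    probs = softmax(logits)
    token = max(probs, key=probs.get)
    return token, probs[token]

# Made-up scores for the word following "What is the ..."
example_logits = {"weather": 2.1, "time": 1.3, "banana": -0.5}
token, prob = predict_next_token(example_logits)
print(token, round(prob, 3))
```

Greedy selection is the simplest policy; swapping in temperature or top-k sampling changes only the last step, not the scoring.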

But it’s not just about speed. A well-tuned prefill queue cuts cloud costs. If the model starts generating early, it reduces idle GPU time. Cloud cost optimization posts on this site show teams saving up to 40% on inference bills just by optimizing how they queue and predict tokens. It also helps with generative AI performance, how quickly and reliably an AI system delivers usable output under real-world load. If your chatbot freezes while waiting for input to finish, users leave. If it’s already halfway through its reply, they stay.

What you’ll find below are deep dives into how prefill queues work under the hood, how they interact with retrieval systems like RAG, how they affect latency in multi-tenant SaaS apps, and why some models choke on them when scaled. You’ll see real benchmarks from production deployments, code patterns for PHP-based AI backends, and how to debug when your queue starts falling behind. No theory without practice. Just what you need to make your AI feel fast, reliable, and ready before the user even finishes typing.

Autoscaling Large Language Model Services: Policies, Signals, and Costs

Learn how to autoscale LLM services effectively using prefill queue size, slots_used, and HBM usage. Reduce costs by up to 60% while keeping latency low with proven policies and real-world benchmarks.
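As a rough illustration of how those three signals could drive a scaling decision, here is a hedged sketch in Python. The `Signals` class, the `desired_replicas` function, and every threshold are assumptions made for this example, not the policy from the linked post. It also assumes the queue size is counted across the whole fleet and that `slots_used` and HBM usage are fractions between 0 and 1.

```python
import math
from dataclasses import dataclass

@dataclass
class Signals:
    prefill_queue_size: int  # requests waiting for prefill, fleet-wide (assumed)
    slots_used: float        # fraction of decode slots in use, 0..1 (assumed)
    hbm_usage: float         # fraction of accelerator memory in use, 0..1 (assumed)

def desired_replicas(current, s, queue_per_replica=4, target_slots=0.8,
                     target_hbm=0.9, min_replicas=1, max_replicas=16):
    """Return a replica count that satisfies the most demanding signal."""
    by_queue = math.ceil(s.prefill_queue_size / queue_per_replica)
    by_slots = math.ceil(current * s.slots_used / target_slots)
    by_hbm = math.ceil(current * s.hbm_usage / target_hbm)
    wanted = max(by_queue, by_slots, by_hbm)
    # Clamp so the service never scales to zero or past the budget ceiling.
    return max(min_replicas, min(max_replicas, wanted))

# A backlog of 20 queued requests on 2 replicas asks for more capacity.
print(desired_replicas(2, Signals(prefill_queue_size=20, slots_used=0.5, hbm_usage=0.5)))
```

Taking the maximum across signals is a conservative choice: the service scales up as soon as any one resource is under pressure and scales down only when all of them have headroom.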
