Model Weights Explained: What They Are and How They Power AI Models

When you use an AI that writes like a human or answers questions accurately, it’s not magic. It’s model weights: numerical values inside a neural network that store what the model has learned from data. Also known as parameters, these weights are the result of training, in which millions or billions of values are adjusted over and over to minimize errors until the model gets good at predicting the next word, detecting spam, or translating speech. Think of them like the brain’s synapses: they don’t store facts as discrete records, but they do encode patterns. Without them, a large language model is just empty code.
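To make "adjustments made to minimize errors" concrete, here is a minimal sketch of training a model that has exactly one weight. It is a toy illustration only: the data, learning rate, and function name are assumptions chosen for the example, and real models repeat the same idea across billions of weights with far more sophisticated optimizers.

```python
# Toy training loop: a single weight is nudged repeatedly to reduce error.
# The data follows y = 3 * x, so a well-trained weight should end near 3.

def train_single_weight(data, learning_rate=0.01, steps=200):
    """Fit prediction = w * x by gradient descent on mean squared error."""
    w = 0.0  # the model's only weight, before any training
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        # Move the weight a small step in the direction that lowers the error
        w -= learning_rate * grad
    return w

# Hypothetical training data: (input, expected output) pairs
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]
learned_w = train_single_weight(data)
print(f"learned weight: {learned_w:.4f}")
```

The learned value is the "weight": nothing in the code says "multiply by three", yet the pattern ends up stored in that one number.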

Model weights are what make large language models work. Every time you ask ChatGPT or Claude something, it’s running your input through a massive grid of these numbers, multiplying and summing them layer by layer to score which output is most likely. But weights aren’t just about size. How they’re stored, compressed, or moved between servers affects speed, cost, and even safety. For example, quantization, the process of reducing the precision of weights from 32-bit to 8-bit or even 4-bit, lets you run powerful models on cheaper hardware. That’s why companies use it to cut cloud bills without losing much accuracy. And when you hear about neural networks, the layered structures that process data in AI systems, what you’re really hearing about is how inputs flow through layers of weights to produce output.
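As a rough illustration of quantization, the sketch below maps floating-point weights onto 8-bit integers and back using one shared scale factor. It assumes the simplest scheme (symmetric, one scale for the whole list); production toolchains typically use per-channel or per-group scales and calibration data, and the sample weights and function names here are made up for the example.

```python
# Minimal symmetric int8 quantization sketch (pure Python, no dependencies).

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the int8 range
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical weights standing in for one small slice of a model
weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

Each stored value now needs one byte instead of four, and the round-trip error for any weight is at most half the scale. That bounded loss is the trade-off behind "without losing much accuracy".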

Managing weights isn’t just a technical detail; it’s a business decision. Too many weights? Your AI runs slow and costs too much. Too few? It gives vague, wrong, or made-up answers. That’s why posts here cover how to compress weights without breaking performance, how to switch between models based on weight efficiency, and how tools like LiteLLM help you call models from different providers through a single interface. You’ll also find guides on how training data shapes weights, why some weights are better for legal or medical use, and how confidential computing protects weights during inference.

What you’ll find below isn’t theory. It’s what real developers and teams are doing right now to make AI faster, cheaper, and more reliable. Whether you’re trimming weights to cut costs, choosing a model based on its weight structure, or trying to understand why your AI hallucinates—this collection gives you the practical, no-fluff answers you need.

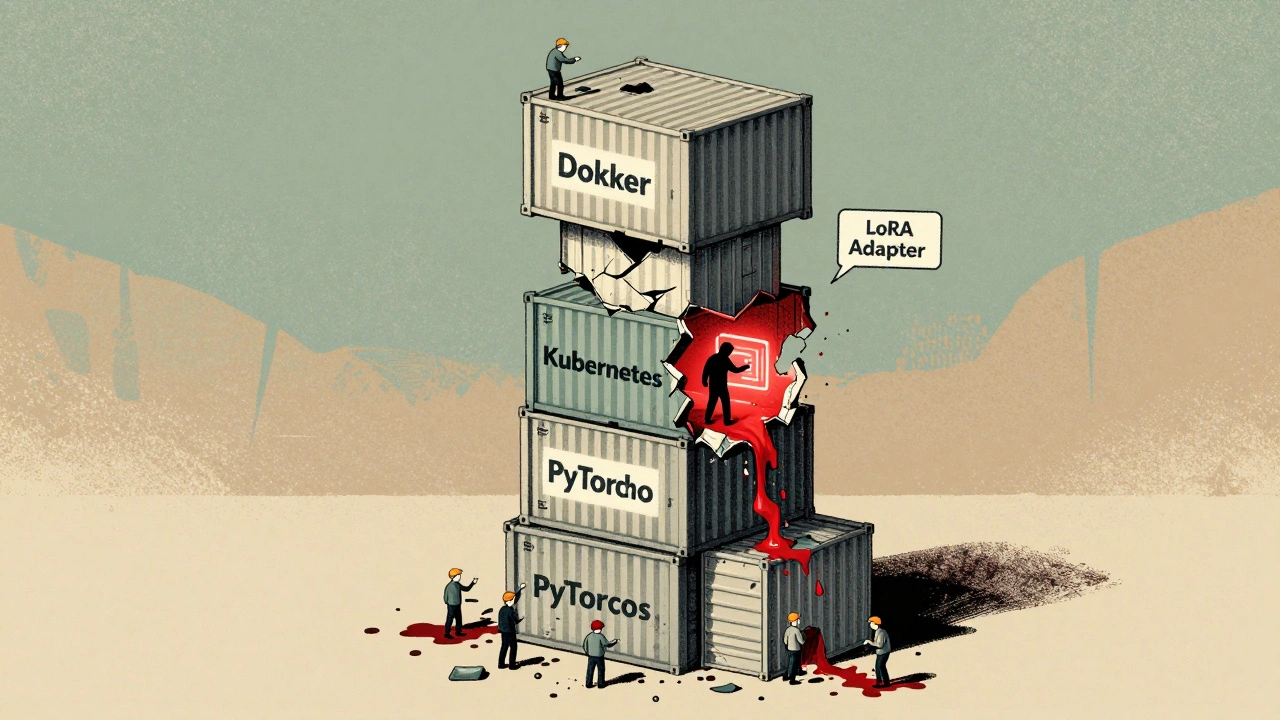

Supply Chain Security for LLM Deployments: Securing Containers, Weights, and Dependencies

LLM supply chain security protects containers, model weights, and dependencies from compromise. Learn how to secure your AI deployments with SBOMs, signed models, and automated scanning to prevent breaches before they happen.
