LLM Training Data: What It Is, Why It Matters, and How It Shapes AI

When you ask an AI a question, it doesn’t pull answers from thin air. It generates them from patterns learned from LLM training data: the massive collection of text, code, and media used to teach large language models to understand and generate human-like language. This data, often called a pre-training dataset, is the foundation of every chatbot, summarizer, and code assistant you interact with. Without clean, diverse, and well-curated training data, even the most powerful models will hallucinate, repeat biases, or get basic facts wrong.

It’s not just about volume. AI data governance, the set of policies and practices that control how training data is collected, labeled, and used, matters just as much. Also called data stewardship, it helps ensure models don’t learn from copyrighted material, private records, or harmful content. Organizations use data catalog and lineage tools such as Databricks and Microsoft Purview to track where data comes from and who has access to it. But governance isn’t only a legal concern; it’s a practical one. Poor data leads to bad outputs, and bad outputs cost money, damage trust, and can even break laws.
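To make the governance idea concrete, here is a minimal sketch of a policy gate applied before training. It assumes each record already carries a source, a license label, and a PII flag produced by some upstream scanner. The `Record` schema, the `ALLOWED_LICENSES` allow-list, and `apply_governance_policy` are hypothetical names for illustration; they are not part of Databricks, Purview, or any other product.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical record schema; real pipelines attach much richer lineage metadata.
@dataclass(frozen=True)
class Record:
    text: str
    source: str         # where the document came from (e.g. a crawl or repo name)
    license: str        # license label assigned during ingestion (assumed)
    contains_pii: bool  # result of an upstream PII scan (assumed to exist)

# Assumed allow-list; the actual policy is an organizational and legal decision.
ALLOWED_LICENSES = {"cc0", "cc-by", "mit", "apache-2.0", "internal-approved"}

def apply_governance_policy(records):
    """Keep records that pass the policy and count every rejection by reason."""
    kept = []
    rejected = Counter()
    for rec in records:
        if rec.license not in ALLOWED_LICENSES:
            rejected["license_not_allowed"] += 1
        elif rec.contains_pii:
            rejected["contains_pii"] += 1
        else:
            kept.append(rec)
    return kept, rejected

if __name__ == "__main__":
    corpus = [
        Record("def add(a, b): return a + b", "github", "mit", False),
        Record("Chapter 1 of a novel", "books", "all-rights-reserved", False),
        Record("Patient name and date of birth", "forum", "cc-by", True),
    ]
    kept, rejected = apply_governance_policy(corpus)
    print(len(kept), dict(rejected))  # 1 {'license_not_allowed': 1, 'contains_pii': 1}
```

Counting rejections by reason, rather than silently dropping records, leaves an audit trail of what was excluded and why.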

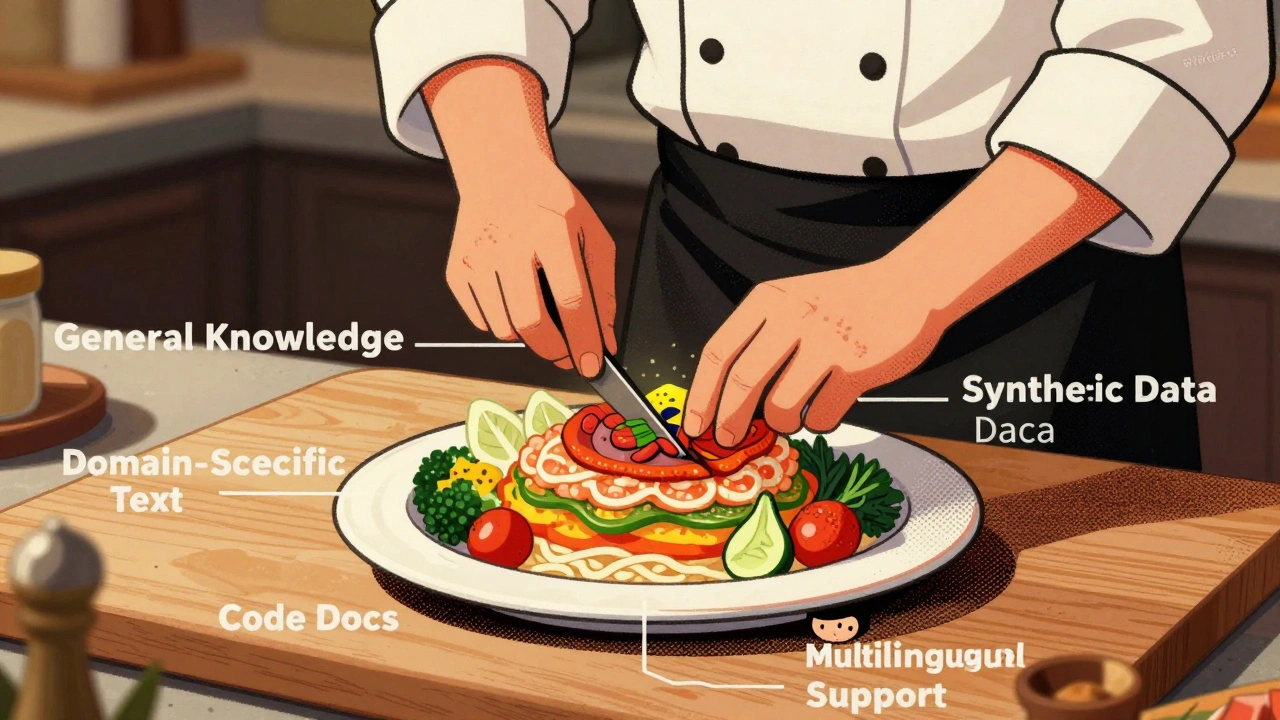

Training data isn’t just text from books and websites. It also includes GitHub code, Reddit threads, medical journals, legal documents, and social media posts. That breadth is why training data quality matters: how accurate, balanced, and representative the data is. Also called data fidelity, quality determines whether a model can handle medical questions, write legal briefs, or avoid generating hate speech. A model trained mostly on English web pages will struggle with regional slang and non-Western perspectives. A model fed too much biased data will mirror that bias, sometimes dangerously.

And it’s not just about what’s in the data; it’s also about what’s missing. Many public datasets overrepresent tech blogs and underrepresent low-resource languages, Indigenous knowledge, and local dialects. That’s why enterprise teams now audit their training data for gaps, not just errors. Some even use synthetic data to fill those gaps without risking privacy violations.
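A gap audit can start as simply as comparing each category’s observed share of the corpus against a target share. This sketch assumes documents have already been tagged with a language code by some upstream language-identification step. `audit_coverage`, its `tolerance` threshold, and the target shares in the example are illustrative assumptions, not recommended values.

```python
from collections import Counter

def audit_coverage(labels, target_shares, tolerance=0.5):
    """Return categories whose observed share falls below tolerance * target share.

    labels: one category label per document (e.g. a language code).
    target_shares: desired fraction of the corpus for each category.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    gaps = {}
    for category, target in target_shares.items():
        observed = counts[category] / total if total else 0.0
        if observed < tolerance * target:
            gaps[category] = round(observed, 3)  # report the observed share
    return gaps

if __name__ == "__main__":
    # Hypothetical corpus: 90% English, 8% Spanish, 2% Swahili.
    labels = ["en"] * 90 + ["es"] * 8 + ["sw"] * 2
    targets = {"en": 0.6, "es": 0.25, "sw": 0.15}
    print(audit_coverage(labels, targets))  # {'es': 0.08, 'sw': 0.02}
```

Because the check is driven by the target table, a category that never appears in the corpus still gets flagged with an observed share of 0.0.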

When you see a model get something wrong, it’s rarely because the algorithm is broken. It’s because the data it learned from was flawed, incomplete, or poorly managed. That’s why the best AI teams spend more time cleaning and curating data than tweaking model parameters. They know: garbage in, garbage out—and in AI, garbage can cost you millions.
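As a small illustration of what cleaning can mean in practice, this sketch drops very short fragments and exact duplicates after normalizing case and whitespace. The function names and the `min_words` threshold are hypothetical; production pipelines often add near-duplicate detection (for example MinHash) and quality classifiers on top of exact matching.

```python
import hashlib
import re

def normalize(text):
    # Collapse whitespace and lowercase so trivial variants hash identically.
    return re.sub(r"\s+", " ", text).strip().lower()

def clean_corpus(docs, min_words=5):
    """Drop documents shorter than min_words and exact duplicates after normalization."""
    seen = set()
    cleaned = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document already kept
        seen.add(digest)
        cleaned.append(doc)  # keep the original, un-normalized text
    return cleaned

if __name__ == "__main__":
    docs = [
        "The quick brown fox jumps over the lazy dog.",
        "the quick  brown fox jumps over the lazy dog.",
        "Click here",
        "Large language models learn from their training data.",
    ]
    print(len(clean_corpus(docs)))  # 2
```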

Below, you’ll find real-world guides on how companies handle this: how to audit training data for bias, how to source content legally, and how to measure whether your data is actually helping or hurting your AI. These aren’t theory pieces. They’re battle-tested strategies from teams running LLMs in production, dealing with regulators, and fixing hallucinations before users notice.

How to Build a Domain-Aware LLM: The Right Pretraining Corpus Composition

Learn how to build domain-aware LLMs by strategically composing pretraining corpora with the right mix of data types, ratios, and preprocessing techniques to boost accuracy while reducing costs.

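The guide on domain-aware LLMs deals with mixture ratios. As a rough, hypothetical illustration of what a ratio means at sampling time (not the guide’s own method), this sketch chooses each document’s source with probability proportional to a fixed weight. The source names and weights are made-up examples, not recommended proportions.

```python
import random
from collections import Counter

def sample_mixture(sources, weights, n, seed=0):
    """Draw n (source, document) pairs; each source is chosen with probability
    proportional to its mixture weight, and documents are sampled with replacement."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    names = list(weights)
    picks = rng.choices(names, weights=[weights[name] for name in names], k=n)
    return [(name, rng.choice(sources[name])) for name in picks]

if __name__ == "__main__":
    # Hypothetical sources and weights, for illustration only.
    sources = {
        "domain": ["clinical note A", "clinical note B"],
        "general": ["web page A", "web page B"],
        "code": ["script A"],
    }
    weights = {"domain": 0.5, "general": 0.3, "code": 0.2}
    batch = sample_mixture(sources, weights, n=1000)
    print(Counter(name for name, _ in batch))
```

Because sampling is with replacement, a small source given a large weight is effectively upsampled: its documents are seen more than once, which is one reason ratio choices need care.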