LLM Supply Chain Security: Protecting AI Models from Training to Deployment

When you use a large language model, you’re not just running code. You’re trusting an entire LLM supply chain: the full lifecycle of data, tools, and dependencies that build and run an AI model. Also called the AI model pipeline, it starts with training data, passes through pre-trained weights, integrates third-party libraries, and ends with deployment in your environment. One weak link, such as a compromised open-source package or a poisoned dataset, can let attackers plant hidden backdoors, steal data, or make your model produce false output on command.

This isn’t theoretical. In 2024, researchers found that a single modified dependency in a popular Hugging Face library could silently alter how an LLM responds to prompts, without anyone noticing until it was too late. LLM training data, the raw information used to teach an AI model, matters as much as the model itself. If your training data includes scraped content from untrusted sources, you’re inviting bias, misinformation, or even legal liability. And when you plug in tools like LiteLLM, a library that abstracts multiple LLM providers to avoid vendor lock-in, or LangChain, a framework for connecting LLMs to external tools and databases, you’re adding more moving parts. Each one is a potential entry point for attackers.
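One way to keep those moving parts visible is to compare what is actually installed against the versions you reviewed. The following is a minimal sketch, not a replacement for a real vulnerability scanner: the function name `audit_pins` and the pinned version numbers are hypothetical, and it only detects version drift, not tampering within a correctly versioned package.

```python
from importlib import metadata
from typing import Callable


def audit_pins(
    pins: dict[str, str],
    lookup: Callable[[str], str] = metadata.version,
) -> dict[str, str]:
    """Return {package: problem} for every pin the environment violates.

    `lookup` defaults to importlib.metadata.version and can be swapped
    out so the check is testable without installing anything.
    """
    problems: dict[str, str] = {}
    for name, wanted in pins.items():
        try:
            installed = lookup(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if installed != wanted:
            problems[name] = f"installed {installed}, pinned {wanted}"
    return problems


if __name__ == "__main__":
    # Hypothetical pins for illustration; use the versions you have reviewed.
    reviewed = {"litellm": "1.0.0", "langchain": "0.2.0"}
    for package, problem in audit_pins(reviewed).items():
        print(f"{package}: {problem}")
```

Running a check like this in CI, before the model server starts, turns an unreviewed upgrade into a visible failure instead of a silent change in behavior.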

Enterprise teams are starting to fight back with confidential computing, hardware-based protection that keeps model data encrypted even while it’s being processed. Think of it like a secure vault inside your server that only the model can unlock during inference. Tools from NVIDIA and Azure use Trusted Execution Environments (TEEs) so that no one outside the enclave, not even your own cloud provider, can read your prompts or responses. But hardware alone isn’t enough. You need to track every file, every library, every API call. That means scanning for known vulnerabilities, verifying digital signatures, and auditing where your data comes from before it touches your model.
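The simplest form of artifact verification is checking a downloaded weights file against a digest you pinned earlier. The sketch below assumes the expected SHA-256 value arrives through a channel you already trust, and the function names are illustrative. Note that this is an integrity check, not signature verification: it shows the file is unchanged but says nothing about who published it.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large weights never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's SHA-256 digest matches the pinned value."""
    actual = sha256_of_file(path)
    # Normalize case, then compare in constant time.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

A loader would call `verify_artifact` before deserializing anything and refuse to continue on a mismatch, so a swapped file fails loudly rather than reaching inference.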

What you’ll find in the posts below isn’t just theory. These are real-world fixes. From how to audit your pretraining corpus to how to detect if a model has been tampered with, these guides show you where the cracks are in today’s AI pipelines. You’ll learn how to stop supply chain attacks before they reach production, how to measure your model’s trustworthiness, and how to build defenses that work even when you’re using third-party tools. This isn’t about locking everything down. It’s about knowing what to trust, and how to prove it.

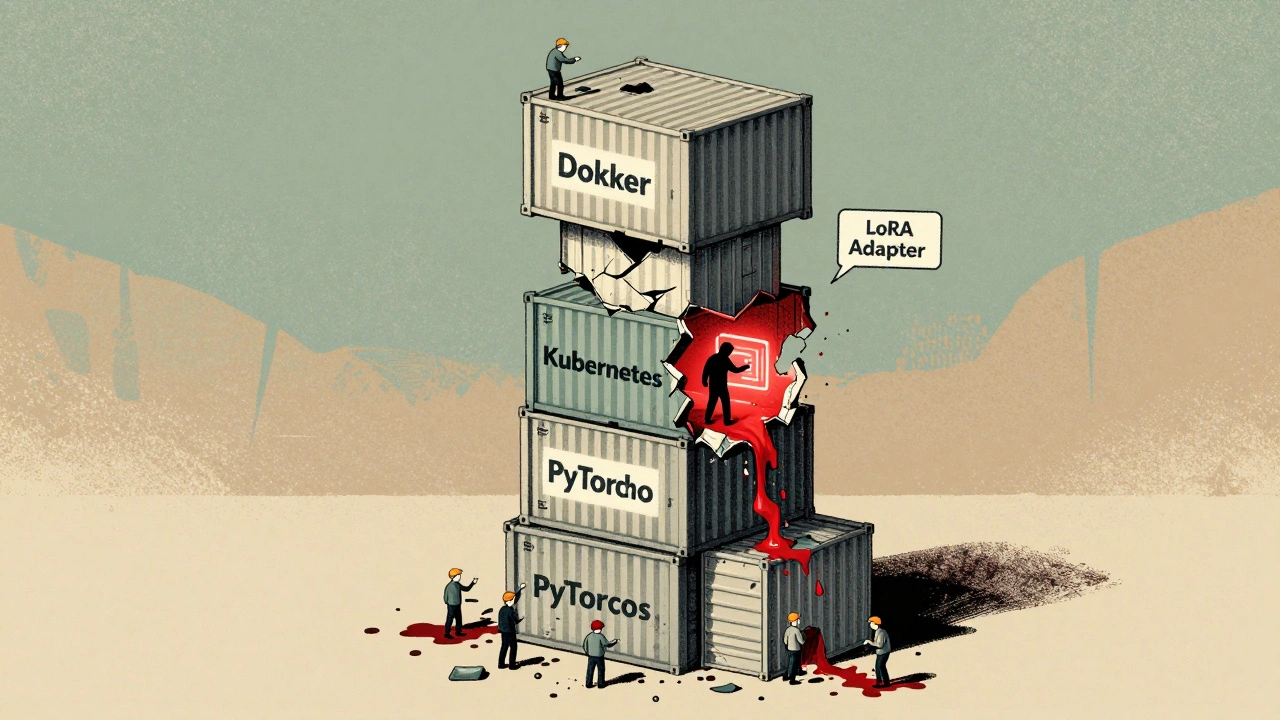

Supply Chain Security for LLM Deployments: Securing Containers, Weights, and Dependencies

LLM supply chain security protects containers, model weights, and dependencies from compromise. Learn how to secure your AI deployments with SBOMs, signed models, and automated scanning to prevent breaches before they happen.
