LLM Autoscaling: How to Dynamically Manage AI Workloads Without Overspending

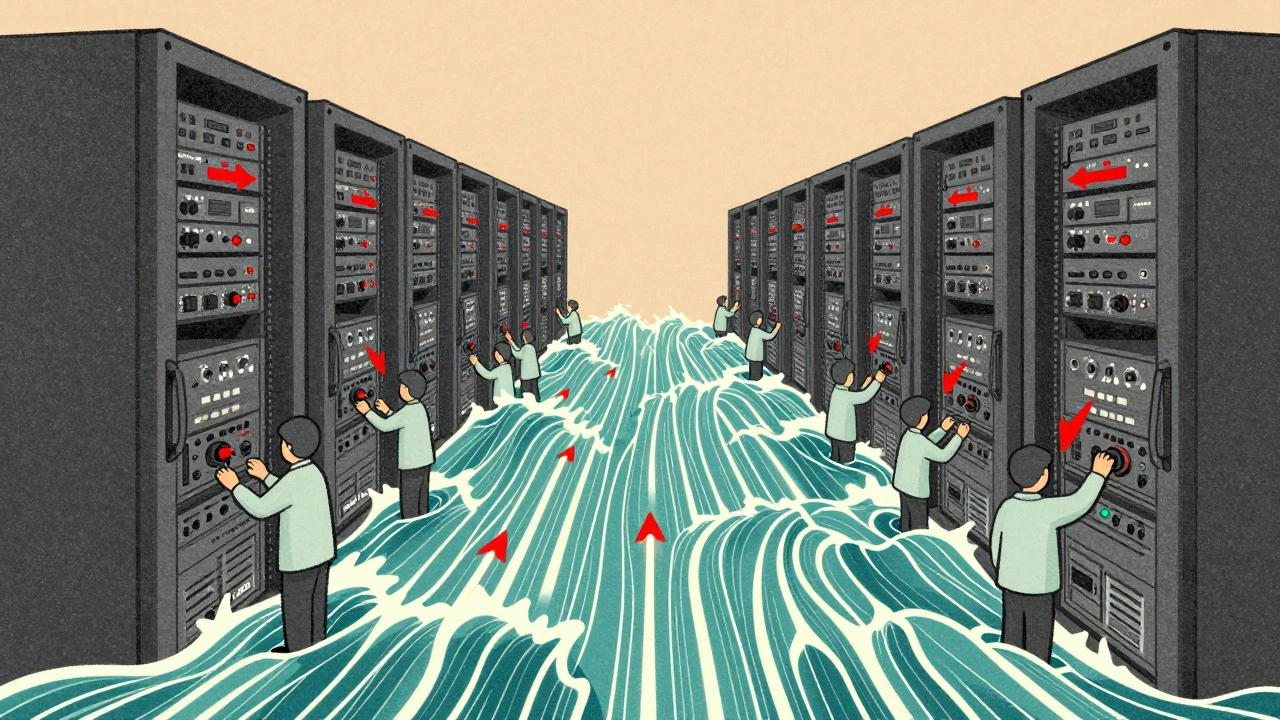

LLM autoscaling is a system that automatically adjusts computing resources for large language models based on real-time demand. Also known as AI workload scaling, it’s what keeps your chatbot from crashing at 9 PM when users flood in, and stops you from paying for ten GPUs sitting idle at 3 AM. Most teams think autoscaling is just turning on a slider in their cloud dashboard. It’s not. It’s about matching the right trigger to the right model, and knowing when to scale up before users notice lag and scale down before your bill spikes.

LLM autoscaling doesn’t work in a vacuum. It connects directly to cloud cost optimization: strategies that reduce spending on AI infrastructure without cutting performance. You can’t scale smartly if you don’t track token usage, model choice, and peak hours, which is exactly what the post "How Usage Patterns Affect Large Language Model Billing in Production" breaks down. It also ties into spot instances, low-cost cloud compute capacity that can be interrupted at short notice but suits non-critical LLM tasks. Companies using spot instances with autoscaling cut their AI cloud bills by 60% or more, according to "Cloud Cost Optimization for Generative AI". But if your scaling logic doesn’t account for cold starts or model loading times, you’ll see delays that hurt user experience, even if your bill looks good.

Real-world LLM autoscaling isn’t just about servers. It’s about user behavior. A support chatbot might need 20 instances during business hours but only 2 at night. A content generator for marketing might spike every Monday morning. The best systems don’t just react to CPU usage—they watch queue length, request latency, and even user session patterns. That’s why tools like LiteLLM and LangChain, mentioned in "Interoperability Patterns to Abstract Large Language Model Providers", help you layer scaling rules across multiple models and providers without locking yourself in.
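To make the idea of scaling on queue length and latency concrete, here is a minimal Python sketch of a sizing rule. It is an illustration under stated assumptions, not a production policy: the `Signals` type, the `desired_replicas` function, and every threshold are hypothetical, and the 2 and 20 replica bounds simply mirror the support chatbot example above.

```python
import math
from dataclasses import dataclass


@dataclass
class Signals:
    queue_length: int      # requests currently waiting to be served
    p95_latency_s: float   # observed 95th-percentile request latency, in seconds


def desired_replicas(
    current: int,
    signals: Signals,
    target_queue_per_replica: int = 4,   # assumed target, tune per workload
    target_p95_latency_s: float = 2.0,   # assumed latency objective
    min_replicas: int = 2,               # night-time floor from the example above
    max_replicas: int = 20,              # business-hours ceiling from the example above
) -> int:
    """Return a replica count that satisfies both the queue and latency targets."""
    # Replicas needed so that each one holds at most the target queue depth.
    by_queue = math.ceil(signals.queue_length / target_queue_per_replica)
    # Proportional rule: resize the fleet by the ratio of observed to target latency.
    by_latency = math.ceil(current * signals.p95_latency_s / target_p95_latency_s)
    # Follow the more demanding signal, then clamp to the allowed range.
    return max(min_replicas, min(max_replicas, max(by_queue, by_latency)))


if __name__ == "__main__":
    # 40 queued requests with healthy latency: the queue drives the decision.
    print(desired_replicas(current=4, signals=Signals(queue_length=40, p95_latency_s=1.0)))
```

Taking the maximum of the two signals means a long queue can trigger a scale-up even while latency still looks healthy, which is the point of watching more than one metric.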

And don’t forget the hidden cost: complexity. Autoscaling adds layers—monitoring, alerts, fallbacks, retry logic. If your team isn’t set up to manage it, you’ll spend more time debugging scaling loops than building features. That’s why the most successful teams start small: one model, one endpoint, one clear metric to scale on. Then they expand.
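One common guard against scaling loops is a cooldown: after any change, hold the replica count steady for a while before allowing the next one. The Python sketch below shows the idea under assumed values. `CooldownGate` and both cooldown durations are hypothetical, chosen only to illustrate scaling up quickly and scaling down slowly.

```python
from dataclasses import dataclass


@dataclass
class CooldownGate:
    """Blocks scaling changes that arrive too soon after the previous one."""

    scale_up_cooldown_s: float = 60.0      # assumed: react quickly to rising load
    scale_down_cooldown_s: float = 600.0   # assumed: shrink slowly to avoid flapping
    last_change_at: float = float("-inf")  # time of the last applied change, in seconds

    def apply(self, current: int, desired: int, now: float) -> int:
        """Return the replica count to run at time `now` (seconds)."""
        if desired == current:
            return current
        # Pick the cooldown that matches the direction of the requested change.
        if desired > current:
            cooldown = self.scale_up_cooldown_s
        else:
            cooldown = self.scale_down_cooldown_s
        if now - self.last_change_at < cooldown:
            return current  # still cooling down, so hold steady
        self.last_change_at = now
        return desired


if __name__ == "__main__":
    gate = CooldownGate()
    print(gate.apply(current=2, desired=5, now=0.0))    # first change is allowed
    print(gate.apply(current=5, desired=3, now=120.0))  # scale-down held back
```

The asymmetric cooldowns reflect a cost trade-off: an extra replica for a few minutes is usually cheaper than users waiting on a fleet that shrank too early.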

Below, you’ll find real guides on how to set this up, what metrics to watch, how to avoid common pitfalls, and which cloud tools actually work in production—not just in demos. No fluff. Just what you need to make your LLMs run fast, stay reliable, and stay affordable.

Autoscaling Large Language Model Services: Policies, Signals, and Costs

Learn how to autoscale LLM services effectively using prefill queue size, slots_used, and HBM usage. Reduce costs by up to 60% while keeping latency low with proven policies and real-world benchmarks.
